In this guide, we'll walk through how to capture data from production, run it through a preprocessing step, and send the results to a new dataset. This allows you to chain multiple models together, break down complex problems into modular stages, and create more scalable computer vision systems.

This technique is especially valuable when you want to isolate data from production to train a different model on a new task. Our example today will be detecting numbers on sports jerseys and then using the jersey numbers dataset to fine-tune a vision-language model for optical character recognition.

Let's get started!

Workflow Overview

Roboflow Workflows are typically used for end-to-end inference: taking an image from input to prediction in one seamless pipeline. But you can also use Workflows to prepare data for another model, not just to make predictions.

If you’re new to Workflows, I suggest checking out this guide to get acquainted with Workflows and their typical use cases. However, as we’ll see in this guide, there are many blocks that make Workflows more versatile, and make them applicable to other domains in computer vision, such as bootstrapping data for model training.

How to build a Preprocessing Workflow

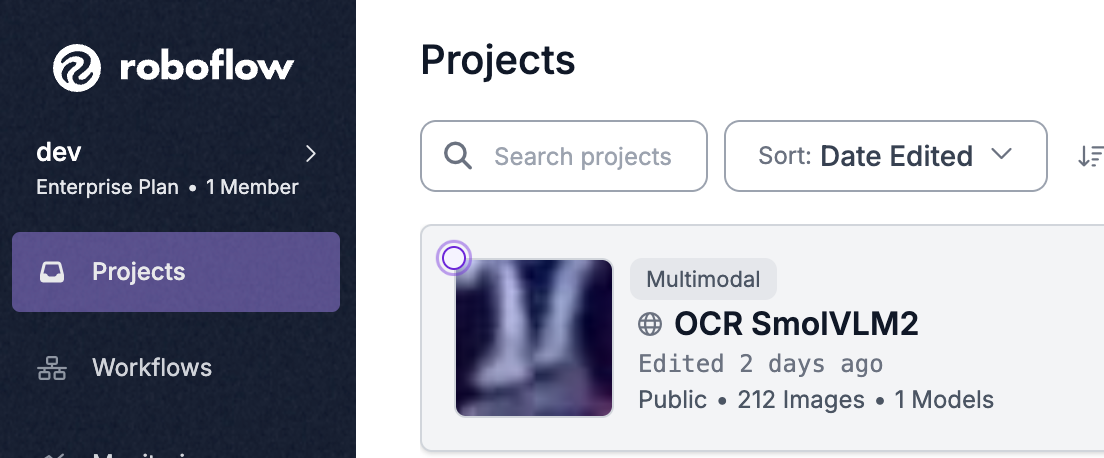

Head over to Roboflow, create a new workspace, and create a new project. For this tutorial, I’ll be creating a multimodal project for SmolVLM2. The images for it will come from an existing object detection model: the Workflow will process that model’s predictions and supply the results to the multimodal project for training.

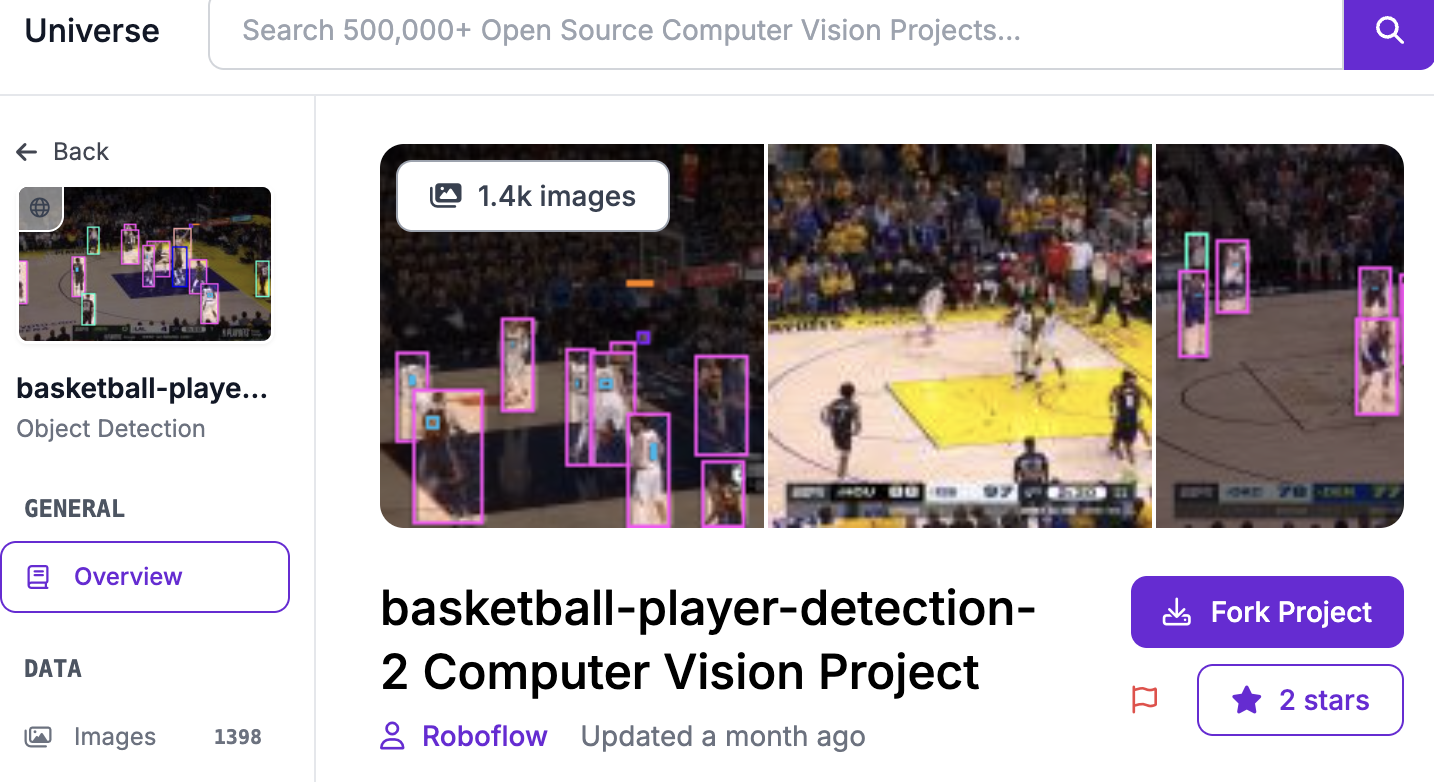

This particular object detection model, found on Roboflow Universe, is a cool vision AI that can recognize players, referees, and jersey numbers in snapshots from a basketball game. For my project, I care mainly about the bounding boxes around the jersey numbers, which is what I’m aiming to extract with the Workflow.

In my Workflow, I’m going to add the object detection model and then filter its predictions down to the class “number”.

Adding model and class filter

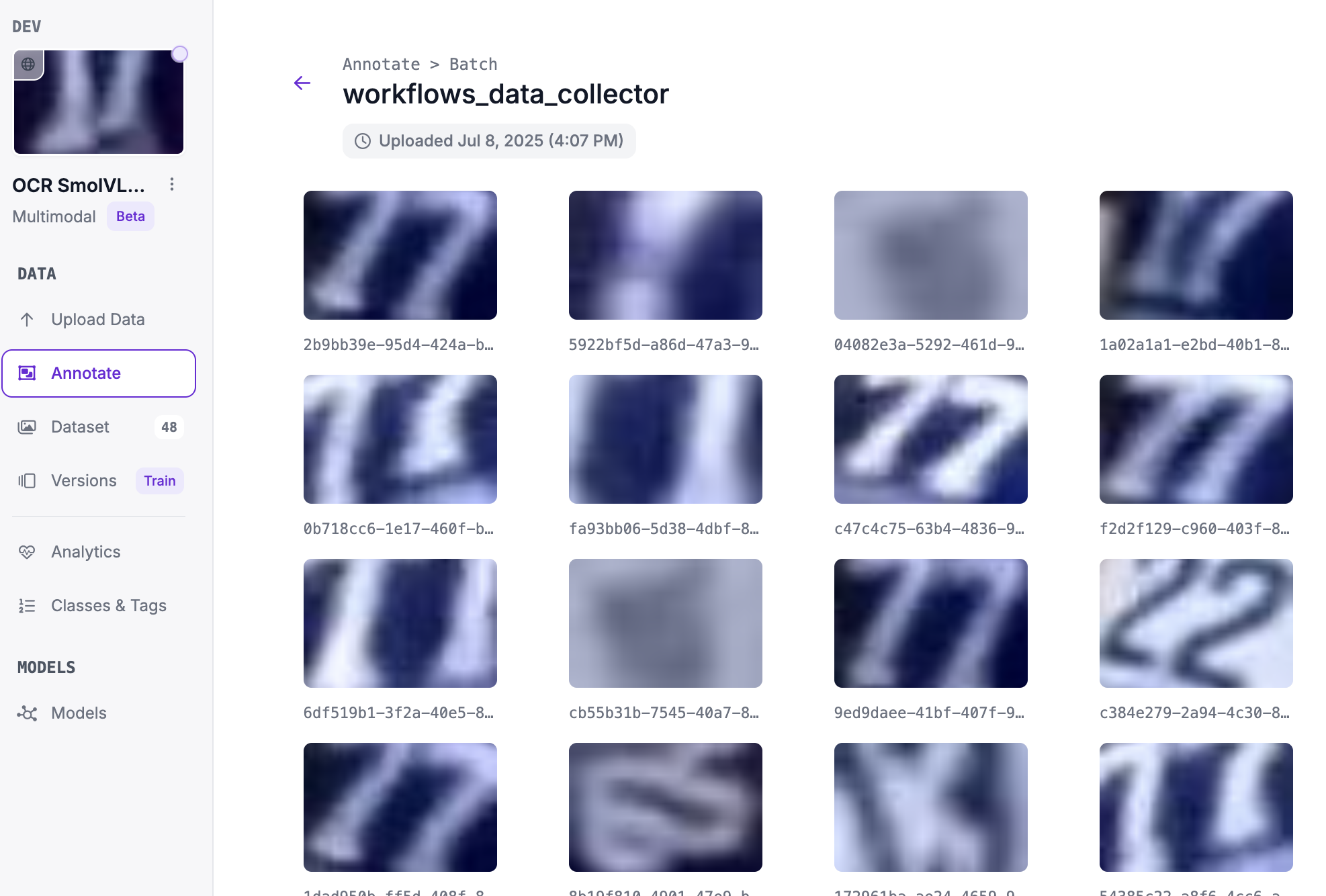
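To make the class filter concrete, here is a minimal plain-Python sketch of what filtering by the class “number” amounts to. The prediction dictionaries are made up for illustration, and their field names (center `x`/`y`, `width`, `height`, `class`, `confidence`) are an assumption about the detection output format, not something taken from this Workflow. The `filter_by_class` helper is hypothetical; in the Workflow itself, the filter block does this for you.

```python
# Hypothetical detections for one frame. The field names are an assumption
# about the detection output format; the values are invented for illustration.
predictions = [
    {"x": 320.0, "y": 180.0, "width": 60.0, "height": 140.0, "class": "player", "confidence": 0.91},
    {"x": 322.0, "y": 165.0, "width": 24.0, "height": 30.0, "class": "number", "confidence": 0.84},
    {"x": 510.0, "y": 200.0, "width": 58.0, "height": 150.0, "class": "referee", "confidence": 0.88},
    {"x": 120.0, "y": 170.0, "width": 22.0, "height": 28.0, "class": "number", "confidence": 0.47},
]


def filter_by_class(preds, wanted_class):
    """Keep only the detections whose class label matches wanted_class."""
    return [p for p in preds if p["class"] == wanted_class]


numbers = filter_by_class(predictions, "number")
print(len(numbers), "jersey number detections kept")
```

Everything downstream of the filter only ever sees the jersey number boxes, which is what keeps the resulting dataset focused on a single task.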

Right after, I’ll add a Dynamic Crop block to cut out everything except the jersey numbers in each frame. Now that we have the jersey numbers, I’ll use the Dataset Upload block to upload the cropped images to the multimodal project I already created.

While these steps are tailored to my specific model, they apply to any model that needs its data preprocessed first. For more intricate or different kinds of operations, I suggest referring to Roboflow’s Workflow block documentation.

Now that the preprocessing Workflow is done, we can run it on different input data, and it will upload the data we care about to our project, in preparation for training.
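As a rough illustration of what cropping to a detection involves, the sketch below cuts one bounding box out of an image using plain Python lists. It assumes the box is given as a center point plus width and height in pixels; that format, the toy image, and the `crop_box` helper are all assumptions for illustration. This is not how the block is implemented, and in practice you would use an array library rather than nested lists.

```python
def crop_box(image, pred):
    """Crop one detection out of an image stored as a list of pixel rows.

    Assumes pred holds a center-based x/y plus width/height, in pixels.
    """
    img_h = len(image)
    img_w = len(image[0]) if img_h else 0

    # Convert center/size to corner coordinates, clamped to the image bounds.
    x1 = max(0, int(pred["x"] - pred["width"] / 2))
    y1 = max(0, int(pred["y"] - pred["height"] / 2))
    x2 = min(img_w, int(pred["x"] + pred["width"] / 2))
    y2 = min(img_h, int(pred["y"] + pred["height"] / 2))

    return [row[x1:x2] for row in image[y1:y2]]


# Toy image with 6 rows and 8 columns; each "pixel" is its (row, col) position.
image = [[(r, c) for c in range(8)] for r in range(6)]
number_box = {"x": 4.0, "y": 3.0, "width": 4.0, "height": 2.0, "class": "number"}

crop = crop_box(image, number_box)
print(len(crop), "rows x", len(crop[0]), "columns")
```

Clamping the corners to the image bounds matters for detections near the edge of a frame, where half the box width or height can extend past the image.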

Execution

In your completed preprocessing Workflow, hit Deploy to bring up code snippets for running the Workflow. Choose the deployment boilerplate that matches your input data; mine are video clips.

I’ve chosen to extract frames and upload the jersey numbers in each frame with the Workflow, so I’ve used the local video boilerplate.

To run it, create a new folder in your code editor, create a file called preprocess.py, and add the boilerplate code:

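    import os

    from dotenv import load_dotenv
    from inference import InferencePipeline

    # Load API_KEY from a local .env file into the environment
    load_dotenv()


    def added(result, video_frame):
        # Called for each processed frame with the Workflow output and the frame
        print("added")


    # Initialize a pipeline object
    pipeline = InferencePipeline.init_with_workflow(
        api_key=os.getenv("API_KEY"),
        workspace_name="dev-m9yee",
        workflow_id="vlm-ocr-preprocess",
        video_reference="../sample/clip2.mp4",
        on_prediction=added,
        max_fps=10,
    )

    pipeline.start()  # start processing the video
    pipeline.join()  # block until the pipeline finishes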

Unlike the default boilerplate, this version reads my API key from an environment variable rather than pasting it into the script. If you wish to do this, I suggest following this guide. Additionally, the path for video_reference will change depending on the location of the video you intend to run the Workflow on.

From here, running the code should upload the cropped images to the project you created earlier as the video is processed.
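One thing to watch for with environment variables is that `os.getenv` quietly returns `None` when the variable is missing, so a typo in the .env file only surfaces later as an authentication error. A small guard like the one below can fail early with a clearer message. The `require_env` helper is hypothetical and not part of the boilerplate; it only assumes the variable is named `API_KEY`, as in the script above.

```python
import os


def require_env(name):
    """Return the value of an environment variable, or raise a clear error."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(
            f"{name} is not set. Add it to your .env file or export it in your shell."
        )
    return value
```

In preprocess.py, you could call `require_env("API_KEY")` after `load_dotenv()` and pass the result as `api_key`.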
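If you want a bit more feedback than a single print per frame, one option is a small callable that counts frames and logs every so often. This is a sketch that assumes the pipeline calls `on_prediction` once per processed frame with two arguments, the Workflow result and the video frame. The `UploadProgress` class is hypothetical, and it deliberately does not inspect `result`, since its keys depend on how your Workflow's outputs are named.

```python
import time


class UploadProgress:
    """Callable sink that counts processed frames and logs occasionally."""

    def __init__(self, log_every=50):
        self.log_every = log_every
        self.frames_seen = 0
        self.started_at = time.monotonic()

    def __call__(self, result, video_frame):
        # result and video_frame are accepted but not inspected here, because
        # their contents depend on how the Workflow's outputs are named.
        self.frames_seen += 1
        if self.frames_seen % self.log_every == 0:
            elapsed = time.monotonic() - self.started_at
            print(f"{self.frames_seen} frames processed in {elapsed:.1f}s")


# Stand-in calls to show the behavior; no real pipeline is involved here.
progress = UploadProgress(log_every=2)
for _ in range(5):
    progress(result={}, video_frame=None)
print(progress.frames_seen, "frames seen in total")
```

To use it with the script above, you would pass an instance as `on_prediction` in place of `added`.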

Once the uploaded images are annotated and added to the dataset, you can train the model on these cropped images and then incorporate it into your initial Workflow, knowing it was trained on the same kind of data it will receive in production.

Conclusion

Using the Dataset Upload block in Roboflow Workflows opens the door to more than just inference: it allows you to build modular, multi-stage pipelines that clean, transform, and route your data. Whether you're preparing a second model, curating a new dataset, or creating a scalable vision system, this method gives you finer control over what data reaches each stage.

If you have any questions about the project, you can check out the GitHub repository over here.

Cite this Post

Use the following entry to cite this post in your research:

Aryan Vasudevan. (Jul 11, 2025). Use Roboflow Workflows to Collect and Preprocess Image Training Data. Roboflow Blog: https://blog.roboflow.com/computer-vision-collect-training-data/