We are excited to announce support for format conversion to YOLO11 PyTorch TXT, training YOLO11 models on Roboflow, deploying YOLO11 models with Roboflow Inference, and using YOLO11 models in Workflows.

In this guide, we are going to walk through all of these features. Without further ado, let’s get started!

Curious to learn more about YOLO11? Check out our “What is YOLO11?” guide.

Label Data for YOLO11 Models with Roboflow

Roboflow allows you to convert data from 40+ formats to the data format required by YOLO11 (YOLO11 PyTorch TXT). For example, if you have a COCO JSON dataset, you can convert it to the required format for YOLO11 detection, segmentation, and more.
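To make the conversion concrete, here is a minimal sketch of the bounding-box arithmetic behind a COCO JSON to YOLO TXT conversion. It assumes the standard layouts of the two formats: COCO stores `[x_min, y_min, width, height]` in pixels, while a YOLO TXT row stores `class x_center y_center width height` normalized by the image size. The function name and sample values are illustrative and are not part of Roboflow's tooling, which also handles class maps, segmentation polygons, and file layout for you.

```python
def coco_bbox_to_yolo_row(bbox, image_width, image_height, class_index):
    """Convert one COCO box (pixels, top-left origin) into a YOLO TXT row."""
    x_min, y_min, box_width, box_height = bbox

    # YOLO uses the box center, normalized to the 0-1 range.
    x_center = (x_min + box_width / 2) / image_width
    y_center = (y_min + box_height / 2) / image_height
    norm_width = box_width / image_width
    norm_height = box_height / image_height

    return (
        f"{class_index} {x_center:.6f} {y_center:.6f} "
        f"{norm_width:.6f} {norm_height:.6f}"
    )


# Hypothetical annotation: a 200x100 px box at (100, 50) in a 640x480 image.
row = coco_bbox_to_yolo_row([100, 50, 200, 100], 640, 480, class_index=0)
print(row)  # 0 0.312500 0.208333 0.312500 0.208333
```

Each image in a YOLO TXT dataset gets one text file with one such row per object.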

You can see a full list of supported formats on the Roboflow Formats list.

You can also label data in Roboflow and export it to the YOLO11 format for use in training in Colab notebooks. Roboflow has an extensive suite of annotation tools to help speed up your labeling process, including SAM-powered annotation and auto-label. You can also use trained YOLO11 models as a label assistant to help speed up labeling data.

Here is an example showing SAM-powered labeling, where you can hover over an object and click on it to draw a polygon annotation:

Train YOLO11 Models with Roboflow

You can train YOLO11 models on the Roboflow hosted platform, Roboflow Train.

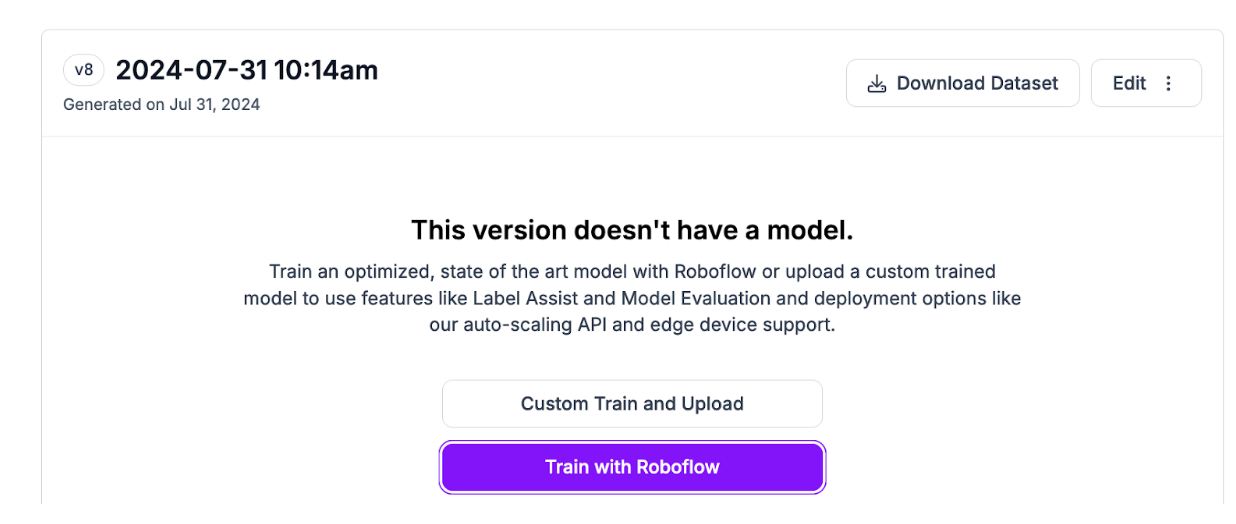

To train a model, first create a project on Roboflow and generate a dataset version. Then, click “Train with Roboflow” on your dataset version dashboard:

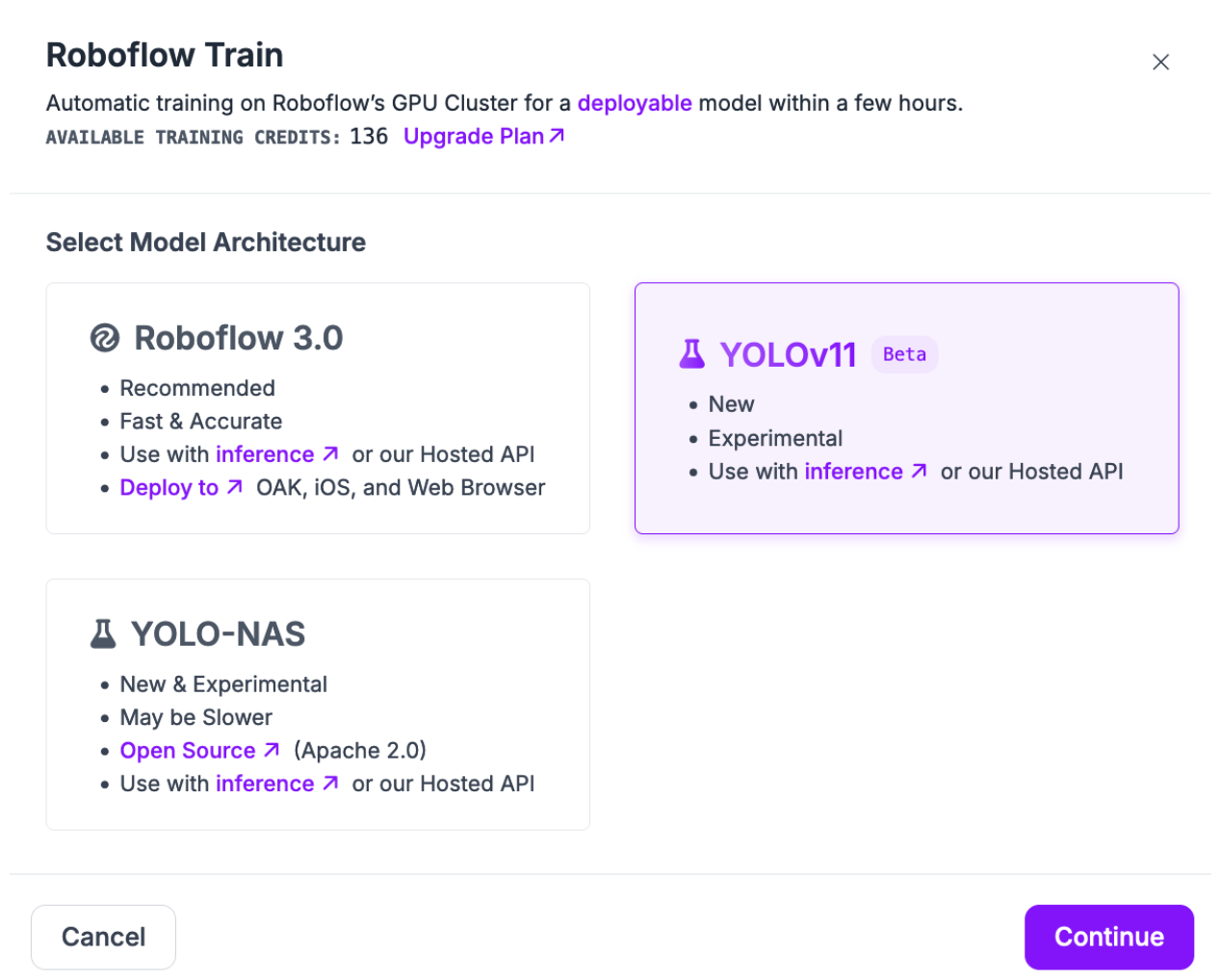

A window will appear from which you can choose the type of model you want to train. Select “YOLO11”:

Then, click “Continue”. You will then be asked whether you want to train a Fast, Accurate, or Extra Large model. For testing, we recommend training a Fast model. For production use cases where accuracy is essential, we recommend training Accurate models.

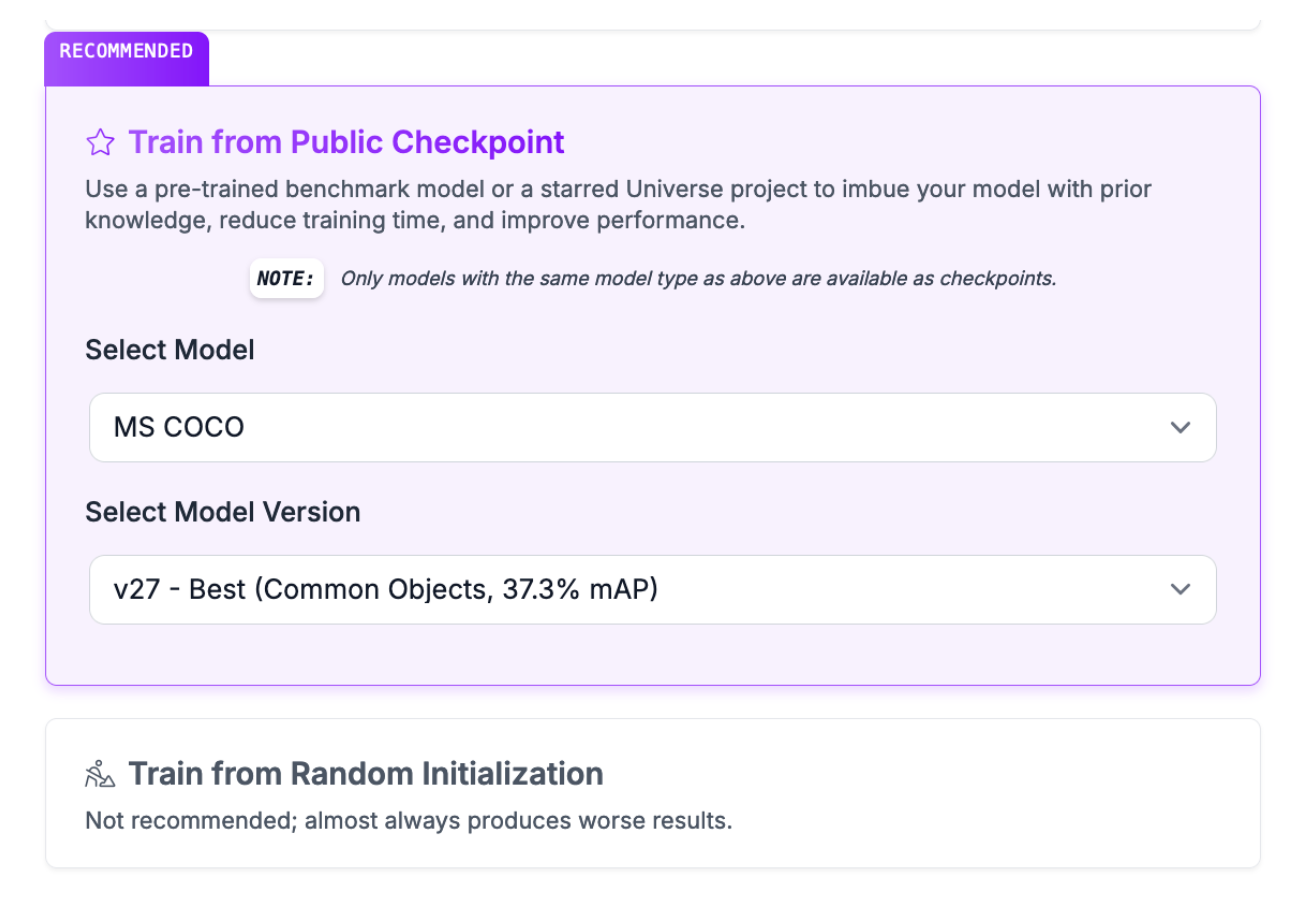

You will then be asked which checkpoint you want to start training from. By default, we recommend training from our YOLO11 COCO Checkpoint. If you have already trained a YOLO11 model on a previous version of your dataset, you can use that model as a checkpoint. This may help you achieve higher accuracy.

Click “Start Training” to start training your model.

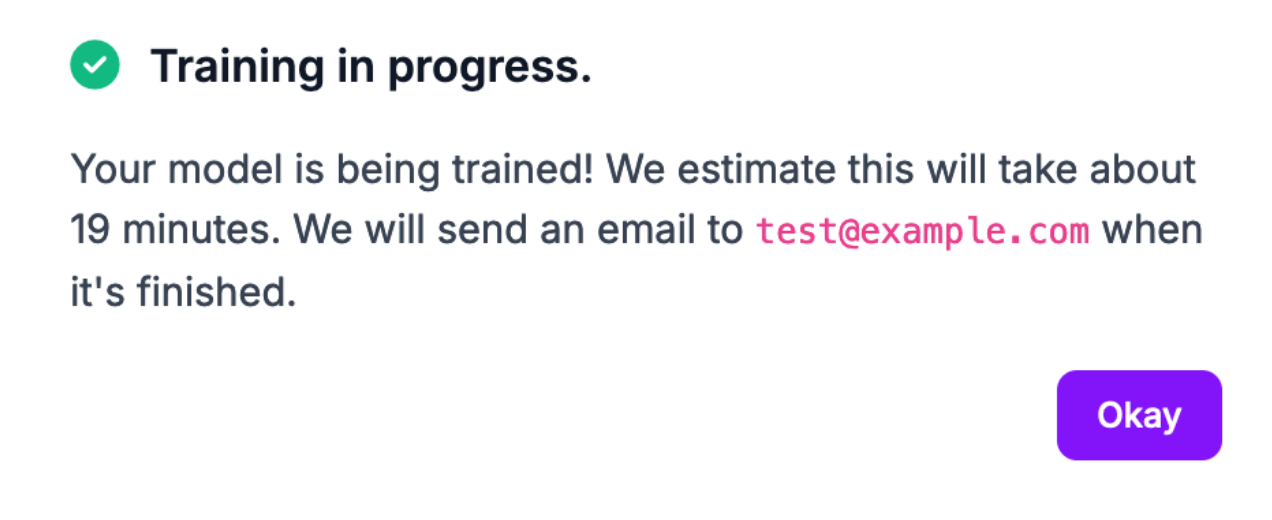

You will receive an estimate for how long we expect the training job to take:

How long your training job takes depends on the number of images in your dataset and several other factors.

Deploy YOLO11 Models with Roboflow

When your model has finished training, it will be available for testing in the Roboflow web interface and for deployment through either the Roboflow cloud REST API or on-device deployment with Inference.
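For the cloud option, a request to the hosted REST API can be sketched as follows. This is a rough illustration, not a definitive client: it assumes the `https://detect.roboflow.com/<project>/<version>` endpoint with `api_key` and `image` query parameters, and the project ID, version, API key, and image URL are all placeholders. The sketch only builds the request URL and does not send it. Check the deployment tab of your model for the exact endpoint to use.

```python
from urllib.parse import urlencode

# Assumed base URL of the hosted object detection API.
HOSTED_API_BASE = "https://detect.roboflow.com"


def build_hosted_inference_url(model_id, api_key, image_url):
    """Build a hosted-API request URL for a model ID such as 'my-project/1'."""
    query = urlencode({"api_key": api_key, "image": image_url})
    return f"{HOSTED_API_BASE}/{model_id}?{query}"


# Placeholder values; substitute your own project, version, and key.
url = build_hosted_inference_url(
    model_id="my-project/1",
    api_key="YOUR_API_KEY",
    image_url="https://example.com/image.jpeg",
)
print(url)
```

Sending a POST request to a URL like this should return the model's predictions as JSON.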

To achieve the lowest latency, we recommend deploying on device with Roboflow Inference. You can deploy on both CPU and GPU devices. If you deploy on a device that supports a CUDA GPU – for example, an NVIDIA Jetson – the GPU will be used to accelerate inference.

To deploy a YOLO11 model on your own hardware, first install Inference:

```
pip install inference
```

You can run Inference in two ways:

- In a Docker container, or;

- Using our Python SDK.

For this guide, we are going to deploy with the Python SDK.

Create a new Python file and add the following code:

```python
from inference import get_model
import supervision as sv
import cv2

# path to the local image to use for inference
image_file = "image.jpeg"
image = cv2.imread(image_file)

# load a pre-trained yolo11n model
model = get_model(model_id="yolov11n-640")

# run inference on our chosen image; image can be a URL, a NumPy array, a PIL image, etc.
results = model.infer(image)[0]

# load the results into the supervision Detections API
detections = sv.Detections.from_inference(results)

# create supervision annotators
# (BoxAnnotator is named BoundingBoxAnnotator in older supervision releases)
bounding_box_annotator = sv.BoxAnnotator()
label_annotator = sv.LabelAnnotator()

# annotate the image with our inference results
annotated_image = bounding_box_annotator.annotate(
    scene=image, detections=detections)
annotated_image = label_annotator.annotate(
    scene=annotated_image, detections=detections)

# display the image
sv.plot_image(annotated_image)
```

Above, if you want to use a custom model you have trained in your workspace, set your Roboflow workspace ID, model ID, and API key.

Also, set `image_file` to the image on which you want to run inference. The script reads the image with `cv2.imread`, so this should be the path to a local file.

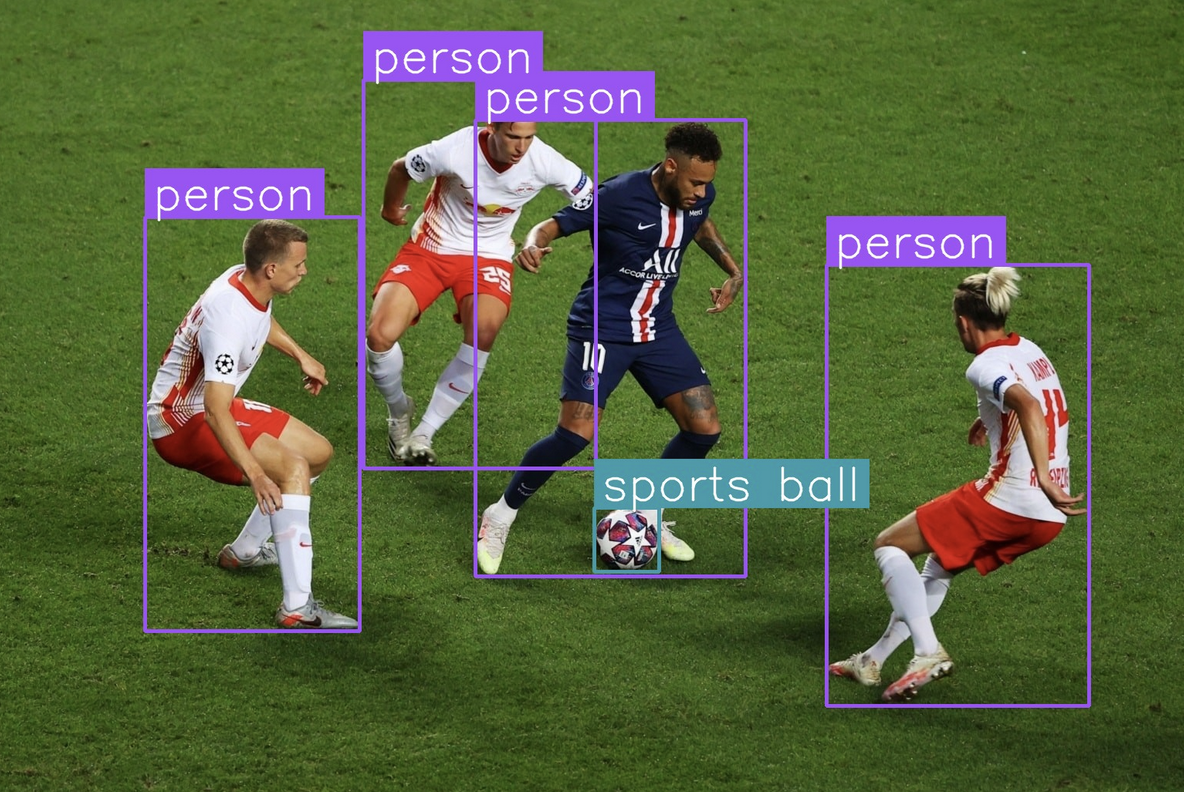

Here is an example of an image running through the model:

The model successfully detected a shipping container in the image, indicated by the purple bounding box surrounding an object of interest.
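If you want to work with the raw predictions rather than an annotated image, the sketch below shows one way to turn a prediction into corner coordinates. It assumes the JSON layout used in Roboflow object detection responses, where `x` and `y` are the center of the box in pixels alongside `width` and `height`. The helper name and the sample prediction are made up for illustration.

```python
def prediction_to_xyxy(prediction):
    """Convert a center-based prediction into (x_min, y_min, x_max, y_max)."""
    half_width = prediction["width"] / 2
    half_height = prediction["height"] / 2
    return (
        prediction["x"] - half_width,
        prediction["y"] - half_height,
        prediction["x"] + half_width,
        prediction["y"] + half_height,
    )


# Hypothetical prediction, not real model output.
sample_prediction = {
    "x": 320.0,
    "y": 240.0,
    "width": 100.0,
    "height": 50.0,
    "confidence": 0.91,
    "class": "shipping-container",
}

box = prediction_to_xyxy(sample_prediction)
print(box)  # (270.0, 215.0, 370.0, 265.0)
```

Corner coordinates like these are what most drawing and cropping functions expect, for example when cutting the detected object out of the image.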

You can also run inference on a video stream. To learn more about running your model on video streams – from RTSP to webcam feeds – refer to the Inference video guide.

Use YOLO11 Models in Roboflow Workflows

You can also deploy YOLO11 models in Roboflow Workflows. Workflows allows you to build complex, multi-step computer vision solutions in a web-based application builder.

To use YOLO11 in a Workflow, navigate to the Workflows tab in your Roboflow dashboard. This is accessible from the Workflows link in the dashboard sidebar.

Then, click “Create a Workflow”.

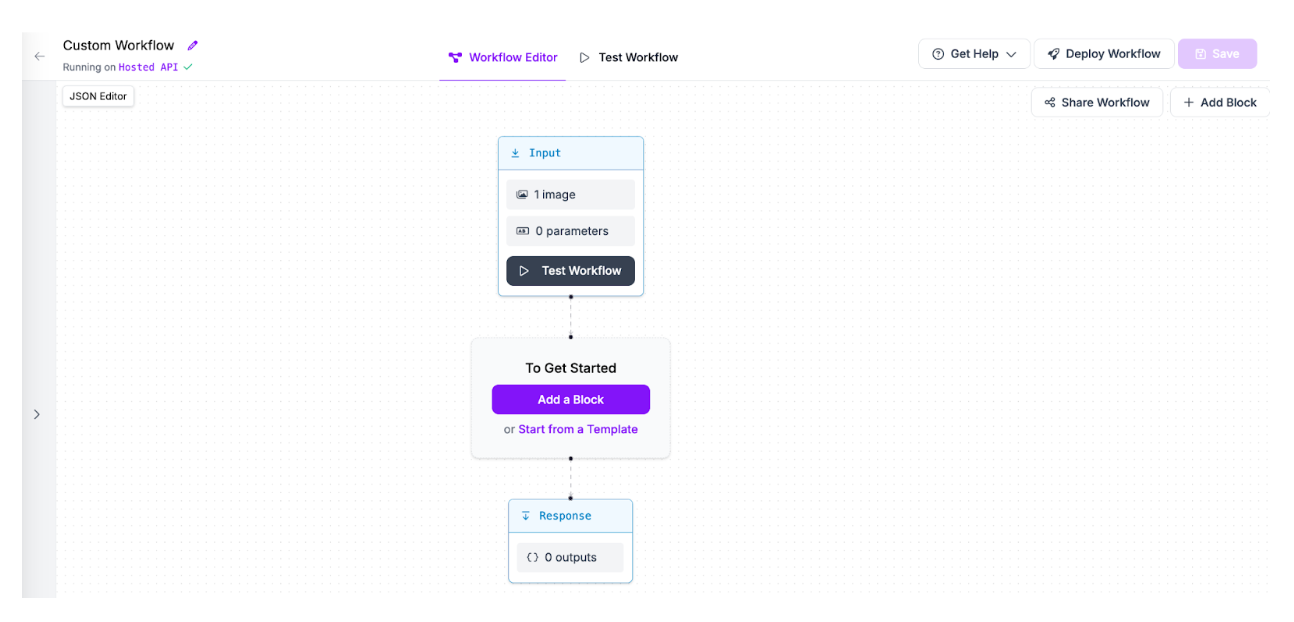

You will be taken to the Workflows editor where you can configure your application:

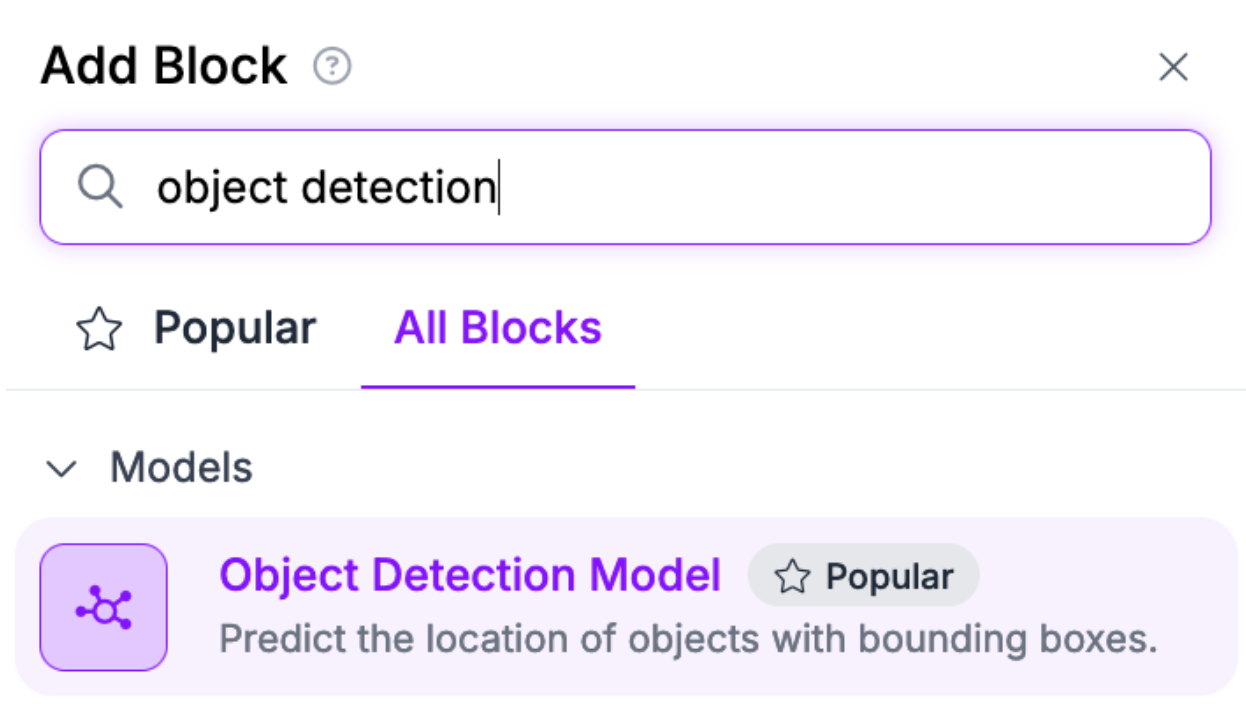

Click “Add a Block” to add a block. Then, add an Object Detection Model block.

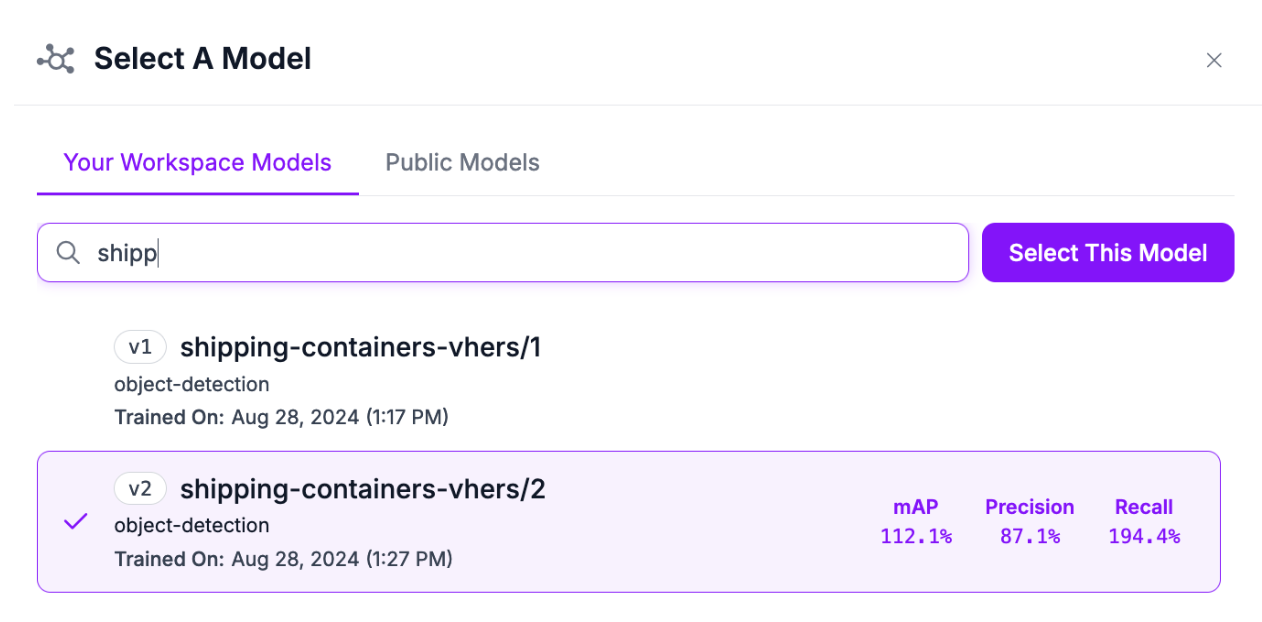

You will then be taken to a panel where you can choose what model you want to use. Set the model ID associated with your YOLO11 model. You can set any model in your workspace, or a public model.

To visualize your model predictions, add a Bounding Box Visualization block:

You can now test your Workflow!

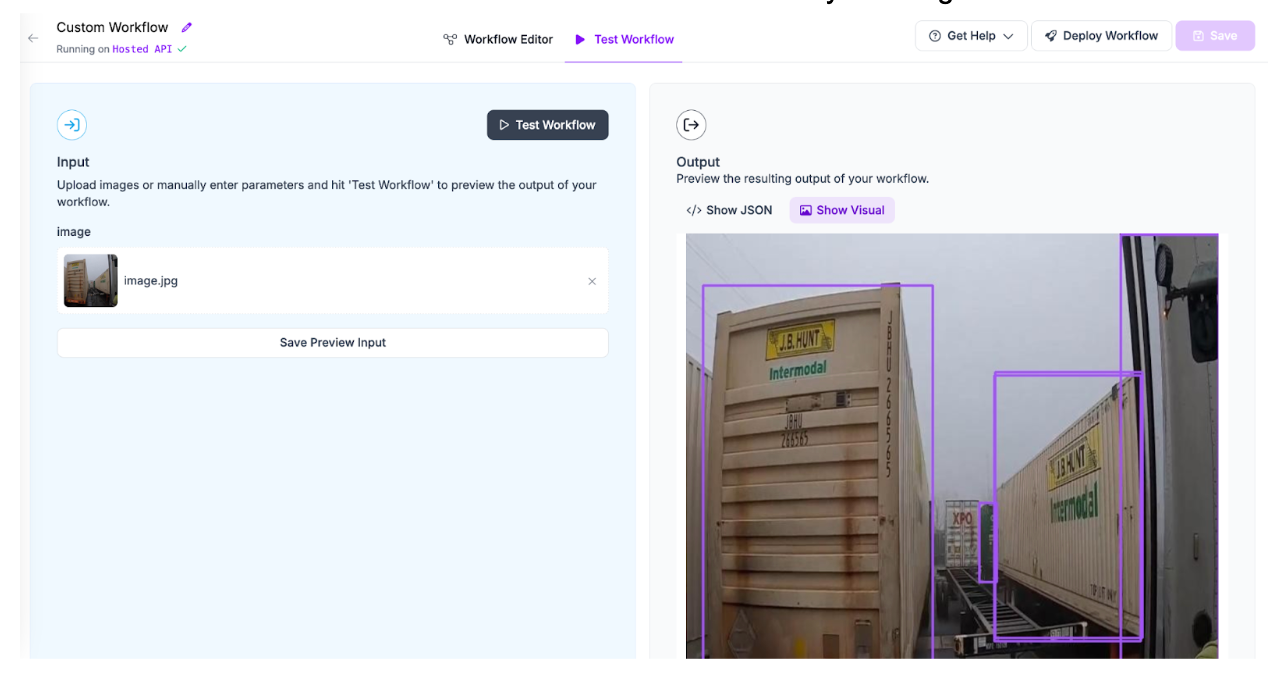

To test your Workflow, click “Test Workflow”, then drag in an image on which you want to run inference. Click the “Test Workflow” button to run inference on your image:

Our YOLO11 model, fine-tuned for logistics use cases like detecting shipping containers, successfully identified shipping containers.

You can then deploy your Workflow in the cloud or on your own hardware. To learn about deploying your Workflow, click “Deploy Workflow” in the Workflow editor, then choose the deployment option that is most appropriate for your use case.

Conclusion

YOLO11 is a new model architecture developed by Ultralytics, the creators of the popular YOLOv5 and YOLOv8 software.

In this guide, we walked through three ways you can use YOLO11 with Roboflow:

- Train YOLO11 models from the Roboflow web user interface;

- Deploy models on your own hardware with Inference;

- Create Workflows and deploy them with Roboflow Workflows.

To learn more about YOLO11, refer to the Roboflow “What is YOLO11?” guide.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Oct 10, 2024). Launch: Use YOLO11 with Roboflow. Roboflow Blog: https://blog.roboflow.com/use-yolo11-with-roboflow/