Controlling computers by looking in different directions is one of many technologies being developed to provide alternative ways of interacting with a computer. The relevant field of research is gaze detection, which aims to estimate the position on the screen at which someone is looking or the direction in which someone is looking.

Gaze detection has many applications. For example, you can use gaze detection to let people use a computer without a keyboard or mouse. You could verify the integrity of an exam proctored online by checking for signals that someone may be referring to external material, with help from other vision technologies. You can build immersive training experiences for using machinery. These are three of many possibilities.

In this guide, we are going to show how to use Roboflow Inference to detect gazes. By the end of this guide, we will be able to retrieve the direction in which someone is looking. We will plot the results on an image with an arrow indicating the direction of a gaze and the place at which someone is looking.

To build our system, we will:

- Install Roboflow Inference, through which we will query a gaze detection model. All inference happens on-device.

- Load dependencies and set up our project.

- Detect the direction a person is gazing and the point at which they are looking using Inference.

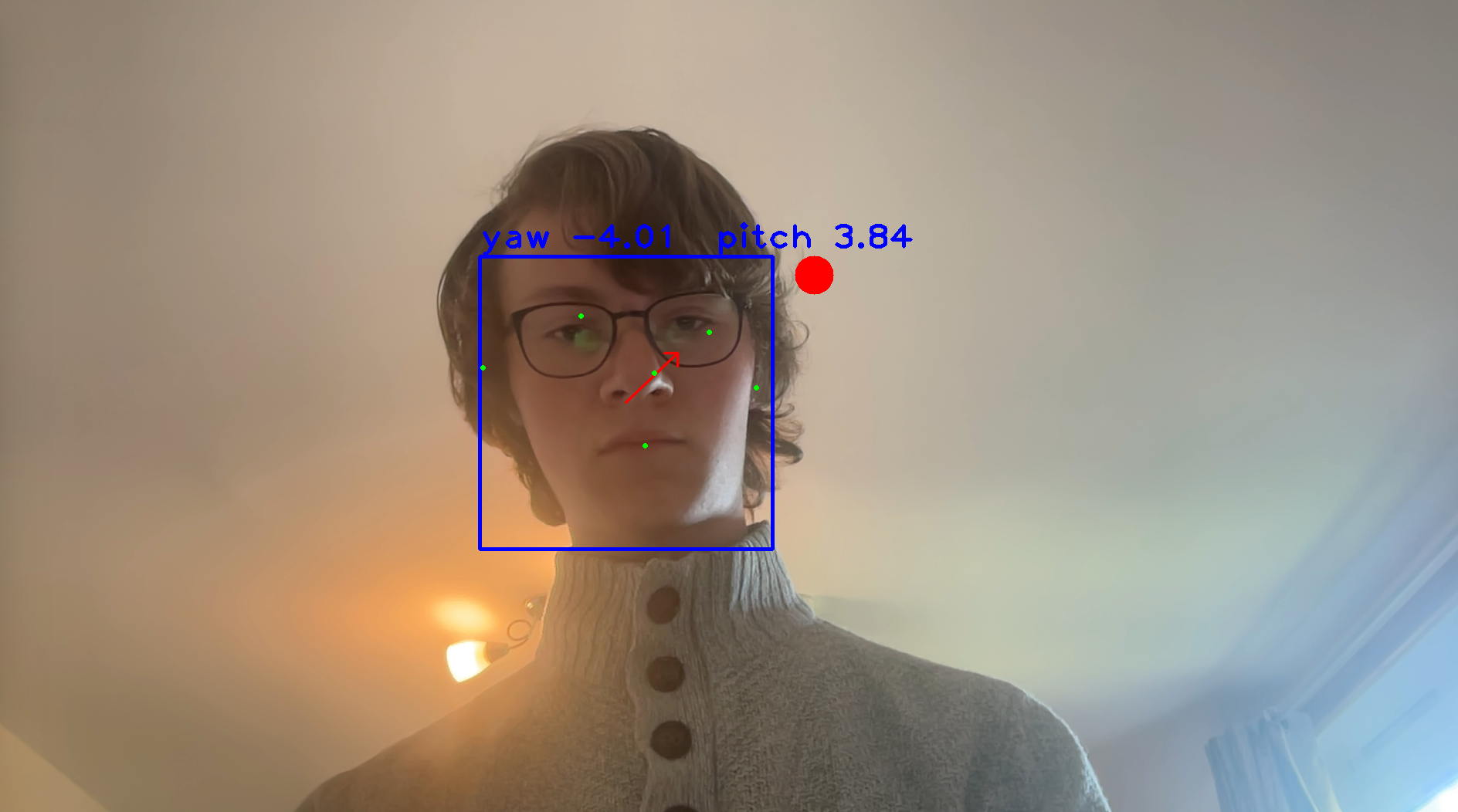

Here are the final results:

Eye Tracking and Gaze Detection Use Cases

Eye tracking and gaze detection have a range of use cases. A popular use case is assistive technology: as noted above, eye tracking enables someone to interact with a computer without a keyboard or mouse.

Eye tracking and gaze detection can also be used to monitor for hazards in heavy goods vehicle operation. For example, gaze detection could be used as part of a system to check whether truck or train drivers are using cell phones while operating a vehicle.

Vision-based eye tracking lowers the cost of running an eye tracking system in comparison to purpose-built glasses, where the cost of equipment acquisition may be significant given the specialist nature of the devices.

There are more applications in research and medicine, such as monitoring reactions to stimuli.

Step #1: Install Roboflow Inference and Dependencies

Roboflow Inference is an open source tool you can use to deploy computer vision models. With Inference, you can retrieve predictions from vision models using a web request, without having to write custom code to load a model, run inference, and retrieve predictions. Inference includes a gaze detection model, L2CS-Net, out of the box.

You can use Inference without a network connection once you have set up your server and downloaded the model weights for the model you are using. All inference is run on-device.

To use Inference, you will need a free Roboflow account.

For this guide, we will use the Docker version of Inference. This will enable us to run a server we can query to retrieve predictions. If you do not have Docker installed on your system, follow the official Docker installation instructions.

The Inference installation process varies slightly depending on the device on which you are running. This is because there are several versions of Inference, each optimized for a different device type. Go to the Roboflow Inference installation instructions and look for the “Pull” command for your system.

For this guide, we will use the GPU version of Inference:

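    docker pull roboflow/roboflow-inference-server-gpu

When you run this command, the Inference Docker image is downloaded to your machine. This may take a few minutes depending on the speed of your network connection. Note that `docker pull` only downloads the image; you then need to start a container from it with `docker run`, publishing port 9001 (see the Roboflow Inference installation instructions for the run command for your device). Once the container is running, a server will be available at http://localhost:9001 through which you can route inference requests.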

You will also need to install OpenCV Python, which we will use to read frames from a webcam, and the requests library, which we will use to call the Inference server. You can install both using the following command:

    pip install opencv-python requests

Step #2: Load Dependencies and Configure Project

Now that we have Inference installed, we can start writing a script to retrieve video and calculate the gaze of the person featured in the video.

Create a new Python file and paste in the following code:

    import base64

    import cv2
    import numpy as np
    import requests
    import os

    IMG_PATH = "image.jpg"
    API_KEY = os.environ["API_KEY"]
    DISTANCE_TO_OBJECT = 1000  # mm
    HEIGHT_OF_HUMAN_FACE = 250  # mm
    GAZE_DETECTION_URL = (
        "http://127.0.0.1:9001/gaze/gaze_detection?api_key=" + API_KEY
    )

In this code, we import all of the dependencies we will be using. Then, we set a few global variables that we will be using in our project.

First, we read our Roboflow API key from the `API_KEY` environment variable; we will use it to authenticate with our Inference server. Learn how to retrieve your Roboflow API key. Next, we estimate the distance between the person in the frame and the camera, measured in millimeters, as well as the average height of a human face. We need these values to convert a gaze angle into a position in the image, measured in pixels.
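
To make the role of these two constants concrete, here is a minimal sketch of the scale they imply. The helper name `mm_per_pixel` is hypothetical and the 200-pixel face height is a made-up example value; only `HEIGHT_OF_HUMAN_FACE` comes from the script.

```python
HEIGHT_OF_HUMAN_FACE = 250  # mm, same assumed value as in the script


def mm_per_pixel(face_height_px: float, face_height_mm: float = HEIGHT_OF_HUMAN_FACE) -> float:
    """Approximate physical size of one pixel at the depth of the face."""
    if face_height_px <= 0:
        raise ValueError("face_height_px must be positive")
    return face_height_mm / face_height_px


# A face that spans 200 pixels gives 250 / 200 = 1.25 mm per pixel.
scale = mm_per_pixel(200)

# A 100 mm offset at the face's depth then spans 100 / 1.25 = 80 pixels.
offset_px = 100 / scale
```

The closer the person sits to the camera, the taller their face appears in pixels and the smaller this scale becomes, so the same gaze angle moves the estimated point further across the image.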

Finally, we set the URL of the Inference server endpoint to which we will be making requests.

Step #3: Detect Gaze with Roboflow Inference

With all of the configuration finished, we can write logic to detect gazes.

Add the following code to the Python file in which you are working:

    def detect_gazes(frame: np.ndarray):
        # encode the frame as a JPEG, then as base64 text for the JSON payload
        img_encode = cv2.imencode(".jpg", frame)[1]
        img_base64 = base64.b64encode(img_encode)
        resp = requests.post(
            GAZE_DETECTION_URL,
            json={
                "api_key": API_KEY,
                "image": {"type": "base64", "value": img_base64.decode("utf-8")},
            },
        )
        # predictions for the image we sent
        gazes = resp.json()[0]["predictions"]
        return gazes


    def draw_gaze(img: np.ndarray, gaze: dict):
        # draw face bounding box
        face = gaze["face"]
        x_min = int(face["x"] - face["width"] / 2)
        x_max = int(face["x"] + face["width"] / 2)
        y_min = int(face["y"] - face["height"] / 2)
        y_max = int(face["y"] + face["height"] / 2)
        cv2.rectangle(img, (x_min, y_min), (x_max, y_max), (255, 0, 0), 3)

        # draw gaze arrow
        _, imgW = img.shape[:2]
        arrow_length = imgW / 2
        dx = -arrow_length * np.sin(gaze["yaw"]) * np.cos(gaze["pitch"])
        dy = -arrow_length * np.sin(gaze["pitch"])
        cv2.arrowedLine(
            img,
            (int(face["x"]), int(face["y"])),
            (int(face["x"] + dx), int(face["y"] + dy)),
            (0, 0, 255),
            2,
            cv2.LINE_AA,
            tipLength=0.18,
        )

        # draw keypoints
        for keypoint in face["landmarks"]:
            color, thickness, radius = (0, 255, 0), 2, 2
            x, y = int(keypoint["x"]), int(keypoint["y"])
            # cv2.circle takes (img, center, radius, color, thickness)
            cv2.circle(img, (x, y), radius, color, thickness)

        # draw label and score
        label = "yaw {:.2f} pitch {:.2f}".format(
            gaze["yaw"] / np.pi * 180, gaze["pitch"] / np.pi * 180
        )
        cv2.putText(
            img, label, (x_min, y_min - 10), cv2.FONT_HERSHEY_PLAIN, 3, (255, 0, 0), 3
        )

        return img


    if __name__ == "__main__":
        cap = cv2.VideoCapture(0)

        while True:
            ok, frame = cap.read()
            if not ok:
                # stop if the webcam did not return a frame
                break

            gazes = detect_gazes(frame)

            if len(gazes) == 0:
                continue

            # draw face & gaze
            gaze = gazes[0]
            draw_gaze(frame, gaze)

            image_height, image_width = frame.shape[:2]

            length_per_pixel = HEIGHT_OF_HUMAN_FACE / gaze["face"]["height"]

            dx = -DISTANCE_TO_OBJECT * np.tan(gaze["yaw"]) / length_per_pixel
            # 100000000 is used to denote out of bounds
            dx = dx if not np.isnan(dx) else 100000000

            # vertical offset: tan(pitch) scaled by 1 / cos(yaw), consistent
            # with the arrow direction used in draw_gaze
            dy = -DISTANCE_TO_OBJECT * np.tan(gaze["pitch"]) / np.cos(gaze["yaw"]) / length_per_pixel
            dy = dy if not np.isnan(dy) else 100000000

            gaze_point = int(image_width / 2 + dx), int(image_height / 2 + dy)

            cv2.circle(frame, gaze_point, 25, (0, 0, 255), -1)

            cv2.imshow("gaze", frame)

            if cv2.waitKey(1) & 0xFF == ord("q"):
                break

        # free the webcam and close the video window
        cap.release()
        cv2.destroyAllWindows()

In this code, we first define a function that sends a frame to the Inference server and returns the predicted gazes, and a function that draws a gaze onto an image. The latter lets us visualize the direction our program estimates someone is looking. We then create a loop that runs until we press `q` on the keyboard while viewing our video window.

This loop opens a video window showing our webcam. For each frame, the gaze detection model on our Roboflow Inference Server is called. We take the gaze of the first person found, then draw a box around their face and an arrow showing the direction in which they are looking. The code assumes there is one person in the frame.
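
If more than one person may appear in the frame, one possible approach is to keep the prediction with the largest face box, on the assumption that the closest person is the one using the screen. The sketch below is not part of the original script: the helper name `pick_primary_gaze` is hypothetical, and the example predictions are made-up values that only mimic the fields the script reads (`yaw`, `pitch`, and `face` with `x`, `y`, `width`, `height`).

```python
def pick_primary_gaze(gazes: list):
    """Return the prediction with the largest face box, or None if empty."""
    if not gazes:
        return None
    return max(gazes, key=lambda g: g["face"]["width"] * g["face"]["height"])


# Made-up predictions that mimic the fields the script reads.
example = [
    {"yaw": 0.1, "pitch": 0.0, "face": {"x": 100, "y": 80, "width": 40, "height": 50}},
    {"yaw": -0.2, "pitch": 0.1, "face": {"x": 300, "y": 200, "width": 120, "height": 150}},
]

# Selects the second prediction, whose face box is larger.
primary = pick_primary_gaze(example)
```

In the loop, `pick_primary_gaze(gazes)` could stand in for `gazes[0]`, with a `None` result treated the same way as an empty list.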

Gazes are defined in terms of “pitch” and “yaw” in the model we are using. Our code does the work we need to do to convert these values into a direction.
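
As a rough illustration of that conversion, the sketch below turns yaw and pitch (in radians) into a unit direction vector. The x and y components match the arrow offsets used in `draw_gaze`; the z component and its sign (negative meaning towards the camera) are an assumption made here so that the vector has unit length. The function names are hypothetical.

```python
import math


def gaze_to_vector(yaw: float, pitch: float):
    """Unit gaze direction: x to the right and y downwards in the image."""
    x = -math.sin(yaw) * math.cos(pitch)
    y = -math.sin(pitch)
    z = -math.cos(yaw) * math.cos(pitch)  # assumed depth component
    return x, y, z


def arrow_offset(yaw: float, pitch: float, length: float):
    """2D offset, in pixels, of an arrow of the given length."""
    x, y, _ = gaze_to_vector(yaw, pitch)
    return length * x, length * y


# Looking straight at the camera: no sideways or vertical component.
straight = gaze_to_vector(0.0, 0.0)

# A yaw of 90 degrees with zero pitch points the arrow fully along the x axis.
side = arrow_offset(math.pi / 2, 0.0, 100)
```

Because the arrow only uses the x and y components, it gets shorter as the person looks more directly at the camera.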

Finally, we calculate the point on the screen at which the person in view is looking. We display the results on screen.
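
The calculation can be sketched as a standalone function. This is a simplified model, not a calibrated one: it assumes the gaze ray starts at the image center and travels until it reaches a plane `distance_mm` away, which gives a horizontal offset of distance × tan(yaw) and a vertical offset of distance × tan(pitch) / cos(yaw). The function name and parameter names are hypothetical; the default values mirror the constants in the script.

```python
import math


def gaze_point_px(yaw, pitch, face_height_px, image_width, image_height,
                  distance_mm=1000.0, face_height_mm=250.0):
    """Estimate the (x, y) pixel the person is looking at."""
    # physical size of one pixel, from the assumed real face height
    mm_per_px = face_height_mm / face_height_px

    # offsets in millimeters on a plane distance_mm away
    dx_mm = -distance_mm * math.tan(yaw)
    dy_mm = -distance_mm * math.tan(pitch) / math.cos(yaw)

    # convert to pixels and measure from the image center
    x = image_width / 2 + dx_mm / mm_per_px
    y = image_height / 2 + dy_mm / mm_per_px
    return x, y


# Looking straight ahead lands on the image center.
center = gaze_point_px(0.0, 0.0, 250, 640, 480)

# A yaw whose tangent is 0.1 shifts the point by 100 mm, which is 100 px
# when the face is 250 px tall (1 mm per pixel).
shifted = gaze_point_px(math.atan(0.1), 0.0, 250, 640, 480)
```

As yaw approaches 90 degrees, both offsets grow without bound, so the result should be treated as out of bounds before it is drawn.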

Here is an example of the gaze detection model in action:

Here, we can see:

- The pitch and yaw associated with our gaze.

- An arrow showing our gaze direction.

- The point at which the person is looking, denoted by a red dot.

The green dots are facial keypoints returned by the gaze detection model. The green dots are not relevant to this project, but are available in case you need the landmarks for additional calculations.

Bonus: Using Gaze Points

The gaze point is the estimated point at which someone is looking. This is reflected in the red dot that moves as we look around in the earlier demo.

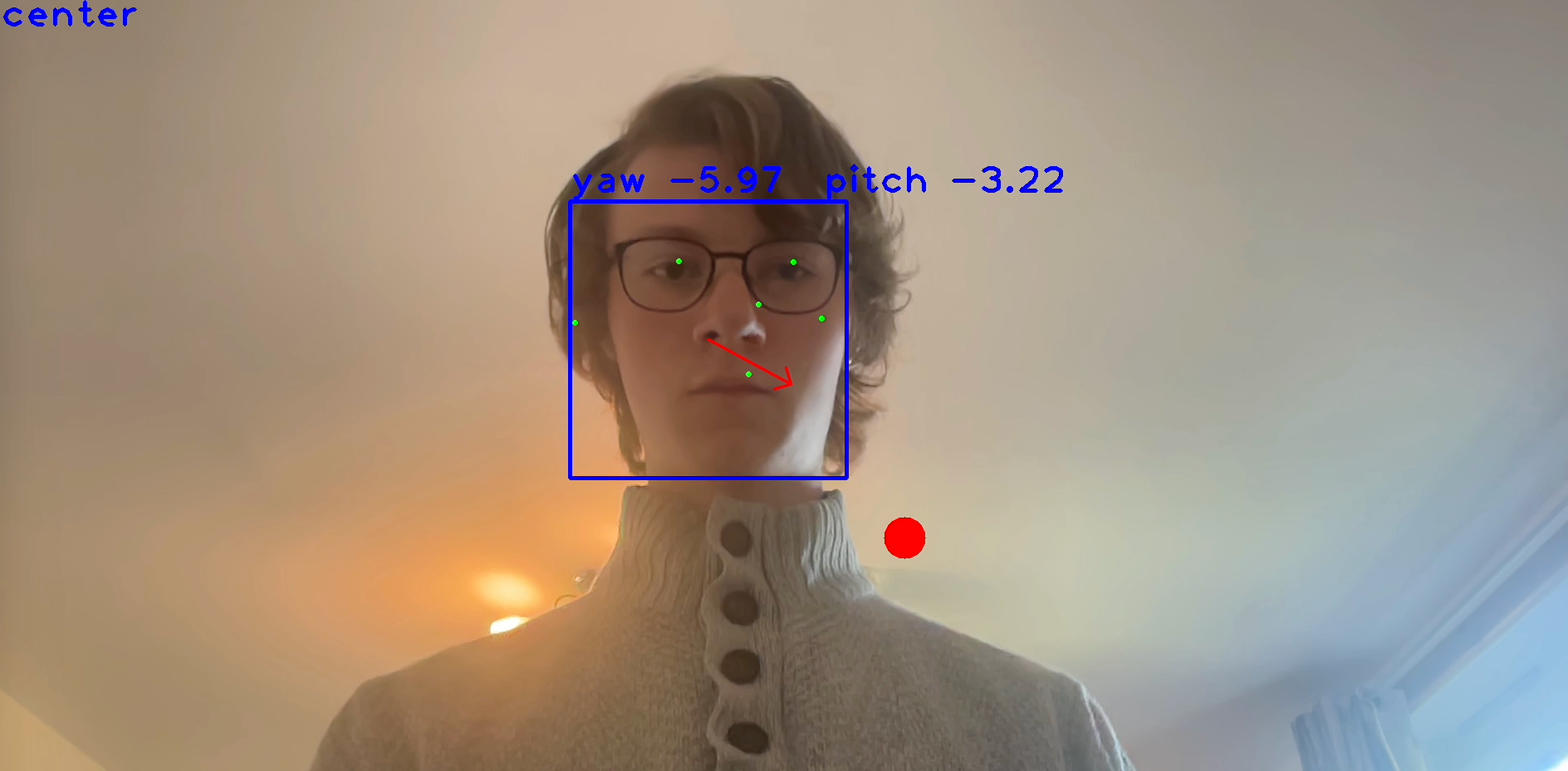

Now that we can detect the point at which someone is looking, we can write logic to do something when we look at a particular section on the screen. For example, the following code calculates the general region we are looking at (top left, top right, bottom left, bottom right, or center) and shows the name of that region as text in the video frame. The center region is listed first so that it takes priority over the four quadrants it overlaps. Add the following code inside the loop we defined earlier, above the cv2.imshow() call, indented to match the rest of the loop body:

    quadrants = [
        ("center", (int(image_width / 4), int(image_height / 4), int(image_width / 4 * 3), int(image_height / 4 * 3))),
        ("top_left", (0, 0, int(image_width / 2), int(image_height / 2))),
        ("top_right", (int(image_width / 2), 0, image_width, int(image_height / 2))),
        ("bottom_left", (0, int(image_height / 2), int(image_width / 2), image_height)),
        ("bottom_right", (int(image_width / 2), int(image_height / 2), image_width, image_height)),
    ]

    for quadrant, (x_min, y_min, x_max, y_max) in quadrants:
        if x_min <= gaze_point[0] <= x_max and y_min <= gaze_point[1] <= y_max:
            # show in top left of screen
            cv2.putText(frame, quadrant, (10, 50), cv2.FONT_HERSHEY_PLAIN, 3, (255, 0, 0), 3)
            break

Let’s run our code:

Our code successfully calculates the general direction in which we are looking and displays it in the top left corner of the video frame.

We can connect the point at which we are looking, or the region in which we are looking, to logic. For example, looking left could open a menu in an interactive application; looking at a certain point on a screen could select a button.
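
One way to wire regions to actions is sketched below. To avoid firing on a passing glance, it triggers an action only after the same region has been seen for several consecutive frames. The `DwellTrigger` class, the `frames_required` parameter, and the `open_menu` action are all hypothetical names for illustration; the region names match those used in the quadrant code.

```python
class DwellTrigger:
    """Fire an action once a region has been looked at for N consecutive frames."""

    def __init__(self, actions: dict, frames_required: int = 15):
        self.actions = actions              # region name -> callable
        self.frames_required = frames_required
        self._region = None                 # region seen in the previous frame
        self._count = 0                     # consecutive frames in that region

    def update(self, region):
        """Feed the region for the current frame; return True if an action fired."""
        if region != self._region:
            self._region, self._count = region, 0
        self._count += 1
        if self._count == self.frames_required and region in self.actions:
            self.actions[region]()
            return True
        return False


events = []
trigger = DwellTrigger({"top_left": lambda: events.append("open_menu")}, frames_required=3)

# Two frames on top_left, a glance at center, then three frames on top_left:
# the action fires once, on the third consecutive top_left frame.
for region in ["top_left", "top_left", "center", "top_left", "top_left", "top_left"]:
    trigger.update(region)
```

In the webcam loop, you would call `trigger.update(quadrant)` once per frame with the region found by the quadrant check, or with `None` when the gaze point falls outside the frame.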

Gaze detection technology has been applied over the years to enable eye-controlled keyboards and user interfaces. This is useful for both increasing access to technology and for augmented reality use cases where the point at which you are looking serves as an input to an application.

Conclusion

Gaze detection is a field of research in vision that aims to estimate the direction in which someone is looking and the point on a screen at which they are looking. A primary use case for this technology is accessibility. With a gaze detection model, you can allow someone to control a screen without using a keyboard or mouse.

In this guide, we showed how to use Roboflow Inference to run a gaze detection model on your computer. We showed how to install Inference, load the dependencies required for our project, and use our model with a video stream. Now you have all the resources you need to start calculating gazes.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Sep 22, 2023). Gaze Detection and Eye Tracking: A How-To Guide. Roboflow Blog: https://blog.roboflow.com/gaze-direction-position/