This article was contributed to the Roboflow blog by Peter Mitrano, a fifth-year PhD student at the University of Michigan, working with Dmitry Berenson in the ARMLab.

My research focuses on learning and planning for robotic manipulation, with a special focus on deformable objects. You can find my work in the journal Science Robotics and at top-tier robotics conferences, but in this blog post I’m going to focus on perception.

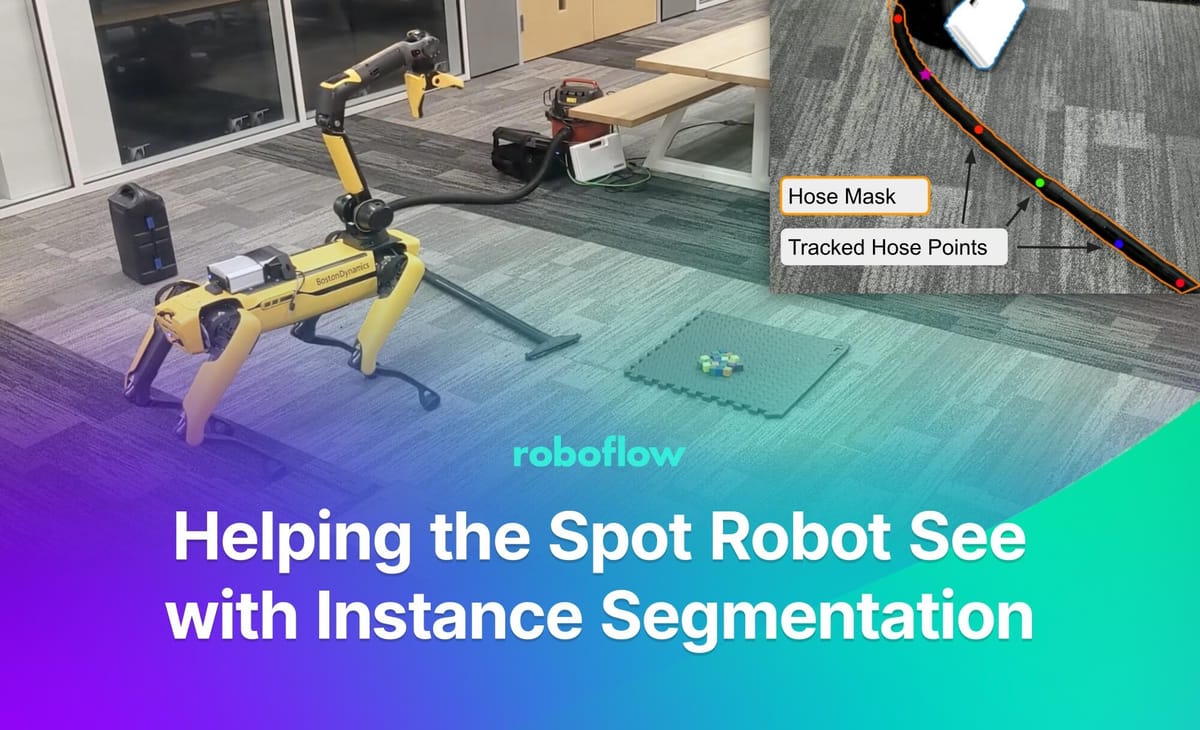

In the next section, I’ll describe how we used instance segmentation as the foundation of our perception pipeline. If you just want to skip to our flashy demo video, here it is!

Data Collection and Labeling

As with any machine learning task, the performance of an instance segmentation model depends on accurate and relevant training data. In this section, I will share a few tips that are perhaps specific to robotic manipulation.

The first tip is to start small. Gather no more than 25 images, label them, and train your first model. Once you do this, you will likely discover that (i) the detections are bad in a situation you hadn’t considered, and (ii) the information provided by the detections isn’t enough to perform the manipulation task.

Find the Right Training Data

Finding training data that is representative of your use case is key to achieving strong model performance.

For example, I immediately noticed that the body camera on the Spot robot I was using had a very skewed view of the world, which made detecting objects difficult, and manipulating them based on those poor detections even more difficult.

As a result, I switched to using the camera in the hand. That meant collecting more data! You don’t have to throw out the old data (the model can usually make use of plenty of extra data), but this is why you don’t want to start with 200 images: they would take much longer to label before you got any feedback on whether they were a good set of images!

Another way to ensure you collect a useful dataset is to use “active learning”. This means that whenever the robot makes a wrong detection or misses one, you save that image and upload it for labeling and re-training. If you do this iteratively, and the range of things the robot will see is bounded, you will quickly converge to a very reliable instance segmentation model!
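A minimal sketch of how a robot might flag frames for labeling is shown below. The `Detection` type, the class names, the 0.5 confidence threshold, and the `task_failed` signal are all hypothetical; in practice, a wrong detection is often only noticed when a downstream manipulation step fails or when a person reviews the output.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical minimal detection record: class label and model confidence.
    class_name: str
    confidence: float


def should_save_for_labeling(detections, expected_classes, task_failed=False,
                             min_confidence=0.5):
    """Return True if this frame looks like a failure case worth labeling.

    A frame is flagged if the downstream task failed, if any expected class
    was not detected at all (a missed detection), or if any detection has
    low confidence (a possibly wrong detection).
    """
    if task_failed:
        return True
    detected = {d.class_name for d in detections}
    if not set(expected_classes) <= detected:
        return True  # at least one expected object was missed
    return any(d.confidence < min_confidence for d in detections)
```

Frames for which this returns `True` can be written to disk and later uploaded to whatever labeling tool you use, then folded into the next round of training.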

Create a Labeling Scheme

Creating the right labeling scheme is key for making the predictions useful to downstream manipulation planning. Labeling object parts as opposed to whole objects is generally the way to go.

For example, I wanted the robot to grasp the end of a vacuum hose, but at first I labeled the entire hose as a single segment. This meant I couldn’t tell which part of the hose was the “end”. So, I went back and labeled the “head” of the hose separately, as its own object class.
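To illustrate why the separate “head” class helps, here is a hypothetical sketch that turns a per-class mask into a 2D grasp target by taking the mask’s centroid. The class name `hose_head` and the dictionary-of-boolean-masks input are assumptions for illustration, not the output format of any particular model.

```python
import numpy as np


def mask_centroid(mask):
    """Return the (u, v) pixel centroid of a boolean mask, or None if empty."""
    vs, us = np.nonzero(mask)  # rows are v, columns are u
    if len(us) == 0:
        return None
    return float(us.mean()), float(vs.mean())


def pick_grasp_pixel(masks_by_class, head_class="hose_head"):
    """Use the centroid of the (hypothetical) head-class mask as the grasp pixel."""
    head_mask = masks_by_class.get(head_class)
    if head_mask is None:
        return None  # head not detected; nothing sensible to grasp
    return mask_centroid(head_mask)
```

For a small, compact part like the hose head, the centroid lands on the part itself. For a long, curved mask such as the whole hose, the centroid falls somewhere in the middle (or even outside the mask), which is exactly the ambiguity the part label removes.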

Here’s another example, from a project I did on opening lever-handle doors. I started by labeling the entire door handle as one object class. However, fitting a plane to the masked depth points to estimate the handle’s surface worked poorly because the depth was so noisy, so instead I labeled only the surface of the handle.
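Once only the handle surface is labeled, its masked depth points can be fit with a plane. Below is a minimal least-squares sketch using an SVD, assuming the points are already in the camera frame as an (N, 3) NumPy array with missing depth stored as NaN. It is one standard way to fit a plane, not necessarily the exact method used in this project.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane fit to an (N, 3) array of 3D points.

    Returns (centroid, unit_normal). The normal is the right singular vector
    with the smallest singular value of the mean-centred points, oriented to
    point back toward the camera at the origin.
    """
    points = np.asarray(points, dtype=float)
    points = points[np.isfinite(points).all(axis=1)]  # drop missing depth
    if len(points) < 3:
        raise ValueError("need at least 3 valid points to fit a plane")
    centroid = points.mean(axis=0)
    # full_matrices=False avoids building an N x N matrix for large clouds.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1] / np.linalg.norm(vt[-1])
    if normal @ centroid > 0:
        normal = -normal  # make the normal face the camera
    return centroid, normal
```

Restricting the fit to the labeled surface matters because points from other parts of the handle do not lie on that plane and would pull the estimate off, on top of the depth noise.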

Additionally, I needed to know which end of the handle is the pivot it rotates about, so I also labeled the pivot with a small circular mask. Again, it’s good to start by labeling only a few images, test your algorithms, and then iterate on how you label before spending hours labeling!
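A hypothetical sketch of how the two part labels might be combined: the pivot mask gives a point on the rotation axis, and the handle-surface points give the direction of the lever arm away from it. The function name, the use of a median, and the 95th-percentile extent are illustrative assumptions chosen to tolerate noisy depth.

```python
import numpy as np


def handle_lever(handle_points, pivot_points):
    """Estimate the pivot point and lever direction of a door handle.

    handle_points, pivot_points: (N, 3) camera-frame points from the
    (hypothetical) 'handle surface' and 'pivot' masks, invalid depth removed.
    Returns (pivot, direction, length): direction is a unit vector from the
    pivot toward the free end of the handle.
    """
    handle_points = np.asarray(handle_points, dtype=float)
    # The median is less sensitive to depth outliers than the mean.
    pivot = np.median(np.asarray(pivot_points, dtype=float), axis=0)
    direction = handle_points.mean(axis=0) - pivot
    norm = np.linalg.norm(direction)
    if norm == 0:
        raise ValueError("handle centroid coincides with the pivot")
    direction = direction / norm
    # Extent along the lever; a high percentile rather than the max
    # tolerates a few stray points.
    proj = (handle_points - pivot) @ direction
    length = float(np.percentile(proj, 95))
    return pivot, direction, length
```

The rotation axis itself would pass through `pivot`, roughly along the door’s surface normal, which could come from a plane fit to the door.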

Going from RGB to 3D

The task of instance segmentation is to produce masks and/or polygons around each individual instance of an object in an image. For manipulation, though, knowing where something is in a 2D image usually isn’t enough: we need to know the object’s location and shape in 3D.

In robotics, it is common to use RGBD cameras with calibrated camera intrinsics, and the Spot robot is no exception. Combined with the depth reading at a given pixel (u, v) in the RGB image, the intrinsics let us project that pixel into 3D (x, y, z). However, inexpensive depth cameras are notoriously unreliable, often with regions where no valid depth readings are available. This makes projecting a single pixel into 3D unreliable!
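The projection itself is the standard pinhole camera model. A minimal sketch, assuming the depth image is registered (pixel-aligned) to the RGB image and stores depth in metres along the optical axis, with missing readings stored as 0 or NaN; `fx`, `fy`, `cx`, `cy` are the calibrated focal lengths and principal point.

```python
import math


def pixel_to_point(u, v, depth_image, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a 3D point in the camera frame.

    depth_image[v][u] is the depth along the optical (z) axis in metres.
    Returns None when the reading is missing (0 or NaN), which is common
    on inexpensive depth cameras.
    """
    z = depth_image[v][u]
    if z is None or not math.isfinite(z) or z <= 0:
        return None
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)
```

The `None` case is the failure mode described above: a single pixel chosen from a mask can easily land on a hole in the depth image.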

One solution to this is to project the entire 2D mask into 3D. However, we can often do better than this by making use of more than just the pixels that are part of a single mask. For example, we can fit a plane to the depth image and use that to find where objects on the floor are. We can also use CDCPD, a tracking method for deformable objects developed by our lab, which looks at the entire segmented point cloud, and not individual pixels.
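As one way to use pixels beyond a single mask, the sketch below fits a ground plane to the whole depth image with RANSAC, then intersects the camera ray through each mask pixel with that plane, so every mask pixel gets a 3D point even where the depth image has holes. It assumes a thin object lying on a flat floor and the same registered, metric depth image as a pinhole projection; the inlier threshold (0.01 m), iteration count, and function names are illustrative choices, not the pipeline used here, and CDCPD itself is not shown.

```python
import numpy as np


def backproject(depth, fx, fy, cx, cy):
    """Return an (N, 3) array of camera-frame points for all valid depth pixels."""
    h, w = depth.shape
    vs, us = np.mgrid[0:h, 0:w]
    z = depth.astype(float)
    valid = np.isfinite(z) & (z > 0)  # 0 or NaN means "no reading"
    x = (us - cx) * z / fx
    y = (vs - cy) * z / fy
    return np.stack([x, y, z], axis=-1)[valid]


def fit_plane_ransac(points, n_iters=200, inlier_thresh=0.01, seed=0):
    """Fit a plane n . p + d = 0 to noisy points with RANSAC, then refine."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_iters):
        a, b, c = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(b - a, c - a)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:
            continue  # collinear sample: no unique plane
        normal /= norm
        inliers = np.abs(points @ normal - normal @ a) < inlier_thresh
        if best is None or inliers.sum() > best.sum():
            best = inliers
    if best is None:
        raise ValueError("no non-degenerate sample found")
    # Least-squares refinement on the consensus set.
    pts = points[best]
    centroid = pts.mean(axis=0)
    normal = np.linalg.svd(pts - centroid, full_matrices=False)[2][-1]
    return normal, -normal @ centroid


def mask_points_on_plane(mask, normal, d, fx, fy, cx, cy):
    """Intersect the camera ray through each mask pixel with the plane."""
    vs, us = np.nonzero(mask)
    rays = np.stack([(us - cx) / fx, (vs - cy) / fy, np.ones(len(us))], axis=1)
    denom = rays @ normal
    with np.errstate(divide="ignore", invalid="ignore"):
        t = -d / denom  # solves normal . (t * ray) + d = 0
    keep = np.isfinite(t) & (t > 0)  # drop rays parallel to or behind the plane
    return rays[keep] * t[keep, None]
```

The ray-plane intersection ignores the object’s thickness, so for an object of non-negligible diameter the recovered points lie on the floor beneath the visible surface rather than on it.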

The figure below shows an example of CDCPD using the predicted hose mask and ground plane to track points on the hose.

Conclusion

This article provides several tips for using instance segmentation in robotic manipulation. These tips are:

- Iteratively collect and label small batches of data;

- Project segmentation masks from RGB into 3D using a depth image; and

- Label object parts separately.

These techniques have been used successfully in a number of projects, such as the Spot robot demo “Conq Won't Give Up!”, as well as during an internship I did at PickNik Robotics!

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Jul 20, 2023). Helping the Spot Robot See with Instance Segmentation. Roboflow Blog: https://blog.roboflow.com/helping-spot-see-with-instance-segmentation/