In this blog post, we discuss how to train a custom license plate detection model and deploy it to the NVIDIA Jetson. While we focus on detecting license plates in particular, this post also serves as an end-to-end guide to deploying custom computer vision models to an NVIDIA Jetson at the edge.

In a prior blog post, we used Roboflow's Inference API to create a license plate detection model. While the hosted Inference API is the best deployment option for many use cases, the following considerations sometimes call for deploying a model to an edge device such as the NVIDIA Jetson:

- Model inferences need to inform an intelligent system in real time

- Model inferences must occur offline

In this post, we’ll walk you through deploying this license plate detector and OCR model on an NVIDIA Jetson with Roboflow's Inference Server, so that you can run inference on-device. In this tutorial, you will learn to:

- Train a License Plate Detector

- Prepare the NVIDIA Jetson Camera

- Download the Roboflow Inference Server for the NVIDIA Jetson

- Adapt the License Plate Detection Project for the Jetson

- Troubleshoot Potential Errors

Dataset Collection and Model Training

The dataset we use in this blog to train our license plate detector is a public license plate detection dataset on Roboflow.

We use Roboflow Train to train the model that we will deploy to the Jetson.

For more details, please see our preceding post on Deploying a License Plate Detection Model with the Roboflow Inference API.

Once your model has finished training, you will receive an endpoint and an api_key. Keep these handy: they allow you to download and serve your model on the NVIDIA Jetson.

Preparing the NVIDIA Jetson Camera

Before we can start using the Jetson, we will need to follow a couple of steps to set up the camera. The camera we are using for this project is the Raspberry Pi Camera V2.*

*Note: The Raspberry Pi Camera V2 can come with an IMX219 Sensor or an OV5647 Sensor. Ensure the camera you are purchasing is equipped with the IMX219 Sensor as the NVIDIA Jetson does not support the OV5647 Sensor.

Before wiring up the Jetson, make sure it is completely powered off to avoid damaging the Jetson or the camera. On the Jetson, carefully lift the plastic latch covering the MIPI CSI-2 camera connector port, as shown below:

The camera should have a plastic ribbon cable with a blue edge. Insert the ribbon into the port with the blue edge facing away from the Jetson, then push the connector latch back down to close the port.

To test whether your camera is installed properly, turn on your Jetson, open a terminal and run this command to check if your camera is detected:

ls -ltrh /dev/video*

If your camera is connected properly, you should receive an output similar to the one below:

crw-rw----+ 1 root video 81, 0 May 26 08:55 /dev/video0
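Once /dev/video0 appears, you can read frames from the CSI camera in Python. A common approach on the Jetson, and an assumption here rather than necessarily what the project's main.py does, is OpenCV's GStreamer backend combined with NVIDIA's nvarguscamerasrc element. The sketch below builds such a pipeline string; the resolution and frame-rate defaults are illustrative, and it assumes an OpenCV build with GStreamer support (the OpenCV bundled with JetPack typically has it).

```python
"""Sketch: open the CSI camera (IMX219) from Python via OpenCV + GStreamer.

Assumes OpenCV was built with GStreamer support. The defaults below are
illustrative values, not taken from the license plate repository.
"""


def gstreamer_pipeline(
    sensor_id: int = 0,
    capture_width: int = 1920,
    capture_height: int = 1080,
    display_width: int = 960,
    display_height: int = 540,
    framerate: int = 30,
    flip_method: int = 0,
) -> str:
    """Build a GStreamer pipeline string for NVIDIA's nvarguscamerasrc element."""
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        # Capture into NVMM (GPU) memory at the sensor resolution.
        f"video/x-raw(memory:NVMM), width=(int){capture_width}, "
        f"height=(int){capture_height}, framerate=(fraction){framerate}/1 ! "
        # nvvidconv scales/flips and copies frames to CPU memory as BGRx.
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width=(int){display_width}, height=(int){display_height}, "
        "format=(string)BGRx ! "
        # videoconvert drops the padding byte so OpenCV receives plain BGR.
        "videoconvert ! video/x-raw, format=(string)BGR ! appsink"
    )


if __name__ == "__main__":
    import cv2  # imported here so the helper above has no hard OpenCV dependency

    cap = cv2.VideoCapture(gstreamer_pipeline(), cv2.CAP_GSTREAMER)
    ok, frame = cap.read()
    print("frame captured:", ok, None if frame is None else frame.shape)
    cap.release()
```

If the capture fails, flip_method (0 to 7) and the capture size are the usual knobs to adjust; the capture size has to match one of the sensor modes the camera supports.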

Download the Roboflow Inference Server

Roboflow's Hosted API is a versatile way to use models trained on Roboflow, and it runs on state-of-the-art infrastructure that autoscales as needed. However, the main limitation of the Hosted API is that it may not be ideal when bandwidth is limited or when production data cannot leave your local network.

Roboflow's Inference Server is an alternative to the Hosted API that addresses these limitations by serving your model locally. To install the Roboflow Inference Server, pull the Docker image:

sudo docker pull roboflow/inference-server:jetson

You can then start the inference server using this command:

sudo docker run --net=host --gpus all roboflow/inference-server:jetson

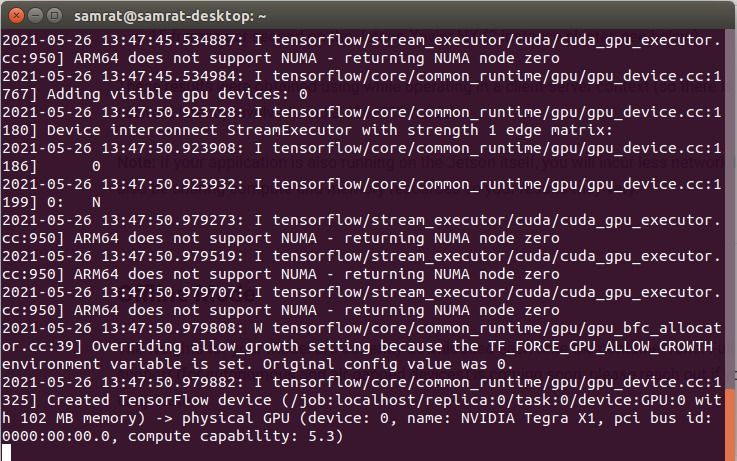

After that, your terminal output should look something like this:

And just like that, you will have installed and started the local inference server. Note: We recommend running the latest version of NVIDIA JetPack on your Jetson when using our inference server.

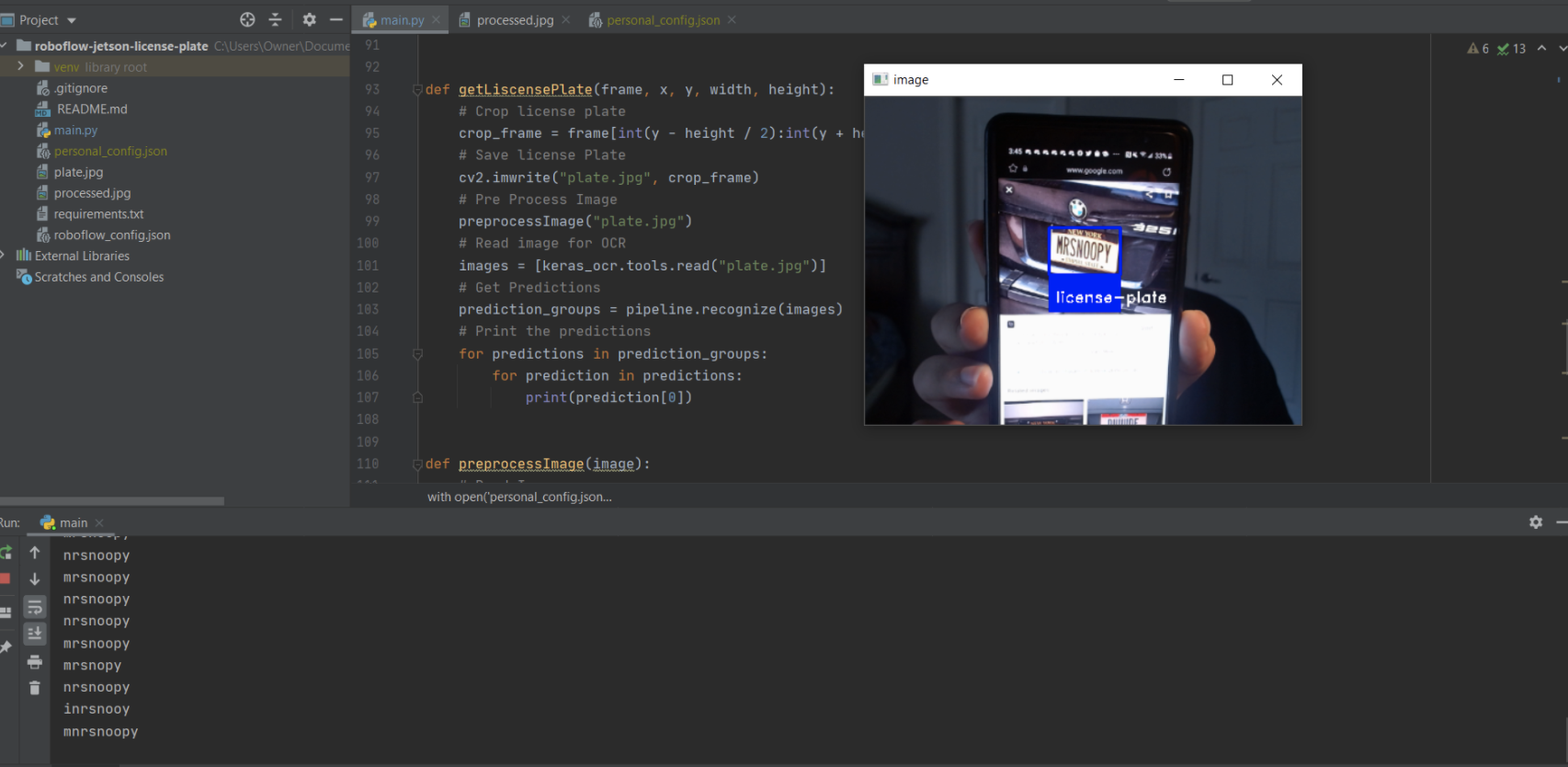
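Because the container runs with --net=host, the server binds directly to the Jetson's own network interfaces. To confirm from a script that it is listening before you start sending frames, a plain TCP check is enough. This sketch assumes the server listens on port 9001; that port number is an assumption, so adjust PORT to whatever your container reports.

```python
"""Sketch: wait until the local inference server accepts TCP connections.

Assumption: the container listens on port 9001. Change PORT if yours differs.
"""
import socket
import time

HOST = "127.0.0.1"
PORT = 9001  # assumed port of the inference server container


def server_is_up(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something is accepting TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers connection refused and timeouts
        return False


def wait_for_server(host: str, port: int, attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll server_is_up() until it succeeds or the attempts run out."""
    for _ in range(attempts):
        if server_is_up(host, port):
            return True
        time.sleep(delay)
    return False


if __name__ == "__main__":
    print("inference server reachable:", wait_for_server(HOST, PORT))
```

This only proves the port is open, not that your model has loaded; the first inference request can still take a while as the server downloads and prepares the model.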

Adapting the License Plate Detection Project for the Jetson

Before adapting the License Plate Detection project for the Jetson, we recommend reading through the preceding blog post. We will also need to install TensorFlow in a Jetson-compatible way, but first, clone the repository and switch to the appropriate branch:

git clone https://github.com/roboflow-ai/roboflow-jetson-license-plate.git

git -C roboflow-jetson-license-plate checkout nvidia-jetson

After cloning the repository, if you plan on using Roboflow's inference server, you will want to open the roboflow_config.json file. It should look something like this:

{
    "__comment1": "Obtain these values via Roboflow",
    "ROBOFLOW_API_KEY": "xxxxxxxxxx",
    "ROBOFLOW_MODEL": "xx-name--#",
    "ROBOFLOW_SIZE": 416,
    "LOCAL_SERVER": false,
    "__comment2": "The following are only needed for infer-async.py",
    "FRAMERATE": 24,
    "BUFFER": 0.5
}

To use the Jetson inference server, change the "LOCAL_SERVER" value to true and enter your Roboflow API key and model ID in the "ROBOFLOW_API_KEY" and "ROBOFLOW_MODEL" fields, respectively.
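The sketch below shows one way a script could use these fields to choose between the hosted API and the local server. It is not the repository's code, and both base URLs are assumptions: the hosted one follows the form https://detect.roboflow.com/<model>?api_key=<key>, and the local one assumes the server exposes the same path on port 9001 of the Jetson.

```python
"""Sketch: read roboflow_config.json and pick the inference endpoint.

Assumptions (not taken from the repository): the hosted endpoint has the
form https://detect.roboflow.com/<model>?api_key=<key>, and the local
server exposes the same path on http://127.0.0.1:9001/.
"""
import json
from urllib.parse import quote, urlencode

HOSTED_BASE = "https://detect.roboflow.com/"  # assumed hosted endpoint
LOCAL_BASE = "http://127.0.0.1:9001/"  # assumed local inference server address


def load_config(path: str = "roboflow_config.json") -> dict:
    """Load the project's JSON config file into a dict."""
    with open(path) as f:
        return json.load(f)


def inference_url(config: dict) -> str:
    """Build the detection URL, switching on the LOCAL_SERVER flag."""
    base = LOCAL_BASE if config.get("LOCAL_SERVER", False) else HOSTED_BASE
    query = urlencode({"api_key": config["ROBOFLOW_API_KEY"]})
    # quote() keeps "/" intact, so model IDs like "name/2" survive unchanged.
    return base + quote(config["ROBOFLOW_MODEL"]) + "?" + query


if __name__ == "__main__":
    cfg = load_config()
    target = "local server" if cfg.get("LOCAL_SERVER") else "hosted API"
    # Print only the part before "?" so the API key does not end up in logs.
    print(target, "->", inference_url(cfg).split("?")[0])
```

Keeping the switch in one function means the rest of the program never needs to know which backend it is talking to.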

Next, we need to install a specific version of TensorFlow and its dependencies. First, install the required system packages:

sudo apt-get update

sudo apt-get install libhdf5-serial-dev hdf5-tools libhdf5-dev zlib1g-dev zip libjpeg8-dev liblapack-dev libblas-dev gfortran

Install and upgrade pip3, then install TensorFlow's dependencies (these need to be installed as root):

sudo apt-get install python3-pip

sudo pip3 install -U pip testresources setuptools==49.6.0

sudo pip3 install -U numpy==1.19.4 future==0.18.2 mock==3.0.5 h5py==2.10.0 keras_preprocessing==1.1.1 keras_applications==1.0.8 gast==0.2.2 futures protobuf pybind11
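Because TensorFlow on the Jetson is sensitive to these exact versions, it can help to confirm the pins took effect before installing TensorFlow itself. The sketch below mirrors the pinned packages from the command above; the names PINS, mismatched_pins and installed_version are hypothetical. It uses importlib.metadata, which needs Python 3.8 or newer; JetPack 4.x ships Python 3.6, where it assumes the separately installed importlib_metadata backport. Package-name normalization (underscores versus hyphens) also varies between versions of that module, so a package reported as missing may just need its hyphenated name.

```python
"""Sketch: confirm the pinned TensorFlow dependencies were actually installed."""
from typing import Callable, Dict, Optional

# Pins copied from the pip3 command above.
PINS = {
    "numpy": "1.19.4",
    "future": "0.18.2",
    "mock": "3.0.5",
    "h5py": "2.10.0",
    "keras_preprocessing": "1.1.1",
    "keras_applications": "1.0.8",
    "gast": "0.2.2",
}


def mismatched_pins(
    pins: Dict[str, str], get_version: Callable[[str], Optional[str]]
) -> Dict[str, Optional[str]]:
    """Return {package: installed version or None} for every pin that does not match."""
    bad = {}
    for name, wanted in pins.items():
        found = get_version(name)
        if found != wanted:
            bad[name] = found
    return bad


def installed_version(name: str) -> Optional[str]:
    """Look up an installed distribution's version, or None if it is missing."""
    try:
        from importlib import metadata  # Python 3.8+
    except ImportError:
        import importlib_metadata as metadata  # assumed backport on older Pythons
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    problems = mismatched_pins(PINS, installed_version)
    print("all pins satisfied" if not problems else problems)
```

Passing the lookup function in as a parameter keeps the comparison logic independent of how versions are discovered.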

Then you can install TensorFlow as follows:

sudo pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v45 tensorflow

Note: This installs a version of TensorFlow that is compatible with JetPack 4.5. To install TensorFlow for a different JetPack version, you must change the extra index URL. For example, the extra index URL for JetPack 4.4 is https://developer.download.nvidia.com/compute/redist/jp/v44
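The two URLs above share a visible pattern: the JetPack version with its dots removed, appended to .../redist/jp/v. The hypothetical helper below just applies that pattern. It is an inference from those two examples, not an NVIDIA-documented rule, so check that the resulting URL exists before relying on it.

```python
"""Sketch: derive NVIDIA's pip extra index URL from a JetPack version string.

Assumption: the URL is the version with its dots removed, as in the
4.5 -> jp/v45 and 4.4 -> jp/v44 examples. Verify the URL before use.
"""
BASE = "https://developer.download.nvidia.com/compute/redist/jp/"


def extra_index_url(jetpack_version: str) -> str:
    """Turn a version like "4.5" into ".../jp/v45" by dropping the dots."""
    digits = "".join(jetpack_version.strip().split("."))
    return f"{BASE}v{digits}"


if __name__ == "__main__":
    url = extra_index_url("4.5")
    print(f"sudo pip3 install --pre --extra-index-url {url} tensorflow")
```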

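Install the remaining project requirements in a virtual environment. The --system-site-packages flag lets the environment use the TensorFlow you installed system-wide in the previous step: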

cd roboflow-jetson-license-plate

sudo apt-get install python3-venv

python3 -m pip install --user virtualenv

python3 -m venv env --system-site-packages

source env/bin/activate

pip3 install -r requirements.txt
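Because the environment was created with --system-site-packages, the TensorFlow installed with sudo pip3 should be importable from inside it. This small sketch (the function names are hypothetical) checks both that a virtual environment is active and that TensorFlow can be found, without paying the cost of actually importing it.

```python
"""Sketch: check that the venv is active and that TensorFlow is visible from it."""
import importlib.util
import sys


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the system interpreter.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def module_available(name: str) -> bool:
    # find_spec locates a top-level module without importing it.
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    print("virtualenv active:", in_virtualenv())
    print("tensorflow importable:", module_available("tensorflow"))
```

If the second line prints False while the first prints True, the environment was most likely created without --system-site-packages.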

Then run the main.py file:

python3 main.py

And just like that you should be set to run a Roboflow model on your Jetson via the Roboflow inference server.
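Before the OCR step, each detected plate has to be cropped out of the frame. Assuming the detector returns Roboflow's usual object-detection JSON (a predictions list whose x and y are box centres, with width and height in pixels), the conversion to crop coordinates can be sketched as below. The function name plate_boxes and the min_conf threshold are hypothetical, not part of the project.

```python
"""Sketch: turn detection predictions into pixel boxes for cropping plates.

Assumes the Roboflow object-detection JSON shape: a "predictions" list whose
entries hold centre coordinates x, y plus width, height, class, confidence.
"""
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), usable as frame[y1:y2, x1:x2]


def plate_boxes(response: Dict, img_w: int, img_h: int, min_conf: float = 0.5) -> List[Box]:
    """Convert centre/size predictions into clamped corner boxes, skipping weak ones."""
    boxes = []
    for p in response.get("predictions", []):
        if p.get("confidence", 0.0) < min_conf:
            continue
        # Convert centre/size to corners and clamp to the image bounds.
        x1 = max(0, int(p["x"] - p["width"] / 2))
        y1 = max(0, int(p["y"] - p["height"] / 2))
        x2 = min(img_w, int(p["x"] + p["width"] / 2))
        y2 = min(img_h, int(p["y"] + p["height"] / 2))
        if x2 > x1 and y2 > y1:  # drop boxes that collapse after clamping
            boxes.append((x1, y1, x2, y2))
    return boxes
```

With a NumPy frame from OpenCV, each box can then be cropped as frame[y1:y2, x1:x2] and handed to the OCR model.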

Troubleshooting Potential Errors

During the deployment process, I came across two primary issues:

OSError: library geos_c

OSError: Could not find library geos_c or load any of its variants ['libgeos_c.so.1', 'libgeos_c.so']

This error indicates that the Jetson is missing the GEOS C library (libgeos_c), a system package that one of the project's Python dependencies needs in order to function properly.

You can easily solve this error by installing the appropriate system package:

sudo apt-get install libgeos-dev
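To confirm the fix without rerunning the whole project, you can ask the system's library search to locate and load GEOS. This sketch uses ctypes.util.find_library, which on Linux consults the ldconfig cache; the helper name geos_loadable is hypothetical, and a True result suggests, rather than guarantees, that the OSError is gone.

```python
"""Sketch: check whether the GEOS C library can be found and loaded."""
import ctypes
import ctypes.util


def geos_loadable() -> bool:
    """Return True if libgeos_c is on the library search path and loads cleanly."""
    name = ctypes.util.find_library("geos_c")  # e.g. "libgeos_c.so.1", or None
    if name is None:
        return False
    try:
        ctypes.CDLL(name)
        return True
    except OSError:
        return False


if __name__ == "__main__":
    print("libgeos_c loadable:", geos_loadable())
```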

ImportError: Cannot allocate memory in static TLS block

ImportError: /usr/lib/aarch64-linux-gnu/libgomp.so.1: cannot allocate memory in static TLS block

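To fix this error, preload libgomp by setting the LD_PRELOAD environment variable before running the script:

export LD_PRELOAD=/usr/lib/aarch64-linux-gnu/libgomp.so.1

Note that export only affects the current terminal session; to make the setting persistent, add the same line to your ~/.bashrc.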
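Alternatively, a script can apply the variable to itself. The dynamic loader reads LD_PRELOAD only when a process starts, so assigning it through os.environ inside an already running interpreter is too late. The hypothetical wrapper below instead re-executes the script once with the variable set; it is a sketch of that technique, not something the repository does.

```python
"""Sketch: relaunch the current script with libgomp in LD_PRELOAD.

LD_PRELOAD is only honoured at process start, so the script replaces itself
with a fresh interpreter that has the variable set. Hypothetical wrapper.
"""
import os
import sys

LIBGOMP = "/usr/lib/aarch64-linux-gnu/libgomp.so.1"


def with_preload(env, lib: str) -> dict:
    """Return a copy of env whose LD_PRELOAD includes lib (space/colon separated list)."""
    new_env = dict(env)
    entries = new_env.get("LD_PRELOAD", "").replace(":", " ").split()
    if lib not in entries:
        new_env["LD_PRELOAD"] = " ".join([lib] + entries)
    return new_env


if __name__ == "__main__":
    env = with_preload(os.environ, LIBGOMP)
    if env != dict(os.environ):
        # Replace this process; the relaunched copy already has lib preloaded,
        # so with_preload() returns an unchanged env and no second exec happens.
        os.execve(sys.executable, [sys.executable] + sys.argv, env)
    # The rest of the program (e.g. the imports that hit the TLS error) goes here.
```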

Conclusion

Congratulations! You now know how to train a license plate detection model with Roboflow and deploy it to the NVIDIA Jetson.

As a next step, try this video tutorial:

Cite this Post

Use the following entry to cite this post in your research:

Samrat Sahoo, Jacob Solawetz. (May 27, 2021). License Plate Detection and OCR on an NVIDIA Jetson. Roboflow Blog: https://blog.roboflow.com/license-plate-detection-jetson/