Creating successful computer vision models requires handling an ever growing set of edge cases.

At the 2020 Conference on Computer Vision and Pattern Recognition (CVPR), Tesla's Senior Director of AI, Andrej Karpathy, gave a talk on how Tesla is building autonomous vehicles. Karpathy's team is responsible for building the neural networks that create Tesla Autopilot. Given Tesla's industry-leading position in tackling self-driving computer vision (without LiDAR), the process Karpathy's team employs at Tesla creates actionable advice for every team solving problems with computer vision.

Don't forget to like and subscribe.

Consider watching the full CVPR talk, available here.

Teaching Cars to Stop

In one particular portion of the talk, Karpathy demonstrates the challenge of building a neural network that successfully identifies a stop sign so that a car can learn to stop where when a stop sign is recognized. On the surface, the task sounds relatively straight forward: stop signs are large red octagons, often easily identifiable, and uniformly positioned (right side of the road, attached to a pole).

In fact, a simple Google Image Search for Stop Sign yields the below:

From that search, the we see the most typical stop sign example that you may picture in your head when thinking about a stop sign at an intersection:

However, Karpathy outlines a series of edge cases – images of stop signs you may not anticipate – that may be impossible to anticipate before seeing them in the wild.

Before scrolling further: what edge cases can you think of that differ from a stop sign like the one pictured above?

Stop Edge Cases

Unfortunately, the world isn't so clean. Consider the below edge cases in teaching a model to recognize a stop sign.

Occlusion

Stop signs aren't always clearly visible. Objects often occlude our (and machines's) ability to see them clearly. Karpathy shared the following examples from their dataset:

Occlusion blocking the view of stop signs. (Credit: Karpathy, Tesla)

Mounting

Stop signs are not always mounted on posts. Tesla's AI Director shared additional examples:

Stop signs that are not mounted on metal poles, as is commonly expected. (Credit: Karpathy, Tesla)

Exceptions

Stop signs do not always indicate one needs to stop. Consider the following examples from Tesla's dataset:

These stops signs say stop – except when, for example, when a vehicle is turning right. (Credit: Karpathy, Tesla)

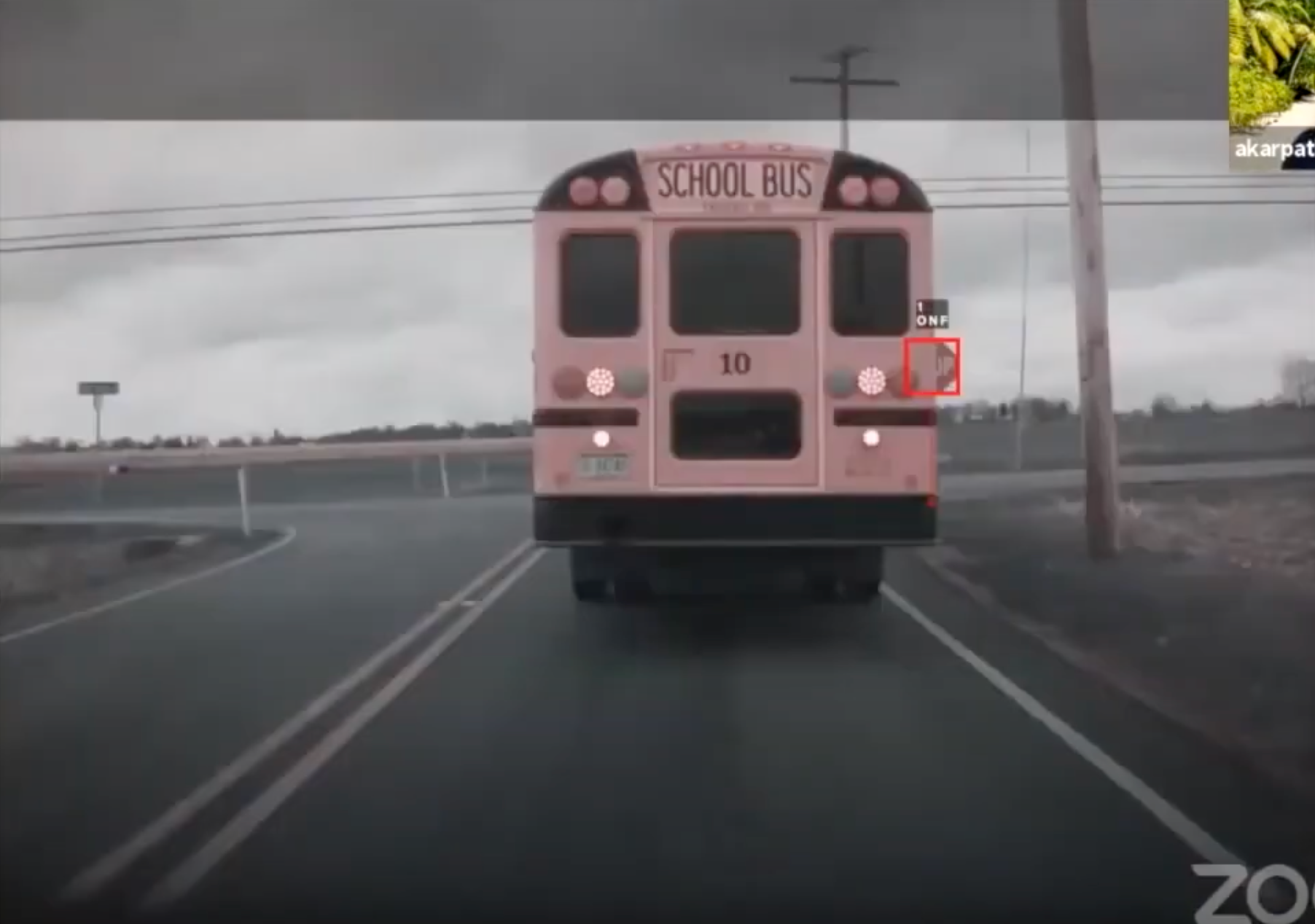

Conditional

Not all stops signs are always in an active state. Consider, for example, a stop sign attached to a school bus, a swinging gate, or a worker on the road:

Seeing a stop sign does not always mean it is in an active state requiring one to stop their vehicle. (Credit: Karpathy, Tesla)

How Tesla Responds to Edge Cases

After Andrej Karpathy walks through a seemingly hilarious number of stop sign edge cases, he describes how Tesla responsibly builds reliable vision systems.

"Because this is a product in the hands of customers, you are forced to go through the long tail. You cannot do just 95 percent [of cases] and call it a day. We have to make this work. And so that long tail brings all kinds of interesting challenges."

-Andrej Kaparthy, CVPR 2020 (emphasis mine)

In the case of Tesla's self-driving product, they are already providing capabilities to customers, creating a high bar for quality. Karpathy's team is focused not only on the "happy case" – a stop sign clearly visible on top of a silver pole at an intersection – but in fact deeply focused on the atypical cases. Tesla obsesses over dataset curation.

And it shows: Tesla likely has the world's most comprehensive dataset on occluded and exception-ridded stop signs in the world:

Tesla is spending just as much time on test-set curation as they are on training set curation to ensure their models are ready for the wide array of edge cases vehicles may encounter on the road.

Oh, and don't even get Karpathy started on speed limit signs...

This Affects Your Models, Too

Chances are you didn't guess all of the possible edge cases when considering what a stop sign looks like. There's an even higher chance the above edge cases fail to capture the full variation of all stop sign edge cases!

What this means for your computer vision model creation process is a few things.

Emphasize continued dataset curation over model selection. It is rumored that Karpathy spends hundreds of hours on reviewing images – not model building – each and every day. Ensuring your image dataset is representative of conditions your models will see is essential to creating high quality models an debugging model performance. Ben Hamner, Kaggle's CTO, recently shared similar thoughts on Twitter.

Collect as much data from your real world production conditions as possible. The data you're training on may not be the same data your models will see in production. As much as possible, collect data from the conditions where your model will be run in production rather than your training data.

There may be circumstances where having data from production conditions simply isn't available – like capturing the presence of an unlikely event. In these situations, consider of your training dataset is sufficiently strong to create an initial deploy model, and the continue to collect data once the model is deployed to production. Tesla, for example, is regularly feeding millions of images back to headquarters on a daily basis from all of its cars on the road. Roboflow's Upload API makes it seamless to capture and feed images from production conditions back to an ever-growing dataset – like putting your data collection task on autopilot.

Monitor and update dataset quality. Dataset health is not static. Keeping track of number of images, annotations across classes, class balance, and annotation quality is an ongoing endeavor.

"Your dataset is alive, and your labeling instructions are changing all the time. You need to curate and change your dataset all the time."

-Andrej Kaparthy, CVPR 2020

Tools like Roboflow's Dataset Health Check help teams monitor their dataset quality so they can focus on what is unique to their domain problem.

In sum, a happy dataset is a happy model – so don't under emphasis the time you should be spending on curating your ever-growing dataset.

Cite this Post

Use the following entry to cite this post in your research:

Joseph Nelson. (Oct 11, 2020). How Tesla Teaches Cars to Stop. Roboflow Blog: https://blog.roboflow.com/tesla-stop-signs-computer-vision/