Integrating state-of-the-art computer vision into applications and business logic can be complex. Advanced projects may require significant amounts of code, which must then be tested and deployed everywhere your application runs.

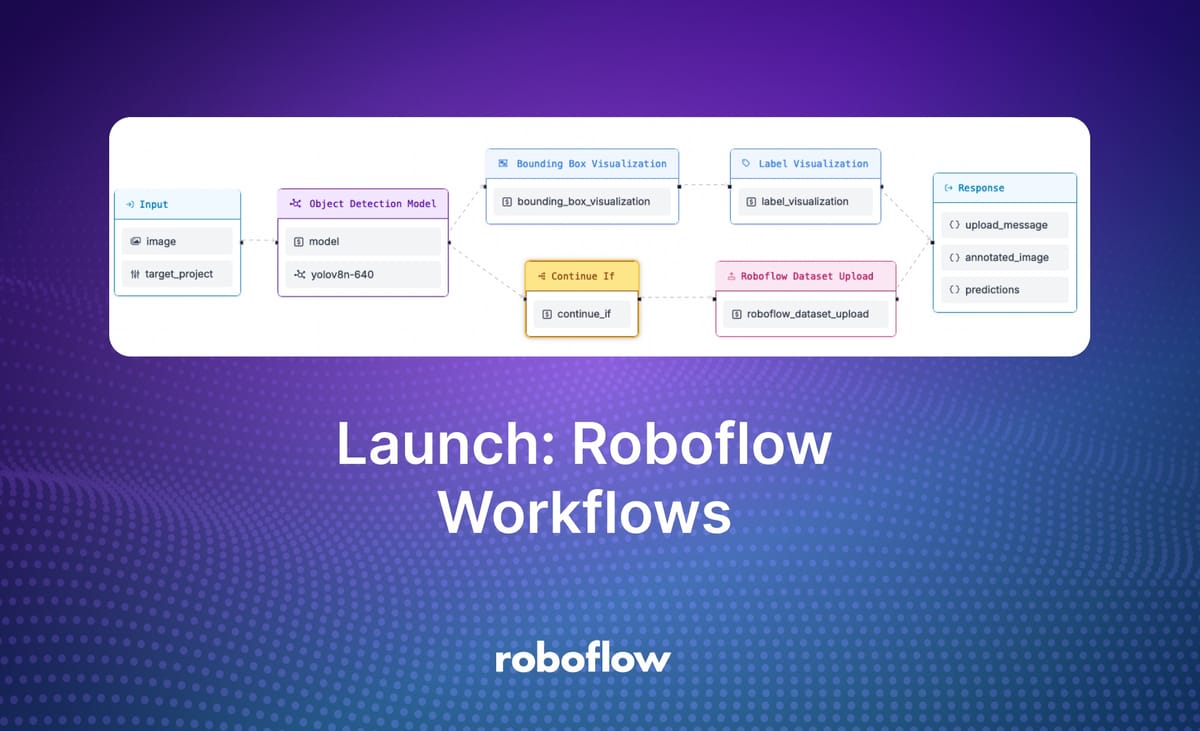

Roboflow is excited to announce Workflows, a new feature that lets you build complex applications that use the state of the art in computer vision. Workflows offers a web-based editor in which you can build your application logic. Once you have a Workflow ready, you can copy a code snippet to deploy it to all of your devices on images, videos, and live video streams.

Below is an example of a Workflow that detects common objects such as cats, cell phones, and coffee cups.

To use this Workflow, click “Run Preview” at the bottom of the Workflow. Then, drag in any image that contains a common object (e.g. cars, animals, household items) and click “Run Preview” again to see the output from the model. Here is an example output returned by the model:

In this blog post, we are going to explore what is possible in Workflows: the features available, alongside use cases to ignite your imagination as you experiment and build new projects.

You can find a collection of Workflow templates to try immediately in the Roboflow Workflow Templates gallery.

Introducing Workflows

Roboflow Workflows lets you build complex computer vision applications in your browser. Workflows is based on blocks, each of which performs a specific function and can be connected to other blocks to create an application.
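The block model described above can be illustrated with a short Python sketch. It assumes each block is a plain function that reads a shared dictionary of outputs and returns new named outputs for later blocks. The block names (`detect`, `filter_by_class`, `count`) and the `run_pipeline` helper are hypothetical; real Workflows are built and connected in the web editor, not with this code.

```python
from typing import Any, Callable, Dict, List

# Hypothetical block type: reads the shared state, returns new named outputs.
Block = Callable[[Dict[str, Any]], Dict[str, Any]]


def detect(state: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for a model block; returns fixed example predictions.
    return {"predictions": [
        {"class": "cup", "confidence": 0.91},
        {"class": "phone", "confidence": 0.55},
    ]}


def filter_by_class(state: Dict[str, Any]) -> Dict[str, Any]:
    # Keep only predictions whose class matches the Workflow input.
    wanted = state["target_class"]
    return {"filtered": [p for p in state["predictions"] if p["class"] == wanted]}


def count(state: Dict[str, Any]) -> Dict[str, Any]:
    # Count the predictions that passed the filter.
    return {"count": len(state["filtered"])}


def run_pipeline(blocks: List[Block], inputs: Dict[str, Any]) -> Dict[str, Any]:
    # Run blocks in order, merging each block's outputs into the shared state.
    state = dict(inputs)
    for block in blocks:
        state.update(block(state))
    return state


result = run_pipeline([detect, filter_by_class, count], {"target_class": "cup"})
print(result["count"])  # 1
```

Because every block only reads from and writes to the shared state, blocks can be reordered or swapped (for example, a different model in place of `detect`) without changing the others.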

With Workflows, you can build applications that use:

- Your fine-tuned models hosted on Roboflow;

- State-of-the-art foundation models like CLIP and SAM-2;

- Visualizers to show the detections returned by your models;

- LLMs like GPT-4 with Vision;

- Conditional logic to run parts of a Workflow if a condition is met;

- Classical computer vision algorithms like SIFT and dominant color detection;

- And more.

Workflows can contain as many blocks as you want to represent your application logic. Let’s talk about some advanced use cases that you can build with no code using Workflows.

Build Advanced Active Learning Pipelines

You can build vision systems that run an object detection or segmentation model and save the results, alongside the input image, into a dataset. This enables active learning pipelines, in which the images sent to your model are saved into your dataset for use in training future model versions.

You can build active learning systems that add every image that runs through a Workflow to your dataset. Combined with a foundation model like SAM-2, this approach can be used to auto-label data.

You can also build active learning systems that upload only the images that meet a certain condition, such as prediction confidence falling within a particular range. This is useful for gathering data that could be used to improve model performance.
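The confidence-range condition in the paragraph above can be sketched in a few lines of Python. This assumes predictions arrive as dictionaries with a `confidence` key; the `should_upload` name and the 0.3 to 0.7 band are illustrative choices, not Workflows defaults. Keeping the middle of the range targets images the model is unsure about, which are often the most informative ones to label.

```python
from typing import Dict, List


def should_upload(predictions: List[Dict], low: float = 0.3, high: float = 0.7) -> bool:
    """Return True if any prediction's confidence falls inside [low, high].

    The band is a hypothetical threshold: very confident predictions add little
    new information, and very low ones are often noise.
    """
    return any(low <= p["confidence"] <= high for p in predictions)


print(should_upload([{"class": "car", "confidence": 0.95}]))  # False
print(should_upload([{"class": "car", "confidence": 0.52}]))  # True
```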

Here is an example of an active learning Workflow:

Here is what happens when the Workflow runs:

Images that run through the Workflow are automatically added to a Roboflow dataset. Predictions from the model are added as annotations.

You can read more about how this Workflow works in our How to Build a Computer Vision Active Learning Workflow tutorial.

You can also use the "Continue If" block in Workflows to record predictions only when a condition is met.

Object Counting with Pass/Fail Logic

You can build Workflows that make a determination based on specified conditions. For example, you can build a system that detects a blockage on an assembly line, signaled by too many items in a given region.

Here is an example system that returns TRUE if an assembly line is over capacity and FALSE otherwise.
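The pass/fail check above boils down to counting detections inside a region and comparing the count with a limit. A minimal sketch, assuming Roboflow-style detections with center `x` and `y` coordinates in pixels, an axis-aligned rectangular region, and a hypothetical capacity of 5 items; the function names are illustrative.

```python
from typing import Dict, List, Tuple

Region = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def count_in_region(detections: List[Dict[str, float]], region: Region) -> int:
    # A detection counts as "in the region" if its center point lies inside it.
    x_min, y_min, x_max, y_max = region
    return sum(
        1 for d in detections
        if x_min <= d["x"] <= x_max and y_min <= d["y"] <= y_max
    )


def over_capacity(detections: List[Dict[str, float]], region: Region, capacity: int = 5) -> bool:
    # TRUE when more items are in the region than the line can handle.
    return count_in_region(detections, region) > capacity


boxes = [{"x": 100 + 20 * i, "y": 200} for i in range(7)]  # 7 items in a row
zone = (90, 150, 250, 250)
print(count_in_region(boxes, zone))  # 7
print(over_capacity(boxes, zone))  # True
```

Using the box center rather than requiring the whole box to fit inside the region means items that straddle the region's edge are counted once, based on where most of the item sits.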

Here is an example of the system in action:

You can read more about how this Workflow works in our Monitor Assembly Line Throughput with Computer Vision guide.

Build an Inventory Management System

You can build advanced inventory management systems for retail applications with Workflows.

For example, you can use YOLO World, a zero-shot model, to identify generic products (e.g. coffee bags), crop objects of interest, then send each cropped object to GPT to extract more information about it.

We built a system that identifies information about a product SKU. This information could then be saved in an inventory management system.

To test this Workflow, drag in an image that contains one or more coffee bags, such as this one:

Add your OpenAI API key in the input field. Then, submit the Workflow. You will see a result with information from the system, like this:

    "structured_output": {
        "roast date": "08/09/2020",
        "country": "Peru, Colombia",
        "flavour notes": "Chocolate, Caramel, Citrus"
    }

You can learn more about how this Workflow works in our How to Build a CPG Inventory Cataloging System guide.
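Once the Workflow returns `structured_output`, saving it to an inventory system is mostly a matter of mapping its keys onto your own record type. A minimal sketch, assuming the output has exactly the three keys shown in the example above; the `CoffeeSku` dataclass and the comma splitting of `country` and `flavour notes` are hypothetical choices for illustration.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class CoffeeSku:
    # Hypothetical inventory record for one coffee bag.
    roast_date: str
    countries: List[str]
    flavour_notes: List[str]


def split_list(value: str) -> List[str]:
    # "Peru, Colombia" -> ["Peru", "Colombia"]; empty entries are dropped.
    return [part.strip() for part in value.split(",") if part.strip()]


def to_record(structured_output: dict) -> CoffeeSku:
    # Map the model's free-text keys onto typed fields.
    return CoffeeSku(
        roast_date=structured_output["roast date"],
        countries=split_list(structured_output["country"]),
        flavour_notes=split_list(structured_output["flavour notes"]),
    )


raw = '{"roast date": "08/09/2020", "country": "Peru, Colombia", "flavour notes": "Chocolate, Caramel, Citrus"}'
record = to_record(json.loads(raw))
print(record.countries)  # ['Peru', 'Colombia']
```

Since the values come from an LLM, a production version would likely validate each field (for example, checking the date format) before writing the record.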

Play Rock Paper Scissors

You can build Workflows that contain several conditional statements. For fun, the Roboflow team made a Workflow that lets you play rock paper scissors.

The Workflow does the following:

- Runs an object detection model to identify hand gestures of rock, paper, and scissors;

- Determines which prediction is on the left and which is on the right (the left is player 1 and the right is player 2);

- Determines which player won according to the rules of the game;

- Returns the model's predictions and which player won.
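The left/right assignment and the game rules from the list above fit in a few lines of Python. This sketch assumes each prediction is a dictionary with a `class` of `rock`, `paper`, or `scissors` and a center `x` coordinate, and that exactly two hands were detected. The function name is hypothetical; the Workflow itself expresses this logic with conditional blocks rather than code.

```python
from typing import Dict, List

# Each gesture maps to the gesture it beats.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def winner(predictions: List[Dict]) -> str:
    if len(predictions) != 2:
        raise ValueError("expected exactly two hands")
    # Sort by center x: the leftmost hand is player 1, the rightmost is player 2.
    left, right = sorted(predictions, key=lambda p: p["x"])
    p1, p2 = left["class"], right["class"]
    if p1 == p2:
        return "draw"
    return "player 1" if BEATS[p1] == p2 else "player 2"


# Rock is on the left (player 1), paper on the right (player 2).
print(winner([{"class": "paper", "x": 640}, {"class": "rock", "x": 120}]))  # player 2
```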

You can try the Workflow below. You will need an image of two people, each making a rock, paper, or scissors gesture with their hand.

Object Detection and OCR

You can use Workflows to build systems that run object detection models, process predictions, and then run business logic on the results.

For example, we built a system that reads shipping container IDs with four Workflow blocks. The system:

- Runs a model that detects shipping containers and container IDs.

- Filters detections to return only those that contain the class associated with container IDs.

- Crops the IDs.

- Sends each crop to GPT for OCR.
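The filter and crop steps in the list above can be sketched without any model code. This assumes Roboflow-style detections with center `x`, `y`, `width`, and `height` in pixels and a hypothetical class name `container_id`. Crop boxes are clamped to the image bounds so a detection at the edge of the frame does not produce an invalid crop. The OCR step is left out because it depends on how you call the OpenAI API.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def filter_class(detections: List[Dict], class_name: str) -> List[Dict]:
    # Keep only detections of the requested class.
    return [d for d in detections if d["class"] == class_name]


def crop_box(d: Dict, img_w: int, img_h: int) -> Box:
    # Convert a center-format box to corner coordinates, clamped to the image.
    left = max(0, int(d["x"] - d["width"] / 2))
    top = max(0, int(d["y"] - d["height"] / 2))
    right = min(img_w, int(d["x"] + d["width"] / 2))
    bottom = min(img_h, int(d["y"] + d["height"] / 2))
    return left, top, right, bottom


detections = [
    {"class": "container", "x": 400, "y": 300, "width": 600, "height": 400},
    {"class": "container_id", "x": 620, "y": 110, "width": 80, "height": 40},
]
ids = filter_class(detections, "container_id")
print([crop_box(d, 800, 600) for d in ids])  # [(580, 90, 660, 130)]
```

The returned tuple uses the same (left, upper, right, lower) order that Pillow's `Image.crop` expects, so each box could be passed to it directly to produce the image sent for OCR.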

You can try the Workflow below:

Note: You will need to add an OpenAI API key to try this Workflow.

Deploying Workflows

You can deploy a Workflow in three ways:

- To the Roboflow cloud using the Roboflow API;

- On a Dedicated Deployment server hosted by Roboflow and provisioned exclusively for your use; or

- On your own hardware.

The Workflows deployment documentation walks through exactly how to deploy Workflows using the various methods above.

Deploying to the cloud is ideal if you need an API to run your Workflows without having to manage your own hardware. With that said, for use cases where reducing latency is critical, we recommend deploying on your own hardware.

If you deploy your Workflow to the Roboflow cloud, you can run it on images. If you deploy on a Dedicated Deployment or your own hardware, you can run it on images, videos, webcam feeds, and RTSP streams.

To deploy a Workflow, click “Deploy Workflow” on any Workflow in your Roboflow Workspace. A window will then open with information about how you can deploy your Workflow.

When you first run a Workflow on your own hardware or a Dedicated Deployment, the models used in your Workflow will be downloaded to your device for use with the Workflow.

The time this takes depends on which models you use. Roboflow models should download in a few seconds, but foundation models like CLIP and SAM 2 may take several minutes.

The models are then cached on your device, so subsequent runs return results faster.
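The download-then-cache behavior described above follows a common pattern: check a local cache first, and fetch only on a miss. The sketch below is a generic illustration, not how Roboflow's inference server is implemented; the cache directory, the `load_model` helper, and the `fetch` callable are hypothetical stand-ins for the real model download.

```python
import tempfile
from pathlib import Path
from typing import Callable, List


def load_model(name: str, cache_dir: Path, fetch: Callable[[str], bytes]) -> bytes:
    """Return the weights for `name`, calling `fetch` only if they are not cached."""
    path = cache_dir / f"{name}.bin"
    if path.exists():
        return path.read_bytes()  # cache hit: no download
    data = fetch(name)  # cache miss: the slow first-run download
    cache_dir.mkdir(parents=True, exist_ok=True)
    path.write_bytes(data)
    return data


downloads: List[str] = []


def fake_fetch(name: str) -> bytes:
    # Hypothetical downloader that records each call instead of using the network.
    downloads.append(name)
    return b"weights-for-" + name.encode()


cache = Path(tempfile.mkdtemp())
load_model("sam2", cache, fake_fetch)
load_model("sam2", cache, fake_fetch)
print(downloads)  # ['sam2']
```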

More Examples

The Roboflow Workflow Templates gallery features many example Workflows. Here are a few that we have made:

- Detect vehicles

- Detect fish

- Remove the background from an image

- Detect small objects with SAHI

- Detect and read license plates

We have also written several guides that walk through specific Workflows step-by-step, including:

- How to Build an Automated Multimodal Data Labeling Pipeline

- How to Create a Retail Planogram using Computer Vision

- How to Build a Computer Vision Active Learning Workflow

- Monitor Assembly Line Throughput with Computer Vision

- Identify Solar Panel Locations with Computer Vision

- How to Build a CPG Inventory Cataloging System

- How to Build an Automated License Plate Reading Application

Conclusion

Roboflow Workflows is a low-code computer vision application builder. With Roboflow Workflows, you can build computer vision tools that implement complex, multi-step logic.

You can build a Workflow in your browser, then deploy it through the Roboflow API, a Dedicated Deployment provisioned exclusively for your use on Roboflow, or on your own hardware.

Workflows can be run on images with the Roboflow API, and on images, videos, webcam feeds, and RTSP streams on a Dedicated Deployment or your own hardware.

To get started with Roboflow Workflows, go to your Roboflow dashboard, click “Workflows” in the left sidebar, and create a new Workflow.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Aug 29, 2024). Launch: Roboflow Workflows. Roboflow Blog: https://blog.roboflow.com/workflows/