Announced by Google on March 11, 2025 as “The most capable model you can run on a single GPU or TPU”, Gemma 3 is a multimodal language model, the third in the Gemma series. Gemma 3 allows you to ask questions in text and provide images as context.

In this guide, we are going to walk through our qualitative analysis of Gemma 3’s capabilities using a standard set of images we use to evaluate multimodal models. Our tests cover tasks like visual question answering, zero-shot object detection, and OCR.

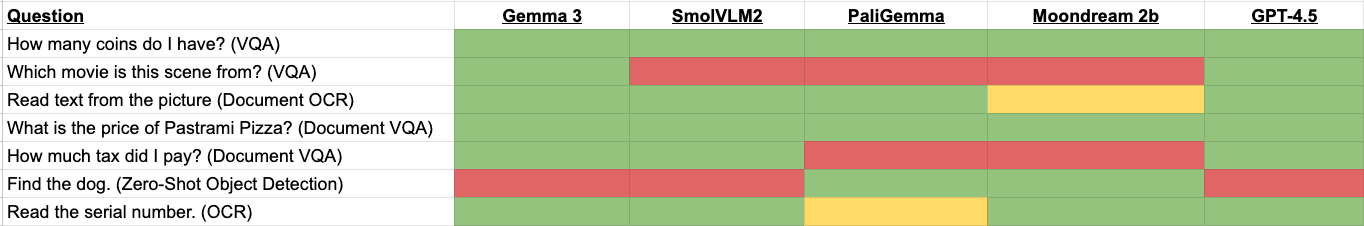

Here is a summary of our results:

Gemma 3 passed 6 of our 7 tests, failing only the zero-shot object detection test. It is one of only three multimodal models we have tested that answered at least six questions correctly: GPT-4.5 also answered six correctly, failing on zero-shot detection, and Qwen-VL-Plus passed all seven.

Without further ado, let’s talk more about Gemma 3 and walk through our findings.

What is Gemma 3?

Gemma 3 is a multimodal language model developed by Google. The model comes in four sizes: 1B, 4B, 12B, and 27B parameters. Larger sizes are generally more accurate but require more memory and compute. The model is released under Google's custom Gemma license.

The 1B model has a context window of 32K tokens, whereas the other sizes have a context window of 128K tokens, a 16x increase over previous models in the Gemma series. 128K tokens is enough to fit tens of thousands of words of text, or multiple images, in a single prompt.
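The 16x figure follows from the 8K-token context window of the earlier Gemma and Gemma 2 models:

```latex
\frac{128\text{K tokens}}{8\text{K tokens}} = 16
```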

The 1B model accepts only text, whereas the 4B, 12B, and 27B models accept both text and images as input. Their 128K context window makes them well suited to applications that involve large amounts of input data.

In addition, all model sizes aside from the 1B model were trained on a corpus that contained data from over 140 languages. This makes Gemma 3 a strong candidate for multimodal applications in languages other than English.

The model comes with instruction-tuned checkpoints that you can use to chat with the model. These checkpoints are available to download from Kaggle and Hugging Face. You can also use the models in Google AI Studio.

You can read more about the model implementation in the Gemma 3 technical report.

Evaluating Gemma 3 for Multimodal Tasks

We evaluated Gemma 3’s multimodal support on several tasks, including:

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Zero-shot object detection

- Real-world OCR
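The list above names six task types, but the summary counts seven tests because VQA was run twice (a movie scene and a menu). The tally can be sketched in a few lines of Python using the pass/fail outcomes reported in the sections below; the dictionary keys are illustrative labels chosen for this sketch, not an official benchmark format.

```python
# Pass/fail outcome for each of the seven prompts, as reported in this post.
# The keys are illustrative labels chosen for this sketch.
RESULTS = {
    "object_counting": True,
    "vqa_movie_scene": True,
    "vqa_menu_price": True,
    "document_ocr": True,
    "document_vqa_receipt": True,
    "real_world_ocr": True,
    "zero_shot_detection": False,
}


def score(results: dict[str, bool]) -> tuple[int, int]:
    """Return (tests passed, tests run); True counts as 1 when summed."""
    return sum(results.values()), len(results)


def failed(results: dict[str, bool]) -> list[str]:
    """Return the names of the tests the model failed, in insertion order."""
    return [name for name, passed in results.items() if not passed]


passed, total = score(RESULTS)
print(f"Gemma 3 passed {passed}/{total} tests")
print("Failed:", ", ".join(failed(RESULTS)))
```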

Below, we show our results from each test.

We used the gemma-3-12b-it instruction-tuned checkpoint hosted on Hugging Face Spaces to test the model.
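We ran our prompts through the hosted Space, but the same checkpoint can also be queried locally. The sketch below assumes a recent release of Hugging Face transformers that includes Gemma 3 support and its `image-text-to-text` pipeline, the Hub model ID `google/gemma-3-12b-it`, that you have accepted the model's license on Hugging Face, and a CUDA GPU with enough memory for a 12B model. The helper names `build_messages` and `ask_gemma` are our own, and the image URL in the usage example is a placeholder.

```python
def build_messages(image_url: str, question: str) -> list[dict]:
    """Build a single-turn chat pairing one image with one text question."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "url": image_url},
                {"type": "text", "text": question},
            ],
        }
    ]


def ask_gemma(image_url: str, question: str, max_new_tokens: int = 200) -> str:
    """Send one image and one question to gemma-3-12b-it and return the reply text."""
    # Imported here so build_messages() can be used without these heavy dependencies.
    import torch
    from transformers import pipeline

    # Assumes a CUDA GPU with enough memory for the 12B checkpoint in bfloat16.
    pipe = pipeline(
        "image-text-to-text",
        model="google/gemma-3-12b-it",
        device="cuda",
        torch_dtype=torch.bfloat16,
    )
    output = pipe(text=build_messages(image_url, question), max_new_tokens=max_new_tokens)
    # The pipeline returns the full conversation; the last turn is the model's reply.
    return output[0]["generated_text"][-1]["content"]
```

For example, `ask_gemma("https://example.com/coins.jpg", "How many coins do I have?")` would send an image and our object counting prompt. For repeated queries, create the pipeline once and reuse it; it is built inside the function here only to keep the sketch short.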

Gemma 3 object counting

We first tested Gemma on an object counting task. We provided the following image of coins on a table and asked the model “How many coins do I have?”:

The model responded:

Based on the image, you have four coins.

Gemma successfully counted the number of coins.

Gemma 3 Visual question answering (VQA)

We then tested Gemma on two VQA tasks:

- Identifying the popular movie a scene is from, and

- Answering a question about a menu.

We provided Gemma with the following image of a scene from the movie Home Alone and asked the model “Which movie is this scene from?”:

The model returned:

This scene is from the classic Christmas movie Home Alone.

You can tell by the iconic Christmas decorations, the fireplace, and the presence of Macaulay Culkin as Kevin

Gemma identified the movie and explained the visual cues behind its answer.

For our menu VQA test, we provided the following image of a menu and asked “What is the price of Pastrami Pizza?”:

The model returned:

According to the menu, Pastrami Pizza costs $27.

Gemma accurately identified the cost of the pastrami pizza.

Gemma 3 Document OCR

We next tested Gemma 3 on a document OCR task. We provided a screenshot of a paragraph of text on a web page and asked Gemma 3 to “Read text from the picture.” Here is the input image we used:

Gemma returned:

Here's the text from the picture:

"I was thinking earlier today that I have gone through, to use the lingo, eras of listening to each of Swift's Eras. Meta indeed. I started listening to Ms. Swift's music after hearing the Midnights album. A few weeks after hearing the album for the first time, I found myself playing various songs on repeat. I listened to the album in order multiple times."

Gemma got every character right, including the italics on the word “Midnights”. Preserving italics is something that some other vision language models we have tested have struggled with.
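A claim like “every character right” can be made measurable with character error rate (CER): the Levenshtein edit distance between the model's transcription and a hand-checked reference, divided by the length of the reference. A minimal sketch in plain Python follows; the function names are our own, and a plain-text comparison like this cannot check formatting such as italics.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions turning a into b."""
    # Classic dynamic programming, keeping only one previous row in memory.
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(
                min(
                    previous[j] + 1,  # delete char_a
                    current[j - 1] + 1,  # insert char_b
                    previous[j - 1] + (char_a != char_b),  # substitute (free if equal)
                )
            )
        previous = current
    return previous[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance divided by reference length; 0.0 means a perfect transcription."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return levenshtein(reference, hypothesis) / len(reference)
```

Under this measure, a perfect result like the one above corresponds to a CER of 0.0 against the reference text.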

Gemma 3 Document question answering

Next, we ran a test to evaluate Gemma 3’s receipt VQA capabilities. We provided the following image of a receipt and asked “How much tax did I pay?”:

Gemma returned:

Based on the image of the receipt you sent, you paid $2.30 in tax. It's listed as "Tax" on the bill.

The model successfully identified the tax paid as listed on the receipt.
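Free-form answers such as the receipt tax and the menu price can be scored automatically by pulling the dollar amount out of the reply and comparing it with the expected value. A minimal sketch, assuming answers write amounts with a `$` sign and optional cents; the helper names are our own. It uses `Decimal` rather than `float` so that amounts like $2.30 compare exactly.

```python
import re
from decimal import Decimal

# Matches "$27", "$2.30", or "$1,299.99"; assumes US-style dollar formatting.
DOLLAR_PATTERN = re.compile(r"\$(\d[\d,]*)(\.\d+)?")


def extract_dollar_amounts(answer: str) -> list[Decimal]:
    """Return every dollar amount mentioned in a model answer, in order."""
    return [
        Decimal(whole.replace(",", "") + cents)
        for whole, cents in DOLLAR_PATTERN.findall(answer)
    ]


def answer_matches(answer: str, expected: str) -> bool:
    """True if the first dollar amount in the answer equals the expected amount."""
    amounts = extract_dollar_amounts(answer)
    return bool(amounts) and amounts[0] == Decimal(expected)
```

For example, `answer_matches("According to the menu, Pastrami Pizza costs $27.", "27")` returns `True`.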

Gemma 3 Real-world OCR

We then turned to a real-world OCR task. We provided Gemma with the following photo of a serial number on a tire and asked the model to “Read the serial number”:

The model returned:

The serial number is 3702692432.

Gemma successfully read the serial number.

Gemma 3 Zero-shot object detection

Finally, we tested Gemma 3 on a zero-shot object detection task, asking it to provide the xyxy coordinates of a dog in an image. Many multimodal models fail this task, especially those not explicitly developed to support object detection.

We provided the following image and asked Gemma to “Find the dog. Return xyxy coordinates.”:

Gemma returned:

Here are the approximate xyxy coordinates for the dog in the image:

• x: 450

• y: 350

• x2: 750

• y2: 650

Note: These coordinates are relative to the image and are approximate.

Gemma’s answer is incorrect: the returned coordinates do not correspond to the dog’s position in the image.
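One way to make a detection verdict quantitative is to parse the four values from the reply and compute intersection over union (IoU) against a hand-labeled box. A minimal sketch follows; the parser assumes the `x:`, `y:`, `x2:`, `y2:` layout of the response above, the function names are our own, and any ground-truth box you compare against is yours to label.

```python
import re

# Matches lines such as "• x: 450" or "x2: 750"; "x2"/"y2" are tried before "x"/"y".
COORD_PATTERN = re.compile(r"\b(x2|y2|x|y)\s*:\s*(-?\d+(?:\.\d+)?)")

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def parse_xyxy(reply: str) -> Box:
    """Extract (x1, y1, x2, y2) from a reply that labels the values x, y, x2, y2."""
    values = {key: float(value) for key, value in COORD_PATTERN.findall(reply)}
    missing = {"x", "y", "x2", "y2"} - set(values)
    if missing:
        raise ValueError(f"reply is missing coordinates: {sorted(missing)}")
    return values["x"], values["y"], values["x2"], values["y2"]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two xyxy boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

For example, against a hypothetical ground-truth box `(40, 210, 380, 600)`, the parsed reply `(450, 350, 750, 650)` scores an IoU of 0.0 because the boxes do not overlap. A common convention counts a detection as correct at an IoU of 0.5 or higher.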

Conclusion

Gemma 3 is a multimodal vision model developed by Google. You can use Gemma 3 for a wide range of tasks including OCR, document VQA, object counting, and more.

In this guide, we walked through our analysis of the model, showing how Gemma 3 correctly answered 6 of 7 questions across different multimodal task types. This only scratches the surface of the tests you could run, though. Since Gemma 3 was explicitly trained on multilingual data, it would be interesting to test how the model performs on tasks in other languages.

Curious to learn more about how other multimodal models perform on our tests? Check out our list of multimodal model blog posts in which we analyze the capabilities of various new and state-of-the-art multimodal models.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Mar 13, 2025). Gemma 3: Multimodal and Vision Analysis. Roboflow Blog: https://blog.roboflow.com/gemma-3/