Released on April 14th, 2025, GPT-4.1 is a new series of multimodal models developed by OpenAI. The series comes with three models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano.

According to OpenAI, the new models outperform previous GPT models on many industry-standard benchmarks, from SWE-bench Verified (used for coding) to Video-MME (used to evaluate multimodal video understanding).

We ran a qualitative analysis using our standard set of prompts to evaluate the models' general capabilities. We ran our tests on both GPT-4.1 and GPT-4.1 nano.

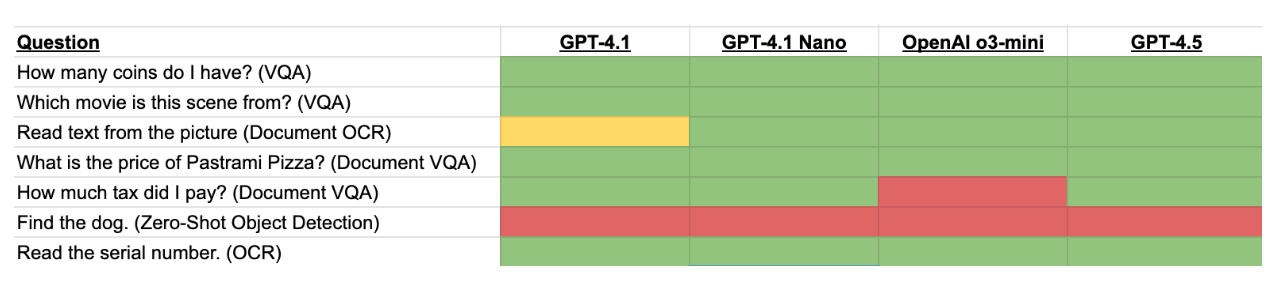

GPT-4.1 passed five of seven tests. If we exclude a minor error (missing italics in an OCR task), GPT-4.1 passed six tests. GPT-4.1 nano completed the OCR task perfectly, achieving six out of seven correct answers. For comparison, GPT-4.5 passed six of the seven tests. GPT-4.1, GPT-4.1 nano, and GPT-4.5 all failed our object detection test.

In this guide, we will walk through the prompts we ran, the answers from GPT-4.1, and our analysis from using the model.

Without further ado, let’s get started!

What is GPT-4.1?

GPT-4.1 is a new, multimodal model developed by OpenAI. GPT-4.1 models have a context window of 1 million tokens, making the models ideal for tasks that require long context. The models achieve state-of-the-art performance on a range of benchmarks, including SWE-bench Verified, Video-MME, and Scale’s MultiChallenge benchmark.

GPT-4.1 comes in three sizes:

- GPT-4.1

- GPT-4.1 mini

- GPT-4.1 nano

The smaller the model, the lower the latency you can expect.

GPT-4.1 is only available in the OpenAI API. According to the model launch post, the model “will only be available via the API”. The reasoning cited is:

“In ChatGPT, many of the improvements in instruction following, coding, and intelligence have been gradually incorporated into the latest version of GPT‑4o, and we will continue to incorporate more with future releases.”

You can also experiment with the model in the OpenAI Playground, which is ideal for exploring the model's capabilities in a visual interface.

Evaluating OpenAI’s GPT-4.1 Model

We evaluated OpenAI’s GPT-4.1 multimodal support on several tasks, including:

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Real-world OCR

- Zero-shot object detection

Below, we show our results from each test.

Our tests were run using the OpenAI Playground with the gpt-4.1 model.
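We ran our tests in the Playground, but the same prompts can be sent programmatically. Below is a minimal sketch, assuming the official `openai` Python package and its Chat Completions endpoint, with an `OPENAI_API_KEY` set in your environment. The helper names `build_image_messages` and `ask_about_image` are our own for illustration, and this is not the exact setup used for the results in this guide.

```python
import base64
from pathlib import Path


def build_image_messages(prompt: str, image_bytes: bytes,
                         mime_type: str = "image/jpeg") -> list[dict]:
    """Build a one-turn message list: a text prompt plus one inline image."""
    # Images can be passed inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                },
            ],
        }
    ]


def ask_about_image(prompt: str, image_path: str, model: str = "gpt-4.1") -> str:
    """Send the prompt and image to the API and return the text reply."""
    # Imported lazily so build_image_messages works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    messages = build_image_messages(prompt, Path(image_path).read_bytes())
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

For example, `ask_about_image("How many coins do I have?", "coins.jpg")` would send the object counting prompt, where `coins.jpg` is a placeholder path. Swapping `model` to `"gpt-4.1-nano"` should let you compare the smaller model on the same prompt.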

GPT-4.1 Object Counting

We started by asking GPT-4.1 to count the number of coins in an image. We asked the model “How many coins do I have?” with the following image:

The model returned:

There are four coins in the image.

The model successfully counted the number of coins in the image.

GPT-4.1 Visual Question Answering

We next tested GPT-4.1’s visual question answering abilities. We asked the question “Which movie is this scene from?” with the following image as context:

GPT-4.1 returned:

This scene is from the movie "Home Alone." It is a classic Christmas film featuring the character Kevin McCallister, played by Macaulay Culkin.

The model correctly answered the question.

GPT-4.1 Document OCR

We then tested GPT-4.1’s document OCR abilities. We uploaded a screenshot with several lines of text and asked the model to “Read text from image.” We provided the following image:

GPT-4.1 returned:

I was thinking earlier today that I have gone through, to use the lingo, eras of listening to each of Swift's Eras. Meta indeed. I started listening to Ms. Swift's music after hearing the Midnights album. A few weeks after hearing the album for the first time, I found myself playing various songs on repeat. I listened to the album in order multiple times.

The words are all correct. With that said, it missed the italics on the word “Midnights”. We have seen this behaviour in the past where a model successfully reads text but misses the italics.

Interestingly, GPT-4.1 nano, a smaller model than GPT-4.1, got the answer exactly correct, including the italics.

GPT-4.1 Document Question Answering

We then uploaded a photo of a menu and asked “How much does Pastrami Pizza cost?”:

GPT-4.1 returned:

The Pastrami Pizza costs $27.

GPT-4.1 successfully read the price on the menu.

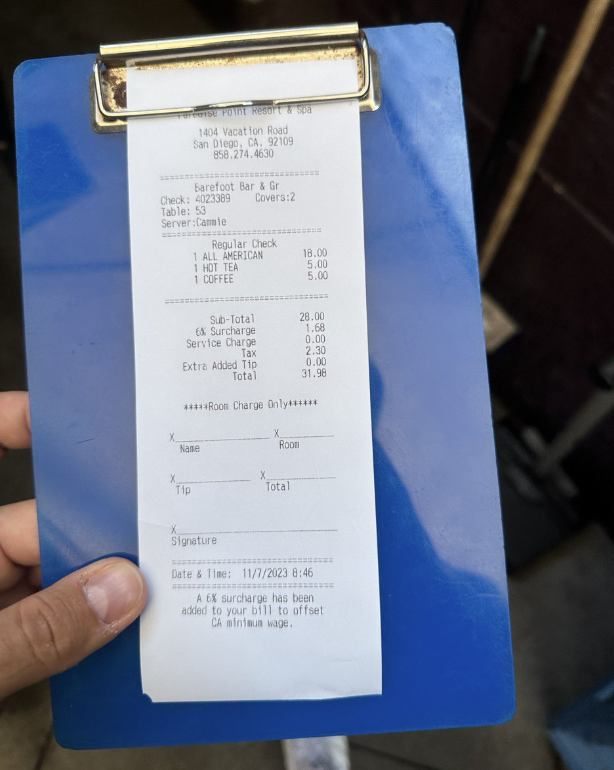

We then asked the model to answer a question about the contents of a receipt. Given the following image of a receipt, we asked “How much tax did I pay?”:

GPT-4.1 responded with:

You paid $2.30 in tax, as indicated on the receipt.

This is the correct answer.

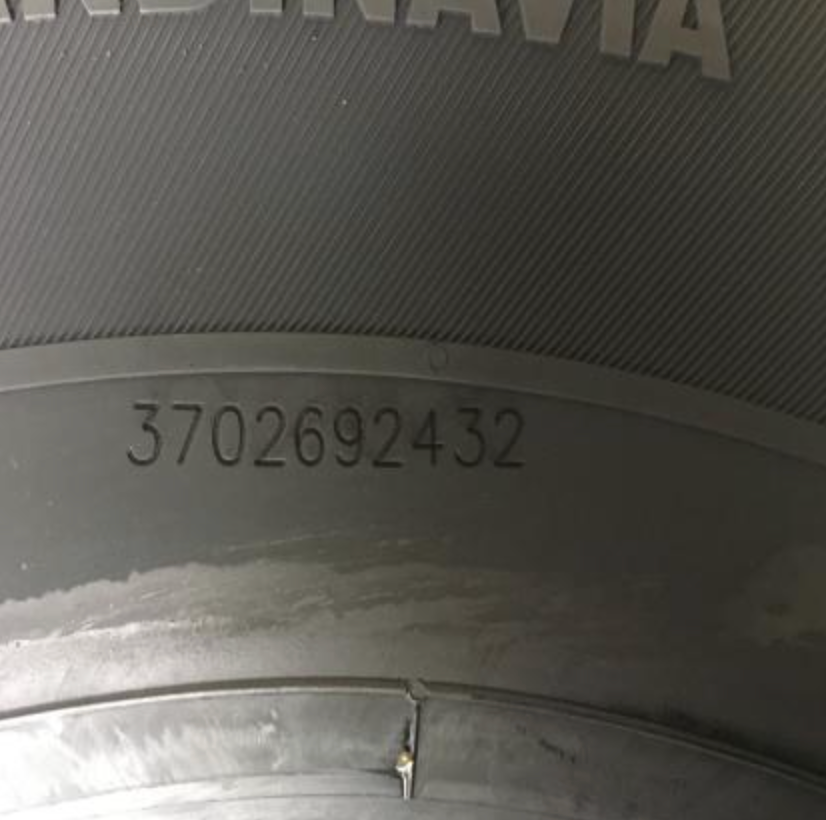

GPT-4.1 Real-World OCR

We then asked GPT-4.1 to do a real-world OCR task. We provided a photo of a tire with a serial number and asked the model to read the serial number. Here is the image we provided:

We provided the prompt “Read the serial number.” GPT-4.1 returned:

The serial number is 3702692432.

This is correct.

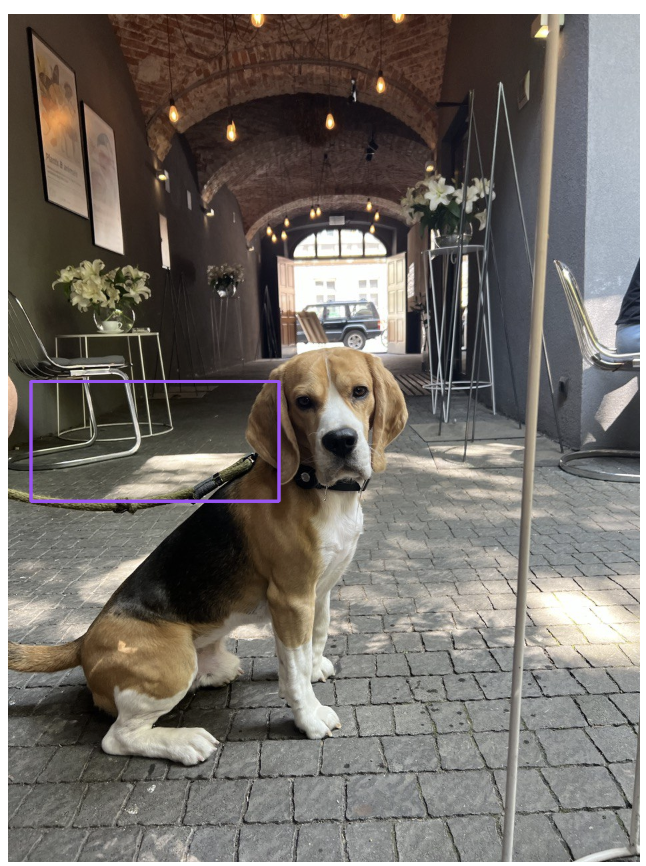

GPT-4.1 Object Detection

Finally, we challenged GPT-4.1 to detect the location of an object in an image. This is a task with which many multimodal models struggle. We provided the following image and asked GPT-4.1 to “Return the xyxy coordinates of the dog in the image.”

The model returned:

The approximate xyxy coordinates for the dog in the image are:

x_min: 38 (leftmost part of the dog, near the left edge of the image)

y_min: 410 (top of the dog's head)

x_max: 551 (rightmost part of the dog's hindquarters)

y_max: 730 (bottom of the front paws)

Therefore, the coordinates are:

[38, 410, 551, 730]

These values are estimated based on the visible position of the dog in the image.

We plotted these coordinates on the input image to visualise them using the supervision Python package. The results are:

The model did not identify the position of the dog correctly.
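We judged this result by eye, but the check can also be made numerically. The sketch below pulls the bracketed coordinates out of a reply like the one above, computes intersection over union (IoU) against a hand-labelled box, and optionally draws the box. The function names are hypothetical, and `draw_box` assumes a recent release of the supervision package in which `sv.BoxAnnotator` draws bounding boxes.

```python
import re

_NUM = r"(-?\d+(?:\.\d+)?)"
_BOX = re.compile(r"\[\s*" + r"\s*,\s*".join([_NUM] * 4) + r"\s*\]")


def parse_xyxy(reply: str) -> tuple[float, ...]:
    """Return the first [x_min, y_min, x_max, y_max] list found in the reply."""
    match = _BOX.search(reply)
    if match is None:
        raise ValueError("no [x_min, y_min, x_max, y_max] list found")
    return tuple(float(value) for value in match.groups())


def iou(a, b) -> float:
    """Intersection over union of two xyxy boxes (0.0 when they do not overlap)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def draw_box(image, box):
    """Draw one xyxy box on a NumPy image array using supervision."""
    # Imported lazily so the helpers above work without these packages.
    import numpy as np
    import supervision as sv

    detections = sv.Detections(
        xyxy=np.array([box], dtype=float),
        class_id=np.array([0]),  # placeholder class so the annotator can pick a colour
    )
    return sv.BoxAnnotator().annotate(scene=image.copy(), detections=detections)


predicted = parse_xyxy("Therefore, the coordinates are:\n[38, 410, 551, 730]")
```

To score the prediction, pass `predicted` and your own labelled box for the dog to `iou`. A common convention, and an assumption here, is to count a detection as correct when IoU is at least 0.5.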

Conclusion

GPT-4.1 is the latest multimodal model developed by OpenAI. The model, which comes in three sizes, can be used for tasks like VQA, OCR, and receipt reading.

The base GPT-4.1 model passed five of our seven tests. The model struggled with object detection, which was not a surprise given that many multimodal models also struggle with this task.

Curious to learn more about state-of-the-art multimodal models? Check out our multimodal model guides.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Apr 15, 2025). OpenAI GPT-4.1: Multimodal and Vision Analysis. Roboflow Blog: https://blog.roboflow.com/gpt-4-1-multimodal/