Kaggle is a data science and machine learning platform, primarily known for hosting a wide range of datasets and competitions. It is one of the most popular platforms among data scientists and machine learning enthusiasts, thanks to its free compute resources and publicly available datasets.

This comprehensive guide will cover most of what you need to know to use Kaggle for computer vision tasks.

What is Kaggle?

Kaggle is an online community that offers tools and resources for data science and machine learning practitioners. Its platform has about 50,000 publicly available datasets, ranging in topic, size, format, and modality.

Kaggle is best known as a platform for sharing and hosting a wide variety of datasets, which anyone can upload and download. It also offers complementary features, including:

- Notebooks, similar to Google Colab, which let users run code and train models.

- Competitions, where hosts prepare the data and competitors run their code in Kaggle Notebooks to compete.

- A curated model library, where users can discover and use models from Google-affiliated organizations like TensorFlow, DeepMind, MediaPipe, and more.

How to Use Roboflow Notebooks on Kaggle

Roboflow maintains 28+ notebooks for training state-of-the-art computer vision models like Ultralytics YOLOv8, SAM, CLIP, and RTMDet. We make these notebooks available on various notebook platforms, including Google Colab, Amazon SageMaker Studio Lab, and Kaggle.

To open one of our training notebooks in Kaggle, go to our GitHub repository and click the “Open In Kaggle” link for the notebook you want.

How to Use Kaggle Notebooks for Computer Vision

Kaggle Notebooks let you run, experiment with, and iterate on code in a contained environment. A key benefit of both Google Colab and Kaggle Notebooks is free access to GPUs, which are crucial for machine learning research and development.

Kaggle Notebooks work much like Jupyter Notebooks: a notebook is made up of cells that can be run independently or in sequence. Using a Kaggle Notebook, you can try out state-of-the-art models (YOLOv5, MobileNetV2, Llama 2, and more) and build proof-of-concept use cases directly in the browser.

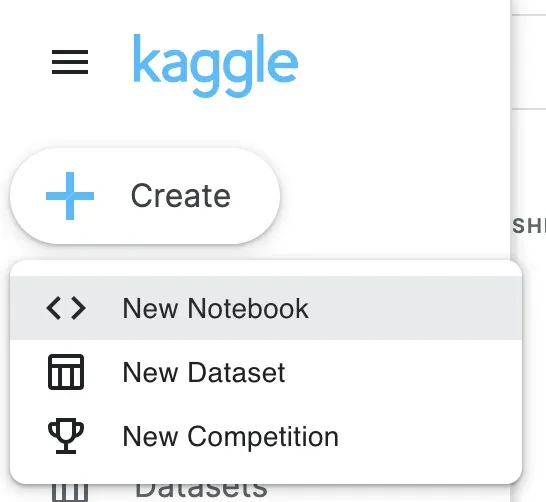

To create a notebook, click “New Notebook” under Create in the side menu.

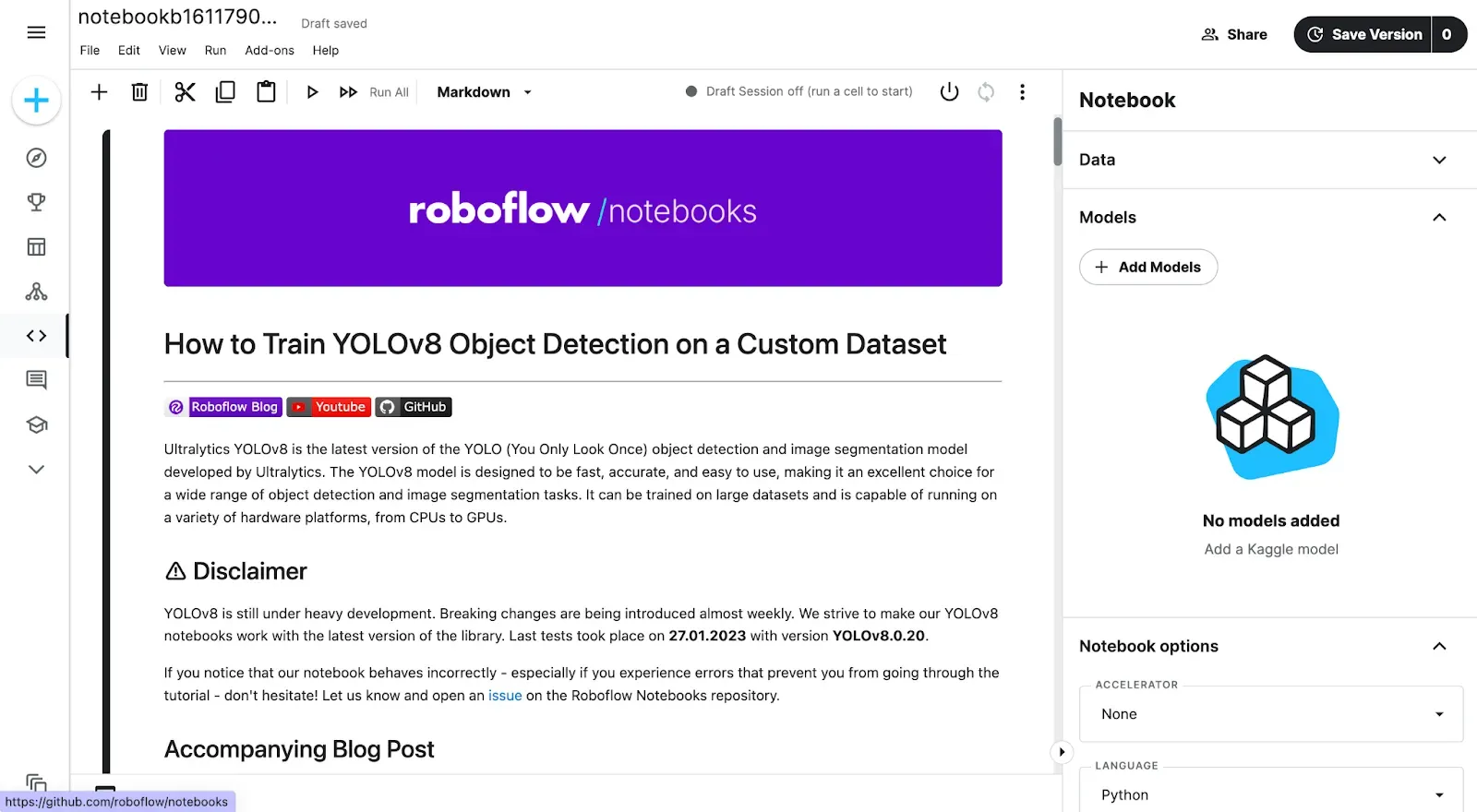

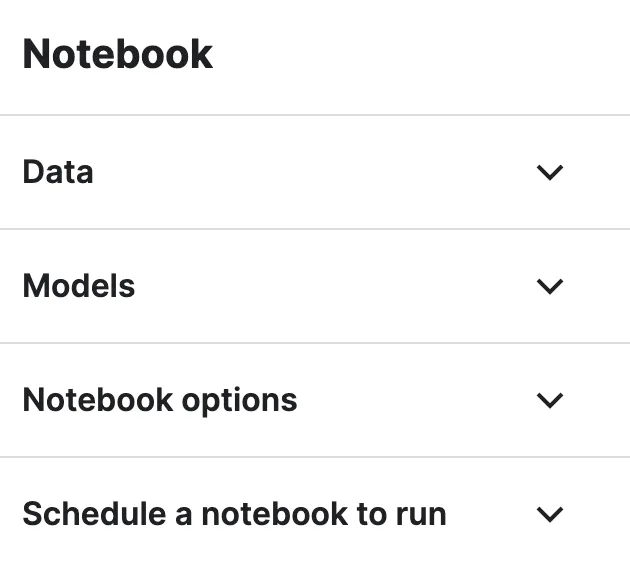

You will then see your notebook in the familiar Jupyter Notebook format, where code is executed in cells. Although the basics are similar, there are a few differences, starting with the Notebook menu on the right side.

Kaggle lets you add any of over 50,000 Kaggle datasets, as well as over 200 models from the model library, directly to your notebook environment. They are downloaded automatically into the `/kaggle/input` folder.
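To see exactly what has been added, you can walk the input folder. The sketch below follows the same `os.walk` pattern that new Kaggle notebooks typically start with, wrapped in a small helper (`list_input_files` is our own illustrative name) that returns the paths instead of only printing them.

```python
import os


def list_input_files(root="/kaggle/input"):
    """Return the sorted paths of every file under `root`, including subfolders."""
    paths = []
    for dirname, _, filenames in os.walk(root):
        for filename in filenames:
            paths.append(os.path.join(dirname, filename))
    return sorted(paths)


if __name__ == "__main__":
    # On Kaggle, this prints every file from the datasets and models you've added.
    # Outside Kaggle the folder doesn't exist, so nothing is printed.
    for path in list_input_files():
        print(path)
```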

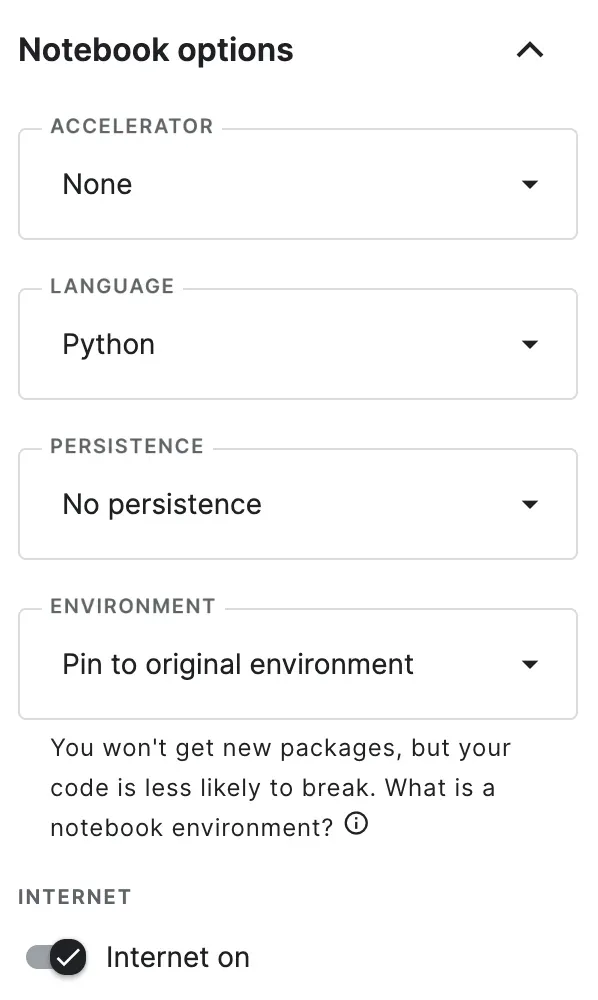

The menu also includes several settings: adding a GPU or other accelerator, choosing between Python and R, enabling persistence so that data isn't lost between sessions, and pinning the environment to keep results reproducible. Several of these features aren't available in Colab or Jupyter.

There is also a toggle to enable or disable internet access. It is rarely used outside of competitions, where internet access may be limited or disabled.
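After changing the accelerator setting, it helps to confirm that the notebook actually sees a GPU before starting a long training run. This is a minimal sketch assuming an NVIDIA GPU: it looks for the `nvidia-smi` tool on the `PATH` and, if present, returns its `-L` GPU listing. The function name `gpu_summary` is our own, not a Kaggle API.

```python
import shutil
import subprocess
from typing import Optional


def gpu_summary() -> Optional[str]:
    """Return nvidia-smi's GPU listing, or None if no NVIDIA tooling is visible."""
    if shutil.which("nvidia-smi") is None:
        # No NVIDIA tooling on PATH: most likely the accelerator is set to "None"
        return None
    result = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True, check=False
    )
    # Treat empty output (e.g. a driver error) the same as "no GPU found"
    return result.stdout.strip() or None


if __name__ == "__main__":
    summary = gpu_summary()
    print(summary if summary else "No GPU detected; check the Accelerator setting.")
```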
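Installing packages with `pip` or calling a hosted API (as the Roboflow example later in this guide does) only works when internet access is enabled. As a minimal sketch, you can test connectivity by opening a TCP connection with a short timeout. The default target (Google's public DNS at `8.8.8.8`, port 53) is an arbitrary choice, and `has_internet` is a hypothetical helper name.

```python
import socket


def has_internet(host: str = "8.8.8.8", port: int = 53, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS/network-unreachable errors
        return False
```

Calling `has_internet()` at the top of a notebook gives a quick, explicit failure instead of a slow `pip install` timeout.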

How to Use Datasets From Kaggle

Because Kaggle supports datasets of any modality, type, and format, there is no standard way to work with data on Kaggle; how you load the data depends on the individual dataset. That said, many datasets use a `.csv` file as an index that lists the data files and their respective annotations.
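As a sketch of this pattern, the function below reads an index CSV with Python's built-in `csv` module and pairs each image path with its label. The column names `image` and `label` are hypothetical defaults; real datasets use their own headers, so check the CSV before relying on them.

```python
from __future__ import annotations

import csv
from pathlib import Path


def read_index(csv_path: str, image_col: str = "image", label_col: str = "label") -> list[tuple[Path, str]]:
    """Read a CSV index and return (image path, label) pairs.

    Image paths in the CSV are resolved relative to the CSV's own folder.
    The default column names are hypothetical; pass the dataset's real headers.
    """
    base = Path(csv_path).parent
    with open(csv_path, newline="") as f:
        return [(base / row[image_col], row[label_col]) for row in csv.DictReader(f)]
```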

There are several options for using datasets that are found on Kaggle.

Option 1: Import the Dataset Into a Kaggle Notebook

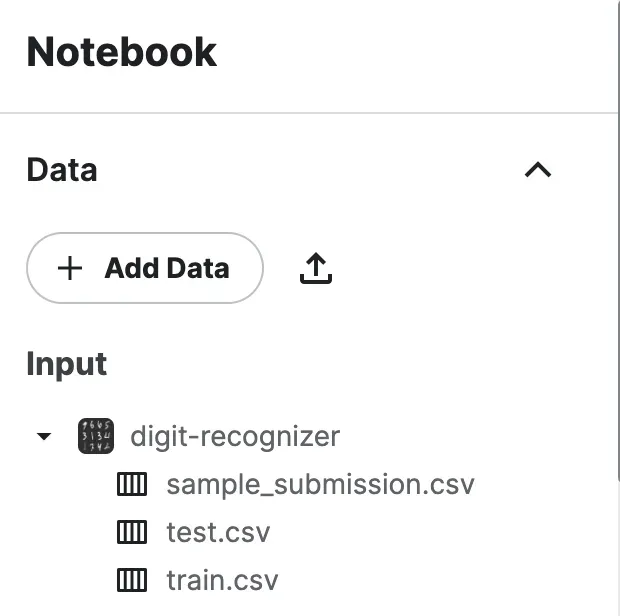

By design, the easiest way to work with Kaggle datasets is through Kaggle Notebooks. In the notebook's sidebar, you should see an “Add Data” dropdown, where you can pick from your recently viewed datasets or search for one. Once you find a dataset, click the Add button.

After the dataset is added, it appears in a folder under `/kaggle/input`. For example, the Digit Recognizer dataset was added to `/kaggle/input/digit-recognizer`.
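Once added, the files can be read straight from that path. The Digit Recognizer `train.csv` stores one image per row: a `label` column followed by 784 pixel columns, which together form a 28×28 grayscale image. The sketch below assumes that layout and rebuilds each row into a 28×28 grid using only the standard library; `load_digits` is a hypothetical helper name.

```python
import csv

IMAGE_SIZE = 28  # Digit Recognizer images are 28x28 grayscale


def load_digits(csv_path, limit=None):
    """Yield (label, 28x28 pixel grid) pairs from a Digit Recognizer-style train.csv.

    Assumes each row is a `label` column followed by 784 pixel columns.
    """
    with open(csv_path, newline="") as f:
        reader = csv.reader(f)
        next(reader)  # skip the header row (label, pixel0, ..., pixel783)
        for i, row in enumerate(reader):
            if limit is not None and i >= limit:
                break
            label = int(row[0])
            pixels = [int(v) for v in row[1:]]
            # Split the flat pixel list into IMAGE_SIZE rows of IMAGE_SIZE values
            grid = [pixels[r * IMAGE_SIZE:(r + 1) * IMAGE_SIZE] for r in range(IMAGE_SIZE)]
            yield label, grid
```

On Kaggle, `load_digits("/kaggle/input/digit-recognizer/train.csv", limit=3)` would yield the first three labeled digits.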

Option 2: Download the Dataset

The second option is to download the dataset for use elsewhere. It usually downloads as a ZIP file named `archive.zip` containing the dataset's contents.
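After downloading, you can extract the archive with your operating system's tools or, as in this minimal sketch, with Python's standard `zipfile` module. `extract_archive` and the `dataset` output folder are illustrative names, not part of any Kaggle tooling.

```python
from __future__ import annotations

import zipfile
from pathlib import Path


def extract_archive(zip_path: str = "archive.zip", dest: str = "dataset") -> list[str]:
    """Extract a downloaded Kaggle ZIP into `dest` and return the archive's member names."""
    Path(dest).mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)
        return zf.namelist()
```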

How to Upload Datasets to Kaggle

Kaggle allows any user to upload datasets to the platform so that they can be stored and shared.

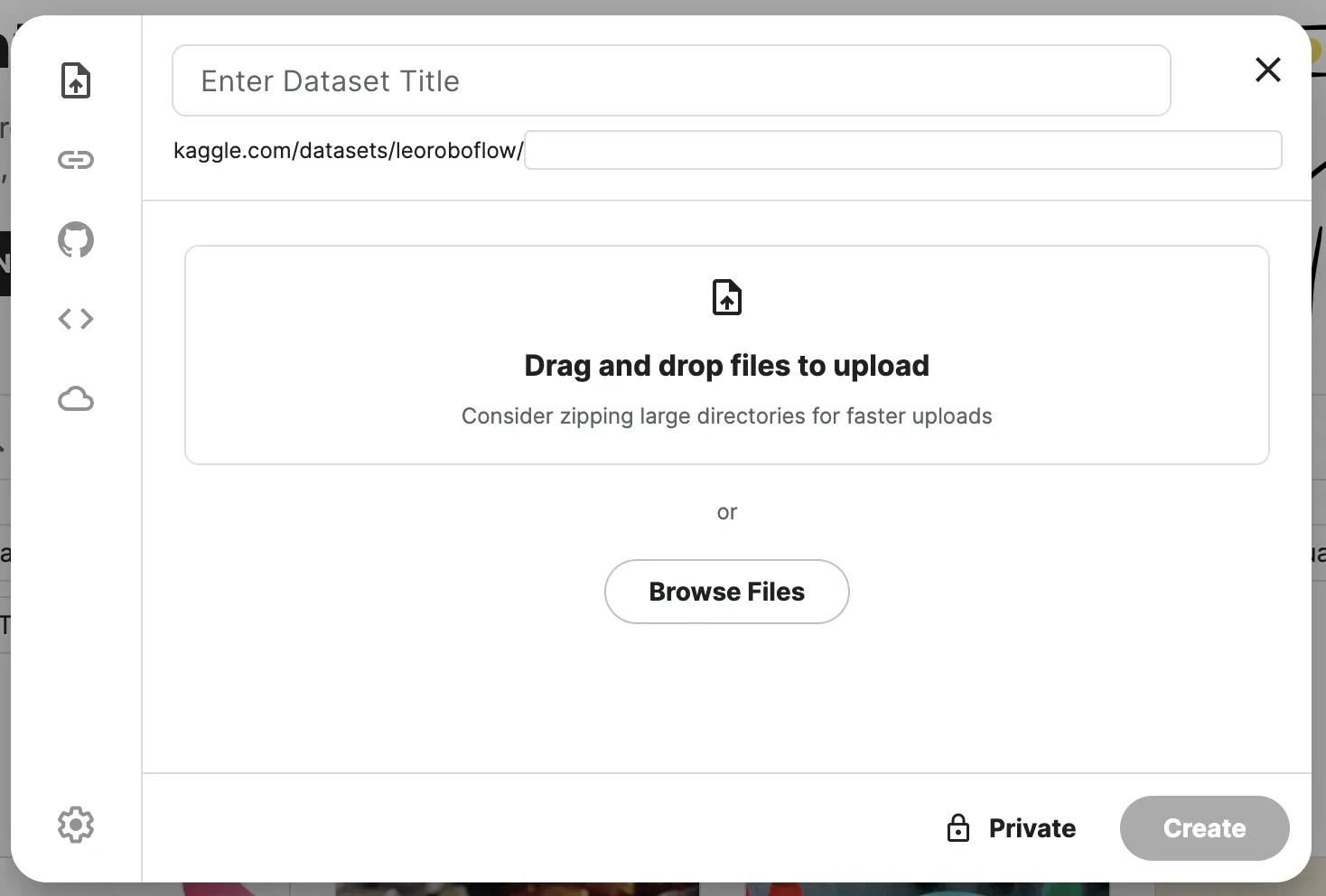

To upload a dataset to Kaggle, open the Create menu and click Create Dataset.

You can’t upload entire folders directly, but you can compress a folder into a ZIP file and upload that instead. Kaggle automatically unzips the files when they are added to the platform.
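One way to prepare a folder for upload is to zip it with Python's standard library. This sketch uses `shutil.make_archive` with `root_dir` set to the folder, so the archive contains the folder's contents rather than the folder itself; `zip_folder` is a hypothetical helper name.

```python
import shutil


def zip_folder(folder: str, archive_base: str) -> str:
    """Compress the contents of `folder` into `<archive_base>.zip` and return its path.

    Keep `archive_base` outside `folder`, otherwise the archive may include itself.
    """
    return shutil.make_archive(archive_base, "zip", root_dir=folder)
```

For example, `zip_folder("my-dataset", "my-dataset")` would produce `my-dataset.zip` next to the folder, ready to upload.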

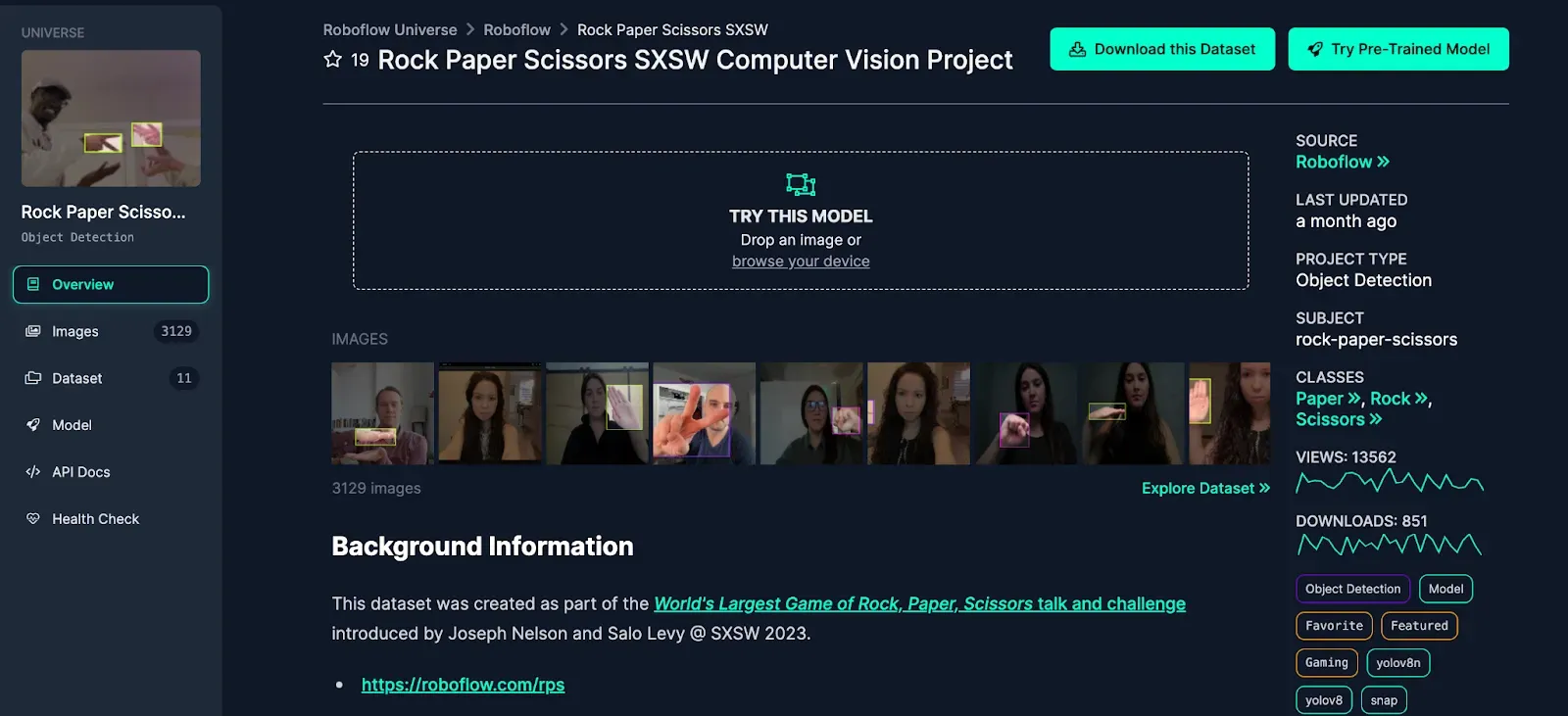

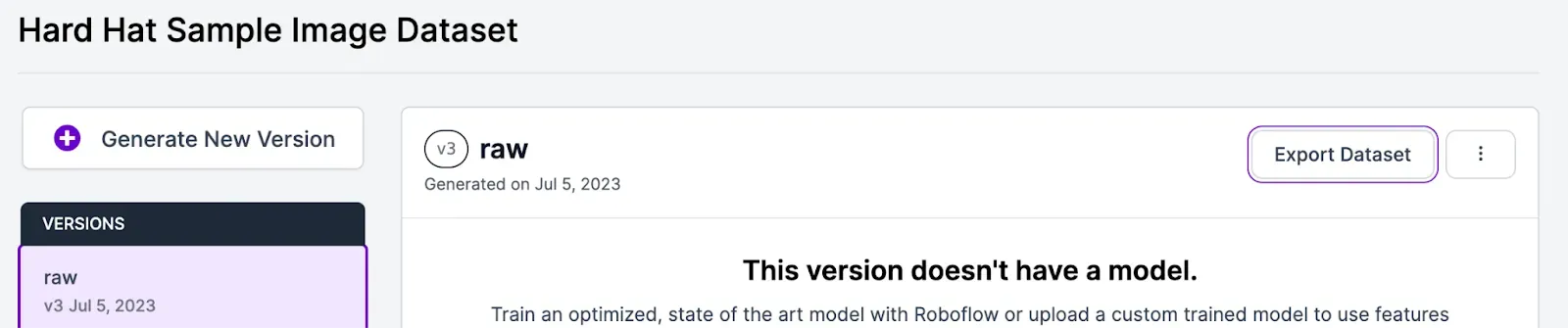

You can also upload a dataset from Roboflow Universe or from your own Roboflow projects. On Universe, you can browse our curated favorites and recently featured datasets, or search over 200,000 datasets.

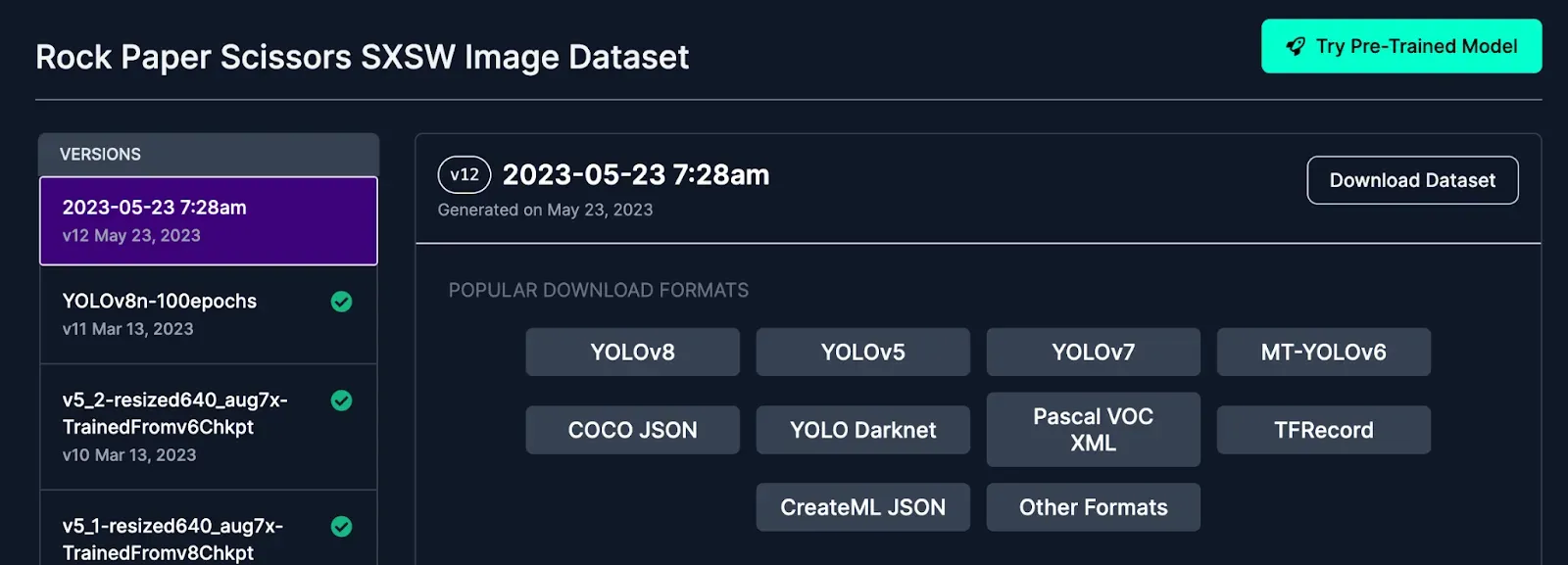

Once you’ve found a dataset you’d like to upload, select a version from the project and download the dataset in your preferred format. A version is a point-in-time snapshot of your dataset that keeps track of the images, annotations, preprocessing, and augmentations of the dataset at a certain time, allowing you to consistently reference and reproduce the exact dataset.

Then, upload the zip file to Kaggle.

How to Use a Model From Kaggle

Kaggle has a library of curated models from many Google-affiliated organizations and research projects. Like datasets, models vary in architecture, type, and modality, so Kaggle has no standardized way of running inference on them.

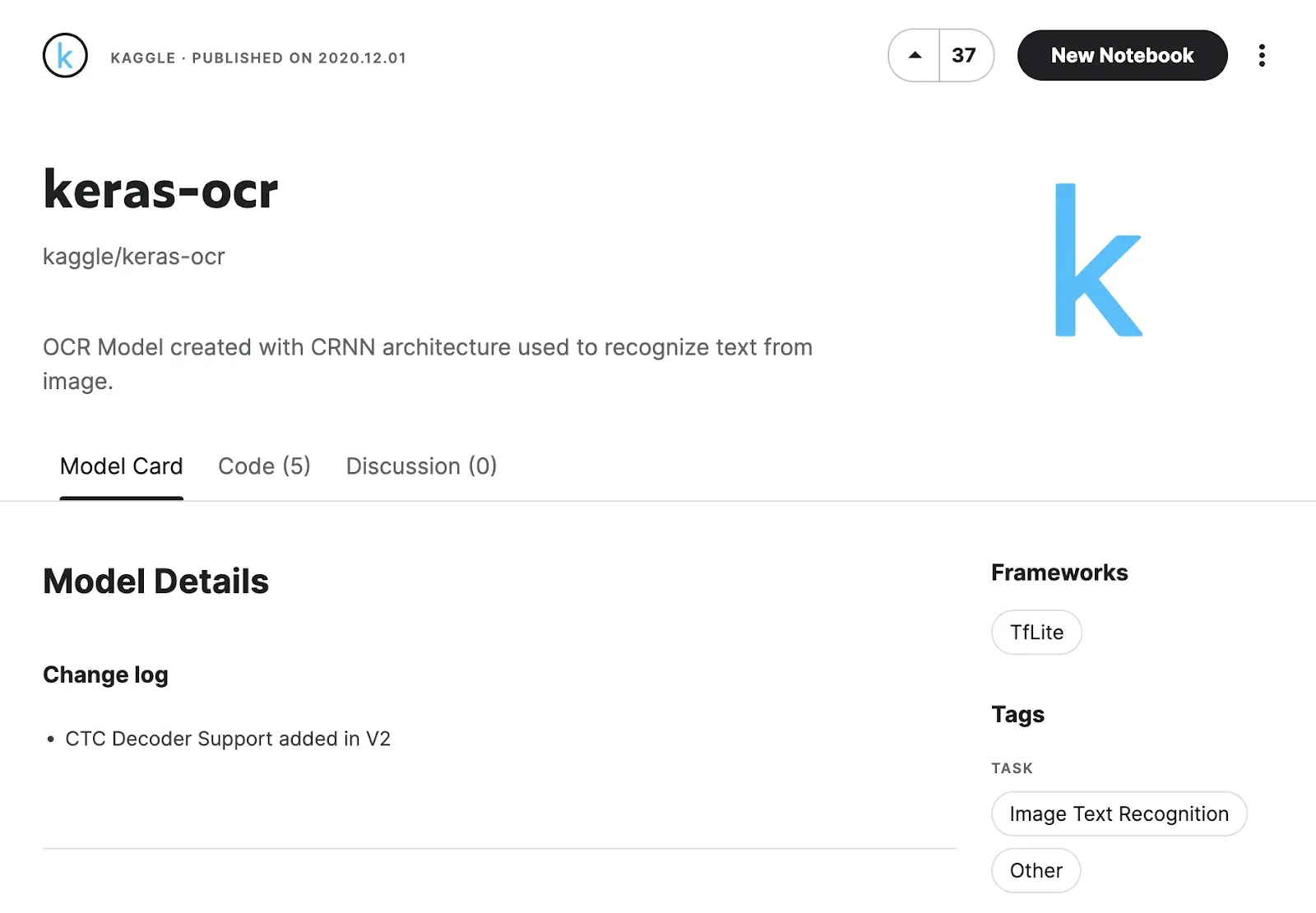

To use a model, click New Notebook once you’ve navigated to your desired model page.

This opens a Kaggle notebook where you can configure the model. The model is imported into the `/kaggle/input` folder, the same location as datasets. For the keras-ocr model in this example, the file is placed at `/kaggle/input/keras-ocr/tflite/dr/2/2.tflite`.
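Because model files land in nested version folders, a small search can save you from copying long paths by hand. This minimal sketch uses `pathlib` to find every `.tflite` file under `/kaggle/input`; `find_model_files` is a hypothetical helper, and you can change `suffix` for other model formats.

```python
from __future__ import annotations

from pathlib import Path


def find_model_files(root: str = "/kaggle/input", suffix: str = ".tflite") -> list[Path]:
    """Return every file under `root` whose name ends with `suffix`, sorted by path."""
    return sorted(p for p in Path(root).rglob(f"*{suffix}") if p.is_file())
```

A returned path can then be loaded with TensorFlow Lite, for example `tf.lite.Interpreter(model_path=str(path))` followed by `allocate_tensors()` before running inference.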

How to Upload a Trained Model to Roboflow

If you fine-tune or train a new model on Kaggle, you can upload the model weights to Roboflow, then use the model to label new data with Label Assist or deploy it.

You can upload model weights using the `.deploy()` method in our Python SDK:

```python
# `project` is a Roboflow project object, e.g. rf.workspace().project("...")
project.version(1).deploy("yolov5", "path/to/training/results/")
```
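Before calling `.deploy()`, it can help to confirm that the results folder actually contains trained weights, so a wrong path fails immediately. This sketch assumes the Ultralytics YOLO run layout, where weights are saved to `weights/best.pt` inside the run folder; `check_training_results` is a hypothetical helper, not part of the Roboflow SDK.

```python
from pathlib import Path


def check_training_results(results_dir: str) -> Path:
    """Return the path to weights/best.pt inside a training run folder.

    Assumes the Ultralytics YOLO layout (<run>/weights/best.pt) and raises
    FileNotFoundError early instead of failing partway through an upload.
    """
    weights = Path(results_dir) / "weights" / "best.pt"
    if not weights.is_file():
        raise FileNotFoundError(f"Expected trained weights at {weights}")
    return weights
```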

Example: Inferring on a Kaggle Dataset with a Roboflow Model

Now that you know the ins and outs of Kaggle, let’s test a Roboflow model on a Kaggle dataset, which is just one of Kaggle's many use cases.

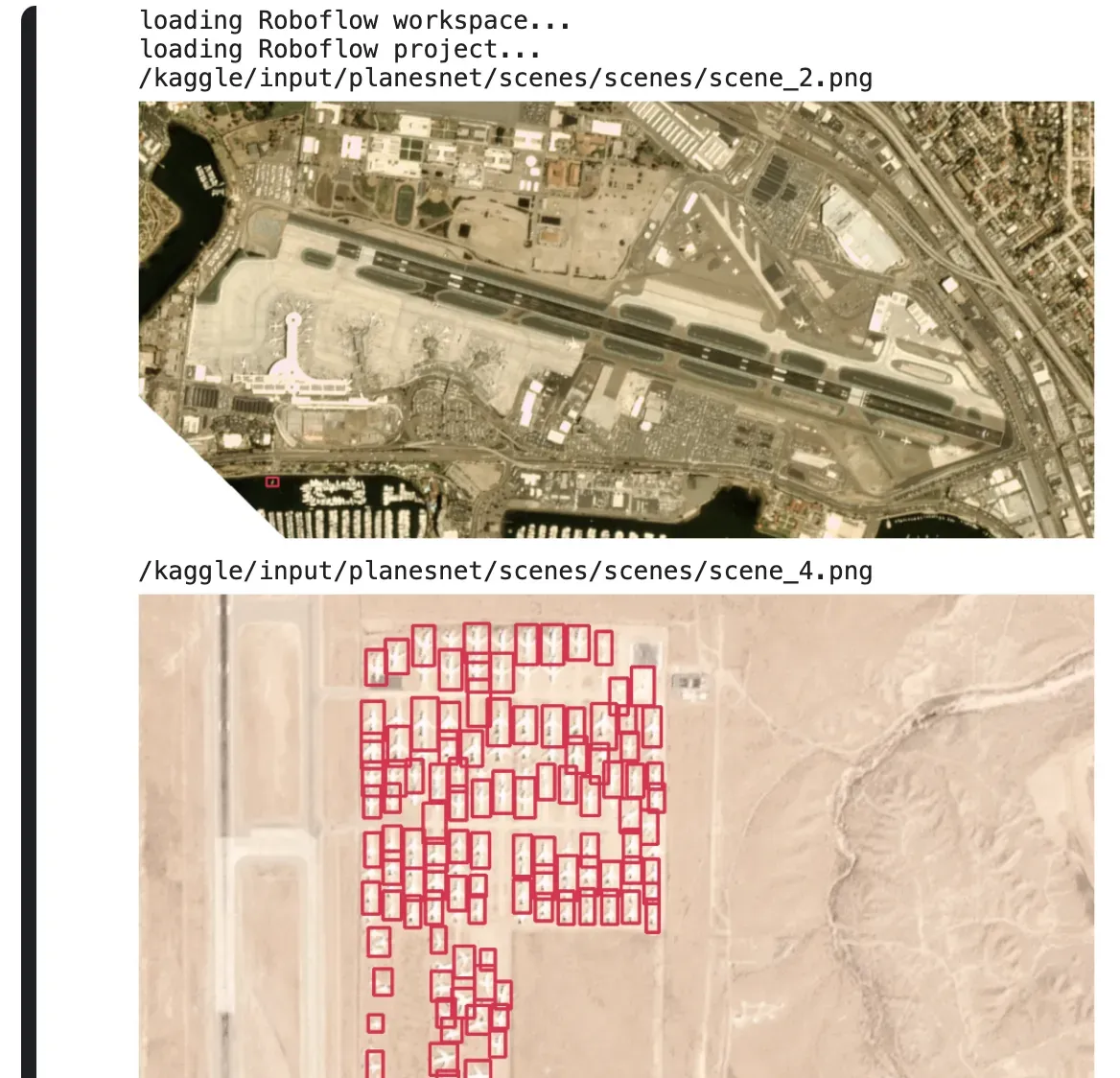

To get started, we can create a new notebook. Once we’re in our notebook, we can import a dataset. For this example, let’s try testing the performance of a plane detection model on Roboflow Universe against the Planes in Satellite Imagery dataset.

Once we have our notebook, we can modify the code that Kaggle gives us by default to run inference on our dataset. We use Supervision to visualize and organize the results.

```python
import os

import cv2
import supervision as sv
from roboflow import Roboflow

images_folder = "/kaggle/input/planesnet/scenes"
limit = 3  # maximum number of images to run inference on per folder

# Paste your Roboflow API key here
rf = Roboflow(api_key="")
project = rf.workspace().project("rifles-00uv0")
model = project.version(1).model

# Create the annotator once and reuse it for every image
box_annotator = sv.BoxAnnotator()

for dirname, _, filenames in os.walk(images_folder):
    for filename in filenames[:limit]:
        path = os.path.join(dirname, filename)
        print(path)

        # Run hosted inference; the confidence threshold is a percentage (0-100)
        result = model.predict(path, confidence=10).json()
        detections = sv.Detections.from_inference(result)

        # Draw the predicted boxes on the original image and display it
        labeled_img = box_annotator.annotate(
            scene=cv2.imread(path),
            detections=detections,
        )
        sv.plot_image(image=labeled_img)
```

To use the snippet above, you will need a free Roboflow account. Set the `api_key` parameter to your Roboflow API key. Learn how to retrieve your Roboflow API key.

In this use case, we evaluate how a Roboflow model performs on new data it hasn’t seen before.

The console from the Kaggle notebook

We can visualize the predictions to see how the model is performing. Optionally, we can upload images to our project when we find examples where the model doesn't perform as expected.

This notebook covers only a narrow slice of what Kaggle can do. Feel free to experiment!
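To decide which images are worth uploading back to the project, one simple heuristic is to flag images where no prediction clears a confidence threshold. This sketch assumes the JSON returned by `model.predict(...).json()` has a `predictions` list whose entries carry a `confidence` value between 0 and 1; `needs_review` and the 0.5 default threshold are our own illustrative choices.

```python
def needs_review(result: dict, min_confidence: float = 0.5) -> bool:
    """Return True if no prediction in a Roboflow-style JSON result reaches `min_confidence`.

    Assumes `result["predictions"]` is a list of dicts with a 0-1 "confidence" value;
    a missing or empty list counts as "needs review".
    """
    predictions = result.get("predictions", [])
    return not any(p.get("confidence", 0.0) >= min_confidence for p in predictions)
```

Inside the inference loop, you could collect the paths where `needs_review(result)` is `True` and then upload them, for example with the Roboflow SDK's `project.upload()`.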

Kaggle Alternatives

Kaggle makes it quick and easy to share datasets with a large audience, but there are alternatives for specific tasks. Although a flexible, general-purpose development environment is useful, it can also be hard to integrate into other workflows and to iterate on.

Colab, another notebook product from Google, and Amazon SageMaker Studio Lab similarly offer free resources and a virtual environment for experimentation, while platforms like Hugging Face and Roboflow Universe offer alternatives to Kaggle for dataset and model hosting.

Conclusion

In this guide, we covered how to use many of the main features of Kaggle. Kaggle makes it easy to explore and experiment with machine learning, building upon community collaboration and experience. We walked through how to use the notebooks feature (including how to use Roboflow Notebooks), how to use and upload datasets, and how to use a model from the Kaggle model repository.

Cite this Post

Use the following entry to cite this post in your research:

Leo Ueno. (Sep 6, 2023). How to Use Kaggle for Computer Vision. Roboflow Blog: https://blog.roboflow.com/how-to-use-kaggle-for-computer-vision/