Contrastive Language-Image Pre-training (CLIP) has revolutionized the field of computer vision, powering modern recognition systems and generative models. CLIP can be used for comparing the similarity between text and images, comparing the similarity between two images, and clustering images. These uses have a myriad of applications, from building moderation systems to creating intelligent image data gathering solutions.

However, CLIP's success is largely attributed to its data, rather than its model architecture or pre-training objective. In this blog, we will talk about Metadata-Curated Language-Image Pre-training (MetaCLIP), a new model that builds on the strengths of CLIP by improving the curation of its training data.

What is MetaCLIP?

MetaCLIP, developed by Meta AI. is a new approach to language-image pre-training that focuses on data curation. It takes a raw data pool and metadata derived from CLIP's concepts to produce a balanced subset of data based on the metadata distribution. MetaCLIP was introduced in the “Demystifying CLIP Data” paper.

By concentrating solely on the data aspect, MetaCLIP isolates the model and training settings, allowing for a more rigorous experimental study.

You can use MetaCLIP for the same tasks as CLIP, which include:

- Comparing the similarity of text and images (i.e. for media indexing, content moderation);

- Comparing the similarity of two images (i.e. to build image search engines, to check if images similar to a specified image appear in a dataset);

- Clustering (i.e. to better understand the contents of a dataset).

How Does MetaCLIP Perform?

When applied to CommonCrawl with 400 million image-text data pairs, MetaCLIP outperforms CLIP's data on multiple standard benchmarks. In zero-shot ImageNet classification, MetaCLIP achieves 70.8% accuracy, surpassing CLIP's 68.3% on ViT-B models.

Moreover, when scaled to one billion data pairs, while maintaining the same training budget, MetaCLIP attains 72.4% accuracy. These impressive results hold across various model sizes, exemplified by ViT-H achieving 80.5%, without any bells-and-whistles.

Let’s talk through all the steps we need to follow to use MetaCLIP.

Step #1: Install MetaCLIP

The Roboflow team developed Autodistill MetaCLIP, a wrapper around Meta AI’s MetaCLIP model. The Autodistill MetaCLIP wrapper allows you to use MetaCLIP. The wrapper also integrates with Autodistill, an ecosystem that lets you use foundation models like MetaCLIP to automatically label data for use in training a model.

To install the Autodistill MetaCLIP wrapper, run the following command:

pip install autodistill_metaclipNow that we have MetaCLIP installed on our system, we can start experimenting with the model.

Step #2: Test the Model

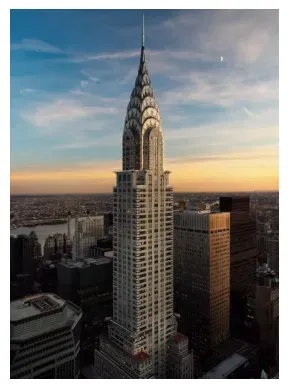

In this demo, we will be using MetaCLIP to identify one of three famous New York City Buildings: the Empire State Building, the Chrysler Building, and the One World Trade Center.

There are two ways to run inference using MetaCLIP and Autodistill.

You can retrieve predictions directly using an ontology (a list of classes you want to identify). Or you can calculate image and text embeddings and compare them manually. Both approaches are equivalent, but using an ontology abstracts away having to do manual comparisons. We will talk about both approaches.

Use MetaCLIP with an Ontology

An ontology is a pair of text that contains the prompt to be sent to a model (in this case, MetaCLIP) and a label that will be used if you want to label a full dataset.

Create a new Python file and add the following code:

from autodistill_metaclip import MetaCLIP

from autodistill.detection import CaptionOntology

classes = [“empire state building”, “chrysler building”, “one world trade center building”]

base_model = MetaCLIP(

#{caption:class}

ontology=CaptionOntology(

{

"The Empire State Building": "empire state building",

"The Chrysler Building": "chrysler building",

"The One World Trade Center Building":"one world trade center building"

}

)

)

results = base_model.predict(file_path, confidence=0.5)

print(results)

top_result = classes[results.class_id[0]]

print(top_result)In the code snippet above, we construct an ontology that associates class names with corresponding prompts.

The MetaCLIP base model will receive the prompts "The Empire State Building," "The Chrysler Building," and "The One World Trade Center Building." The code will subsequently identify any occurrence of "The Empire State Building" as "empire state building," "The Chrysler Building" as "chrysler building," and "The One World Trade Center Building" as "one world trade center building."

This code will then return a prediction showing the classification with confidences over 0.5 (50%). You can adjust the confidence level in the code snippet as necessary. We will take the top result, retrieve its class name, and print that result to the console, too.

For our example, we are going to use a picture of the Chrysler building:

Let’s run our code. Our code returns:

Classifications(class_id=array([1]), confidence=array([0.96553457]))

chrystler buildingThis output indicates that the image was classified with a class ID of 1, which corresponds to the second element in the class_id array (since array indices start at 0). This is the “chrystler building” class.

MetaCLIP model able to associate the text "The Chrysler Building" with the image with approximately 97% confidence.

Calculate and Compare Embeddings

Embeddings are numeric representations of images and text. Embeddings encode semantic information about an image or text. This information enables you to use a distance metric like cosine similarity to compare the similarity of two embeddings.

With the Autodistill MetaCLIP module, you can calculate both text and image embeddings. You can also compare them using cosine similarity with a compare() function.

Create a new Python file and add the code below:

from autodistill_metaclip import MetaCLIP

base_model = MetaCLIP(None)

chrysler_text = base_model.embed_text("the chrysler building")

image_embedding = base_model.embed_image(“image.png”)

print(base_model.compare(chrysler_text, image_embedding))In this code, we initialize MetaCLIP, create a text embedding for the term “the chrysler building”, create an image embedding for a file, then compare the embeddings.

You could save the image embedding in a vector database for use in building an image search engine.

The script returns:

0.3144019544124603This value reflects the degree of similarity between the embedded image and the corresponding text. Note that this value is not the same as confidence. Confidence tells you how confident a model is that a label matches an image; similarity tells you how similar two embeddings are. The higher the similarity, the more similar two embeddings are.

Next, we will run multiple .compare() operations to determine whether the image of the Chrysler Building has a greater similarity to the text "the chrysler building" compared to the texts "the empire state building" and "the one world trade center building."

base_model = MetaCLIP(None)

empire_state_text = base_model.embed_text("the empire state building")

chrysler_text= base_model.embed_text("the chrysler building")

one_world_trade_text= base_model.embed_text("the one world trade center building")

image = base_model.embed_image(file_path)

print("Empire State Building Similarity :", base_model.compare(empire_state_text, image))

print("Chrysler Building Similarity :", base_model.compare(chrysler_text, image))

print("One World Trade Center Similarity :", base_model.compare(one_world_trade_text, image))The code above returns:

Empire State Building Similarity : 0.2762314975261688

Chrysler Building Similarity : 0.3144019544124603

One World Trade Center Similarity : 0.2696280777454376With the similarity score for the Chrysler Building being the highest, this indicates that MetaCLIP successfully gauged that the image of the Chrysler Building was more similar to "the chrysler building" compared to "the empire state building" and "the one world trade center."

Conclusion

MetaCLIP represents an exciting advancement in the domain of language-image pre-training, underscoring the importance of data curation in attaining enhanced performance. The study outlined in "Demystifying CLIP Data" demonstrates that MetaCLIP, when applied to a dataset of 400 million image-text pairs, surpasses CLIP in terms of performance across multiple standard benchmarks.

By prioritizing the data component and refining the curation procedure, MetaCLIP capitalizes on the strengths of CLIP and extends the possibilities within the realm of computer vision.

You can use MetaCLIP today using the Autodistill MetaCLIP module. This module provides an intuitive way to use MetaCLIP. The module also integrates with the Autodsitill ecosystem, allowing you to use MetaCLIP to automatically label images for use in training models.

Cite this Post

Use the following entry to cite this post in your research:

Nathan Marraccini. (Nov 3, 2023). How to Use MetaCLIP. Roboflow Blog: https://blog.roboflow.com/how-to-use-metaclip/