Announced in the paper “Perception Encoder: The best visual embeddings are not at the output of the network”, Perception Encoder is a state-of-the-art model for generating image and text embeddings. It was developed by Meta AI and is released under the Apache 2.0 license.

With Perception Encoder, you can generate embeddings that can be used for a wide range of tasks, including:

- Finding images similar to a text prompt (e.g. “box”).

- Finding images that contain specific objects (e.g. “a brown box with a shipping label”).

- Classifying videos into one or more categories (e.g. “box” vs “crate”).

Meta’s Perception Encoder model is available in both Roboflow Inference, an open source computer vision inference server, and Roboflow Workflows, a web-based computer vision application builder. You can create image and text embeddings, then compare them for tasks such as image classification and understanding.
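To make the comparison step concrete, here is a minimal sketch of zero-shot classification with embeddings. It uses NumPy only. The three-dimensional vectors are made-up stand-ins for real Perception Encoder embeddings, and `zero_shot_classify` is a hypothetical helper, not part of Inference.

```python
import numpy as np


def zero_shot_classify(image_embedding, text_embeddings, labels):
    """Return the label whose text embedding is most similar to the image."""
    image = np.asarray(image_embedding, dtype=float)
    texts = np.asarray(text_embeddings, dtype=float)
    # Normalize to unit length so a dot product equals cosine similarity.
    image = image / np.linalg.norm(image)
    texts = texts / np.linalg.norm(texts, axis=1, keepdims=True)
    scores = texts @ image  # one cosine similarity per label
    return labels[int(np.argmax(scores))], scores


# Toy vectors (assumption): real embeddings come from the model and have
# many more dimensions.
labels = ["box", "crate"]
text_embeddings = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0]]
image_embedding = [0.8, 0.3, 0.1]

best_label, scores = zero_shot_classify(image_embedding, text_embeddings, labels)
print(best_label, scores)
```

The same pattern extends to more categories: add one text embedding per label and pick the label with the highest score.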

In this post, we will show how to use the Perception Encoder model in Roboflow Inference. We will build an application that calculates Perception Encoder text and image embeddings, then uses them to measure the similarity between each frame in a video stream and a text prompt.

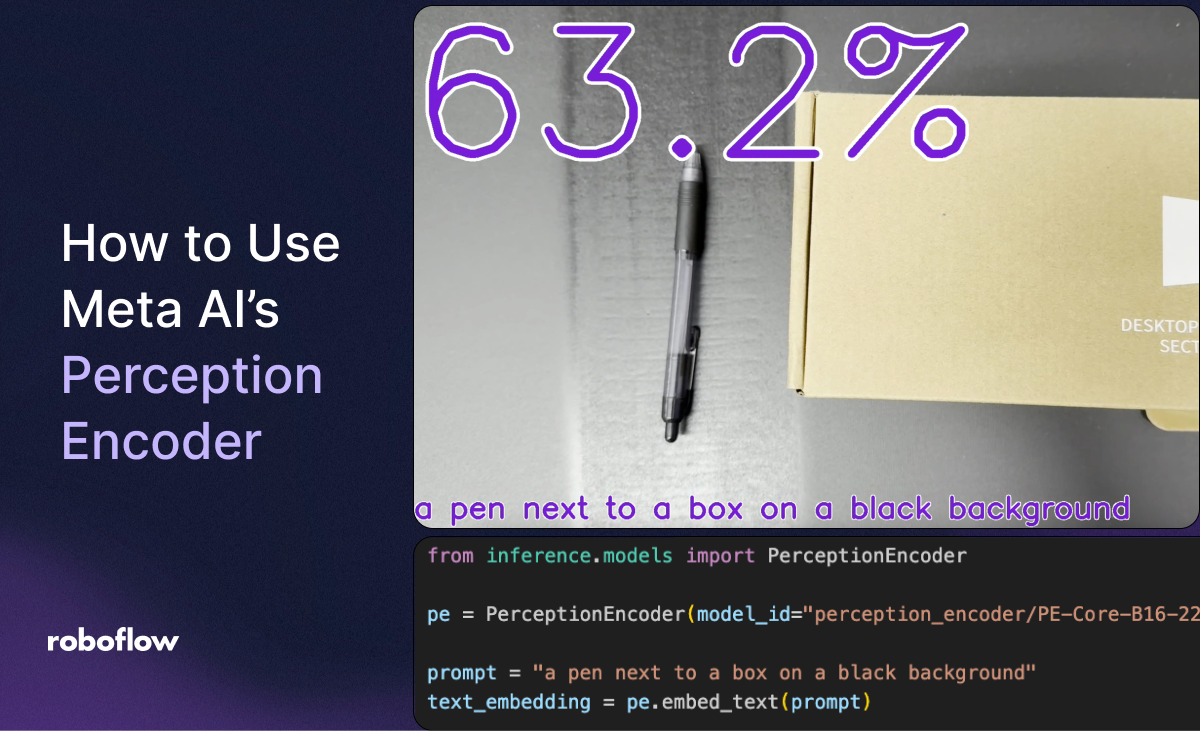

Here is a demo showing Perception Encoder calculating the similarity between a video frame and a text prompt ("a pen next to a box on a black background"):

Install Inference

To get started, we need to install Inference, an open source computer vision inference server developed and maintained by Roboflow. We will install Inference with the Transformers extension, required to use Perception Encoder.

```
pip install "inference[transformers]"
```

With Inference installed, we can start building our application.

Run Perception Encoder

Then, create a new Python file and add the following code:

```python
import cv2
import inference
from inference.core.utils.postprocess import cosine_similarity
from inference.models import PerceptionEncoder

pe = PerceptionEncoder(model_id="perception_encoder/PE-Core-B16-224", device="mps")

prompt = "a pen next to a box on a black background"
text_embedding = pe.embed_text(prompt)


def render(result, image):
    # get the cosine similarity between the prompt & the image
    similarity = cosine_similarity(result["embeddings"][0], text_embedding[0])

    # scale the result to 0-100 based on heuristic (~the best & worst values I've observed)
    range = (0.15, 0.40)
    similarity = (similarity-range[0])/(range[1]-range[0])
    similarity = max(min(similarity, 1), 0)*100

    # print the similarity
    text = f"{similarity:.1f}%"
    cv2.putText(image, text, (10, 310), cv2.FONT_HERSHEY_SIMPLEX, 12, (255, 255, 255), 30)
    cv2.putText(image, text, (10, 310), cv2.FONT_HERSHEY_SIMPLEX, 12, (206, 6, 103), 16)

    # print the prompt
    cv2.putText(image, prompt, (20, 1050), cv2.FONT_HERSHEY_SIMPLEX, 2, (255, 255, 255), 10)
    cv2.putText(image, prompt, (20, 1050), cv2.FONT_HERSHEY_SIMPLEX, 2, (206, 6, 103), 5)

    # display the image
    cv2.imshow("PE", image)
    cv2.waitKey(1)


# start the stream
inference.Stream(
    source="webcam",
    model=pe,
    output_channel_order="BGR",
    use_main_thread=True,
    on_prediction=render
)
```

In this code, we:

- Import the required dependencies.

- Load the `perception_encoder/PE-Core-B16-224` Perception Encoder model. Update the “device” value to “cuda” if you have an NVIDIA GPU, or “cpu” if you do not have a GPU. Leave the value as “mps” if you are running the script on a Mac.

- Define a render function that receives the image embedding calculated for each video frame, along with the frame itself, then shows the similarity between that embedding and the embedding of a pre-calculated text prompt (declared in the prompt variable).

- Use `inference.Stream` to run the model on our webcam. This function accepts our render function as a callback.
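If you would rather not edit the “device” value by hand, the choice described above can be sketched as a small helper. This assumes PyTorch is available in your environment; `pick_device` is a hypothetical name, not an Inference function, and it falls back to “cpu” when PyTorch cannot be imported.

```python
def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what PyTorch reports."""
    try:
        import torch
    except ImportError:
        # Without PyTorch we cannot probe for accelerators; fall back to CPU.
        return "cpu"
    if torch.cuda.is_available():
        return "cuda"
    # Older PyTorch builds may not ship the MPS backend, so look it up safely.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


device = pick_device()
print(device)
```

You could then pass `device=pick_device()` when constructing the model in place of the hard-coded string.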

Now run the script. Your webcam will start, and each frame will be compared against the text prompt:

When the box appears in the video, the similarity score goes up because the frame now matches the “box” part of the prompt. When a pen is placed next to the box, the score goes up again because the frame is closer to the full prompt, “a pen next to a box on a black background”.

The more similar the frame is to the text embedding, the higher the similarity score will be.
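For intuition about what the score measures, here is a minimal NumPy sketch of cosine similarity. It is a stand-in for illustration and is not the implementation of the `cosine_similarity` function imported from Inference in the script above; the input vectors are toy values.

```python
import numpy as np


def cosine(a, b) -> float:
    """Cosine of the angle between two vectors: dot product over norms."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Vectors pointing the same way score close to 1, regardless of length.
same_direction = cosine([1.0, 2.0], [2.0, 4.0])
# Perpendicular vectors score 0.
orthogonal = cosine([1.0, 0.0], [0.0, 1.0])

print(same_direction, orthogonal)
```

Because the measure depends only on direction, not magnitude, two embeddings are compared by what they encode rather than by how large their values are.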

You can also run Perception Encoder on stored video frames with the following code:

```python
import cv2
from tqdm import tqdm
from inference.core.utils.postprocess import cosine_similarity
import supervision as sv
from inference.models import PerceptionEncoder

pe = PerceptionEncoder(model_id="perception_encoder/PE-Core-B16-224")

prompt = "a pen next to a box on a black background"
text_embedding = pe.embed_text(prompt)

VIDEO = "IMG_2340.MOV"

# Create a video_info object for use in the VideoSink.
video_info = sv.VideoInfo.from_video_path(video_path=VIDEO)
frame_generator = sv.get_video_frames_generator(VIDEO)

# Create a VideoSink context manager to save our frames.
with sv.VideoSink(target_path="output.mp4", video_info=video_info) as sink:
    # Iterate through frames yielded from the frame_generator.
    for frame in tqdm(frame_generator, total=video_info.total_frames):
        result = pe.embed_image(frame)

        # get the cosine similarity between the prompt & the image
        similarity = cosine_similarity(result[0], text_embedding[0])

        # scale the result to 0-100 based on heuristic (~the best & worst values I've observed)
        range = (0.15, 0.40)
        similarity = (similarity - range[0]) / (range[1] - range[0])
        similarity = max(min(similarity, 1), 0) * 100

        # print the similarity
        text = f"{similarity:.1f}%"
        cv2.putText(
            frame, text, (10, 310), cv2.FONT_HERSHEY_SIMPLEX, 12, (255, 255, 255), 30
        )
        cv2.putText(
            frame, text, (10, 310), cv2.FONT_HERSHEY_SIMPLEX, 12, (206, 6, 103), 16
        )

        # print the prompt
        cv2.putText(
            frame, prompt, (20, 1050), cv2.FONT_HERSHEY_SIMPLEX, 2, (255, 255, 255), 10
        )
        cv2.putText(
            frame, prompt, (20, 1050), cv2.FONT_HERSHEY_SIMPLEX, 2, (206, 6, 103), 5
        )

        # Write the annotated frame to the video sink.
        sink.write_frame(frame=frame)
```

This script opens a video file, runs Perception Encoder on each frame, calculates the similarity between the embedding for the frame and the embedding for the text prompt, then saves each frame, with its similarity score written on top, to a new file.
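Both scripts rescale the raw cosine similarity to a 0-100 value before drawing it. That step can be sketched on its own as a small function. The name `scale_similarity` is hypothetical, and the default bounds of 0.15 and 0.40 are the heuristic values from the scripts above, not constants defined by the model; you may need different bounds for other prompts or footage.

```python
def scale_similarity(similarity: float, low: float = 0.15, high: float = 0.40) -> float:
    """Map a raw cosine similarity onto 0-100, clamping values outside [low, high]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    fraction = (similarity - low) / (high - low)
    return max(min(fraction, 1.0), 0.0) * 100


# Raw scores below the lower bound clamp to 0; scores above the upper bound clamp to 100.
for raw in (0.10, 0.15, 0.275, 0.40, 0.55):
    print(f"{raw:.3f} -> {scale_similarity(raw):.1f}%")
```

Keeping the bounds as parameters makes it easier to recalibrate the display without touching the drawing code.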

Conclusion

Perception Encoder is a new, state-of-the-art multimodal embedding model developed by Meta AI. With Perception Encoder, you can calculate the similarity between images and simple or complex text prompts.

In this guide, we walked through how to run Perception Encoder on your own hardware with Roboflow Inference, an open source inference server.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Jun 26, 2025). How to Use Perception Encoder. Roboflow Blog: https://blog.roboflow.com/how-to-use-perception-encoder/