When you are conducting aerial surveys (for insurance auditing, say), a common problem is identifying objects of interest and then classifying the area around each one. For example, you can train an object detection model to identify solar panels, then use a classification model to determine whether each solar panel is on a roof or on the ground.

In this demo, we will detect solar panels and determine if they are on the ground or on the roof of a building. With that said, you can update the example to use any models you want.

Here is a demo of our system detecting solar panels, then showing whether each one is on the roof or the ground:

Without further ado, let’s get started!

Build a Detection and Classification Workflow

To build a detect-then-classify Workflow with Roboflow Workflows, you need:

- An object detection model, and;

- A classification model.

The classification model can be a fine-tuned classification model trained on Roboflow, or a multimodal model. For this guide, we will use GPT-4 with Vision to demonstrate multimodal classification in Roboflow Workflows.

Our system will return a JSON result that can be processed with code.

Let’s discuss how this Workflow works in detail.

What is Roboflow Workflows?

Workflows is a low-code computer vision application builder. With Workflows, you can build complex computer vision applications in a web interface and then deploy Workflows with the Roboflow cloud, on an edge device such as an NVIDIA Jetson, or on any cloud provider like GCP or AWS.

Workflows has a wide range of pre-built functions available for use in your projects and you can test your pipeline in the Workflows web editor, allowing you to iteratively develop logic without writing any code.

Detect Solar Panels in Aerial Imagery Using Computer Vision

First, we need to detect objects of interest. In this guide, we will detect solar panels using an aerial solar panel detection model. This model has been fine-tuned specifically for use with aerial imagery.

If you don’t have an object detection model yet, you can train one on Roboflow. Refer to our Getting Started guide to learn how to train an object detection model.

After we detect objects, we expand each bounding box by a fixed offset. This captures some of the environment around each detection, which we need in order to determine whether a solar panel is on the ground or on a rooftop.

Note: If you apply a detection offset, pad the images going into your Workflow with a border of black pixels the same width as the offset. This ensures that the offset bounding boxes do not go out of bounds in your image, which would cause them to be discarded.
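
To make the offset and padding steps concrete, here is a minimal sketch in plain Python. It assumes boxes are `(x_min, y_min, x_max, y_max)` pixel tuples and that the offset is added on every side. The Detection Offset block may define its offset differently, so treat the helper names and the per-side convention as illustrative assumptions rather than the block's implementation.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def expand_box(box: Box, offset: int) -> Box:
    """Grow a box by `offset` pixels on every side (assumed convention)."""
    x_min, y_min, x_max, y_max = box
    return (x_min - offset, y_min - offset, x_max + offset, y_max + offset)


def shift_into_padded_image(box: Box, pad: int) -> Box:
    """Translate a box into the coordinates of an image padded by `pad` pixels per side."""
    x_min, y_min, x_max, y_max = box
    return (x_min + pad, y_min + pad, x_max + pad, y_max + pad)


def is_in_bounds(box: Box, width: int, height: int) -> bool:
    """Return True when the box lies entirely inside a width x height image."""
    x_min, y_min, x_max, y_max = box
    return x_min >= 0 and y_min >= 0 and x_max <= width and y_max <= height


# Hypothetical numbers: a 640x480 image with a panel touching the top-left corner.
width, height, offset = 640, 480, 50
panel = (0, 0, 120, 80)

# Without padding, the expanded box leaves the image.
unpadded = expand_box(panel, offset)

# With a black border of `offset` pixels on each side, it stays inside.
padded_width, padded_height = width + 2 * offset, height + 2 * offset
padded = expand_box(shift_into_padded_image(panel, offset), offset)

print(is_in_bounds(unpadded, width, height))
print(is_in_bounds(padded, padded_width, padded_height))
```

The first check fails because the expanded box has negative coordinates; the second passes because the border absorbs the offset.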

We then crop all of the detections so that we have individual images of each solar panel.
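
Cropping amounts to slicing the image by each box. The sketch below uses a nested list as a stand-in for an image so that it runs without dependencies; `crop` and `crop_all` are hypothetical helpers, not the Workflows crop block itself. With a NumPy array, the same crop would be `image[y_min:y_max, x_min:x_max]`.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Image = List[List[int]]  # rows of grayscale pixel values, a stand-in for a real image


def crop(image: Image, box: Box) -> Image:
    """Return the region of `image` covered by `box` (max edges are exclusive)."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def crop_all(image: Image, boxes: Sequence[Box]) -> List[Image]:
    """Produce one cropped image per detection box."""
    return [crop(image, box) for box in boxes]


# A tiny 4-row by 6-column "image" whose pixel value encodes its position (row * 10 + column).
image = [[row * 10 + col for col in range(6)] for row in range(4)]
boxes = [(0, 0, 2, 2), (3, 1, 6, 3)]

crops = crop_all(image, boxes)
for region in crops:
    print(region)
```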

Visualize Predictions

Our Workflow contains a Bounding Box Visualization block. This block will show all predictions returned by our object detection model. This is useful for visualizing what our object detection model sees, and how the padding affects the predictions from the model.

Classify Solar Panel Locations with Computer Vision

We send each cropped image to GPT-4o using the Large Multimodal Model (LMM) block. To use this block, you will need an OpenAI API key. Learn how to retrieve your OpenAI API key.

Multimodal models are ideal for zero-shot classification, where you want to classify an object and do not yet have a fine-tuned model for your specific task.

We provided the prompt:

is the solar panel on a house or the ground? return a one word response.
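
Because a multimodal model replies in free text, the one-word answer may arrive with different casing or trailing punctuation. Here is a minimal sketch of normalizing it into a fixed label before further processing. The `normalize_location` helper and the "unknown" fallback are assumptions made for illustration, not part of Workflows.

```python
import string

# Labels implied by the prompt above.
VALID_LABELS = {"house", "ground"}


def normalize_location(raw_output: str) -> str:
    """Map a free-text model reply to "house", "ground", or "unknown"."""
    cleaned = raw_output.strip().strip(string.punctuation).lower()
    return cleaned if cleaned in VALID_LABELS else "unknown"


print(normalize_location("Ground"))
print(normalize_location(" House.\n"))
print(normalize_location("It is on the roof"))
```

Replies that do not match either label fall through to "unknown", which makes them easy to review by hand.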

You can also use a fine-tuned classification model trained on Roboflow.

Return a Response

We have configured our Workflow to return three values:

- The predictions from our solar panel detection model;

- The response from our bounding box visualizer, and;

- The structured data response from GPT-4V.

Testing the Workflow

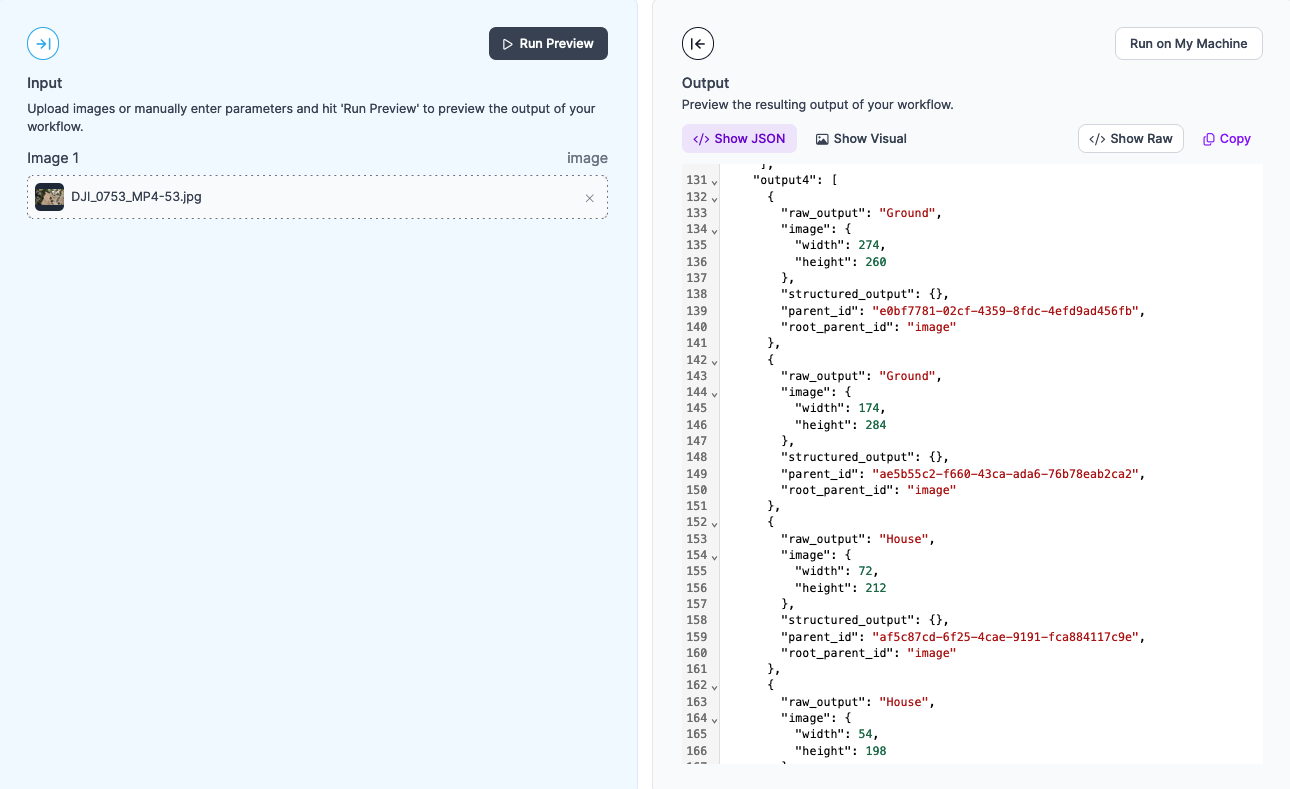

To test a Workflow, first drag and drop an image into the image input field.

Then click “Run Preview” at the top of the page to run your Workflow.

The Workflow will run and provide two outputs:

- A JSON view, with all of the data we configured our Workflow to return, and;

- A visualization of predictions from our model, drawn using the Bounding Box Visualization block that we included in our Workflow.

Let’s run the Workflow on an image.

Our Workflow returns a JSON response with data from GPT-4, as well as a visual response showing the bounding box region, with padding, returned by our object detection model. Here is the response from our Workflow:

The response contains JSON data for each solar panel:

{
    "raw_output": "Ground",
    "image": {
        "width": 274,
        "height": 260
    },
    "structured_output": {},
    "parent_id": "e0bf7781-02cf-4359-8fdc-4efd9ad456fb",
    "root_parent_id": "image"
},

Our system returned two "Ground" results and two "House" results. This matches the provided image, which contains four groups of solar panels. Two groups are on a roof. The other two are on the ground or otherwise away from the roof of a house.
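
Since the result is JSON, it can be tallied with a few lines of code. The sketch below assumes the per-panel entries arrive as a list of objects with a `raw_output` field, as in the entry shown above. The sample payload is illustrative and carries only that field, with the two "Ground" and two "House" values described in the text.

```python
import json
from collections import Counter

# Illustrative payload: only "raw_output" is included, with the values described above.
response_text = """
[
  {"raw_output": "Ground"},
  {"raw_output": "House"},
  {"raw_output": "Ground"},
  {"raw_output": "House"}
]
"""


def count_locations(results):
    """Count how many panels were classified under each label."""
    return Counter(item["raw_output"].strip().lower() for item in results)


counts = count_locations(json.loads(response_text))
print(dict(counts))
```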

Deploying the Workflow

Workflows can be deployed in the Roboflow cloud, or on an edge device such as an NVIDIA Jetson or a Raspberry Pi.

To deploy a Workflow, click “Deploy Workflow” in the Workflow builder. You can then choose how you want to deploy your Workflow.
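
If you choose the hosted API, a deployed Workflow can be called from Python. The following is a sketch that assumes the `inference-sdk` package and its `InferenceHTTPClient.run_workflow` method. The API URL, workspace name, Workflow ID, image file name, and `ROBOFLOW_API_KEY` environment variable are placeholders; copy the real values from the snippet shown in the deploy dialog. Depending on how your Workflow is configured, you may also need to pass additional parameters such as your OpenAI API key.

```python
import os


def build_client():
    """Create an HTTP client for the hosted API (requires `pip install inference-sdk`)."""
    # Imported lazily so the helper below can be used and tested without the SDK installed.
    from inference_sdk import InferenceHTTPClient

    return InferenceHTTPClient(
        api_url="https://detect.roboflow.com",  # assumed endpoint; use the one from your deploy dialog
        api_key=os.environ["ROBOFLOW_API_KEY"],
    )


def run_solar_workflow(client, image_path, workspace_name, workflow_id):
    """Run the Workflow on one image and return the parsed result."""
    return client.run_workflow(
        workspace_name=workspace_name,
        workflow_id=workflow_id,
        images={"image": image_path},  # "image" is the assumed name of the Workflow's image input
    )


# Only call the API when a key is configured in the environment.
if __name__ == "__main__" and "ROBOFLOW_API_KEY" in os.environ:
    result = run_solar_workflow(
        build_client(),
        image_path="aerial.jpg",          # hypothetical file name
        workspace_name="your-workspace",  # placeholder
        workflow_id="your-workflow-id",   # placeholder
    )
    print(result)
```

Keeping the client separate from the call makes it straightforward to swap in a client pointed at a self-hosted inference server on an edge device.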

Conclusion

You can use Roboflow Workflows to build applications that detect objects, then classify the region around each object.

In this guide, we used Roboflow Workflows to build a tool that identifies solar panels in an image, applies padding to the region of each panel, then determines whether each solar panel is on a roof or on the ground.

To learn more about building computer vision applications with Workflows, refer to the Roboflow Workflows documentation.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Jul 25, 2024). Identify Solar Panel Locations with Computer Vision. Roboflow Blog: https://blog.roboflow.com/identify-solar-panels/