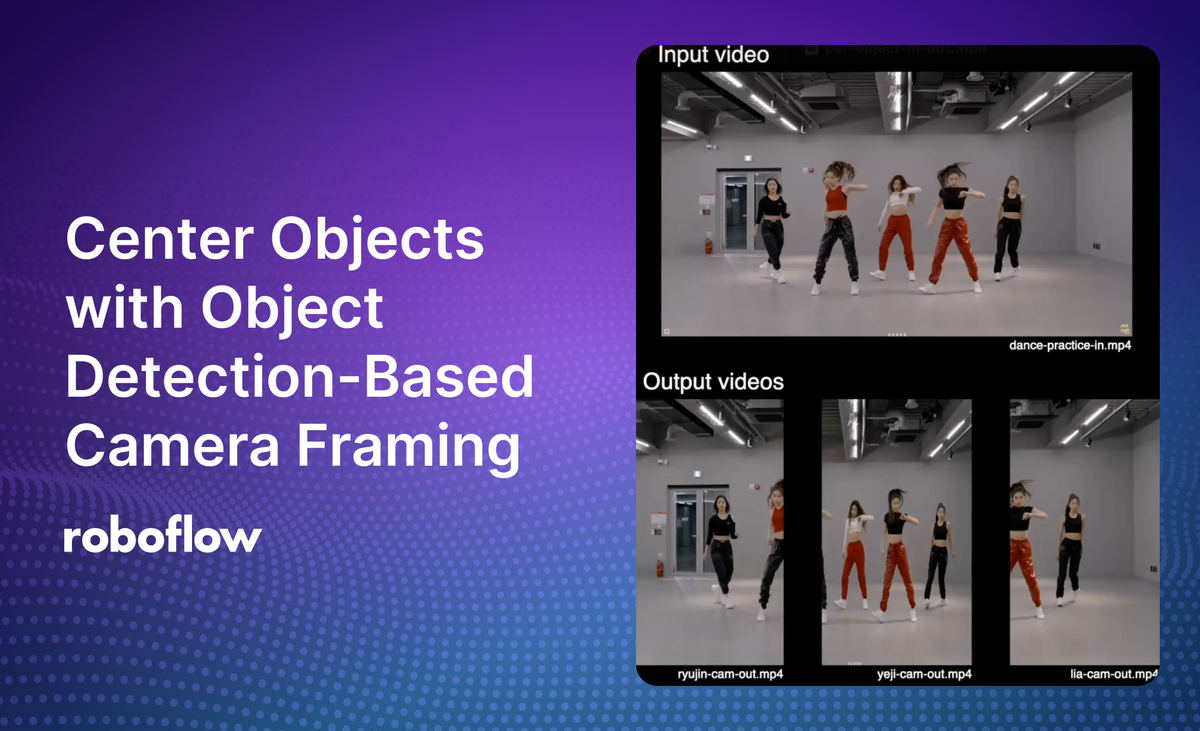

Keeping objects of interest centered in a video frame can be useful across applications like sports analysis, security monitoring, wildlife tracking, and live event streaming. For example, you could use object centering to focus on a player on a football field as they make a play, or focus on a dancer in a music video.

To center objects, you need to make dynamic adjustments to account for changes in object sizes, positions, and aspect ratios.

This guide introduces two approaches to address this challenge:

- Group approach: Produce a single output video that encloses all objects of interest together in one frame.

- Per-object approach: Generate separate output videos, each focusing on a single object of interest.

Whether you need a single video that keeps all objects of interest in the frame together or separate videos that focus on each object’s movements, this guide provides a clear and adaptable starting point for a range of practical applications.

Here is an example showing an input video with several dancers, alongside outputs that each focus on an individual dancer:

How it works (Overview)

There are four steps that we will follow to keep an object centered in a video:

- Object detection: We begin with an object detection model, which could be a general-purpose YOLO variant or a custom-trained model, and run it using Roboflow Workflows with the local video inference pipeline. Because the model outputs center-based bounding boxes (x, y, width, height, where x, y is the box's midpoint), we gather those detections frame by frame before moving on to expansion and smoothing.

- Bounding box expansion: Sometimes the detected boxes are too tight, risking clipped limbs or partial objects. To avoid that, we multiply the bounding box width and height by a margin factor (e.g. 1.3), ensuring there’s extra room around each subject.

- Zooming and smoothing: Bounding boxes can shift unpredictably if your subject moves quickly. To prevent sudden jerks in the camera frame, we use exponential smoothing on both the bounding box center and the zoom factor. We also clamp how fast the scale can change per frame, so we don’t see abrupt in/out zooms.

- Fitting into the desired video resolution: Even though bounding boxes vary in size and aspect ratio, the final cropped region must be delivered at a fixed resolution (e.g. 1920×1080 or 720×1280) without distorting the video. We handle this by choosing a single uniform scale based on whichever dimension (width or height) is more limiting, which preserves the aspect ratio so there's no stretching. After cropping the region from the source, we resize it proportionally. This guarantees the finished video hits the exact target resolution while keeping the subject's proportions correct.
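The expansion and fitting steps above reduce to two small calculations. The sketch below is a minimal illustration rather than code from the scripts later in this guide; the helper names expand_box and fit_scale are hypothetical.

```python
def expand_box(x, y, w, h, margin=1.3):
    """Hypothetical helper: grow a center-based box (x, y, w, h) about its center."""
    # The center stays put; only the width and height are multiplied by the margin
    return x, y, w * margin, h * margin


def fit_scale(box_w, box_h, out_w, out_h):
    """Hypothetical helper: largest uniform scale at which a box_w x box_h region
    fits inside an out_w x out_h output.

    Taking the minimum means the more limiting dimension decides, and both axes
    share one scale, so the result is never stretched.
    """
    return min(out_w / box_w, out_h / box_h)


# A 400x600 detection padded by 1.3, framed for 1920x1080 output
cx, cy, w, h = expand_box(960, 540, 400, 600)
scale = fit_scale(w, h, 1920, 1080)
print(w, h, round(scale, 4))
```

For this box the padded size is 520×780. Width alone would allow a scale of about 3.69, but height only allows 1080 / 780 ≈ 1.385, so height is the limiting dimension. The crop window in source pixels is then about 1387×780: the same 16:9 shape as the output, with the padded box filling its full height.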

Set up object detection with Workflows

This guide assumes that you have an object detection model in place, whether it's a publicly available checkpoint or a custom-trained solution. If you don't, you can start with a default YOLO11n model trained on the COCO dataset, or build your own by following this tutorial. A custom-trained model lets you detect classes tailored to your project, from specific objects in a warehouse to unique wildlife species, which can give more accurate and relevant results for the auto-framing pipeline.

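We will begin by creating a workflow that includes an Object Detection step (using your model), a Bounding Box Visualization step for rendering those detections, and an Output step that returns the results. The Python code in this guide reads the detections from a workflow output named predictions, so use that name for the output that carries the model's detections (or change the key in the code).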

The inference server runs on localhost, ensuring local processing and quick iteration. After setting up this workflow, a separate Python application will take the bounding box data from Roboflow and handle the panning, tilting, cropping, and smoothing of the final frames.

To set up the inference server on localhost, ensure Docker is installed and configured on your system. Then, install the Roboflow inference CLI with:

```bash
pip install inference-cli
```

Start the inference server by running:

```bash
inference server start
```

This will launch the server locally, ready to process frames through the workflow.

Building an Auto-Framer in Python

Next, we run the workflow from Python on each video frame. The Python application takes the bounding boxes generated by the Roboflow workflow and uses them to dynamically pan, tilt, crop, and smooth the framing around the objects of interest. The Group Approach and the Per-Object Approach share the same logic for detecting objects and adjusting the frame, and differ only in how the output is written. Below, we break down the essential parts of the code.

Step #1: Initialize object detection with InferencePipeline

We begin by setting up the InferencePipeline to run the workflow locally on the video input. The pipeline continuously processes each frame and passes the detections to a callback function for further processing.

```python
from inference import InferencePipeline

pipeline = InferencePipeline.init_with_workflow(
    api_key="[YOUR_API_KEY]",
    workspace_name="[YOUR_WORKSPACE_NAME]",
    workflow_id="[YOUR_WORKFLOW_ID]",
    video_reference="[YOUR_VIDEO_PATH]",
    max_fps=30,
    on_prediction=my_sink,  # callback defined in Step #2
)

pipeline.start()
pipeline.join()  # block until the whole video has been processed
```

This code creates the pipeline for your workflow and video, and registers the callback function, my_sink, which processes each frame's predictions.
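Because the pipeline needs a model, an API key, and a video, it can help to exercise a sink function on hand-made data first. The sketch below is a hypothetical test harness (run_offline is not part of the inference package). It assumes the sink only reads result["predictions"] and video_frame.numpy_image, the two fields the sinks in this guide use; real VideoFrame objects carry more fields than this stand-in.

```python
from types import SimpleNamespace


def run_offline(sink, images, predictions_per_frame):
    """Hypothetical harness: call `sink` once per frame, the way the pipeline would.

    Only the two fields the sinks in this guide read are mimicked:
    result["predictions"] and video_frame.numpy_image.
    """
    for image, predictions in zip(images, predictions_per_frame):
        result = {"predictions": predictions}
        video_frame = SimpleNamespace(numpy_image=image)
        sink(result, video_frame)


# Example: a sink that records how many detections it received per frame
seen = []


def counting_sink(result, video_frame):
    seen.append(len(result.get("predictions", [])))


run_offline(
    counting_sink,
    images=[None, None],  # real sinks would receive NumPy images here
    predictions_per_frame=[[], [{"x": 320, "y": 240, "width": 80, "height": 160}]],
)
print(seen)  # [0, 1]
```

Passing real frames (for example, images loaded with OpenCV) and a few synthetic boxes lets you check the cropping and smoothing logic before connecting a model.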

Step #2: Processing predictions

The my_sink function takes the detections from the workflow and applies the logic to calculate a smooth, dynamic frame. First, it parses each bounding box and expands it by a margin factor to leave some padding around the detected object.

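```python
MARGIN_FACTOR = 1.3  # padding multiplier (the per-object script below uses the same value)


def my_sink(result, video_frame):
    predictions = result.get("predictions", [])
    boxes = []
    for pred in predictions:
        x = pred["x"]
        y = pred["y"]
        # Expand the box about its center to add padding
        width = pred["width"] * MARGIN_FACTOR
        height = pred["height"] * MARGIN_FACTOR

        # Convert from center-based (x, y, w, h) to corner-based (x1, y1, x2, y2)
        x1 = x - width / 2
        y1 = y - height / 2
        x2 = x + width / 2
        y2 = y + height / 2
        boxes.append((x1, y1, x2, y2))
    # ... smoothing, cropping, and writing follow (Steps #3 to #5)
```

This pads each box and converts it from the center-based format to a corner-based (x1, y1, x2, y2) format, which is more convenient for cropping and resizing.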

Step #3: Applying smoothing

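To avoid sudden jumps in the frame, the code uses exponential smoothing on both the bounding box center and the scale. Each update is a weighted average of the previous value and the new measurement; with an ALPHA close to 1, the virtual camera follows the subject gradually, producing steady, camera-like motion.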

```python
# Excerpt: old_cx, old_cy and prev_scale hold the previous frame's smoothed values

# Smooth bounding box center
new_cx = ALPHA_CENTER * old_cx + (1 - ALPHA_CENTER) * raw_cx
new_cy = ALPHA_CENTER * old_cy + (1 - ALPHA_CENTER) * raw_cy

# Smooth scale
scale_instant = (target_fraction * OUTPUT_HEIGHT) / box_height
new_scale = ALPHA_SCALE * prev_scale + (1 - ALPHA_SCALE) * scale_instant
```

These calculations keep the frame stable, even if the objects move quickly.
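The clamp and the smoothing interact in a way that is easy to overlook. The sketch below uses hypothetical helper names (smooth_step, settle_frames). It repeats the update from the full scripts, where the target scale is clamped before smoothing, and estimates how many frames an unclamped exponential moving average needs to follow a sudden change.

```python
import math


def smooth_step(prev, target, alpha, max_change=None):
    """Hypothetical helper: one exponential-smoothing update.

    If max_change is given, the target is first clamped to
    [prev / max_change, prev * max_change], as the full scripts do for scale.
    """
    if max_change is not None:
        target = max(prev / max_change, min(target, prev * max_change))
    return alpha * prev + (1 - alpha) * target


def settle_frames(alpha, remaining=0.1):
    """Hypothetical helper: frames until an unclamped EMA has closed all but
    `remaining` of a step change (alpha ** n <= remaining)."""
    return math.ceil(math.log(remaining) / math.log(alpha))


print(settle_frames(0.95), settle_frames(0.98))
# Group settings: even a 10x zoom request moves the scale by only 0.1% this frame
print(smooth_step(1.0, 10.0, 0.98, max_change=1.05))
```

Because the clamp acts on the target before the average, zooming in is limited to a factor of 1 + (1 - ALPHA_SCALE) × (MAX_SCALE_CHANGE_FACTOR - 1) per frame: about 0.1% with the group settings (0.98, 1.05) and 0.5% with the per-object settings (0.95, 1.1). For the center, α = 0.95 closes 90% of a jump in 45 frames (1.5 s at 30 fps), while α = 0.98 takes 114 frames (3.8 s). Lower the alpha values if the framing lags behind fast subjects.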

Step #4: Cropping and resizing

Once the smoothed center and scale are ready, the code crops a window around the center and resizes it to the desired output resolution without distortion.

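```python
# Compute the cropping region: a window with the output's aspect ratio,
# sized so that it maps to OUTPUT_WIDTH x OUTPUT_HEIGHT at the current scale
hw = (OUTPUT_WIDTH / 2) / scale
hh = (OUTPUT_HEIGHT / 2) / scale
top_left_x = int(cx - hw)
top_left_y = int(cy - hh)
bot_right_x = int(cx + hw)
bot_right_y = int(cy + hh)

# Crop and resize
cropped = frame[top_left_y:bot_right_y, top_left_x:bot_right_x]
output_frame = cv2.resize(cropped, (OUTPUT_WIDTH, OUTPUT_HEIGHT))
```

Because the crop window has the same width-to-height ratio as the output, the resize scales both axes equally, so every output video keeps the same resolution and aspect ratio regardless of the original bounding box size. Near the frame edges, the window has to be shifted back inside the frame rather than clipped, otherwise its ratio changes and the resized output is stretched.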
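A window near the frame edge needs extra care. Clamping each corner on its own shrinks the window on one side only and changes its aspect ratio, so the resize stretches the image. The sketch below instead keeps the window size fixed and shifts it back inside the frame, raising the scale if the requested window would be larger than the frame. crop_window is a hypothetical helper that returns pixel coordinates only, so it can be checked without OpenCV.

```python
def crop_window(cx, cy, scale, out_w, out_h, frame_w, frame_h):
    """Hypothetical helper: integer (x1, y1, x2, y2) for an out_w:out_h crop
    centered on (cx, cy), kept fully inside a frame_w x frame_h frame."""
    # Never request a window larger than the frame: raise the scale if needed
    scale = max(scale, out_w / frame_w, out_h / frame_h)
    win_w = min(frame_w, round(out_w / scale))
    win_h = min(frame_h, round(out_h / scale))
    # Shift (rather than squash) the window so it lies entirely inside the frame
    x1 = min(max(0, round(cx - win_w / 2)), frame_w - win_w)
    y1 = min(max(0, round(cy - win_h / 2)), frame_h - win_h)
    return x1, y1, x1 + win_w, y1 + win_h


# Subject near the left edge of a 2560x1440 frame, 1920x1080 output at scale 1.0
print(crop_window(100, 720, 1.0, 1920, 1080, 2560, 1440))  # (0, 180, 1920, 1260)
```

The returned coordinates slot directly into frame[y1:y2, x1:x2] before cv2.resize. Rounding to whole pixels can change the ratio by less than a pixel, which is not visible in practice.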

Step #5: Writing the output

The final step involves writing the processed frames to video files. The output handling differs based on the approach:

Group Approach: Single output video

A single VideoWriter writes frames where all objects of interest are enclosed in one bounding box. This ensures the entire group remains in view throughout the video.

```python
# Initialize the VideoWriter for group output (once, on the first frame)
if writer is None:
    fourcc = cv2.VideoWriter_fourcc(*"mp4v")
    writer = cv2.VideoWriter(OUTPUT_VIDEO, fourcc, FPS, (OUT_WIDTH, OUT_HEIGHT))

# Write the resized frame to the group video
writer.write(resized_frame)
```

Per-Object Approach: Multiple output videos

Each object has its own VideoWriter, and processed frames for each detected object are written to separate files, creating individual videos.

```python
# Initialize one VideoWriter per object (once, on the first frame)
if not initialized:
    fourcc = cv2.VideoWriter_fourcc(*"mp4v")
    for obj_name, filename in OUTPUT_FILENAMES.items():
        writer_dict[obj_name] = cv2.VideoWriter(filename, fourcc, FPS, (OUTPUT_WIDTH, OUTPUT_HEIGHT))
    initialized = True

# Write the resized frame to the corresponding object's video
writer_dict[obj_name].write(resized_frame)
```

Putting it all together

The group approach and per-object approach both share the same underlying logic for detecting objects, smoothing their movement, and dynamically adjusting the frame. The difference lies in how the outputs are handled, which makes each approach suitable for specific use cases.

Group Approach

The group approach computes a single bounding box that encloses all detected objects and creates one video where the entire group stays in view. This approach is ideal for scenarios like team sports, stage performances, or any situation where maintaining a view of multiple subjects is important.
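The core of the group approach is a union box. The sketch below isolates that step as a hypothetical helper, group_box, using the same center-based prediction fields (x, y, width, height) as the rest of this guide and padding the union about its center.

```python
def group_box(predictions, padding=1.3):
    """Hypothetical helper: padded union of center-based boxes.

    Returns (cx, cy, width, height) in the same center-based convention,
    or None when there are no predictions.
    """
    if not predictions:
        return None
    # Convert every box to its edges and take the outermost ones
    min_x = min(p["x"] - p["width"] / 2 for p in predictions)
    min_y = min(p["y"] - p["height"] / 2 for p in predictions)
    max_x = max(p["x"] + p["width"] / 2 for p in predictions)
    max_y = max(p["y"] + p["height"] / 2 for p in predictions)
    # Center of the union, with the size grown about that center
    return (
        (min_x + max_x) / 2,
        (min_y + max_y) / 2,
        (max_x - min_x) * padding,
        (max_y - min_y) * padding,
    )


dancers = [
    {"x": 100, "y": 100, "width": 50, "height": 50},
    {"x": 300, "y": 200, "width": 100, "height": 40},
]
print(group_box(dancers, padding=1.0))  # (212.5, 147.5, 275.0, 145.0)
```

Because the union grows as subjects spread apart, the fitted scale drops and the camera zooms out; the smoothing described earlier keeps that transition gradual.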

Below is the full Python code for the group approach:

```python
import cv2
from inference import InferencePipeline

#############################
# ---- CONFIG / CONSTANTS --
#############################
API_KEY = "[YOUR_API_KEY]"
WORKSPACE_NAME = "[YOUR_WORKSPACE_NAME]"
WORKFLOW_ID = "[YOUR_WORKFLOW_ID]"
VIDEO_REFERENCE = "[YOUR_VIDEO_PATH]"
OUTPUT_VIDEO = "group_output.mp4"

# Output resolution
OUT_WIDTH = 1920
OUT_HEIGHT = 1080

# Input resolution (used only to initialize the camera center)
IN_WIDTH = 2560
IN_HEIGHT = 1440

# Smoothing & padding parameters
PADDING_FACTOR = 1.3
ALPHA_CENTER = 0.98  # weight on the previous center (closer to 1 = smoother, slower)
ALPHA_SCALE = 0.98   # weight on the previous scale
MAX_SCALE_CHANGE_FACTOR = 1.05

# Global smoothing state
group_center = [IN_WIDTH / 2, IN_HEIGHT / 2]
group_scale = 1.0

# Video writer
writer = None
FPS = 30


#############################
# ----- SINK / CALLBACK ----
#############################
def my_sink(result, video_frame):
    global writer, group_center, group_scale

    # The VideoFrame exposes the decoded image as a NumPy (BGR) array
    frame = video_frame.numpy_image
    if frame is None:
        return
    frame_h, frame_w = frame.shape[:2]

    # Initialize VideoWriter if not already set
    if writer is None:
        fourcc = cv2.VideoWriter_fourcc(*"mp4v")
        writer = cv2.VideoWriter(OUTPUT_VIDEO, fourcc, FPS, (OUT_WIDTH, OUT_HEIGHT))
        print(f"[INFO] Writer initialized for output: {OUTPUT_VIDEO}")

    predictions = result.get("predictions", [])
    if predictions:
        # Compute the group bounding box (union of all center-based boxes)
        min_x = min(p["x"] - p["width"] / 2 for p in predictions)
        min_y = min(p["y"] - p["height"] / 2 for p in predictions)
        max_x = max(p["x"] + p["width"] / 2 for p in predictions)
        max_y = max(p["y"] + p["height"] / 2 for p in predictions)
        group_width = (max_x - min_x) * PADDING_FACTOR
        group_height = (max_y - min_y) * PADDING_FACTOR

        # Smooth the center
        raw_cx = (min_x + max_x) / 2
        raw_cy = (min_y + max_y) / 2
        group_center[0] = ALPHA_CENTER * group_center[0] + (1 - ALPHA_CENTER) * raw_cx
        group_center[1] = ALPHA_CENTER * group_center[1] + (1 - ALPHA_CENTER) * raw_cy

        # Uniform scale set by the more limiting dimension, clamped, then smoothed
        scale_instant = min(OUT_WIDTH / group_width, OUT_HEIGHT / group_height)
        prev_scale = group_scale
        scale_instant = max(prev_scale / MAX_SCALE_CHANGE_FACTOR,
                            min(scale_instant, prev_scale * MAX_SCALE_CHANGE_FACTOR))
        group_scale = ALPHA_SCALE * prev_scale + (1 - ALPHA_SCALE) * scale_instant
    else:
        # No detections: hold the previous framing so the output keeps every frame
        # (writing the raw input frame would not match the writer's size)
        print("[WARN] No detections found; holding previous framing.")

    # Crop window with the output's aspect ratio, never larger than the frame
    scale = max(group_scale, OUT_WIDTH / frame_w, OUT_HEIGHT / frame_h)
    win_w = min(frame_w, round(OUT_WIDTH / scale))
    win_h = min(frame_h, round(OUT_HEIGHT / scale))

    # Shift (rather than clip) the window so it stays inside the frame
    x1 = min(max(0, round(group_center[0] - win_w / 2)), frame_w - win_w)
    y1 = min(max(0, round(group_center[1] - win_h / 2)), frame_h - win_h)

    # Crop and resize; the aspect ratios match, so nothing is stretched
    cropped_frame = frame[y1:y1 + win_h, x1:x1 + win_w]
    resized_frame = cv2.resize(cropped_frame, (OUT_WIDTH, OUT_HEIGHT))

    # Write frame to video
    writer.write(resized_frame)


#############################
# ----- MAIN FUNCTION ------
#############################
def main():
    pipeline = InferencePipeline.init_with_workflow(
        api_key=API_KEY,
        workspace_name=WORKSPACE_NAME,
        workflow_id=WORKFLOW_ID,
        video_reference=VIDEO_REFERENCE,
        max_fps=FPS,
        on_prediction=my_sink,
    )
    pipeline.start()
    pipeline.join()

    # Release the writer so the MP4 file is finalized
    if writer is not None:
        writer.release()
    print(f"[INFO] Group video saved to: {OUTPUT_VIDEO}")


if __name__ == "__main__":
    main()
```

This code processes the video to create a single output that dynamically pans and zooms to include all detected objects, ensuring a smooth and polished result.

Results:

Per-Object Approach: multiple output videos

The Per-Object Approach processes each detected object individually, creating a separate video for each. This is especially useful for generating personalized content, such as highlight reels for individual players, tracking specific items in a warehouse, or monitoring multiple moving targets in a surveillance feed.
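The per-object script maps class IDs to individual objects, which assumes a model with one class per subject (for example, one class per dancer). If the model reports the same class more than once in a frame, the script keeps whichever detection comes last. Keeping the most confident detection may be steadier. The sketch below assumes each prediction dict has a confidence key alongside class_id; best_per_class is a hypothetical helper.

```python
def best_per_class(predictions, class_map):
    """Hypothetical helper: keep the highest-confidence detection per mapped class.

    Assumes each prediction dict carries "class_id" and "confidence" keys;
    predictions whose class_id is not in class_map are ignored.
    """
    best = {}
    for pred in predictions:
        name = class_map.get(pred.get("class_id"))
        if name is None:
            continue
        # Replace the stored detection only if this one is more confident
        if name not in best or pred.get("confidence", 0.0) > best[name].get("confidence", 0.0):
            best[name] = pred
    return best


preds = [
    {"class_id": 0, "confidence": 0.4, "x": 100},
    {"class_id": 0, "confidence": 0.9, "x": 140},
    {"class_id": 1, "confidence": 0.7, "x": 600},
]
print({k: v["x"] for k, v in best_per_class(preds, {0: "object_1", 1: "object_2"}).items()})
```

The result has the same shape as the script's boxes_for_objects lookup (one entry per object name), so the padding and smoothing steps can be applied to it unchanged.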

Below is the full Python code for the per-object approach:

```python
import cv2
from inference import InferencePipeline

#############################
# ---- CONFIG / CONSTANTS --
#############################
API_KEY = "[YOUR_API_KEY]"
WORKSPACE_NAME = "[YOUR_WORKSPACE_NAME]"
WORKFLOW_ID = "[YOUR_WORKFLOW_ID]"
VIDEO_REFERENCE = "[YOUR_VIDEO_PATH]"

# Output resolution (portrait)
OUTPUT_WIDTH = 720
OUTPUT_HEIGHT = 1280

# Input resolution (used only to initialize the camera centers)
INPUT_WIDTH = 2560
INPUT_HEIGHT = 1440

# Smoothing & padding parameters
ZOOM_TARGET_FRACTION = 0.5     # padded box should fill about 50% of the output height
MARGIN_FACTOR = 1.3
ALPHA_CENTER = 0.95
ALPHA_SCALE = 0.95
MAX_SCALE_CHANGE_FACTOR = 1.1  # clamp the target scale to [prev / 1.1, prev * 1.1]

# Class mapping and output filenames
CLASS_MAP = {
    0: "object_1",
    1: "object_2",
    2: "object_3",
    3: "object_4",
    4: "object_5",
}
OUTPUT_FILENAMES = {
    "object_1": "object_1_output.mp4",
    "object_2": "object_2_output.mp4",
    "object_3": "object_3_output.mp4",
    "object_4": "object_4_output.mp4",
    "object_5": "object_5_output.mp4",
}

# Global smoothing state (one virtual camera per object)
camera_centers = {obj: [INPUT_WIDTH / 2, INPUT_HEIGHT / 2] for obj in CLASS_MAP.values()}
last_scales = {obj: 1.0 for obj in CLASS_MAP.values()}

# Video writers
writer_dict = {}
FPS = 30
initialized = False


#############################
# ----- SINK / CALLBACK ----
#############################
def my_sink(result, video_frame):
    global writer_dict, camera_centers, last_scales, initialized

    # The VideoFrame exposes the decoded image as a NumPy (BGR) array
    frame = video_frame.numpy_image
    if frame is None:
        return
    frame_h, frame_w = frame.shape[:2]

    # Lazy initialization of VideoWriters
    if not initialized:
        fourcc = cv2.VideoWriter_fourcc(*"mp4v")
        for obj_name, filename in OUTPUT_FILENAMES.items():
            writer_dict[obj_name] = cv2.VideoWriter(filename, fourcc, FPS, (OUTPUT_WIDTH, OUTPUT_HEIGHT))
        initialized = True
        print("[INFO] Writers initialized for individual outputs.")

    # Parse predictions into one padded, center-based box per object
    boxes_for_objects = {}
    for pred in result.get("predictions", []):
        cls_id = pred.get("class_id")
        if cls_id in CLASS_MAP:
            boxes_for_objects[CLASS_MAP[cls_id]] = (
                pred["x"],
                pred["y"],
                pred["width"] * MARGIN_FACTOR,
                pred["height"] * MARGIN_FACTOR,
            )

    # Generate an output frame for each object
    for obj_name, writer in writer_dict.items():
        if obj_name in boxes_for_objects:
            x, y, w, h = boxes_for_objects[obj_name]

            # Instantaneous scale, clamped relative to the previous scale, then smoothed
            scale_instant = (ZOOM_TARGET_FRACTION * OUTPUT_HEIGHT) / h
            prev_scale = last_scales[obj_name]
            scale_instant = max(prev_scale / MAX_SCALE_CHANGE_FACTOR,
                                min(scale_instant, prev_scale * MAX_SCALE_CHANGE_FACTOR))
            last_scales[obj_name] = ALPHA_SCALE * prev_scale + (1 - ALPHA_SCALE) * scale_instant

            # Smooth the center
            old_cx, old_cy = camera_centers[obj_name]
            camera_centers[obj_name] = [
                ALPHA_CENTER * old_cx + (1 - ALPHA_CENTER) * x,
                ALPHA_CENTER * old_cy + (1 - ALPHA_CENTER) * y,
            ]
        # If the object was not detected, keep its last framing so every
        # output video stays frame-aligned with the input

        # Crop window with the output's aspect ratio, never larger than the frame
        new_cx, new_cy = camera_centers[obj_name]
        scale = max(last_scales[obj_name], OUTPUT_WIDTH / frame_w, OUTPUT_HEIGHT / frame_h)
        win_w = min(frame_w, round(OUTPUT_WIDTH / scale))
        win_h = min(frame_h, round(OUTPUT_HEIGHT / scale))

        # Shift (rather than clip) the window so it stays inside the frame
        x1 = min(max(0, round(new_cx - win_w / 2)), frame_w - win_w)
        y1 = min(max(0, round(new_cy - win_h / 2)), frame_h - win_h)

        # Crop, resize, and write to this object's video
        cropped = frame[y1:y1 + win_h, x1:x1 + win_w]
        resized_frame = cv2.resize(cropped, (OUTPUT_WIDTH, OUTPUT_HEIGHT))
        writer.write(resized_frame)


#############################
# ----- MAIN FUNCTION ------
#############################
def main():
    pipeline = InferencePipeline.init_with_workflow(
        api_key=API_KEY,
        workspace_name=WORKSPACE_NAME,
        workflow_id=WORKFLOW_ID,
        video_reference=VIDEO_REFERENCE,
        max_fps=FPS,
        on_prediction=my_sink,
    )
    pipeline.start()
    pipeline.join()

    # Release all writers so each MP4 file is finalized
    for writer in writer_dict.values():
        writer.release()
    print("[INFO] Per-object videos saved successfully.")


if __name__ == "__main__":
    main()
```

This code processes the video to create a separate output for each detected object, ensuring smooth framing and consistent resolution for all individual videos.

Results:

Conclusion

Auto Framer demonstrates a practical approach for dynamically tracking and centering objects of interest in video footage, whether for a sports analysis tool, a security camera system, or individual focus videos for multiple objects.

By combining an object detection model with a video processing pipeline, this method produces smooth transitions and polished results for both group and per-object outputs.

By tailoring this approach, you can adapt it to suit various use cases, from creating focused individual videos to enhancing group tracking systems.

Ready to build your next computer vision project?

- Try Roboflow for free and explore our tutorials to get started.

Cite this Post

Use the following entry to cite this post in your research:

Samuel A. (Jan 13, 2025). Keep Objects Centered with Object Detection-Based Camera Framing. Roboflow Blog: https://blog.roboflow.com/keep-objects-centered-with-object-detection-based-camera-framing/