License plate detection models have a vast range of use cases, from identifying the owners of cars involved in traffic violations to determining whether a car has parked illegally in a parking lot.

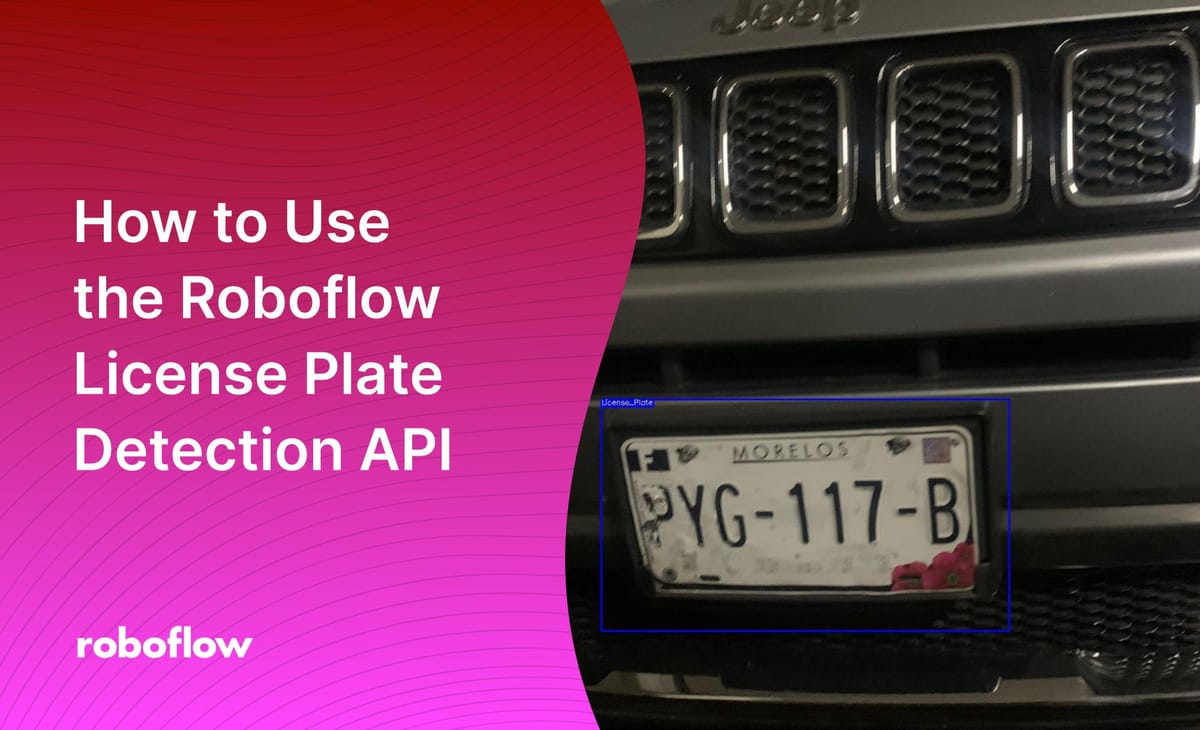

Roboflow provides a free-to-use license plate detection API on Universe, a repository of over 110,000 datasets and 11,000 pre-trained models for computer vision projects. In this guide, we’re going to demonstrate how to use the Roboflow license plate detection API to identify the region where license plates appear in an image or video.

By the end of this tutorial, we will have a list of JSON objects that contain the coordinates where license plates appear. We’ll plot these coordinates on an image to visualize them. Let’s begin!

Using the License Plate Detection API

To use the Roboflow license plate detection API, you must have a Roboflow account. You can create one by signing up on our website. Once you have an account, you will be assigned an API key that you can use to access both our license plate model and all of the other publicly available models on Roboflow Universe.

For this guide, we’ll use the "License Plate Recognition Computer Vision Project" model, trained on 10,126 images of license plates. This model aggregates images from six different license plate datasets. When benchmarked against the provided test set, this model achieves a 99.0% mAP score and 97.1% accuracy.

To start using the model, go to the model page on Universe, then click the “Model” link in the sidebar. This opens an interactive widget where you can test the model and evaluate whether it meets your needs. The widget lets you run inference on images and videos that are:

- Uploaded in the web browser;

- Accessible through a provided URL; and

- Displayed on the page in the “Samples from test set” section, a selection of four images from the test set against which the model was benchmarked.

You can also run inference in the browser using your webcam.

Once you have decided that the API meets your needs, you can begin using it programmatically. Scroll down to the section titled “Infer on Local and Hosted Images”. Run the “pip install roboflow” command to install our Python package, then copy the provided Python code snippet into a new file. The snippet will contain your API key. Here’s an example of what it looks like:

```python
from roboflow import Roboflow

rf = Roboflow(api_key="YOUR_API_KEY")
project = rf.workspace().project("license-plate-recognition-rxg4e")
model = project.version(4).model

# infer on a local image
print(model.predict("your_image.jpg", confidence=40, overlap=30).json())
```

Replace “your_image.jpg” with the name of the file on which you want to run inference. Make sure you copy the snippet from Universe, as it will contain your API key. Also, uncomment the line in the snippet that saves your prediction to a file:

```python
model.predict("your_image.jpg", confidence=40, overlap=30).save("prediction.jpg")
```

This line runs inference on our image and saves an annotated copy to a file called “prediction.jpg”, so we can visualize our predictions without manually plotting the coordinates that the Roboflow API returns.
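The confidence=40 and overlap=30 arguments above are percentages on a 0 to 100 scale, while the confidence field in each returned prediction is a fraction between 0 and 1 (0.8776 in the sample output later in this guide). If you want to apply a stricter threshold afterwards without calling the API again, a minimal sketch in plain Python could convert the percentage before comparing. The helper name `filter_predictions` is hypothetical, and the response dictionary is copied from this guide's sample output.

```python
from typing import Any


def filter_predictions(response: dict[str, Any], min_confidence_pct: float) -> list[dict[str, Any]]:
    """Keep predictions at or above a threshold given on the 0-100 scale.

    The request parameter uses percentages (e.g. confidence=40), while each
    returned prediction stores its confidence as a fraction (e.g. 0.8776),
    so the threshold is divided by 100 before comparing.
    """
    threshold = min_confidence_pct / 100
    return [p for p in response.get("predictions", []) if p["confidence"] >= threshold]


# Example response copied from the sample output shown in this guide.
response = {
    "predictions": [
        {"x": 672.5, "y": 846.5, "width": 671.0, "height": 381.0,
         "confidence": 0.87760329246521, "class": "License_Plate",
         "image_path": "image.jpg", "prediction_type": "ObjectDetectionModel"}
    ],
    "image": {"width": 1600, "height": 1200},
}

print(len(filter_predictions(response, 40)))  # 1
print(len(filter_predictions(response, 90)))  # 0
```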

Now we’re ready to run the script! For this example, we are going to use the following image of a car:

When you run the provided code snippet, you will receive a JSON object that contains the location of each prediction in the image. Here is the output for the above image:

```
{'predictions': [{'x': 672.5, 'y': 846.5, 'width': 671.0, 'height': 381.0,
                  'confidence': 0.87760329246521, 'class': 'License_Plate',
                  'image_path': 'image.jpg', 'prediction_type': 'ObjectDetectionModel'}],
 'image': {'width': 1600, 'height': 1200}}
```

The response is a single object with two keys. “predictions” is a list with one entry per license plate found in the image, and “image” records the width and height of the input image. Each prediction gives the center of the bounding box (“x”, “y”) in pixels, the box’s “width” and “height”, the model’s “confidence”, and the predicted “class”. In our example, one license plate is present. We can open the “prediction.jpg” file to see a visual representation of our predictions:

We have successfully identified the location of a license plate in our image, as demonstrated in the image above.
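Because each box is reported by its center point plus its width and height, many drawing and cropping tools first need it converted to top-left and bottom-right corners. A minimal sketch of that conversion, using the values from the output above (the helper name `to_corners` is our own):

```python
def to_corners(prediction: dict) -> tuple[float, float, float, float]:
    """Convert a center-based box to (x_min, y_min, x_max, y_max) in pixels."""
    half_w = prediction["width"] / 2
    half_h = prediction["height"] / 2
    return (
        prediction["x"] - half_w,
        prediction["y"] - half_h,
        prediction["x"] + half_w,
        prediction["y"] + half_h,
    )


plate = {"x": 672.5, "y": 846.5, "width": 671.0, "height": 381.0}
print(to_corners(plate))  # (337.0, 656.0, 1008.0, 1037.0)
```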

Deploying the Model to Production

With a working model in place, the next question is how to deploy it and run inference in production. There are plenty of ways to deploy the license plate detection model.

Out of the box, we have SDKs for deploying a model to:

- Luxonis OAK: An edge inference device with a webcam. Ideal for projects that require a high FPS during inference.

- A Raspberry Pi: A single-board computer with a small form factor, ideal for edge inference.

- iOS: Useful if you want to run your model inside an application.

- On the web using roboflow.js: Ideal if you want to run your model in a web browser, for example on a webcam feed.

- NVIDIA Jetson: An edge computing device built by NVIDIA. The Jetson is available in several versions, each offering a different level of performance.

- Docker: Roboflow offers an inference server that you can deploy through Docker. Both CPU and GPU options are available. This container is ideal for running on a range of devices, from the Raspberry Pi to the Jetson.

You can also deploy your model using our hosted API via Python. This is an especially good choice if you plan to post-process images or video (e.g. record video feeds, save them to a file, and run inference later).

You can learn more about available deployment options on the Roboflow Deploy page and in our Inference documentation.
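If you would rather not depend on the roboflow package, the hosted API can also be called over plain HTTP. The sketch below uses only the Python standard library and assumes the route shape of Roboflow's hosted detection endpoint (https://detect.roboflow.com/ followed by the model ID and version, with the API key and thresholds as query parameters and a base64-encoded image as the request body); check the Inference documentation before relying on it. `build_request` and `detect` are hypothetical helper names.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_request(image_bytes: bytes, api_key: str,
                  model_id: str = "license-plate-recognition-rxg4e", version: int = 4,
                  confidence: int = 40, overlap: int = 30,
                  base_url: str = "https://detect.roboflow.com") -> urllib.request.Request:
    """Build (but do not send) a POST request to the hosted detection endpoint.

    Assumption: the endpoint accepts a base64-encoded image as a
    form-urlencoded body, with the model, version and thresholds in the URL.
    """
    query = urllib.parse.urlencode(
        {"api_key": api_key, "confidence": confidence, "overlap": overlap}
    )
    url = f"{base_url}/{model_id}/{version}?{query}"
    body = base64.b64encode(image_bytes)
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def detect(image_path: str, api_key: str) -> dict:
    """Send one saved image (e.g. a frame from a recorded video) and parse the JSON reply."""
    with open(image_path, "rb") as f:
        request = build_request(f.read(), api_key)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

For recorded video, you could save frames as image files and call `detect` on each one in a loop, keeping network access out of the request-building step so it is easy to test.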

Next Steps After Inference

Now that we know where the license plate appears in an image, we can use that information to perform an additional action. One common use case is to run Optical Character Recognition (OCR) on the region that contains the license plate, allowing us to retrieve the text on the plate programmatically.

To do this, you need to:

- Crop each license plate in an image into its own image, so you can run OCR on each plate in isolation without mixing up text from different plates.

- Run each license plate image through an OCR model such as Google’s Cloud Vision OCR tool.
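The cropping step only needs the box coordinates and the image size from the API response. The sketch below is plain Python; `crop_boxes` and its `padding` parameter are our own hypothetical choices. It turns each prediction into an integer (left, top, right, bottom) box, the order Pillow's `Image.crop` expects, clamped to the image bounds so every box is a valid crop region.

```python
def crop_boxes(predictions: list[dict], image_width: int, image_height: int,
               padding: int = 0) -> list[tuple[int, int, int, int]]:
    """Return one (left, top, right, bottom) pixel box per detected plate.

    Boxes are widened by `padding` pixels on each side, rounded to whole
    pixels and clamped to the image so they are always valid crop regions.
    """
    boxes = []
    for p in predictions:
        left = max(0, round(p["x"] - p["width"] / 2) - padding)
        top = max(0, round(p["y"] - p["height"] / 2) - padding)
        right = min(image_width, round(p["x"] + p["width"] / 2) + padding)
        bottom = min(image_height, round(p["y"] + p["height"] / 2) + padding)
        boxes.append((left, top, right, bottom))
    return boxes


response = {
    "predictions": [{"x": 672.5, "y": 846.5, "width": 671.0, "height": 381.0}],
    "image": {"width": 1600, "height": 1200},
}
size = response["image"]
print(crop_boxes(response["predictions"], size["width"], size["height"], padding=10))
# [(327, 646, 1018, 1047)]
```

Each box can then be passed to the crop function of your imaging library, and the resulting plate images sent to the OCR tool of your choice. A small amount of padding may help when the detected box sits tightly around the characters.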

License Plate Detection Applications

Using the output of our model, combined with OCR, we could:

- Accurately record the license plates of cars that have committed a traffic violation (e.g. speeding or running a red light) so that details about the incident can be forwarded to a traffic officer for review.

- Track when a stolen car is identified by a camera monitoring a road (e.g. a camera mounted on top of traffic lights).

- Record when cars park in a parking lot without having paid to park.
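Several of these applications come down to comparing the text read from a plate against a list, such as a list of stolen vehicles. OCR output often varies in case, spacing, and punctuation, so a minimal sketch normalizes both sides before comparing. The helper names and the watchlist below are hypothetical examples, not part of any Roboflow API.

```python
import re


def normalize_plate(text: str) -> str:
    """Uppercase OCR output and drop everything except A-Z and 0-9."""
    return re.sub(r"[^A-Z0-9]", "", text.upper())


def flagged_plates(ocr_results: list[str], watchlist: set[str]) -> list[str]:
    """Return the normalized plates that appear on the (normalized) watchlist."""
    normalized_watchlist = {normalize_plate(p) for p in watchlist}
    return [plate for plate in map(normalize_plate, ocr_results)
            if plate in normalized_watchlist]


stolen = {"ABC 1234", "XYZ-987"}  # made-up example watchlist
print(flagged_plates(["abc1234", "JKL 555", "xyz 987"], stolen))
# ['ABC1234', 'XYZ987']
```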

There are many more use cases that you could explore with our license plate detection API, too. Our Templates library collects over a dozen guides that show common patterns used with computer vision models. We encourage you to explore the library to find utilities that may be useful for your project (e.g. cropping regions of interest or saving inference results to a Google Sheet).
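As a simpler, swapped-in alternative to a Google Sheet that needs no credentials, the sketch below logs predictions to CSV with Python's standard csv module; the column selection and the `write_predictions_csv` name are our own choices. A CSV file can later be imported into Google Sheets.

```python
import csv
import io
from typing import TextIO

# Columns to keep from each prediction; other keys are ignored.
FIELDS = ["image_path", "class", "confidence", "x", "y", "width", "height"]


def write_predictions_csv(predictions: list[dict], out: TextIO) -> None:
    """Write a header row, then one CSV row per prediction."""
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(predictions)


predictions = [{"x": 672.5, "y": 846.5, "width": 671.0, "height": 381.0,
                "confidence": 0.87760329246521, "class": "License_Plate",
                "image_path": "image.jpg", "prediction_type": "ObjectDetectionModel"}]
buffer = io.StringIO()
write_predictions_csv(predictions, buffer)
print(buffer.getvalue())
```

To write to disk instead, open the file with `open("plates.csv", "w", newline="")` and pass the file object in place of the in-memory buffer.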

Conclusion

In this guide, we have demonstrated how to use the Roboflow license plate detection model to identify the coordinates of all of the license plates in an image. This model can be run in the browser on Universe for testing, then deployed to a range of devices from the Raspberry Pi to the Luxonis OAK camera.

While designing your application and considering deployment, you can build logic on top of the model that performs an additional action after a prediction is made. Now you have the knowledge you need to start detecting license plates in Python using our API!


Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 20, 2023). How to Use the Roboflow License Plate Detection API. Roboflow Blog: https://blog.roboflow.com/license-plate-detection-api/
