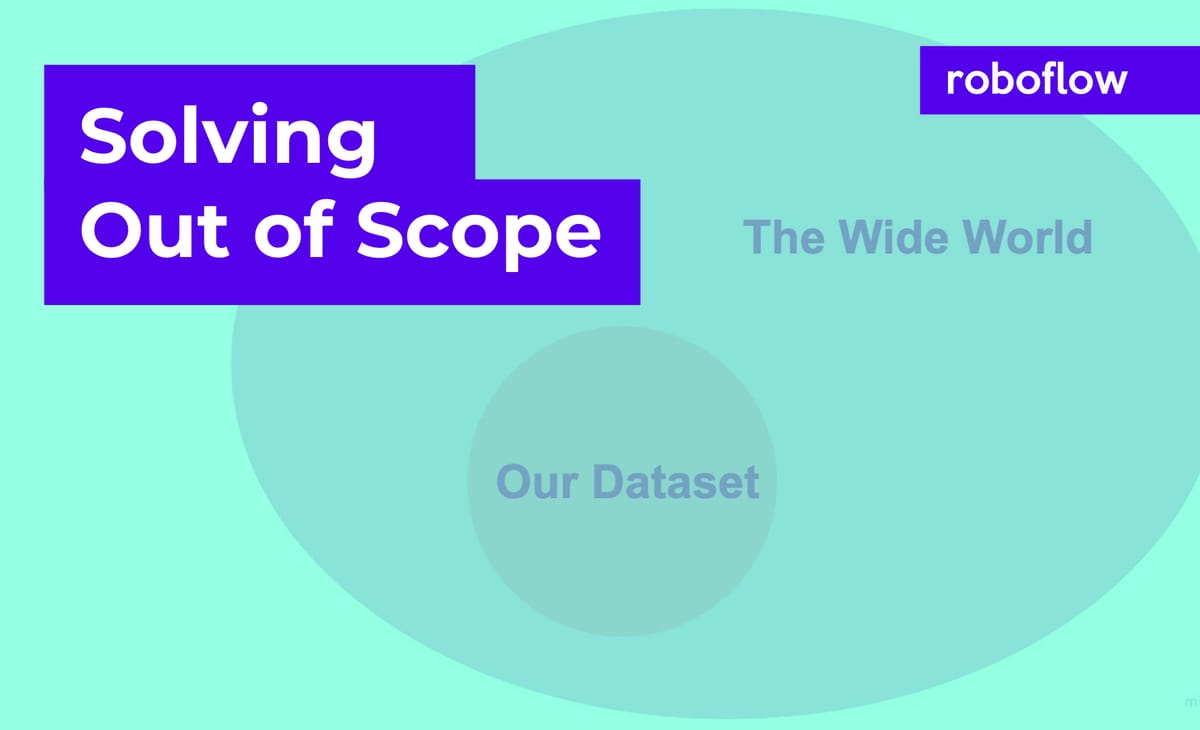

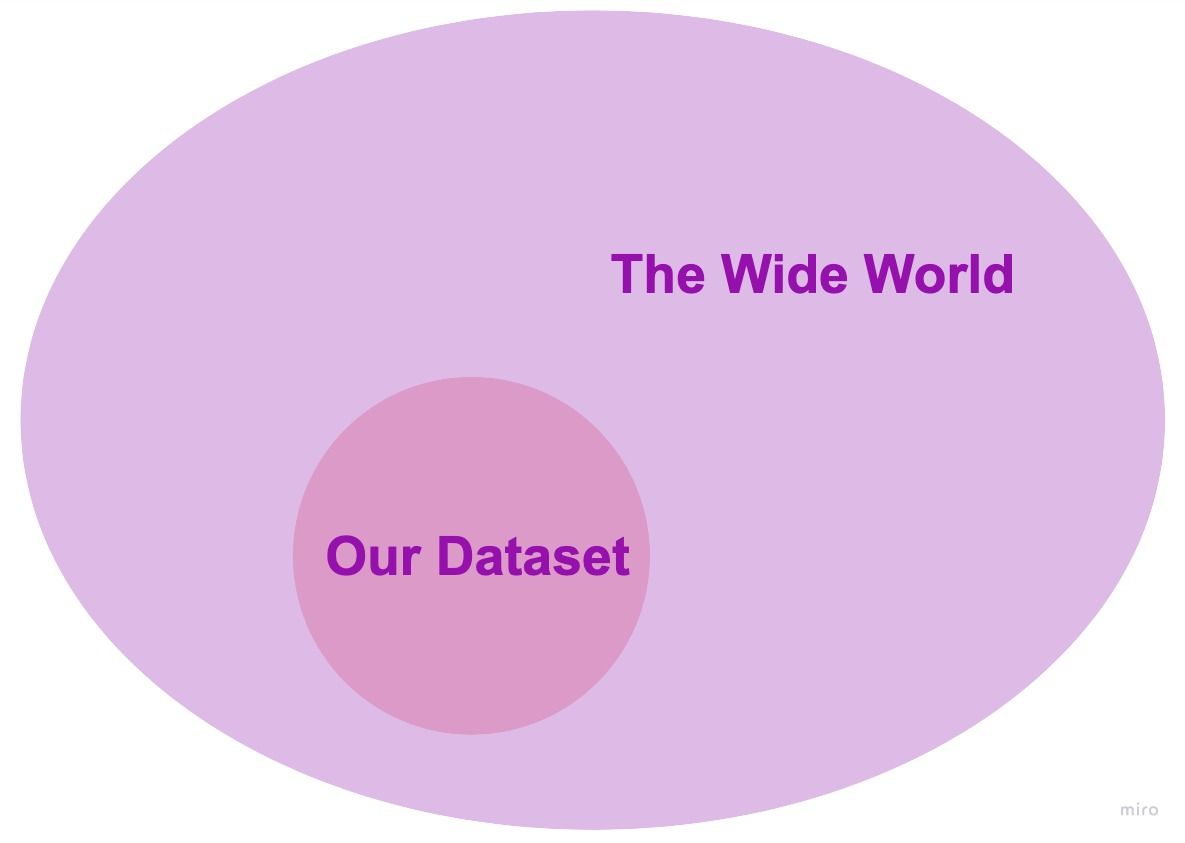

When we are teaching a machine learning model to recognize items of interest, we often take a laser focus towards gathering a dataset that is representative of the task we want our algorithm to master.

Through the dataset assembly process, our laser focus might miss a whole host of edge cases that we would naturally consider out-of-scope for our model. While out-of-scope instances are intuitive to us, our model has no way of knowing anything beyond the scope of what it has been shown.

Getting Real World - An Example

So far, I've been speaking in pretty broad abstractions. Let's get a little more concrete.

Take Roboflow's example Raccoons Object Detection Dataset.

We were so laser focused in constructing this dataset that we only gathered photos of raccoons, and to no one's surprise, the model trained on it does an extremely good job on the dataset's test set of raccoons.

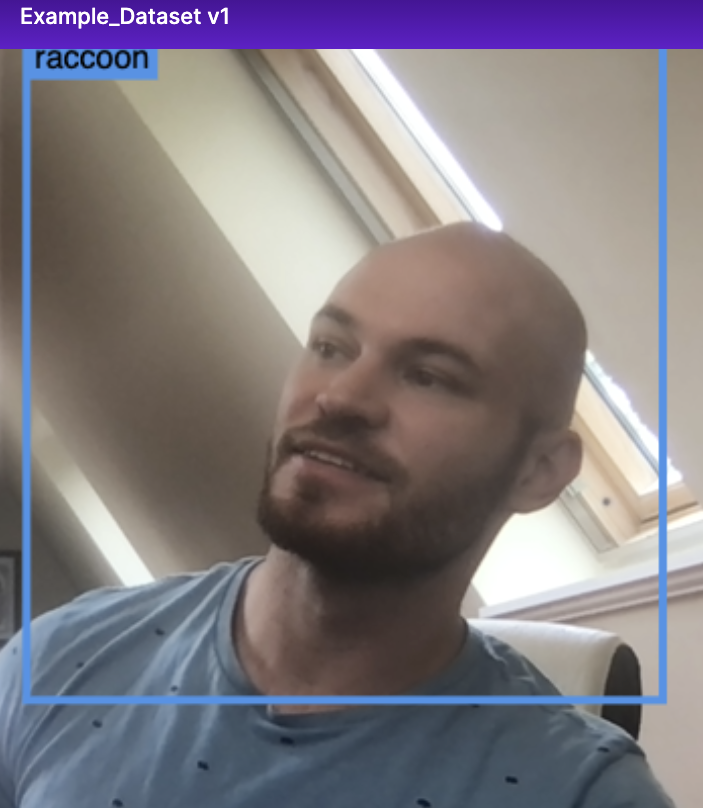

But when you open the raccoons model up in real life on your webcam, you'll find that it thinks you are indeed a raccoon.

This problem plagues machine learning models all over the place, whether it be detection of out-of-scope logos, misidentification of missing screws, or accidentally deterring your dog when you're trying to deter rabbits from eating your vegetables.

Let's dive into how you can solve it.

Solution 1: Construct a Representative Test Set

The biggest step you can take toward solving the out-of-scope problem is to gather a representative test set, especially if your test set's images do not contain your target objects or class. That is, a test set that is fully representative of the deployment environment where your model will live. It can be tempting to cut corners on this step and, for example, just gather images from OpenImages.

Gathering images that are similar to your problem, but not directly sourced from your cameras (or website submissions, etc.) is a good way to prototype your model, but in order to go fully into production, you will need to eventually gather data directly.
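
One way to put a number on the out-of-scope problem is to measure how often the model fires on test images that contain no target at all. The sketch below assumes a hypothetical prediction format (image id mapped to a list of confidence scores) and an illustrative threshold of 0.5; neither comes from a specific library.

```python
from typing import Dict, List

# Hypothetical prediction format: image id -> confidence score of each
# predicted box. This is an assumption, not the output of a real library.
Predictions = Dict[str, List[float]]


def null_false_positive_rate(
    predictions: Predictions,
    null_image_ids: List[str],
    confidence_threshold: float = 0.5,  # illustrative threshold
) -> float:
    """Fraction of null (no-target) images with at least one confident detection."""
    if not null_image_ids:
        raise ValueError("need at least one null image to measure false positives")
    flagged = 0
    for image_id in null_image_ids:
        scores = predictions.get(image_id, [])
        if any(score >= confidence_threshold for score in scores):
            flagged += 1
    return flagged / len(null_image_ids)


# Made-up predictions from a raccoon detector on images with no raccoons.
preds = {
    "person_at_webcam.jpg": [0.91],
    "empty_backyard.jpg": [],
    "dog_on_lawn.jpg": [0.32],
    "cat_on_fence.jpg": [0.77, 0.12],
}
rate = null_false_positive_rate(preds, list(preds))
print(f"{rate:.2f}")  # 0.50
```

A rate well above zero on images pulled from your real deployment environment is a sign that the test set used during development was not representative.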

Solution 2: Restrict your Deployment Domain

You can always help your model by narrowing the domain in which it needs to make predictions. For example, monitoring rabbits in your ever-shifting backyard might be a hard task to handle, but identifying manufacturing defects in the constrained environment of a conveyor belt might be much easier.

Solution 3: Gather Null Out-of-Scope Data

If your model is suffering from the out-of-scope problem at test time, we recommend gathering training images that show null examples. In a classification dataset, you will actually label these images as the "out-of-scope" class, to teach your model what is in and out of its scope. In an object detection, keypoint detection, or instance segmentation dataset, you will simply leave these images without annotations.

Showing your model null examples will teach it to lay off the trigger when making predictions.

In the raccoons example above, it would be useful to provide some images of people that are unlabeled, tempering our model to the out-of-scope.
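
As a rough sketch of the two cases, the snippet below adds a null image to a classification dataset with an explicit "out-of-scope" label and to a detection dataset with an empty annotation list. The dictionary layout, box format, and file names are hypothetical and only meant to illustrate the idea; real annotation formats differ.

```python
from typing import Dict, List, Tuple

# Hypothetical box format: (class_name, x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[str, int, int, int, int]

OUT_OF_SCOPE = "out-of-scope"  # class name taken from the text above


def build_classification_labels(
    in_scope: Dict[str, str], null_images: List[str]
) -> Dict[str, str]:
    """Classification: null images get an explicit out-of-scope label."""
    labels = dict(in_scope)
    for image_id in null_images:
        labels[image_id] = OUT_OF_SCOPE
    return labels


def build_detection_annotations(
    annotated: Dict[str, List[Box]], null_images: List[str]
) -> Dict[str, List[Box]]:
    """Detection: null images stay in the dataset with zero boxes."""
    annotations = {image_id: list(boxes) for image_id, boxes in annotated.items()}
    for image_id in null_images:
        annotations[image_id] = []  # present in the dataset, nothing to detect
    return annotations


nulls = ["person_at_webcam.jpg"]
cls_labels = build_classification_labels({"raccoon_01.jpg": "raccoon"}, nulls)
det_annotations = build_detection_annotations(
    {"raccoon_01.jpg": [("raccoon", 40, 60, 310, 420)]}, nulls
)
print(cls_labels["person_at_webcam.jpg"])       # out-of-scope
print(det_annotations["person_at_webcam.jpg"])  # []
```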

Pitfall: Drowning Your Dataset with Nulls

You need to be careful not to provide your model with too many null examples. You can reach a point during training where the model's learned prior toward making no predictions overwhelms its incentive to make any predictions at all.
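
One simple guard is to cap the share of null images before training. The sketch below subsamples nulls so they make up at most a given percentage of the combined dataset. The 10% default is purely illustrative and not a recommendation from this post; the right value depends on your data.

```python
import random
from typing import List


def cap_null_examples(
    labeled: List[str],
    nulls: List[str],
    max_null_percent: int = 10,  # illustrative value, an assumption
    seed: int = 0,
) -> List[str]:
    """Return a subset of nulls that is at most max_null_percent of the full dataset."""
    if not 0 <= max_null_percent < 100:
        raise ValueError("max_null_percent must be in [0, 100)")
    # Solve n_null / (n_labeled + n_null) <= p / 100 for n_null, in integers.
    max_nulls = (max_null_percent * len(labeled)) // (100 - max_null_percent)
    if len(nulls) <= max_nulls:
        return list(nulls)
    # Seeded sampling keeps the chosen subset reproducible across runs.
    return random.Random(seed).sample(nulls, max_nulls)


labeled_images = [f"raccoon_{i:03d}.jpg" for i in range(90)]
null_images = [f"null_{i:03d}.jpg" for i in range(50)]
kept_nulls = cap_null_examples(labeled_images, null_images)
print(len(kept_nulls))  # 10, i.e. 10 nulls out of 100 total images
```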

Solution 4: Actively Label Problematic Out-of-Scope Data

If you have a particular out-of-scope instance that is plaguing your model, you may want to consider actively labeling this case as its own class or object.

Let's say, for example, that we want to detect the presence of screws in a phone and we label all the instances of screws. At test time, we find that the model is predicting missing screws as screws as well. We conclude that the model has learned to predict a screw simply based on location context. We can solve this problem by actively labeling "missing-screw" in our training set.

Solution 5: Active Learning

Machine learning models are ever evolving. When you deploy your model, you will inevitably run into new out-of-scope edge cases that you did not anticipate when assembling your training set. The process of actively identifying these problems, gathering data, re-training, and re-deploying your model is called active learning, and it is crucial to designing a production-grade computer vision model.
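
The "identifying these problems" step can be partly automated. One common heuristic, shown here as a sketch rather than as the method used in this post, is to queue deployment images for human review when the model's top confidence is closest to the decision boundary. The prediction format, the band limits, and the review budget are all assumptions.

```python
from typing import Dict, List

# Hypothetical prediction format: image id -> confidence score of each
# predicted box collected from the deployed model.
Predictions = Dict[str, List[float]]


def select_for_review(
    predictions: Predictions,
    low: float = 0.3,   # illustrative lower edge of the "uncertain" band
    high: float = 0.7,  # illustrative upper edge of the "uncertain" band
    budget: int = 2,    # how many images a human can label this round
) -> List[str]:
    """Pick the images whose top confidence sits closest to 0.5."""
    candidates = []
    for image_id, scores in predictions.items():
        if not scores:
            continue  # no detections, so no confidence to rank by
        top = max(scores)
        if low <= top <= high:
            candidates.append((abs(top - 0.5), image_id))
    candidates.sort()  # most uncertain first
    return [image_id for _, image_id in candidates[:budget]]


deployment_predictions = {
    "frame_a.jpg": [0.95],
    "frame_b.jpg": [0.52],
    "frame_c.jpg": [0.35, 0.10],
    "frame_d.jpg": [],
    "frame_e.jpg": [0.68],
}
review_queue = select_for_review(deployment_predictions)
print(review_queue)  # ['frame_b.jpg', 'frame_c.jpg']
```

Confident false positives, like the webcam example earlier, fall outside this band, so a random sample of high-confidence predictions is also worth reviewing.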

Conclusion

The out-of-scope problem is one that plagues machine learning models far and wide. In this blog, we've discussed why the out-of-scope problem occurs and we've discussed tactical steps to solving it:

- Construct a Representative Test Set

- Restrict your Deployment Domain

- Gather Null Out-of-Scope Data

- Actively Label Problematic Out-of-Scope Data

- Active Learning

Thanks for reading our primer on the out-of-scope problem. Happy training and, of course, happy inferencing!

Cite this Post

Use the following entry to cite this post in your research:

Jacob Solawetz. (Jul 16, 2021). Solving the Out of Scope Problem. Roboflow Blog: https://blog.roboflow.com/out-of-scope/