For years, my father has been fascinated by the wildlife that roams around his house: birds, cats, raccoons, and more. That curiosity eventually led him to install multiple webcams around the outside of his home to capture any wildlife exploring the area.

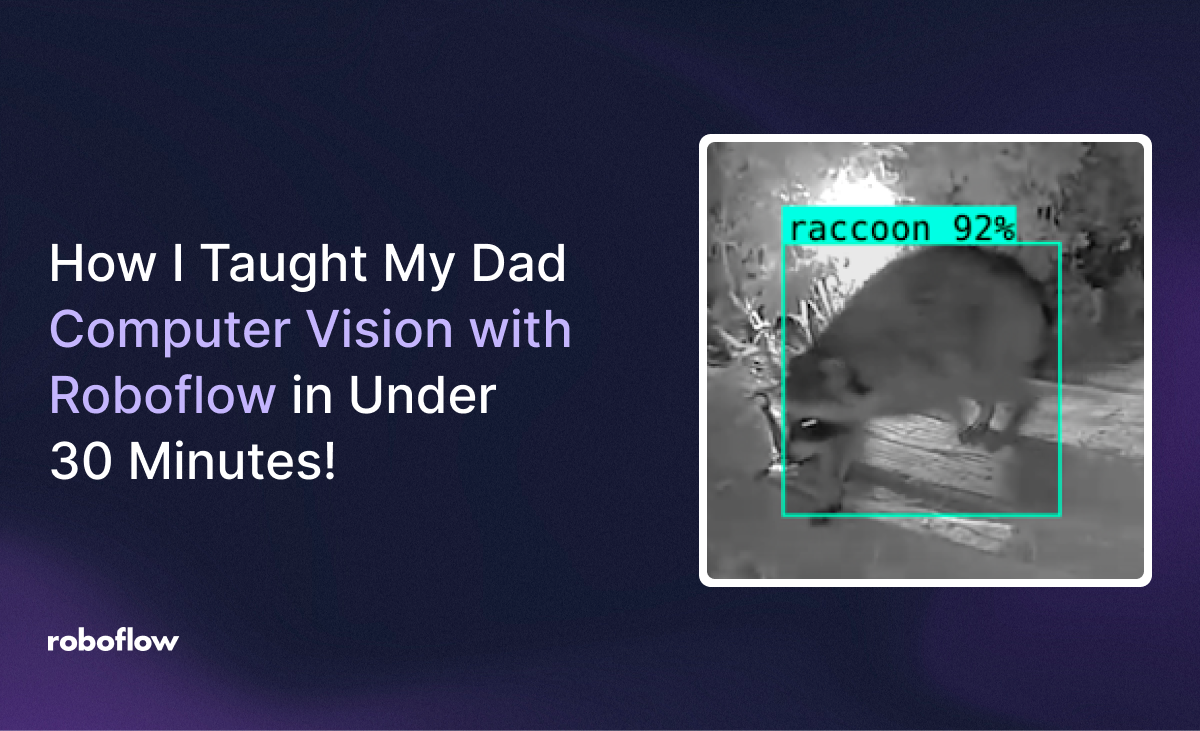

When I told him we could use computer vision to automatically identify these animals, and even send him notifications when they appear, he was intrigued. His main concern was that he knew nothing about coding, let alone developing computer vision models. Here is the system we went on to build, identifying raccoons in our backyard:

Our Model Successfully Tracking a Raccoon!

I have years of professional and academic engineering experience and now work as a Solutions Architect at Roboflow, but my father has no experience with computer science or deep learning.

With Roboflow, we built, trained, and deployed a working model that identifies different animals in under 30 minutes, without writing a single line of code. The best part? He didn’t need any technical background to do it!

Roboflow is designed for both technical and non-technical users. For beginners like my dad, the interface guides you through the entire process, from uploading images to training a model and deploying it for real-time use. For engineers like me, Roboflow provides tools to fine-tune models, integrate with APIs, and automate workflows, so it works for any level of expertise.

Whether you’re a complete beginner or a seasoned developer, Roboflow makes it possible to bring computer vision projects to life with ease.

Getting Started with Roboflow

To kick off our project, my dad created a free public Roboflow account. One of the first big challenges with any computer vision project is collecting an image dataset on which to train.

Roboflow simplifies data upload: you can upload images and videos directly from a local drive, through the Upload API, or from various cloud providers. By uploading his collection of webcam videos, my dad quickly extracted dozens of frames to train on.
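Pulling training images out of video amounts to sampling frames at a lower rate than the camera recorded, since consecutive frames are nearly identical. The Python below is a minimal sketch of that idea, not Roboflow's implementation; the `sample_frame_indices` helper and the example frame rates are assumptions for illustration.

```python
import math


def sample_frame_indices(total_frames, source_fps, target_fps):
    """Return the indices of the frames to keep when sampling a video at target_fps."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps >= source_fps:
        # Cannot sample faster than the source was recorded, so keep every frame.
        return list(range(total_frames))
    step = source_fps / target_fps  # number of source frames per kept frame
    count = math.ceil(total_frames / step)
    return [int(i * step) for i in range(count)]


# A hypothetical 2-minute webcam clip recorded at 30 fps, sampled at 1 frame per second.
clip_frames = 2 * 60 * 30
kept = sample_frame_indices(clip_frames, source_fps=30, target_fps=1)
print(len(kept), kept[:3])  # 120 [0, 30, 60]
```

Sampling sparsely like this keeps the dataset varied without flooding the labeling queue with near-duplicate frames.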

Once the images were uploaded to our Workspace, we had a few options for annotating our objects of interest and assigning them to classes. Datasets from Roboflow Universe come already labeled, but our images were unique, so we had to label them ourselves: manually, with auto labeling, or by paying a professional team to do it for us.

For this project, we chose manual labeling, but I highly recommend the “How To Annotate Images with Your Team Using Roboflow” blog post for additional insights.

One way Roboflow dramatically speeds up manual annotation, while also improving its accuracy, is the Smart Polygon feature. This AI tool, powered by the Segment Anything Model (SAM), can cut annotation time by 75 percent or more!

Creating a Smart Polygon Mask in Seconds

Once we had a labeled dataset, the next step was preparing the images for model training. Typically, this involves several complex steps: splitting data into train/validation/test sets, applying preprocessing (like resizing and normalization), and augmenting the dataset with techniques like rotation, flipping, and exposure adjustments to improve generalization.
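To make the splitting step concrete, here is a minimal Python sketch of a reproducible random train/validation/test split. It only illustrates the idea and is not how Roboflow implements splitting; the `split_dataset` helper, the 70/20/10 ratios, and the frame filenames are assumptions.

```python
import random


def split_dataset(items, train=0.7, valid=0.2, seed=42):
    """Shuffle items and split them into train, validation, and test lists.

    Whatever remains after the train and valid fractions becomes the test set.
    """
    if train < 0 or valid < 0 or train + valid > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    shuffled = list(items)  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(shuffled)  # seeded RNG makes the split reproducible
    n_train = round(len(shuffled) * train)
    n_valid = round(len(shuffled) * valid)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_valid],
        shuffled[n_train + n_valid:],
    )


# Hypothetical frame names extracted from the webcam videos.
frames = [f"frame_{i:03d}.jpg" for i in range(50)]
train_set, valid_set, test_set = split_dataset(frames)
print(len(train_set), len(valid_set), len(test_set))  # 35 10 5
```

Shuffling before slicing matters here: webcam frames arrive in time order, so an unshuffled split would put whole nights or whole visits into a single subset.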

For someone like my dad, this would normally be overwhelming, but with Roboflow it was effortless. The platform split the dataset automatically, setting aside separate images for validation and testing so the model is evaluated on data it never trained on. Preprocessing and augmentation were just a matter of selecting checkboxes, and Roboflow took care of the rest. For more on why these steps matter, see the blog post “What is Image Preprocessing and Augmentation?”
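Behind those checkboxes, an augmentation such as a horizontal flip has to move the bounding boxes along with the pixels, or the labels would no longer line up with the animal. The toy sketch below shows that, plus a simple exposure adjustment, on a tiny grayscale image stored as nested lists. It is not Roboflow's implementation; the function names and the `(x_min, y_min, x_max, y_max)` pixel box format with an exclusive `x_max` are assumptions.

```python
def hflip(image, boxes):
    """Mirror an image left to right and move its bounding boxes to match.

    image: list of rows, each row a list of grayscale pixel values.
    boxes: list of (x_min, y_min, x_max, y_max) tuples in pixel coordinates,
           where x_max is exclusive (one past the last covered column).
    """
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    # Column x moves to width - 1 - x, so the range [x_min, x_max)
    # becomes [width - x_max, width - x_min); y coordinates are unchanged.
    new_boxes = [(width - x_max, y_min, width - x_min, y_max)
                 for x_min, y_min, x_max, y_max in boxes]
    return flipped, new_boxes


def adjust_exposure(image, factor):
    """Scale pixel brightness by factor, clamped to the valid 0-255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in image]


frame = [[10, 20, 30, 40],
         [50, 60, 70, 80]]
raccoon_box = [(0, 0, 2, 2)]  # hypothetical label covering the left half
flipped, flipped_boxes = hflip(frame, raccoon_box)
print(flipped)        # [[40, 30, 20, 10], [80, 70, 60, 50]]
print(flipped_boxes)  # [(2, 0, 4, 2)]
print(adjust_exposure(frame, 4))  # [[40, 80, 120, 160], [200, 240, 255, 255]]
```

Exposure changes leave boxes untouched because no pixel moves, which is why only geometric augmentations need the extra box bookkeeping.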

Our Results

In under half an hour, we had our own custom model, with no coding knowledge required! Roboflow made the process seamless. Our initial performance metrics looked strong, so we tested the model on a brand-new video to see what it could do.

Additional Results on a Wild Cat

Future Possibilities

Success! My dad was genuinely impressed, especially since an hour earlier he hadn’t even known what computer vision was. Building a custom model used to require extensive knowledge of coding, data science, and machine learning frameworks, but Roboflow streamlined the whole process (dataset creation, annotation, training, and deployment) into intuitive, step-by-step stages.

For more technical users, Roboflow still offers plenty of flexibility. To fine-tune our model further, we could experiment with different preprocessing and augmentation steps, deploy it on a local edge device or through a hosted API, or build a no-code Roboflow Workflow for more advanced automation.

As a next step, I’d challenge my dad to build a Workflow that sends a notification to his phone whenever one of his webcams spots an animal. Still, the best part is that he didn’t need to worry about any of that to get meaningful results.
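A Workflow would handle alerts without code, but for readers curious about the logic underneath, here is a minimal Python sketch. It assumes a hypothetical detection format (a dict with a class name and a confidence score per prediction), a confidence threshold, and a per-class cooldown so one raccoon lingering on camera does not trigger a notification every frame. None of these names come from Roboflow's API, and actually sending the phone notification is left out.

```python
from dataclasses import dataclass, field


@dataclass
class AlertGate:
    """Hypothetical helper: decides which detections should trigger a notification.

    Fires at most once per class within each cooldown window.
    """
    classes: set                  # animal classes worth alerting on
    min_confidence: float = 0.5   # ignore predictions below this score
    cooldown_s: float = 300.0     # seconds to stay quiet for a class after alerting
    _last_sent: dict = field(default_factory=dict)

    def check(self, detections, now):
        """Return the classes that should trigger an alert at time `now` (in seconds)."""
        to_alert = []
        for det in detections:
            name, conf = det["class"], det["confidence"]
            if name not in self.classes or conf < self.min_confidence:
                continue
            last = self._last_sent.get(name)
            if last is None or now - last >= self.cooldown_s:
                self._last_sent[name] = now  # start a new cooldown window
                to_alert.append(name)
        return to_alert


gate = AlertGate(classes={"raccoon", "cat"}, min_confidence=0.6, cooldown_s=300)
frame_1 = [{"class": "raccoon", "confidence": 0.91}, {"class": "raccoon", "confidence": 0.88}]
print(gate.check(frame_1, now=0))    # ['raccoon']
print(gate.check(frame_1, now=60))   # [] (still in cooldown)
print(gate.check([{"class": "cat", "confidence": 0.4}], now=61))  # [] (below threshold)
print(gate.check(frame_1, now=300))  # ['raccoon']
```

The cooldown is the design choice that matters most here: without it, a single visit would produce a notification for every processed frame.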

This experience also got us thinking about potential improvements. Could we add more images and classes to differentiate species? What if we fine-tuned the model with additional nighttime images to improve low-light detection? How could we improve the model with active learning?
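One common flavor of active learning is uncertainty sampling: run the current model on new, unlabeled frames and send the ones it is least sure about back for labeling. The minimal Python sketch below assumes a hypothetical mapping from frame names to the highest confidence the model gave any detection in that frame, plus an uncertainty band of 0.3 to 0.7; the helper name, the band, and the scores in the usage example are made up for illustration and are not Roboflow settings or our model's results.

```python
def select_for_labeling(frame_scores, low=0.3, high=0.7, budget=10):
    """Pick frames whose top detection confidence falls in an uncertain band.

    frame_scores: dict mapping frame name -> highest detection confidence in that frame.
    Returns up to `budget` frame names, most uncertain (closest to 0.5) first.
    """
    uncertain = [(name, s) for name, s in frame_scores.items() if low <= s <= high]
    # Distance from 0.5 serves as a simple proxy for how unsure the model is.
    uncertain.sort(key=lambda item: abs(item[1] - 0.5))
    return [name for name, _ in uncertain[:budget]]


# Made-up scores for illustration only.
scores = {
    "night_001.jpg": 0.42,
    "night_002.jpg": 0.55,
    "day_001.jpg": 0.97,
    "day_002.jpg": 0.12,
    "night_003.jpg": 0.68,
}
print(select_for_labeling(scores, budget=2))  # ['night_002.jpg', 'night_001.jpg']
```

Confident frames (very high or very low scores) are skipped because labeling them teaches the model little it does not already know.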

The possibilities are endless, and thanks to Roboflow, iterating on our model is as easy as adding new images and retraining with a few clicks. Roboflow empowers everyone to think like a data scientist.

Cite this Post

Use the following entry to cite this post in your research:

Joe Wayne. (Mar 14, 2025). How I Taught My Dad Computer Vision with Roboflow in Under 30 Minutes!. Roboflow Blog: https://blog.roboflow.com/teaching-my-dad-computer-vision/