Multimodal vision models allow you to ask questions about the contents of an image. For example, consider a system that monitors a front porch for packages. You could use a multimodal vision model to identify whether a package is present, the color of the package, and where the package is in relation to other parts of the image (e.g., is the package on the porch or on the grass?).

Multimodal models can answer questions about what is in an image, how the objects in an image relate, and more. You can ask for rich descriptions that reflect the contents of an image. In computer vision, this task is known as Visual Question Answering (VQA).

Using Multimodal Models for VQA

One multimodal architecture you can use for VQA is PaliGemma, developed by Google and released in 2024. The PaliGemma architecture has been used to train vision models for specific tasks like VQA, website screenshot understanding, and document understanding. These models can be run on your own hardware, in contrast to proprietary hosted models like GPT-4 with Vision.

In this guide, we are going to walk through how to use PaliGemma for VQA. We will use Roboflow Inference, an open-source computer vision inference server. You can use Inference to run vision models on your own hardware.

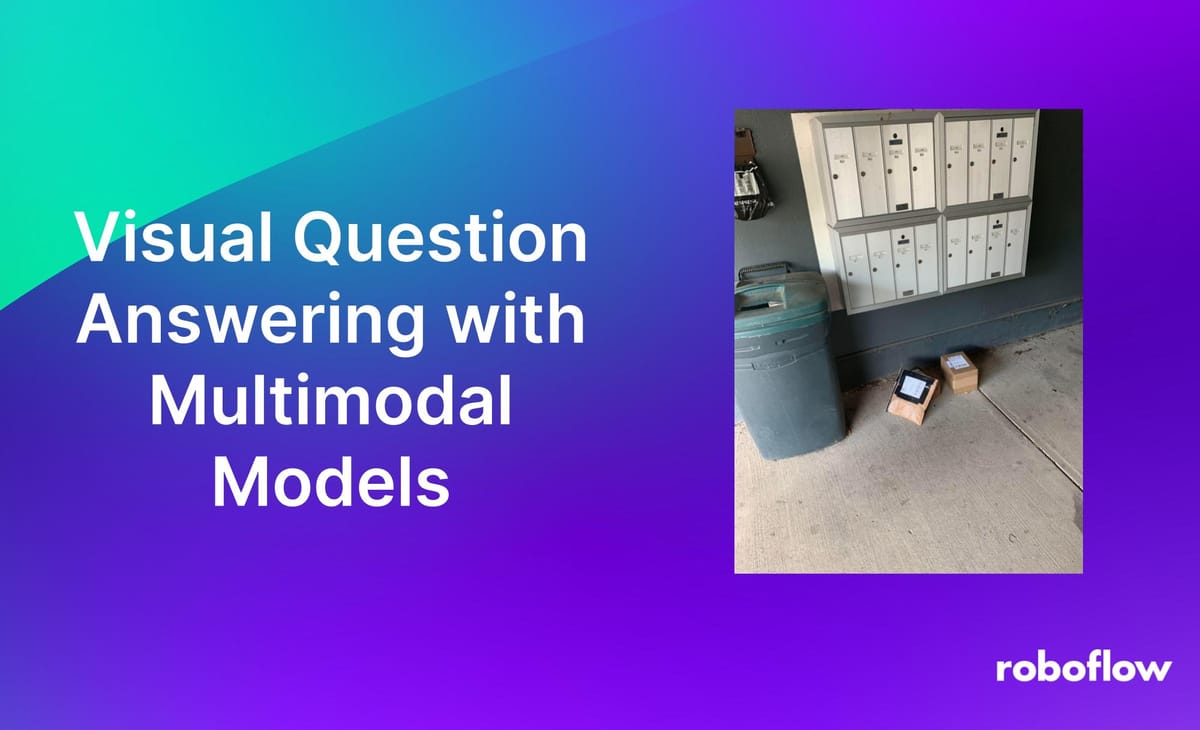

For example, suppose we want to understand whether an image contains a package. We could ask the model the question "Does this image contain a package?"

The model returns "yes." The model successfully identified that the image contains a package.

Note that the model did not identify the precise location of the package. For such tasks, you need to train an object detection model. Refer to our PaliGemma object detection guide to learn more about object localisation with PaliGemma.

Step #1: Install Inference

First, we need to install Inference. Inference is distributed both as a Python package, which you can use to integrate your model directly into your application, and as a Docker container.

The Docker container is ideal for building enterprise-grade systems where you need dedicated servers that will handle requests from multiple clients. For this guide, we are going to use the Python package.

To install the Inference Python package, run the following command:

pip install git+https://github.com/roboflow/inference --upgrade -q

We also need to install a few additional dependencies that the PaliGemma model will use. The version specifier is quoted so that the shell does not treat ">" as an output redirect:

pip install "transformers>=4.41.1" accelerate onnx peft timm flash_attn einops -q

With Inference installed, we can start building logic to use PaliGemma for VQA.

Step #2: VQA with PaliGemma

Create a new Python file and add the following code:

import json
import os

from inference import get_model
from PIL import Image

# Load the PaliGemma weights tuned for VQA. Replace KEY with your Roboflow API key.
lora_model = get_model("paligemma-3b-ft-vqav2-448", api_key="KEY")

In the code above, we import the Inference Python package, then initialize an instance of a PaliGemma model. The model identifier passed to get_model, paligemma-3b-ft-vqav2-448, refers to the model weights tuned for VQA.

Above, replace KEY with your Roboflow API key. Learn how to retrieve your Roboflow API key.
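If you would rather not hard-code the key in your script, one option is to read it from an environment variable. The sketch below assumes you have stored the key in a variable named ROBOFLOW_API_KEY; the helper name load_api_key is hypothetical and is not part of Inference.

```python
import os


def load_api_key(env_var: str = "ROBOFLOW_API_KEY") -> str:
    """Return the API key stored in `env_var`, or raise a clear error if it is unset."""
    # Assumption: the key was exported under this name before the script started.
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Roboflow API key."
        )
    return key
```

You could then pass api_key=load_api_key() in place of api_key="KEY" when loading the model.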

When you first run the code, the model weights will be downloaded to your system. This may take a few minutes. Once the model weights have been downloaded, they will be cached for future use so that you do not have to download the weights every time you start your application.

Consider the following image:

Let's run our program on the image above with the prompt "Is there a package on the ground?"
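A minimal sketch of that step follows. It assumes the loaded model object exposes an infer(image, prompt=...) method and that your image is saved locally; the helper names ask and run_demo, and the file name used in the usage note, are hypothetical. Check the PaliGemma Inference documentation for the exact call and response format in your installed version.

```python
def ask(model, image, prompt: str):
    """Send one question about `image` to a loaded model and return the raw response.

    Assumption: the model object exposes infer(image, prompt=...).
    """
    return model.infer(image, prompt=prompt)


def run_demo(image_path: str, api_key: str, prompt: str):
    """Load the VQA weights, open the image at `image_path`, and ask `prompt`."""
    # Imported here so that `ask` can be reused without loading Inference.
    from inference import get_model
    from PIL import Image

    model = get_model("paligemma-3b-ft-vqav2-448", api_key=api_key)
    image = Image.open(image_path)
    return ask(model, image, prompt)
```

For example, print(run_demo("image.jpeg", "KEY", "Is there a package on the ground?")) would print whatever the model returns for that question.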

The model returns "Yes.", the correct answer to the question.

Now, let's run the model with the prompt "How many packages are in the image?" The model returns 2. This demonstrates a limitation of the model: it may struggle to identify the exact number of objects.

We encourage you to test the model on examples of the data with which your application will work to evaluate whether the model performs according to your requirements.
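One lightweight way to run such an evaluation is to keep a small list of questions with known answers and measure how often the model matches them. The sketch below is one possible structure, not part of Inference: answer_fn is any callable you supply that takes an image reference and a prompt and returns the model's answer as text, and the normalization (lowercasing, trimming whitespace and trailing punctuation) is a simple choice suited to short answers such as "Yes." or "2".

```python
from typing import Callable, Iterable, Tuple


def normalize(answer: str) -> str:
    """Lowercase an answer and strip surrounding whitespace and trailing punctuation."""
    return answer.strip().rstrip(".!?").strip().lower()


def vqa_accuracy(
    answer_fn: Callable[[str, str], str],
    examples: Iterable[Tuple[str, str, str]],
) -> float:
    """Return the fraction of (image, prompt, expected) examples answered correctly."""
    examples = list(examples)
    if not examples:
        raise ValueError("Provide at least one example.")
    # Compare normalized model output against the normalized expected answer.
    correct = sum(
        normalize(answer_fn(image, prompt)) == normalize(expected)
        for image, prompt, expected in examples
    )
    return correct / len(examples)
```

Exact-match scoring like this is strict: it treats "two" and "2" as different answers, so you may want to extend normalize for the kinds of answers your application expects.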

Conclusion

PaliGemma, a multimodal vision model architecture developed by Google, can be used for VQA, among many other vision tasks.

In this guide, we used model weights pre-trained on VQA data to run PaliGemma. We asked questions about an image and retrieved the model's answers. We ran the VQA model with Roboflow Inference, an open-source, high-performance computer vision inference server.

To learn more about running PaliGemma models with Inference, refer to the PaliGemma Inference documentation. To learn more about the PaliGemma model architecture and what the series of models can do, refer to our introductory guide to PaliGemma.

If you need assistance integrating PaliGemma into an enterprise application, contact the Roboflow sales team. Our sales and field engineering teams have extensive experience advising on the integration of vision and multimodal models into business applications.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Jul 12, 2024). Visual Question Answering with Multimodal Models. Roboflow Blog: https://blog.roboflow.com/vqa-paligemma/