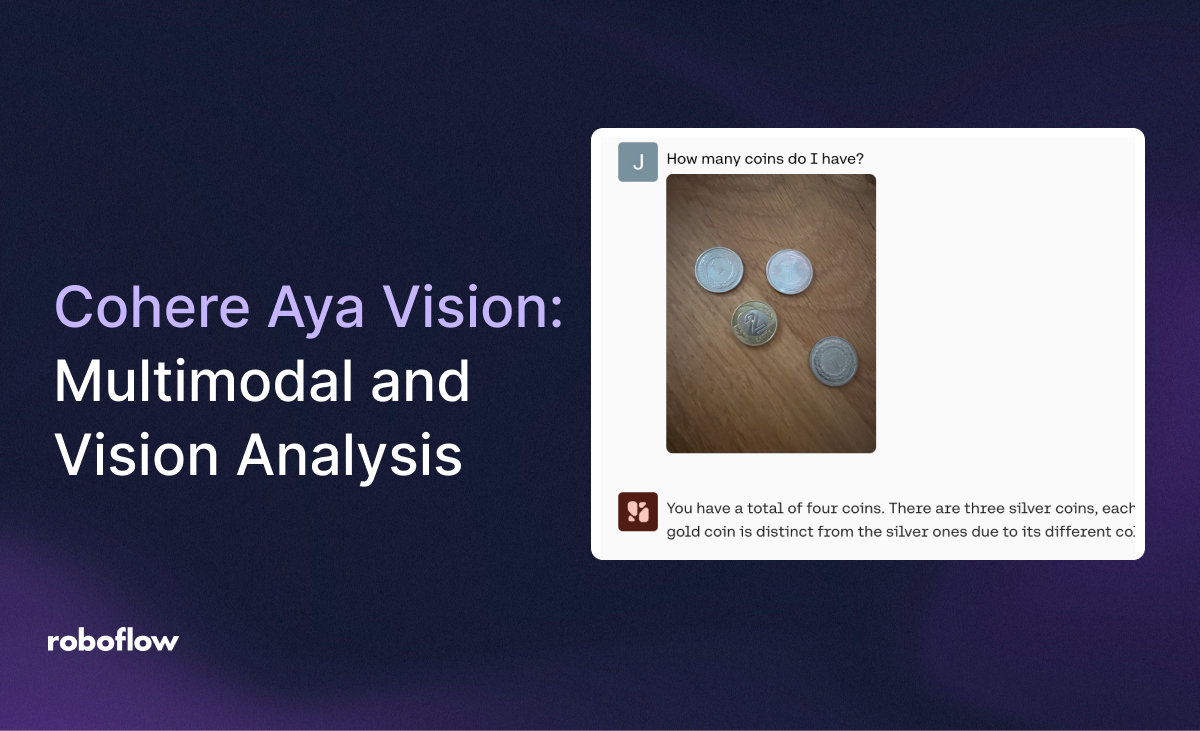

On March 3rd, 2025, Cohere announced Aya Vision, a new series of multimodal models licensed under a Creative Commons Attribution Non Commercial 4.0 license. This means that you can use the model for non-commercial uses, as long as you attribute that you are using the model in your application.

Aya Vision comes in two sizes: 8b and 35b. It is available for download via Hugging Face and Kaggle. You can also experiment with the model via the Cohere Playground web interface, and WhatsApp.

In this guide, we are going to walk through our analysis of Cohere Aya Vision’s multimodal and vision features. We will use our standard benchmark images that we pass through new multimodal models.

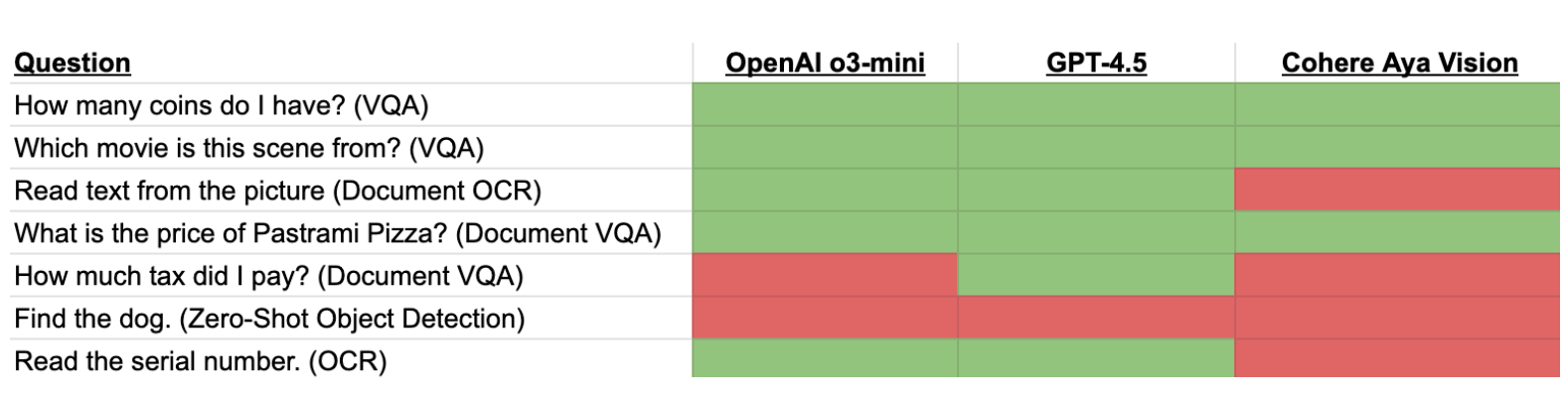

Curious to see how the model stacks up on our qualitative tests? Here is a summary of our results from the 8b model compared to models released over the last few weeks:

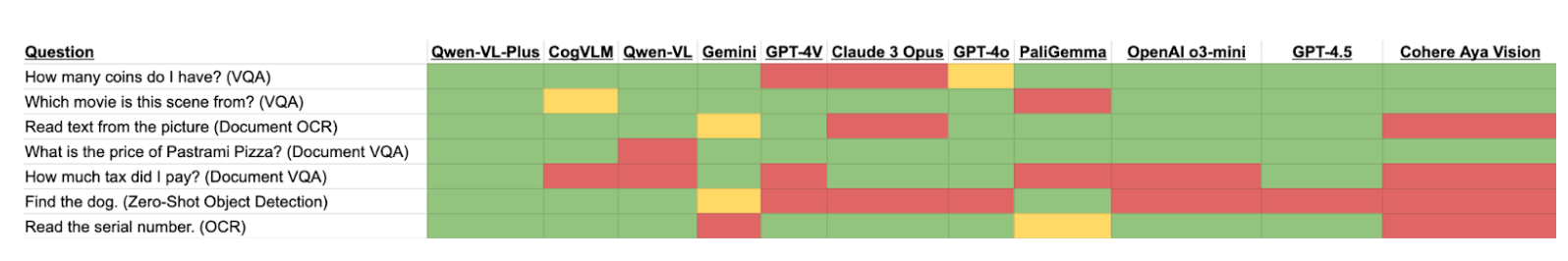

Here is our full table with results from more models:

What is Cohere Aya Vision?

Cohere Aya Vision is a new multimodal model developed by Cohere, a company that builds generative and retrieval models. The model was released on March 3rd, 2025 under a Creative Commons non-commercial license. Aya Vision accepts image and text inputs.

Aya Vision was designed with multilingual support in mind. The model supports 23 languages. In the model announcement, Cohere notes “In its parameter class, Aya Vision 8B achieves the best performance in combined multilingual multimodal tasks, outperforming Qwen2.5-VL 7B, Gemini Flash 1.5 8B, Llama-3.2 11B Vision, and Pangea 7B by up to 70% win rates on AyaVisionBench and 79% on m-WildVision.”

Alongside the model release, Cohere released a new benchmark: AyaVisionBench. The dataset “spans 23 languages and 9 distinct task categories, with 15 samples per category, resulting in 135 image-question pairs per language”, covering tasks such as image captioning, chart understanding, OCR, and more.

Evaluating Cohere Aya Vision for Multimodal Tasks

We evaluated Cohere Aya Vision’s multimodal support on several tasks, including:

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Zero-shot object detection

- Real-world OCR

Below, we show our results from each test.

Our tests were run using the web playground. The temperature was set at 0.3, the default. Unless otherwise stated, our tests were run on the 8B version of the model.

Cohere Aya Vision object counting

We started by testing whether Aya Vision can count the number of coins in an image. We provided the following image and asked the model “How many coins do I have?”.

The model returned:

You have a total of four coins. There are three silver coins, each with a face value of 1, and one gold coin with a face value of 2. The gold coin is distinct from the silver ones due to its different color and design.

The model successfully identified there were four coins, and also identified the denominations.

Cohere Aya Vision visual question answering

We then asked Aya Vision “Which movie is this scene from?” and attached a photo from Home Alone:

The model returned:

This scene is from the beloved holiday classic "Home Alone." The image captures the heartwarming atmosphere of the film, featuring a beautifully decorated Christmas tree, festive decorations, and a cozy fireplace. The character Kevin McCallister, played by Macaulay Culkin, is seen sitting in a green bathrobe, looking content and surrounded by the warmth of the holiday season. The attention to detail in the decorations, such as the Christmas stockings, wreaths, and the fireplace mantelpiece, brings the iconic setting of the movie to life.

The model successfully identified the movie and provided a rich description of the scene.

Cohere Aya Vision document OCR

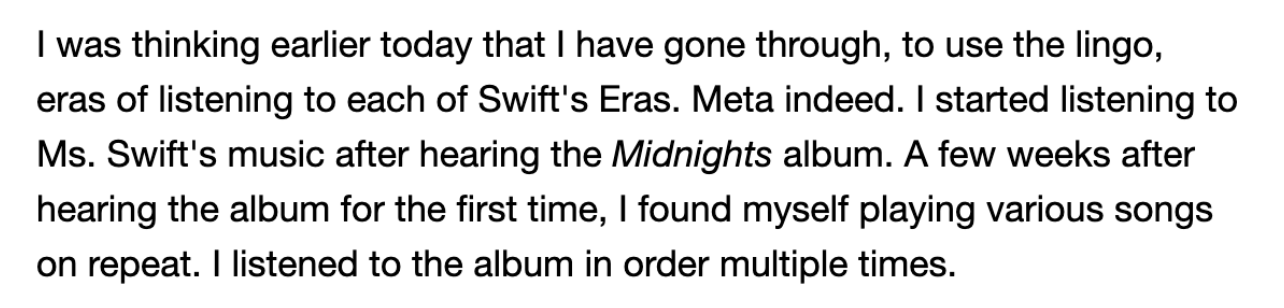

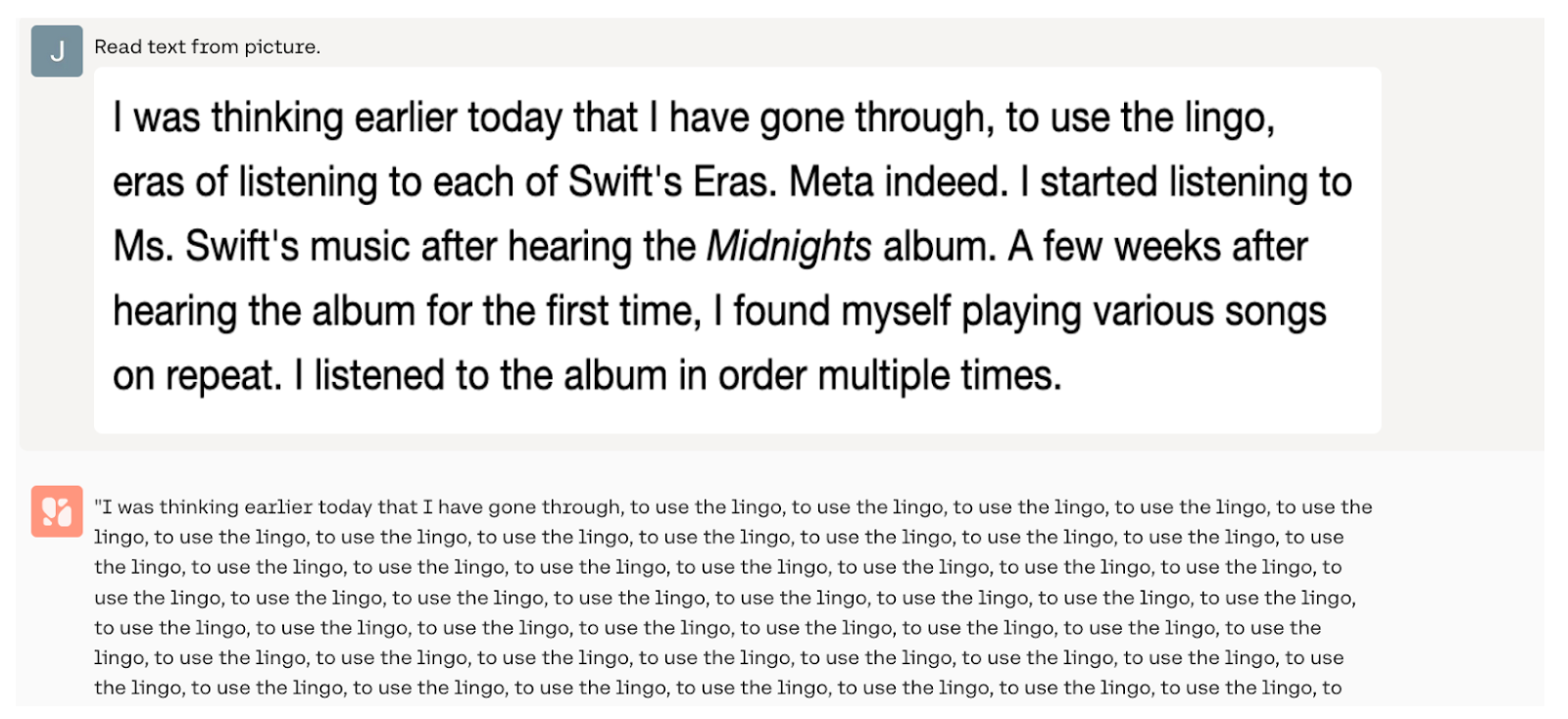

We provided Cohere Aya Vision with a screenshot of a paragraph of text and asked the model to “Read text from image.” Here is the screenshot we provided:

The model was unable to successfully answer the question. It got stuck in a loop:

We then tried the same question on the 35b model. The 35b model did not get stuck in a loop, but the answer included hallucinations. Here was the answer:

I was thinking earlier today that I have gone through, to use the lingo, eras of listening to each of Swift's Eras. Meta indeed. I started listening to her music in the early days, before she released her first album. A few weeks after hearing the Midnight's album, I found myself playing various songs from her album on repeat. I listened to the album in order multiple times.

The third sentence is wrong.

Cohere Aya Vision document question answering

We provided Aya Vision with the following photo of a menu and asked “How much does Pastrami Pizza cost?”:

The model answered:

The price of the Pastrami Pizza is $27.

The model successfully answered the question.

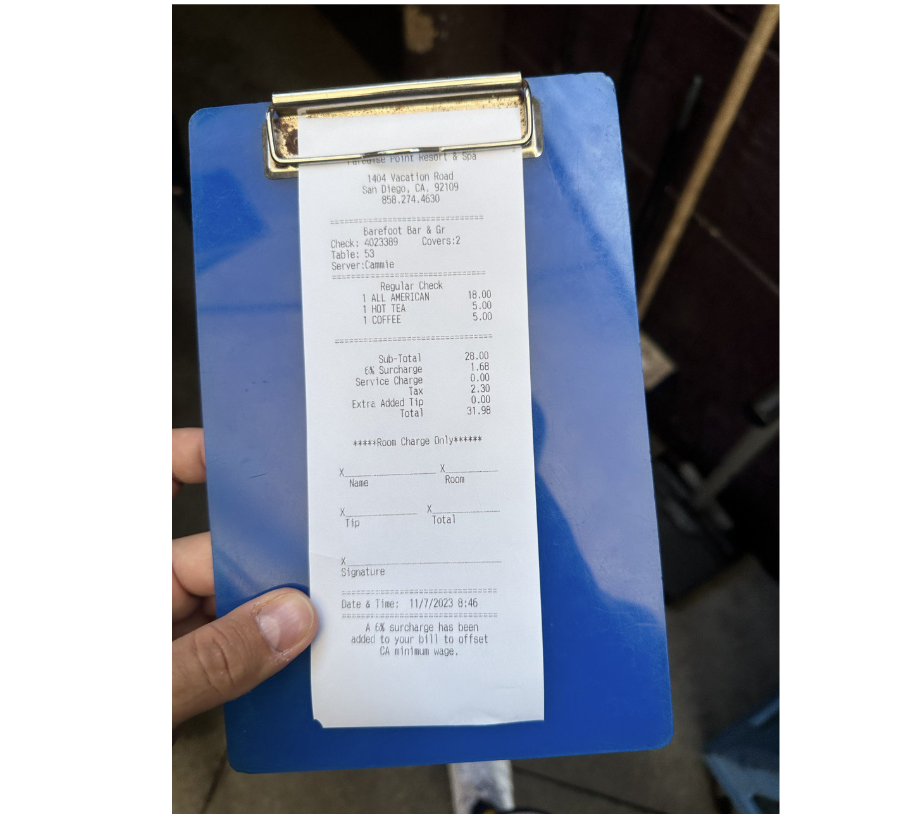

We then asked the model to answer a question about the contents of a receipt. Given the following image of a receipt, we asked “How much tax did I pay?”:

The model returned:

The tax amount on the receipt is $2.00.

This is incorrect. The correct tax amount is $2.30. Several multimodal models have struggled with this question, like PaliGemma and O3 Mini. With that said, GPT-4o and GPT-4.5 are both able to answer this question.

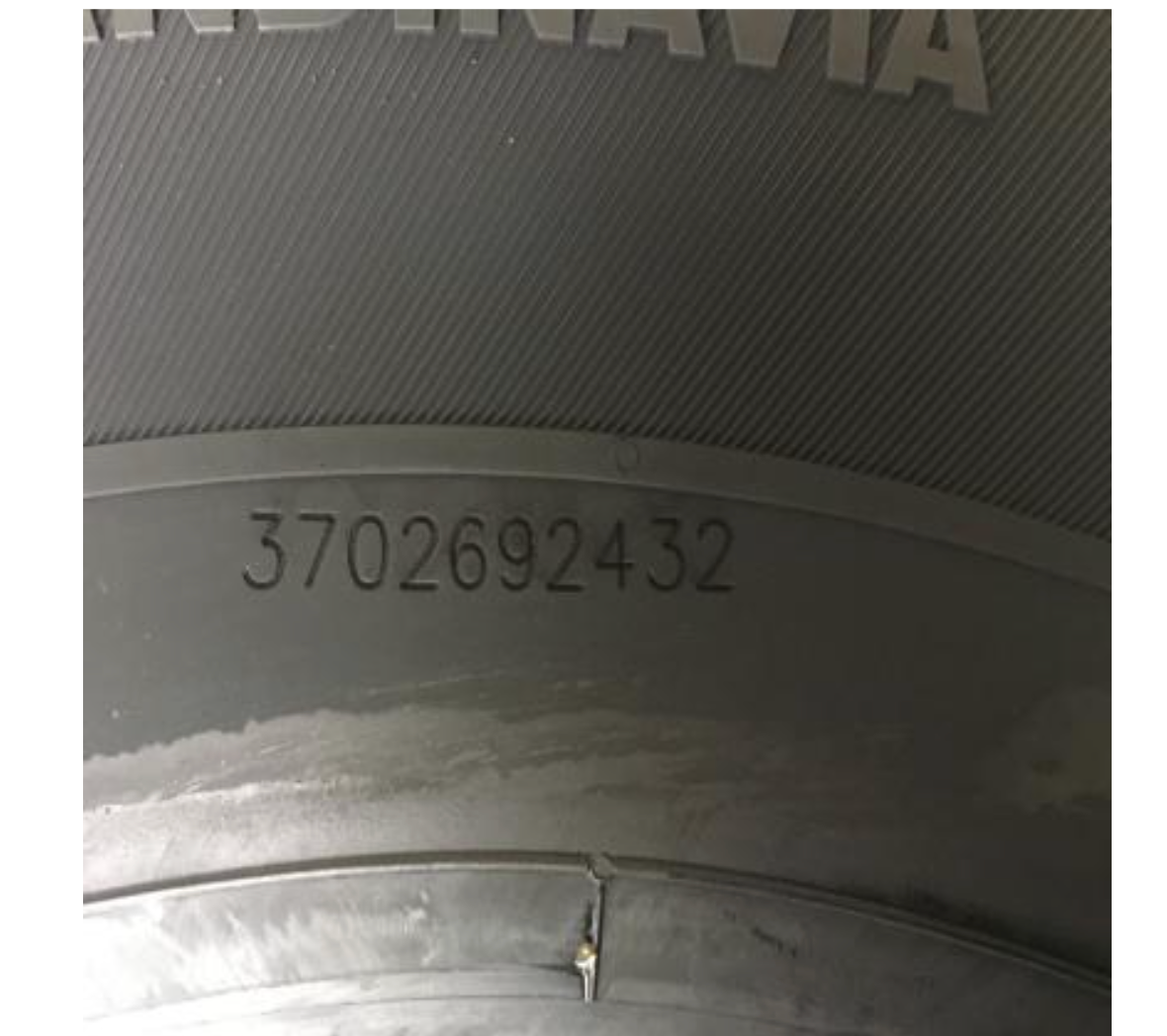

Cohere Aya Vision real world OCR

We provided Aya Vision with a photo of a serial number and asked the model to read its contents with the prompt “Read the serial number.” Here is the photo we provided:

The model returned:

The serial number on the tire is 370692432.

The model was almost correct, but missed a number. The correct answer is “3702692432” but the model returned “370692432”.

Conclusion

Released on March 3rd, 2025, Cohere Aya Vision is a new, multimodal model. The model is available for non-commercial use. It can be used both by downloading the weights from Hugging Face or Kaggle, through Cohere’s product, or through WhatsApp.

Curious to see how more multimodal models do? Check out our index of multimodal model blog posts, covering more new models like GPT-4.5, PaliGemma, Gemini, and more.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Mar 4, 2025). Cohere Aya Vision: Multimodal and Vision Analysis. Roboflow Blog: https://blog.roboflow.com/cohere-aya-vision/