Detecting small objects is a challenging task in computer vision, yet significant for many use cases. Slicing Aided Hyper Inference (SAHI) is a common method of improving the detection accuracy of small objects, which involves running inference over portions of an image then accumulating the results.

In this guide, we are going to show how to detect small objects with SAHI and supervision. supervision is a Python package with general utilities for use in computer vision projects. The supervision SAHI implementation is model-agnostic, so you can use it across a range of models. We’ll walk through an example of detecting objects on a beach in an image with SAHI.

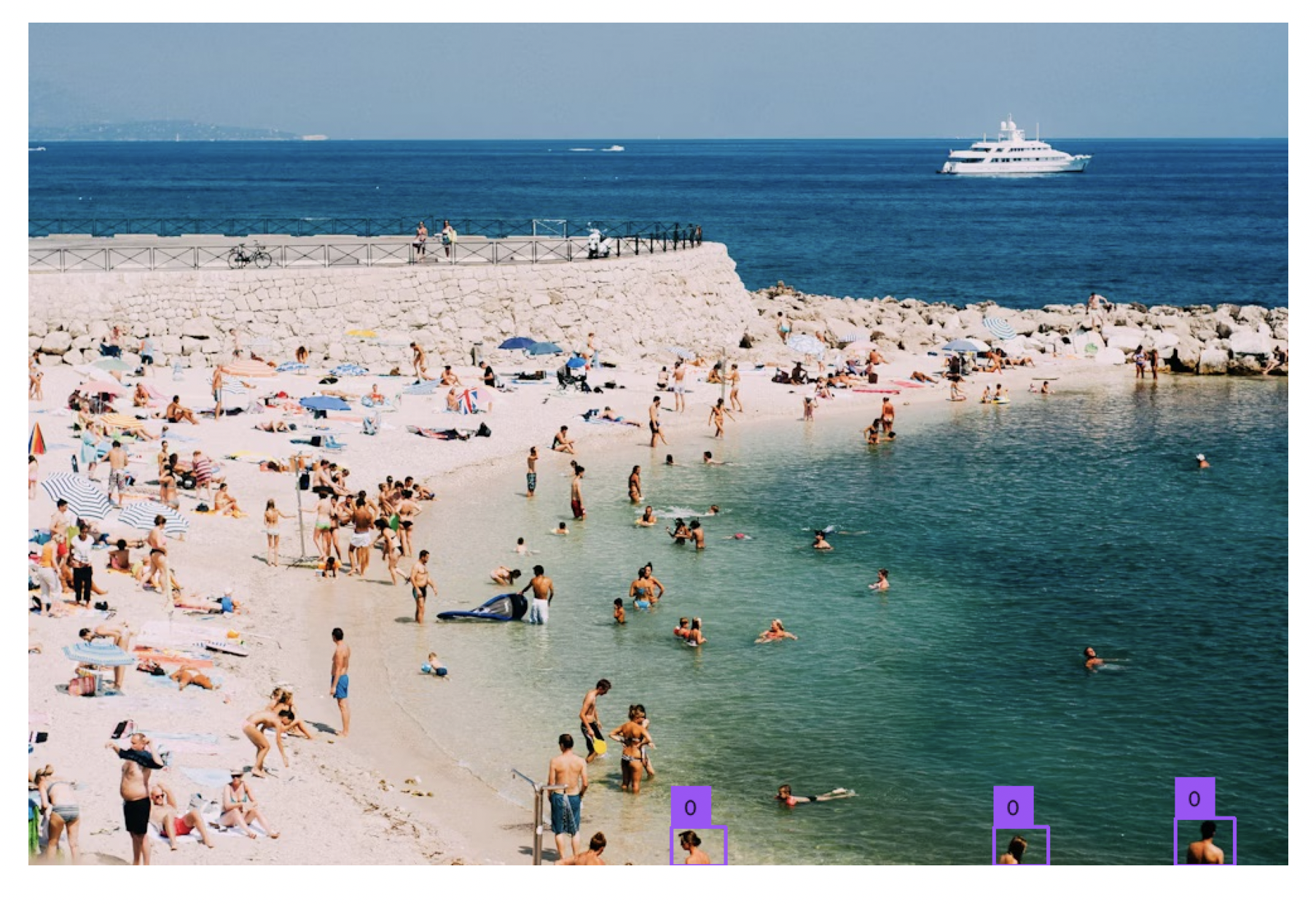

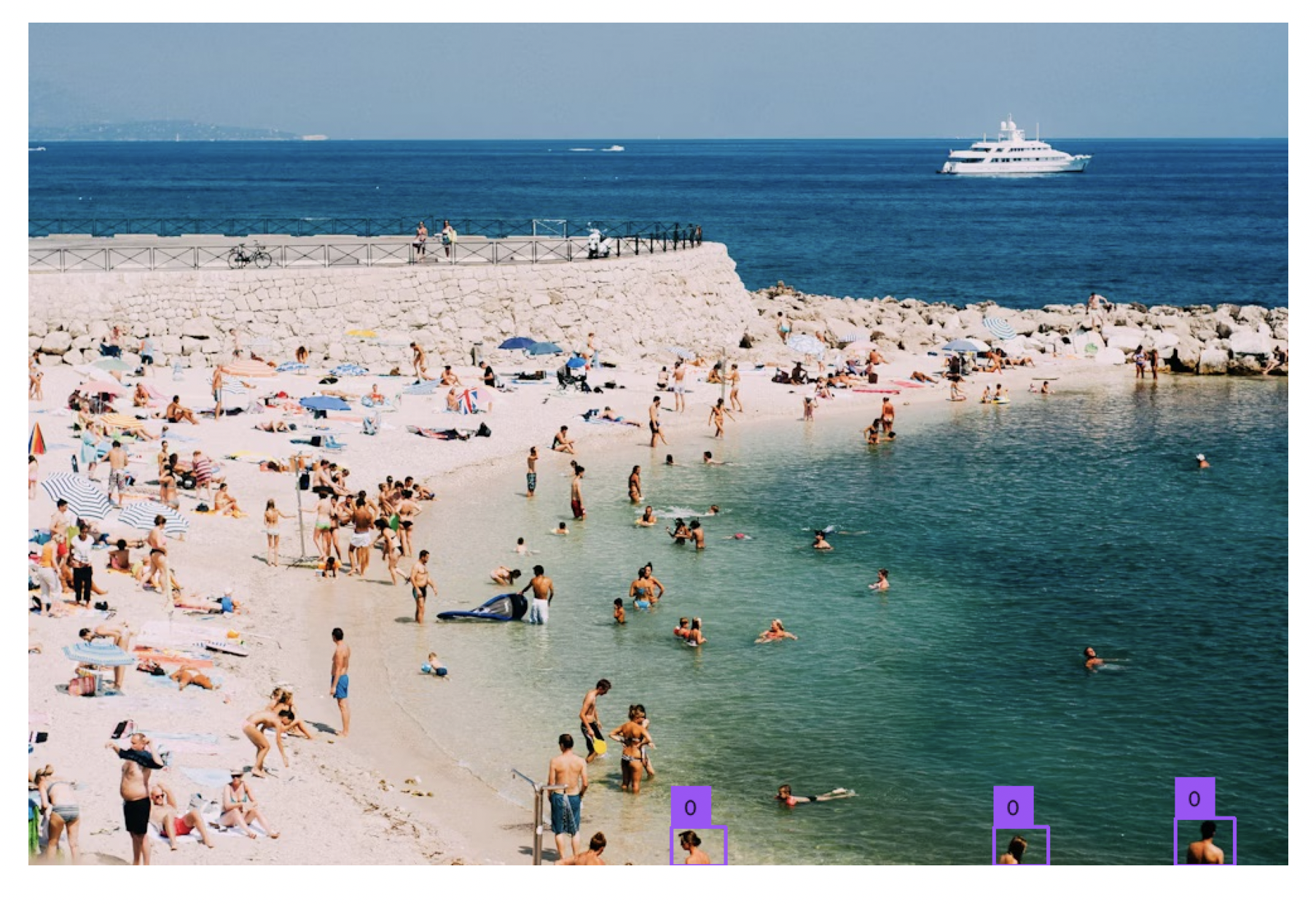

Here is an example of an image on which inference was run without and with SAHI:

Left: Without SAHI. Right: With SAHI.

You can also try out SAHI in a Roboflow Workflow.

Without further ado, let’s get started!

What is SAHI?

SAHI is a technique for running inference on a computer vision model which aids in detecting smaller objects in an image. SAHI involves running inference on smaller segments of an image, then patching together the results to show all of the detections in an image.
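To make the slicing step concrete, here is a minimal sketch of how an image could be divided into overlapping windows. It is an illustration only, not supervision's implementation; the function name `slice_windows`, the 320-pixel slice size, and the 64-pixel overlap are assumptions chosen for the example.

```python
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def _starts(length: int, size: int, step: int) -> List[int]:
    """Start positions along one axis so that windows cover [0, length)."""
    starts = []
    pos = 0
    while True:
        starts.append(pos)
        if pos + size >= length:  # this window already reaches the edge
            break
        pos += step
    return starts


def slice_windows(image_w: int, image_h: int,
                  slice_w: int = 320, slice_h: int = 320,
                  overlap_px: int = 64) -> List[Window]:
    """Return overlapping windows (hypothetical helper) that cover the image."""
    if overlap_px >= min(slice_w, slice_h):
        raise ValueError("overlap must be smaller than the slice size")
    xs = _starts(image_w, slice_w, slice_w - overlap_px)
    ys = _starts(image_h, slice_h, slice_h - overlap_px)
    # Clip windows that would run past the right or bottom edge.
    return [(x, y, min(x + slice_w, image_w), min(y + slice_h, image_h))
            for y in ys for x in xs]


windows = slice_windows(640, 640)
```

For a 640x640 image, these settings give a 3x3 grid of nine windows, with the windows on the right and bottom edges clipped to the image boundary. The overlap makes it less likely that an object sitting on a slice boundary is cut in half in every slice that contains it.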

For example, consider a scenario where you are doing an aerial survey and you want to count the number of houses in an image, or a scenario where you are monitoring how busy a beach gets at peak times. In both cases, the objects of interest may be small relative to the image, and SAHI can help boost the detection rate for those objects.
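One way to see why small objects are missed: many detection models resize their input to a fixed resolution before inference. The arithmetic below is a rough illustration under assumed numbers (a 640-pixel model input, a 4000-pixel-wide photo, and a person who is 40 pixels wide). None of these figures come from the model used in this guide.

```python
def apparent_size(object_px: float, source_side: int, model_side: int = 640) -> float:
    """Object size in pixels after the source is resized to the model input side."""
    return object_px * model_side / source_side


# Hypothetical numbers: a 40 px wide person in a 4000 px wide aerial photo.
whole_image = apparent_size(40, source_side=4000)  # whole photo resized to 640 px
one_slice = apparent_size(40, source_side=640)     # a 640 px slice needs no resizing

print(f"whole image: {whole_image:.1f} px, slice: {one_slice:.1f} px")
```

Under these assumptions the person shrinks to roughly 6 pixels when the whole photo is resized, but stays 40 pixels wide inside a slice, which gives the model far more detail to work with.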

The approach that we walk through for using SAHI is model-agnostic, with support for all models with supervision data loaders.

Whether you are using a model deployed with Roboflow Inference, a model deployed using the Ultralytics YOLOv8 Python package, a vision model in the Transformers Python package, or another model with a supported data loader, you can follow our instructions below to use SAHI.

Step #1: Set Up a Model

First, we will need a model on which to run inference. For this guide, we will be using a people detection model from Roboflow Universe. You can use any Roboflow model on Universe, private models associated with your account, or other model types with supervision data loaders.

We will deploy this model on our own hardware using Roboflow Inference. Inference is a high-performance server for running vision models in production. Inference is the technology behind Roboflow's API, which powers millions of API calls for computer vision inferences.

First, we need to install Inference. We can do so using the following command:

```
pip install inference inference-sdk
```

Now, we can start writing a script to use our model with SAHI. You will need your Roboflow API key for this step. Learn how to retrieve your API key.

Create a new Python file and add in the following code:

```python
import cv2
import numpy as np
import supervision as sv
from inference import get_roboflow_model

# Load the image to run inference on (OpenCV returns a BGR NumPy array).
image_file = "image.jpeg"
image = cv2.imread(image_file)

# Load the model; replace API_KEY with your Roboflow API key.
model = get_roboflow_model(model_id="people-detection-general/5", api_key="API_KEY")


def callback(image_slice: np.ndarray) -> sv.Detections:
    # Run inference on a single slice and convert the result to sv.Detections.
    results = model.infer(image_slice)[0]
    return sv.Detections.from_inference(results)
```

In this code, we define a callback function that, given an image slice, runs inference with our model and returns the results as a supervision Detections object. Replace API_KEY with your Roboflow API key before you run the script.

Above, replace people-detection-general with your Roboflow model ID and 5 with the version of the model you want to run. Learn how to retrieve your model and version IDs.

Step #2: Run Inference with the SAHI Slicer

Now that we have a model set up, we can run inference with the SAHI slicer. We can do so using the following code:

```python
slicer = sv.InferenceSlicer(callback=callback)
sliced_detections = slicer(image=image)
```

This code creates a slicer object which accepts the model inference callback function we defined in the previous step. We then use the slicer object to run inference on an image. The slicer object splits up the specified image into a series of smaller images on which inference is run.
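After inference, each slice reports boxes in its own local coordinates, so the results have to be shifted back into full-image coordinates and de-duplicated where slices overlap. The sketch below shows one common way to do that, using greedy non-maximum suppression (NMS). It is a simplified illustration and not supervision's actual code; the helper names, the 0.5 IoU threshold, and the example boxes and scores are all assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def to_image_coords(box: Box, offset_x: float, offset_y: float) -> Box:
    """Shift a slice-local box by the slice's top-left corner."""
    x1, y1, x2, y2 = box
    return (x1 + offset_x, y1 + offset_y, x2 + offset_x, y2 + offset_y)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not heavily overlap a higher-scoring kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the same person seen in two overlapping slices,
# plus a second person seen only in the right-hand slice (offset x=256).
boxes = [
    to_image_coords((280, 100, 310, 160), 0, 0),
    to_image_coords((25, 101, 55, 161), 256, 0),
    to_image_coords((200, 50, 230, 110), 256, 0),
]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Here the first two boxes land almost on top of each other once shifted, so only the higher-scoring one survives, while the third box is kept because it does not overlap either of them.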

To display SAHI predictions, we can use the following code:

label_annotator = sv.LabelAnnotator()

box_annotator = sv.BoxAnnotator()

# You can also use sv.MaskAnnotator() for instance segmentation models

# mask_annotator = sv.MaskAnnotator()

annotated_image = box_annotator.annotate(

scene=image.copy(), detections=sliced_detections)

# annotated_image = mask_annotator.annotate(

# scene=image.copy(), detections=sliced_detections)

annotated_image = label_annotator.annotate(

scene=annotated_image, detections=sliced_detections)

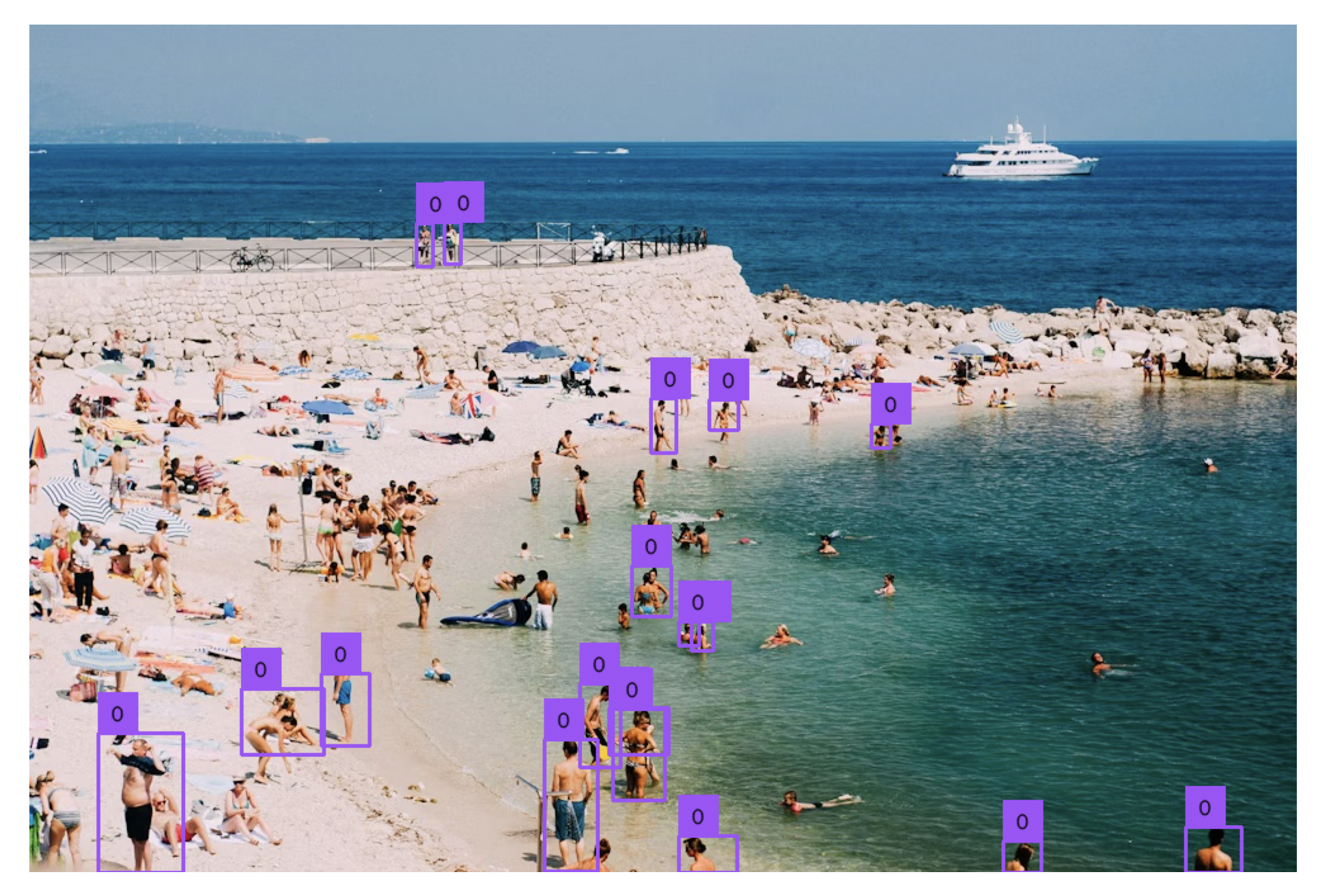

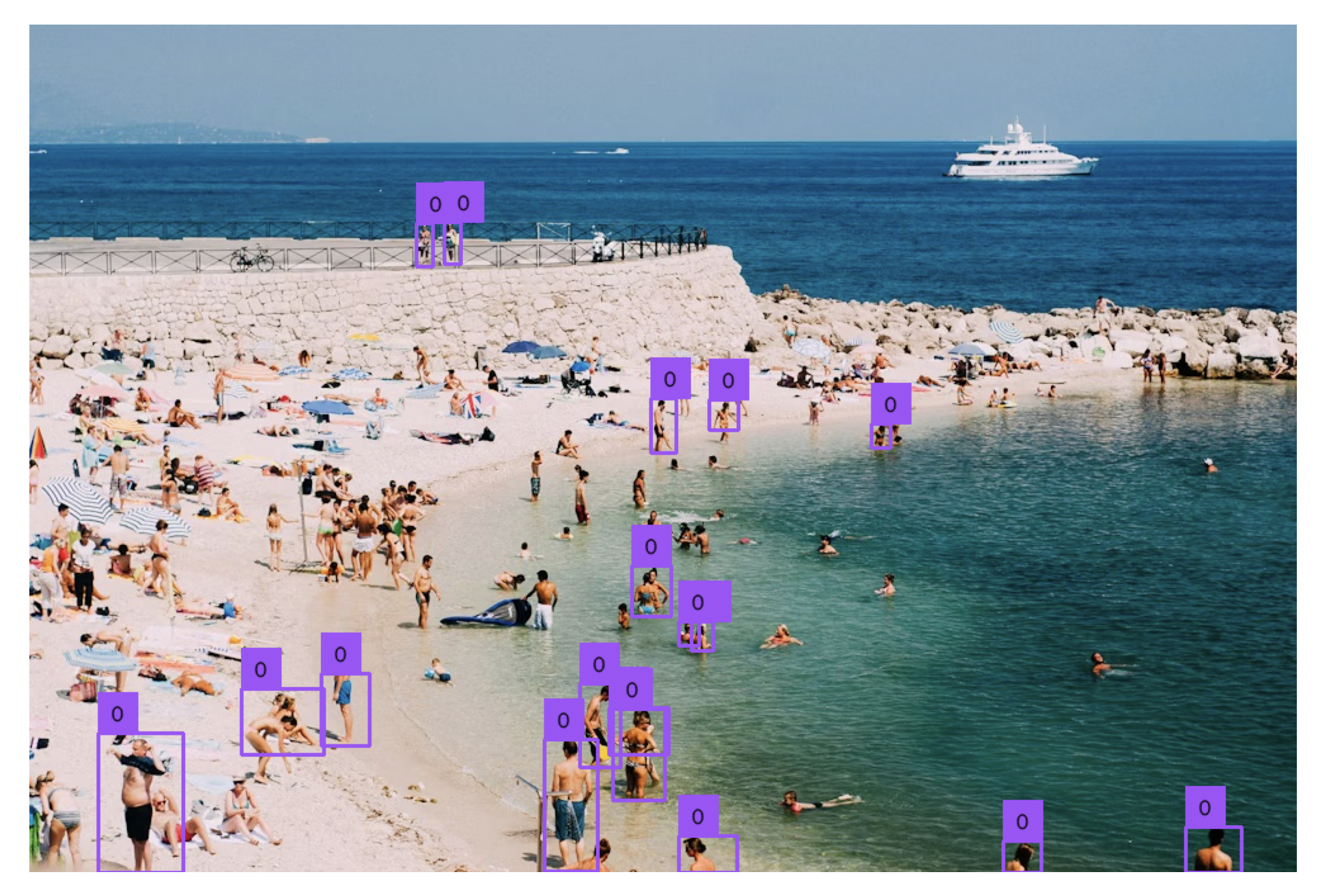

sv.plot_image(annotated_image)Here are the results from inference with and without SAHI:

On the left is the image processed without SAHI. On the right is the image processed with SAHI. The model captured significantly more objects with SAHI than without it.

Conclusion

SAHI is a technique for improving the detection rate for smaller objects with object detection models. SAHI works by running inference on “slices” of an image, then combining the results across the whole image. supervision has a built-in SAHI implementation, sv.InferenceSlicer.

Combined with the Roboflow API or the self-hosted Roboflow Inference solution, you can use the SAHI technique to run inference on models hosted on Roboflow.

In this guide, we demonstrated how to detect small objects with SAHI and supervision. Now you have all of the resources you need to use SAHI to improve the detection rate of smaller objects using object detection models.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Aug 31, 2023). How to Use SAHI to Detect Small Objects. Roboflow Blog: https://blog.roboflow.com/how-to-use-sahi-to-detect-small-objects/