The article below was contributed by Timothy Malche, an assistant professor in the Department of Computer Applications at Manipal University Jaipur.

In computer vision and image processing, contours are curves or boundaries that connect continuous points along the boundary of an object that have the same intensity or color. In other words, a contour represents the outline of an object in an image.

Image contouring is the process of detecting and extracting the boundaries or outlines of objects present in an image. Essentially, image contouring involves identifying points of similar intensity or color that form a continuous curve, thereby outlining the shape of objects.

Contours are critical in many image processing and computer vision tasks because they allow for effective segmentation, analysis, and feature extraction of various objects in an image. Image contouring is often used to simplify an image by focusing only on the important structural elements, which are the object boundaries, while ignoring irrelevant details like texture or minor variations within the object. The following image shows an example of contouring, in which the outline of an apple is detected.

How Image Contouring Works

Image contouring typically involves the following steps:

- Preprocessing

- Binary conversion

- Contour detection

- Contour drawing

Let's talk through each of these steps one by one.

Preprocessing

Convert the image to grayscale if it is a color image. Optionally, apply a smoothing filter (e.g., Gaussian blur) to reduce noise, which helps achieve better contour detection results.

# Convert the image to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Apply Gaussian Blur to reduce noise

blurred = cv2.GaussianBlur(gray, (5, 5), 0)

Binary Conversion

Apply thresholding or edge detection (e.g., using the Canny edge detector) to convert the grayscale image into a binary image. In a binary image, the objects are represented by white pixels (foreground) and the background is represented by black pixels.

Thresholding is useful to highlight areas of interest based on pixel intensity, while edge detection emphasizes the boundaries where sharp intensity changes occur.

# Apply binary thresholding

# The threshold value (150) may need adjustment based on your image's lighting and contrast

ret, thresh = cv2.threshold(blurred, 150, 255, cv2.THRESH_BINARY_INV)

Contour Detection

Use an algorithm to detect contours in the binary image. A commonly used function for contour detection in OpenCV is cv2.findContours(), which returns a list of all the detected contours in the image.

Contours are represented by lists of points, and each point represents a pixel position along the boundary of an object.

# Find contours in the threshold image

contours, hierarchy = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

Contour Drawing

Once contours are detected, they can be visualized by drawing them on the original image or on a blank canvas using cv2.drawContours(). This helps in visualizing the boundaries of the objects.

# Draw all detected contours (contour index -1) on the image

cv2.drawContours(image, contours, -1, (14, 21, 239), 5)

Here Is an Example

import cv2

import numpy as np

# Read the image

image = cv2.imread('shapes_2.jpg')

# Convert to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Apply thresholding to create a binary image

_, binary = cv2.threshold(gray, 200, 255, cv2.THRESH_BINARY_INV)

# Find contours in the binary image

contours, hierarchy = cv2.findContours(binary, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# Create copies of the original image to draw contours

contour_image = image.copy()

contour_on_original = image.copy()

# Draw the contours on a blank (black) canvas

contour_black = np.zeros_like(image)

cv2.drawContours(contour_black, contours, -1, (0, 255, 0), 2)

# Draw the contours on the original image

cv2.drawContours(contour_on_original, contours, -1, (255, 0, 0), 5)

The output will be as follows:

The cv2.findContours() Function

The cv2.findContours() method is an essential function in the OpenCV library used for detecting contours in an image. Below, I will explain this function in detail, covering all of its arguments and their purpose.

Following is the function signature:

contours, hierarchy = cv2.findContours(image, mode, method[, contours[, hierarchy[, offset]]])

The function accepts various arguments/parameters as explained below.

- image (Input Image)

This is the source image from which the contours are to be found. It must be an 8-bit single-channel image. The function treats it as binary: zero pixels are background and every non-zero pixel is foreground, so in practice you pass an image containing only 0 (black) for the background and 255 (white) for the objects.

Usually, this is achieved by using thresholding or edge detection to create a suitable binary image. Note that in OpenCV versions before 3.2, cv2.findContours() modified the input image, so passing a copy was recommended if you needed to preserve the original. Since OpenCV 3.2 the input image is left unchanged.

- mode (Contour Retrieval Mode)

This parameter specifies how contours are retrieved. It controls the hierarchy of contours, especially useful for nested or overlapping objects. It includes modes such as cv2.RETR_EXTERNAL, cv2.RETR_LIST, cv2.RETR_CCOMP, cv2.RETR_TREE. All of these modes are explained in the section “Contour Retrieval Mode” below.

- method (Contour Approximation Method)

This parameter controls how the contour points are approximated, essentially affecting the accuracy and the number of points used to represent the contours. Common methods include cv2.CHAIN_APPROX_NONE, cv2.CHAIN_APPROX_SIMPLE, cv2.CHAIN_APPROX_TC89_L1, cv2.CHAIN_APPROX_TC89_KCOS. These methods are explained in the section “Contour Approximation Method” below.

- contours (Output Parameter)

This is the output collection of all detected contours. Each individual contour is represented as a NumPy array of points (x, y coordinates) that define the contour boundary. The contours are returned as a Python sequence (a list or a tuple, depending on the OpenCV version) containing zero or more contours.

- hierarchy (Output Parameter)

This is an optional output parameter that stores information about the hierarchical relationship between contours. Each contour has four pieces of information [Next, Previous, First_Child, Parent].

- Next: The index of the next contour at the same hierarchical level.

- Previous: The index of the previous contour at the same hierarchical level.

- First_Child: The index of the first child contour.

- Parent: The index of the parent contour.

- If a value is -1, it means there is no corresponding contour (e.g., no parent or no child).

- offset (Optional)

This parameter is used to shift the contour points by a certain offset (a tuple of x and y values). This is useful if you are processing sub-images and need to shift the detected contours back to match their positions in the original image. It’s rarely used in most common scenarios.

The function returns the following values.

- contours: A list of detected contours.

- hierarchy: The hierarchy of contours, useful if you need to understand parent-child relationships between contours.

Contour Retrieval Mode in OpenCV

The Contour Retrieval Mode in OpenCV’s cv2.findContours() function controls how contours are retrieved, including whether or not the hierarchy (i.e., parent-child relationships) of the contours is taken into account. This mode is crucial in determining the structure of the detected contours and their relationships in an image, especially when dealing with nested or overlapping objects. For our examples in this section we will create a synthetic binary image with four nested concentric circles of alternating black and white colors.

There are four main contour retrieval modes available in OpenCV. Let us understand each.

cv2.RETR_EXTERNAL

This mode retrieves only the outermost contours. It ignores any contour that is contained within another contour, regardless of how deeply it is nested. It effectively considers only the outer boundaries and disregards internal nested contours.

This mode is useful when you are only interested in the external boundary of objects, such as detecting coins on a table or isolating objects from the background. This is efficient when nested contours are irrelevant for your analysis.

For example, in our input image of nested circles, cv2.RETR_EXTERNAL will retrieve only the outer circle, ignoring the inner circles.

Here is the code we can use to process the image above:

# Find contours using cv2.RETR_EXTERNAL

contours, _ = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# Convert image to color for visualization

image_color = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)

# Draw the outer contours in green

cv2.drawContours(image_color, contours, -1, (0, 255, 0), 2)

In the code above, the cv2.RETR_EXTERNAL mode finds only the outermost contour (ignores the inner circles entirely). The outer contour is drawn in green. The left side of the following image shows the output returned by code with only the external circle detected and the right side shows the hierarchy returned by the cv2.findContours() function with only one contour (for the external circle). In this case, all four values (i.e. next, previous, first child, parent) are -1, meaning that the contour is completely isolated with no hierarchical relationships.

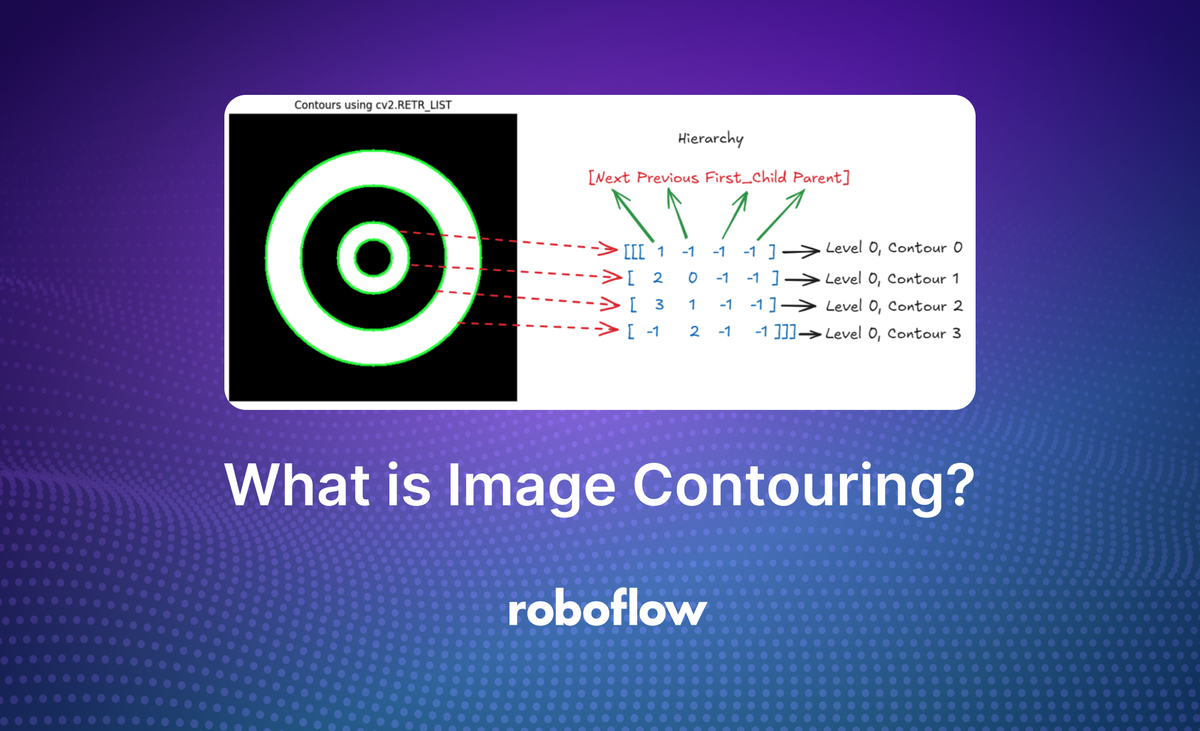

cv2.RETR_LIST

This mode is used to retrieve all contours in the image but does not establish any parent-child relationships between them. All contours are stored in a simple list. This mode essentially flattens the hierarchy. It does not differentiate between outer and inner contours and treats each contour as independent, without hierarchical grouping.

This mode is useful when you do not need information about the relationship between different contours and only require the entire set of contours, such as when analyzing the shape or boundaries of every object in an image.

For example, in the same drawing of nested circles, cv2.RETR_LIST will retrieve all the outer and inner circles, but it will not define any hierarchy (parent-child relationship or multiple levels); each circle is treated independently. The following is the example code.

# Find contours using cv2.RETR_LIST

contours, _ = cv2.findContours(image, cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)

# Convert image to color for visualization

image_color = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)

# Draw all contours in green

cv2.drawContours(image_color, contours, -1, (0, 255, 0), 2)

The cv2.RETR_LIST mode detects all contours, treating each independently (outer as well as all inner circles). Contours are drawn in green.

The following image shows the output from the code (on the left) detecting all circles. The right side of the image shows a hierarchy returned by the cv2.findContours() function which represents the relationship between four contours detected in an image, where each contour is at the same level (level 0) and has no parent or child contours. Here, in the hierarchy the “first child” and “parent” values for all contours are -1 indicating no parent-child relationship is detected.

cv2.RETR_CCOMP

This mode retrieves all contours and organizes them into a two-level hierarchy. The first level represents the external boundaries of objects, and the second level represents the boundaries of the holes inside those objects. If another object sits inside a hole, its outer boundary is placed back at the first level.

This mode is useful when you need a simple hierarchy with a clear differentiation between outer boundaries and their respective inner object boundaries.

For example, in our input image of circles, cv2.RETR_CCOMP will store the outer circle as the first-level (level 0) contour and the inner circle as the second-level (level 1) contour. Each pair of contours (outer contour at level 0 and inner contour at level 1) is treated as one component. The following is the example code.

# Find contours using cv2.RETR_CCOMP

contours, hierarchy = cv2.findContours(image, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_NONE)

# Convert image to color for visualization

image_color = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)

# Draw contours based on the two-level hierarchy

for i, contour in enumerate(contours):

    if hierarchy[0][i][3] == -1:  # If it is an outer contour

        cv2.drawContours(image_color, [contour], -1, (0, 255, 0), 2)  # Green for outer contours

    else:  # If it is an inner contour (hole)

        cv2.drawContours(image_color, [contour], -1, (0, 0, 255), 2)  # Red (BGR order) for inner contours

The cv2.RETR_CCOMP mode finds contours and organizes them into a two-level hierarchy. The following image shows the output from the code (on the left side) detecting all circles in a two-level hierarchy. The right side of the image shows the hierarchy returned by the cv2.findContours() function, which represents the relationships between the four contours. Unlike the previous example, this hierarchy includes parent-child relationships.

cv2.RETR_TREE

This mode retrieves all contours and organizes them into a full hierarchy (i.e., a tree structure). It provides the most comprehensive representation of the hierarchical relationships between contours, including parent, child, sibling, and nested contours. All contours are organized with full parent-child relationships, which means that not only external and internal contours are tracked, but also any nesting that exists within those internal contours. This forms a complete tree-like hierarchy.

This mode is useful for complex images where you need to understand the full structure of nested objects, such as analyzing contours in a document or understanding overlapping shapes. It provides detailed information about how contours are nested within each other.

For example, for the nested circles, the cv2.RETR_TREE will organize them in a full tree structure, where the largest circle is the root (parent), and each successive smaller circle is a child of the previous one. Following is the example code.

# Find contours using cv2.RETR_TREE

contours, hierarchy = cv2.findContours(image, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# Convert image to color for visualization

image_color = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)

# Draw contours based on hierarchy levels

for i, contour in enumerate(contours):

    level = 0

    # Traverse the hierarchy to determine the level of the contour

    parent = hierarchy[0][i][3]

    while parent != -1:

        level += 1

        parent = hierarchy[0][parent][3]

    # Use different colors for the first and second levels

    if level == 0:

        cv2.drawContours(image_color, [contour], -1, (0, 255, 0), 2)  # Green for first level (outermost)

    elif level == 1:

        cv2.drawContours(image_color, [contour], -1, (0, 0, 255), 2)  # Red for second level (inner)

    else:

        cv2.drawContours(image_color, [contour], -1, (255, 0, 0), 2)  # Blue for deeper levels

The cv2.RETR_TREE mode finds all contours and organizes them into multiple levels with full hierarchy. The largest outer circle is the root (parent), the second circle is a child of the largest outer circle, and the third inner circle is a child of the second circle and so on.

The following image shows the output from the code (on the left side) detecting all circles in full hierarchy levels. The right side of the image shows the hierarchy returned by the cv2.findContours() function, which represents the relationships between the four contours with parent-child relationships. It creates a chain of nested contours, where each contour contains the next one, all the way down to the fourth contour. There are no "sibling" contours at the same level; each contour is the child of the previous one. This kind of hierarchy is typical when dealing with concentric shapes or other forms where one contour encloses another in a strictly nested manner.

Contour Retrieval Mode Comparison

Here’s the comparison of all modes:

- cv2.RETR_EXTERNAL: Only the outermost contours are retrieved, and inner contours are ignored.

- cv2.RETR_LIST: All contours are treated independently, and no hierarchy is applied.

- cv2.RETR_CCOMP: The contours are organized into a two-level hierarchy.

- cv2.RETR_TREE: The contours are organized into a full hierarchy, showing all parent-child relationships for nested contours.

Contour retrieval modes determine how contours are extracted and organized within an image. They let you control the hierarchical relationships between contours, such as distinguishing between outer boundaries and nested structures.

Contour Approximation Method

The contour approximation method, set by the method argument of cv2.findContours(), determines how the detected boundary points are stored. This directly affects the storage and accuracy of the contour representation: by choosing a method, you control the number of points used to represent the contour, striking a balance between memory efficiency and contour accuracy.

Let’s take a look at the common methods in detail.

cv2.CHAIN_APPROX_NONE

This method stores all points along the contour boundary. It provides the most detailed representation of the contour by keeping every point that lies on the contour. Since every single boundary point is stored, this method uses the most memory and can be computationally expensive.

cv2.CHAIN_APPROX_NONE is suitable when you need a highly accurate representation of the contour, such as when precise measurements are required. It can also be useful for further analysis that involves all points along the contour boundary, such as tracking points or performing transformations.

For example, if you have an irregular, wavy contour, cv2.CHAIN_APPROX_NONE will ensure that even the smallest details of the contour are captured. The following is the example code.

# Create a blank single-channel image and draw a star shape

image = np.zeros((400, 400), dtype=np.uint8)

pts = np.array([[200, 50], [220, 150], [300, 150], [240, 200], [260, 300],

[200, 250], [140, 300], [160, 200], [100, 150], [180, 150]], np.int32)

pts = pts.reshape((-1, 1, 2))

cv2.polylines(image, [pts], isClosed=True, color=255, thickness=2)

# Find contours using cv2.CHAIN_APPROX_NONE

contours, _ = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

# Convert image to color for visualization

image_contour = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) # Image for contours

image_points = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) # Image for points

# Draw the contours in green on the contour image

for contour in contours:

    cv2.drawContours(image_contour, [contour], -1, (0, 255, 0), 2)  # Draw contours in green

# Draw the points in red on the points image

for contour in contours:

    for point in contour:

        cv2.circle(image_points, tuple(point[0]), 1, (0, 0, 255), -1)  # Red dots for points (BGR order)

This code allows you to see how cv2.CHAIN_APPROX_NONE captures and displays all points along the contour boundary, demonstrating its precision in representing the shape. You can clearly see the green line representing the entire contour.

The red dots represent each point stored along the contour, emphasizing that cv2.CHAIN_APPROX_NONE captures every single point, even along straight lines and curves. This approach allows you to verify that all points are indeed stored and visualized, highlighting the precision and detail of cv2.CHAIN_APPROX_NONE.

cv2.CHAIN_APPROX_SIMPLE

This method compresses the representation of the contour by removing redundant points along horizontal, vertical, and diagonal straight segments. It only stores the endpoints of each segment, effectively approximating the contour with fewer points. This significantly reduces memory usage while preserving the general shape of the contour.

It is most commonly used for typical contour detection tasks where you need the overall shape of an object but don’t require every detail along the boundary. It is suitable for detecting simple shapes (e.g., rectangles, circles) where you just need the key points to represent the structure of the contour.

For example, if you have a rectangular object, CHAIN_APPROX_SIMPLE will store only the four corners, eliminating redundant points along the edges. The following is the example code.

# Create a synthetic image with a rectangle

image = np.zeros((400, 400), dtype=np.uint8)

cv2.rectangle(image, (100, 100), (300, 300), 255, -1) # Draw a filled rectangle

# Find contours using cv2.CHAIN_APPROX_SIMPLE

contours, _ = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# Convert image to color for visualization

image_contours = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)

image_keypoints = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)

# Draw the simplified contours (keypoints only) on `image_contours`

for contour in contours:

    # Draw contours in green

    cv2.drawContours(image_contours, [contour], -1, (0, 255, 0), 2)

# Draw keypoints (points of the contour) on `image_keypoints`

for contour in contours:

    for point in contour:

        # Draw key points (red dots) for each contour

        cv2.circle(image_keypoints, tuple(point[0]), 5, (0, 0, 255), -1)

The above code generates the following output.

For a simple shape like a rectangle, cv2.CHAIN_APPROX_SIMPLE reduces the contour points to just the corners, effectively showing the efficiency of this method. By eliminating redundant points, this method reduces memory usage, which is ideal for scenarios where the overall shape is more important than the exact boundary details.

cv2.CHAIN_APPROX_TC89_L1

This method uses the Teh-Chin chain approximation algorithm, which approximates the contour using fewer points compared to CHAIN_APPROX_NONE.

It is an intermediate method that attempts to strike a balance between contour accuracy and the number of points. CHAIN_APPROX_TC89_L1 provides an optimized representation that uses fewer points than CHAIN_APPROX_NONE but may retain more detail than CHAIN_APPROX_SIMPLE.

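This method is useful when you need optimized memory usage but still want the contour to follow the boundary closely. How its point count compares with CHAIN_APPROX_SIMPLE depends on the shape, so it is worth checking on your own images.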

For example, if you need a smooth representation of contours for tracking or for applications that involve simplification of wavy edges, CHAIN_APPROX_TC89_L1 can be a suitable choice. The following is the example code.

# Create a synthetic image with a wavy contour (e.g., sinusoidal wave shape)

image = np.zeros((400, 400), dtype=np.uint8)

# Draw a wavy contour using a sinusoidal function

for x in range(50, 350):

    y = int(200 + 50 * np.sin(x * np.pi / 50))

    cv2.circle(image, (x, y), 2, 255, -1)

# Find contours using cv2.CHAIN_APPROX_TC89_L1

contours, _ = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_TC89_L1)

# Convert the image to color for different visualizations

image_contour = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) # Image for contours

image_points = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) # Image for points

# Draw the contours on the contour image

for contour in contours:

    cv2.drawContours(image_contour, [contour], -1, (0, 255, 0), 2)  # Draw contours in green

# Draw the points on the points image

for contour in contours:

    for point in contour:

        cv2.circle(image_points, tuple(point[0]), 1, (0, 0, 255), -1)  # Red dots for key points

This method retains a good amount of detail while using fewer points than cv2.CHAIN_APPROX_NONE. It is especially useful for wavy or irregular shapes where full detail is not needed but significant simplification is not ideal. The result shows how cv2.CHAIN_APPROX_TC89_L1 provides a smooth and optimized contour representation suitable for applications where both memory efficiency and contour accuracy are important.

cv2.CHAIN_APPROX_TC89_KCOS

Similar to CHAIN_APPROX_TC89_L1, this method also uses the Teh-Chin chain approximation but provides a slightly different level of approximation compared to CHAIN_APPROX_TC89_L1. This method typically provides a slightly more aggressive reduction in the number of points while still capturing key features of the contour.

It offers a reduction in the number of points, but the exact behavior may vary slightly compared to CHAIN_APPROX_TC89_L1. This method is used when you want an even more aggressive compression of contour points than CHAIN_APPROX_TC89_L1 and need to retain only the most critical points.

For example, when memory and speed are crucial, and the contours are not very complex, CHAIN_APPROX_TC89_KCOS can provide a good trade-off between efficiency and contour detail. The following is the example code.

# Create a synthetic image with a wavy contour (e.g., sinusoidal wave shape)

image = np.zeros((400, 400), dtype=np.uint8)

# Draw a wavy contour using a sinusoidal function

for x in range(50, 350):

    y = int(200 + 50 * np.sin(x * np.pi / 50))

    cv2.circle(image, (x, y), 2, 255, -1)

# Find contours using cv2.CHAIN_APPROX_TC89_KCOS

contours, _ = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_TC89_KCOS)

# Convert the image to color for different visualizations

image_contour = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) # Image for contours

image_points = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) # Image for points

# Draw the contours on the contour image

for contour in contours:

    cv2.drawContours(image_contour, [contour], -1, (0, 255, 0), 2)  # Draw contours in green

# Draw the points on the points image

for contour in contours:

    for point in contour:

        cv2.circle(image_points, tuple(point[0]), 1, (0, 0, 255), -1)  # Red dots for key points

This method provides a more aggressive reduction in contour points compared to cv2.CHAIN_APPROX_TC89_L1, suitable for applications where a minimal number of points is needed while retaining the general shape. By reducing the number of points further, this method optimizes memory usage and processing speed, ideal for scenarios where the contour does not need to be highly detailed.

Contour approximation methods are important because they help control the level of detail used to represent a contour's shape. By choosing an appropriate approximation method, you can balance computational efficiency against the precision required for a given application. This flexibility is particularly useful where the level of contour detail can impact performance and accuracy.

Conclusion

In this blog post we have learnt what image contouring is, how it works and different contour retrieval modes and contour approximation methods. Image contouring is a fundamental tool in computer vision that enables the detection and analysis of object boundaries.

Image contouring simplifies object representation and aids in segmentation and shape analysis. It plays an important role in various fields, including robotics, medical imaging, quality control, and object recognition.

Image contouring helps machines understand the shapes and boundaries of objects in an image. It provides a basis for many advanced vision-based applications and enables more intelligent analysis of visual data. Roboflow offers a no-code solution for image contouring. To learn more, check out the blog post How to Identify Objects with Image Contouring.

All code for this blog post is available in this notebook.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Oct 30, 2024). What Is Image Contouring?. Roboflow Blog: https://blog.roboflow.com/image-contouring/