Here at Roboflow, our aim is to make the world programmable through computer vision. The field spans two broad families of methods: classical techniques such as edge detection, template matching, and hand-crafted feature detectors (SIFT, ORB), and modern approaches such as object detection, segmentation models, and foundation models like SAM3, which performs zero-shot segmentation without task-specific training.

Historically, combining these paradigms required separate codebases, careful data conversions, and deep expertise in both. Roboflow Workflows changes that, seamlessly integrating classical and modern tools into pipelines that run at the edge faster than you can say Dosovitskiy.

In the following sections, we leverage classical and modern computer vision tools to solve a unique challenge: localizing a drone without a GPS sensor. We discuss the problem conceptually, explore situations where GPS-denied navigation becomes necessary, and demonstrate how Roboflow makes problems like this surprisingly approachable.

The corresponding workflow for solving this problem can be found here, in case you prefer to work interactively while reading.

The Problem: When GPS Fails

GPS has become so ubiquitous that we rarely consider what happens when it's unavailable, yet GPS denial is more common than most people realize. In dense urban canyons, signals bounce off buildings before reaching the receiver, and these multipath errors can produce position estimates tens of meters from reality. Dense tree cover attenuates the signal enough that a phone can lose its GPS fix on a wooded trail. Indoor environments and tunnels can block satellite signals entirely.

For autonomous drones, GPS denial is more than an inconvenience; it can end a mission. Search and rescue operations in mountainous terrain, infrastructure inspection beneath bridges, agricultural monitoring in dense orchards, and defense applications in contested electromagnetic environments, where signals may be jammed or spoofed, all demand positioning solutions that don't depend on satellite infrastructure.

The question becomes: How do you determine where you are using only what you can see?

The Insight: Visual Localization via Map Matching

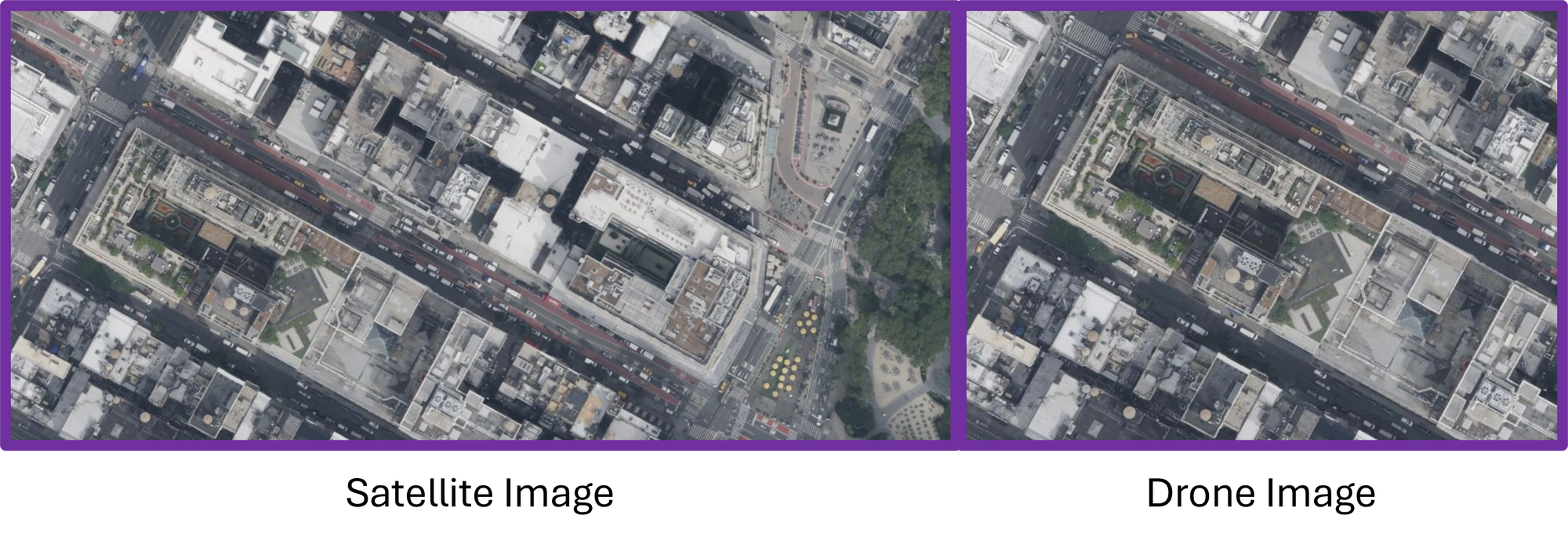

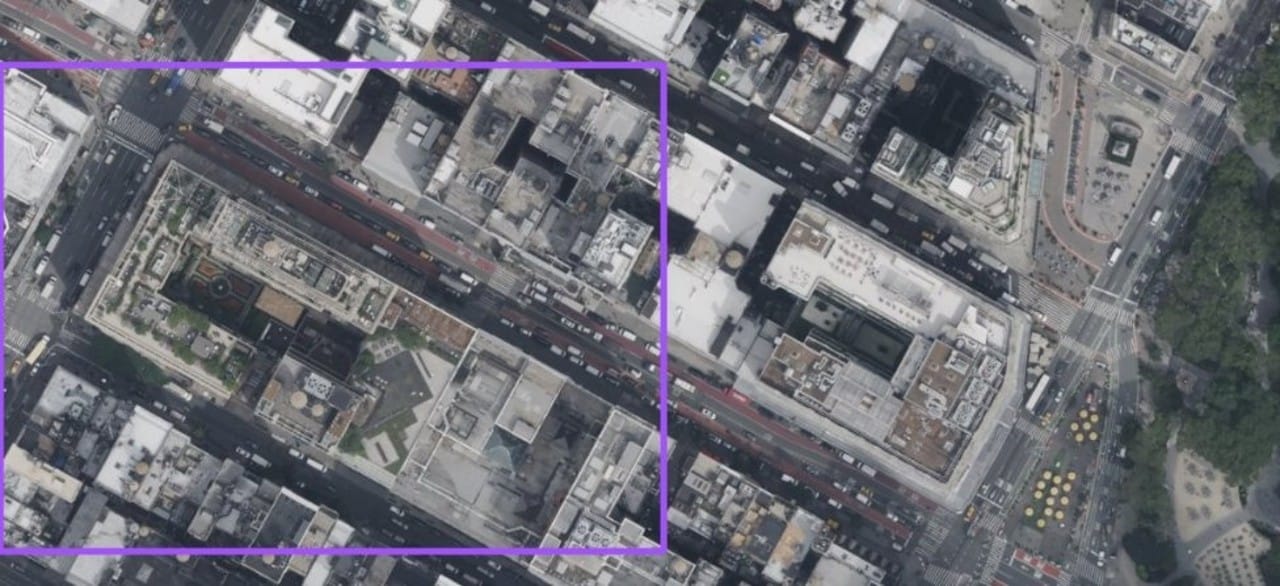

The core insight is elegantly simple: if a drone captures a top-down image of the terrain below, and we have geotagged satellite imagery of the region stored on an onboard edge device, we can find where the drone's view appears within the satellite map. The pixel coordinates of that match translate directly to geographic coordinates via the satellite image's georeferencing metadata. No GPS required.
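For a north-up satellite tile, the pixel-to-geographic conversion is a simple affine transform. The sketch below assumes the tile's georeferencing is stored as a GDAL-style six-element geotransform `(x_origin, pixel_width, row_rotation, y_origin, column_rotation, pixel_height)`; the source does not say which georeferencing format the workflow uses, and `pixel_to_geo` / `geo_to_pixel` are hypothetical names.

```python
# Hypothetical helpers; assumes a GDAL-style geotransform tuple gt where
#   x_geo = gt[0] + col * gt[1] + row * gt[2]
#   y_geo = gt[3] + col * gt[4] + row * gt[5]
# For a north-up WGS84 tile, x_geo is longitude, y_geo is latitude, and gt[5] < 0.
# (col, row) use the corner convention: (0.5, 0.5) is the centre of the top-left pixel.


def pixel_to_geo(col, row, gt):
    """Map a (col, row) pixel position to (x_geo, y_geo) map coordinates."""
    x_geo = gt[0] + col * gt[1] + row * gt[2]
    y_geo = gt[3] + col * gt[4] + row * gt[5]
    return x_geo, y_geo


def geo_to_pixel(x_geo, y_geo, gt):
    """Invert the affine transform, e.g. to locate a prior position estimate inside the tile."""
    det = gt[1] * gt[5] - gt[2] * gt[4]
    dx, dy = x_geo - gt[0], y_geo - gt[3]
    col = (gt[5] * dx - gt[2] * dy) / det
    row = (-gt[4] * dx + gt[1] * dy) / det
    return col, row


# Usage: a north-up tile whose top-left corner sits at (lon 10.0, lat 50.0), 0.001 degrees per pixel.
gt = (10.0, 0.001, 0.0, 50.0, 0.0, -0.001)
lon, lat = pixel_to_geo(115, 80, gt)
```

In practice the geotransform would be read from the GeoTIFF itself (GDAL exposes it via `GetGeoTransform()`) rather than hard-coded.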

This is conceptually identical to how humans navigate with paper maps: you observe landmarks around you, find those same landmarks on the map, and triangulate your position. The challenge lies in making this process robust to the significant differences between a drone's camera view and satellite imagery: varying illumination, seasonal changes, altitude differences affecting scale, and rotational ambiguity.

Raw pixel matching fails catastrophically under these variations. The same building photographed in morning light versus afternoon shadow produces wildly different pixel values. This is where the hybrid approach becomes essential: we use modern computer vision to extract semantic features that are largely invariant to these nuisance factors, then apply classical computer vision to perform precise geometric matching.

The Solution: SAM3 Meets Template Matching

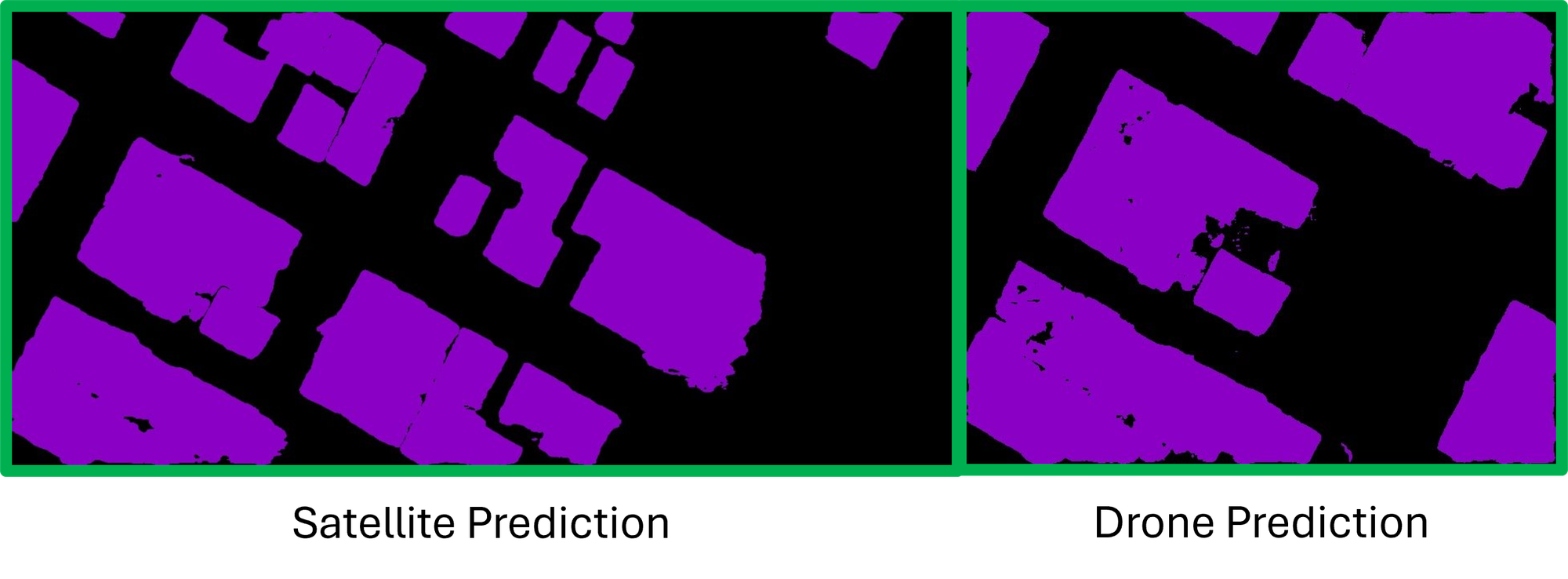

Our approach combines two techniques from opposite ends of the computer vision timeline. SAM3, Meta's foundation model for segmentation, provides zero-shot, prompt-driven detection and segmentation: it can segment buildings, roads, vegetation, and water bodies without being fine-tuned on aerial imagery. Template matching, a technique dating to the 1970s, slides a template image across a search image, computing a similarity score at each position to find the best geometric alignment.
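To make "slide a template and score every position" concrete, here is a deliberately naive NumPy sketch of zero-mean normalized cross-correlation, the same score OpenCV calls `TM_CCOEFF_NORMED`. It is for illustration only (`ncc_map` is a hypothetical name): it materializes every window, so memory and time grow with search size times template size.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ncc_map(search, template):
    """Zero-mean normalized cross-correlation of template at every valid position in search.

    Output shape is (H - h + 1, W - w + 1); entry [r, c] scores the window whose top-left is (r, c).
    """
    t = template.astype(np.float64)
    t = t - t.mean()
    t_energy = np.sqrt((t * t).sum())
    # View of every h x w window: shape (H - h + 1, W - w + 1, h, w), no copy yet.
    windows = sliding_window_view(search.astype(np.float64), t.shape)
    # Subtracting each window's mean allocates the full 4D array; fine for a demo, not for real tiles.
    centred = windows - windows.mean(axis=(-2, -1), keepdims=True)
    numerator = (centred * t).sum(axis=(-2, -1))
    denominator = np.sqrt((centred * centred).sum(axis=(-2, -1))) * t_energy
    scores = np.zeros_like(numerator)
    # Flat windows (or a flat template) have zero variance; leave their score at 0.
    np.divide(numerator, denominator, out=scores, where=denominator > 0)
    return scores


# Usage: cut a template out of a random binary "map" and recover where it came from.
rng = np.random.default_rng(0)
search = ((rng.random((100, 100)) > 0.5) * 255).astype(np.uint8)
template = search[30:50, 40:60]
scores = ncc_map(search, template)
best_row, best_col = np.unravel_index(np.argmax(scores), scores.shape)
```

Scores lie in [-1, 1], and an exact copy of the template scores 1, which is what makes the peak value usable as a confidence signal.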

The pipeline works as follows. The drone captures an image from its downward-facing camera. Simultaneously, we identify a candidate region in our stored satellite data, perhaps based on the last known GPS fix before signal loss or on dead reckoning from IMU integration. SAM3 processes both images identically, with the same prompts, producing segmentation masks that label pixels by semantic category. These masks are then converted to binary representations: building pixels become foreground (white in a conventional binary mask; purple in our workflow's visualization) and everything else becomes black. The exact colors don't matter as long as both images use the same scheme. Now, instead of matching raw imagery that is sensitive to lighting and texture, we're matching abstract semantic layouts. A building's footprint looks the same whether photographed at dawn or dusk.
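Once the segmentation output is in array form, the conversion step is short. The sketch below assumes each detected building arrives as a boolean mask with the image's height and width, stacked as `(N, H, W)` (a common layout, but an assumption here), and also shows how a colored rendering such as purple-on-black collapses back to 0/255. The candidate-window helper, and its assumption that the prior position is already expressed in tile pixels, are hypothetical.

```python
import numpy as np


def masks_to_binary(instance_masks, height, width):
    """Union an (N, H, W) stack of boolean instance masks into one 0/255 uint8 image."""
    masks = np.asarray(instance_masks, dtype=bool).reshape(-1, height, width)
    return np.where(masks.any(axis=0), 255, 0).astype(np.uint8)


def rendering_to_binary(rgb, threshold=0):
    """Treat any non-black pixel of a colored mask rendering (e.g. purple on black) as foreground."""
    return np.where(np.asarray(rgb).max(axis=-1) > threshold, 255, 0).astype(np.uint8)


def crop_candidate_window(reference_mask, center_col, center_row, radius_px):
    """Cut a square search window around a prior position estimate (last GPS fix, IMU dead reckoning).

    Returns the window and its (col, row) offset; add the offset to any match found inside the window
    to get coordinates in the full satellite tile.
    """
    h, w = reference_mask.shape[:2]
    c0 = max(0, int(center_col) - radius_px)
    r0 = max(0, int(center_row) - radius_px)
    c1 = min(w, int(center_col) + radius_px)
    r1 = min(h, int(center_row) + radius_px)
    return reference_mask[r0:r1, c0:c1], (c0, r0)
```

Applying the same conversion to both the drone view and the satellite window is what keeps the two masks comparable.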

The binary mask from the drone view becomes our template. The binary mask from the satellite reference becomes our search image. Classical template matching via normalized cross-correlation finds where the template best aligns within the search space. The match coordinates map to latitude and longitude through the satellite image's geotag, completing the localization.

Why Neither Approach Works Alone

It's worth pausing to understand why this hybrid architecture outperforms either approach in isolation.

Pure classical approaches, e.g., matching raw imagery or hand-crafted features like SIFT keypoints, struggle with the domain gap between drone and satellite views. Feature detectors tuned for one imaging modality produce sparse or inconsistent keypoints in another. Template matching on raw pixels fails when shadows shift or seasons change foliage colors.

Pure modern approaches, e.g., training an end-to-end neural network to directly regress position from imagery, require massive paired datasets of drone views with ground truth coordinates. Such datasets are expensive to collect, may not generalize to new terrain, and produce black-box predictions without interpretable confidence measures. When the network fails, debugging is nearly impossible.

The hybrid approach leverages each paradigm's strengths. SAM3's zero-shot capability means it generalizes to novel environments without retraining. Its semantic understanding abstracts away lighting and texture variations that confound classical methods. Template matching provides geometric precision with interpretable confidence scores; when the correlation peak is weak or ambiguous, we know the match is unreliable. The system degrades gracefully rather than failing silently.
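One simple way to turn the correlation map into an interpretable reliability signal, assumed here rather than documented for the workflow, is to compare the best peak against the best score found outside a small neighbourhood around it. A strong peak with a clear margin over the runner-up suggests a unique match; two similar peaks suggest repetitive structure, such as a row of identical warehouses. The function names and thresholds are hypothetical and would need tuning on real data.

```python
import numpy as np


def peak_and_runner_up(score_map, exclusion_radius=10):
    """Return ((row, col), best, runner_up) for a 2D correlation map.

    The runner-up is the highest score outside a (2r+1) x (2r+1) box centred on the best peak,
    so the peak's own shoulders are not counted as a competing match.
    """
    scores = np.asarray(score_map, dtype=np.float64)
    row, col = np.unravel_index(np.argmax(scores), scores.shape)
    best = scores[row, col]
    others = scores.copy()
    r = exclusion_radius
    others[max(0, row - r): row + r + 1, max(0, col - r): col + r + 1] = -np.inf
    runner_up = others.max()  # -inf if the exclusion box covers the whole map
    return (int(row), int(col)), float(best), float(runner_up)


def is_reliable(best, runner_up, min_score=0.5, min_margin=0.1):
    """Hypothetical acceptance rule: the peak must be strong AND clearly better than any rival."""
    return best >= min_score and (best - runner_up) >= min_margin
```

Using a difference rather than a ratio keeps the rule well-behaved when the runner-up score is zero or negative, which normalized cross-correlation allows.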

Roboflow Workflows: Making the Complex Accessible

Implementing this pipeline from scratch requires integrating multiple frameworks: PyTorch or ONNX for SAM3 inference, OpenCV or NumPy fast Fourier transforms (FFTs) for template matching, and custom code for mask visualization and coordinate transforms. The resulting system is brittle, difficult to deploy on edge hardware, and painful to modify when requirements change.
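For a sense of what the hand-rolled FFT route involves: the correlation numerator is computed for every position at once with FFTs, and the per-window normalization terms come from integral images (running sums). This is a sketch assuming 2D grayscale or 0/255 mask inputs, and `fft_ncc` is a hypothetical name. It computes the same zero-mean normalized score as OpenCV's `TM_CCOEFF_NORMED`, up to floating-point error and the handling of flat windows.

```python
import numpy as np


def _window_sums(a, h, w):
    """Sum of every h x w window of a (valid positions only), via an integral image."""
    ii = np.zeros((a.shape[0] + 1, a.shape[1] + 1))
    ii[1:, 1:] = a.cumsum(axis=0).cumsum(axis=1)
    return ii[h:, w:] - ii[:-h, w:] - ii[h:, :-w] + ii[:-h, :-w]


def fft_ncc(search, template):
    """Zero-mean NCC map of shape (H - h + 1, W - w + 1), numerator computed with FFTs."""
    img = search.astype(np.float64)
    t = template.astype(np.float64)
    H, W = img.shape
    h, w = t.shape
    n = h * w
    t0 = t - t.mean()  # zero-mean template: the window mean then drops out of the numerator
    # Cross-correlation theorem: IFFT(FFT(img) * conj(FFT(t0))) is the circular correlation;
    # its top-left (H - h + 1) x (W - w + 1) block has no wrap-around and equals the valid correlation.
    spectrum = np.fft.rfft2(img) * np.conj(np.fft.rfft2(t0, s=(H, W)))
    numerator = np.fft.irfft2(spectrum, s=(H, W))[: H - h + 1, : W - w + 1]
    s1 = _window_sums(img, h, w)
    s2 = _window_sums(img * img, h, w)
    window_energy = np.clip(s2 - s1 * s1 / n, 0.0, None)  # n * local variance
    denominator = np.sqrt(window_energy * (t0 * t0).sum())
    scores = np.zeros_like(numerator)
    np.divide(numerator, denominator, out=scores, where=denominator > 1e-9)
    return scores


# Usage: recover a template cut from a random binary map.
rng = np.random.default_rng(2)
search = ((rng.random((64, 80)) > 0.5) * 255).astype(np.uint8)
template = search[10:26, 20:44]
scores = fft_ncc(search, template)
```

The FFT cost depends on the search size rather than search size times template size, which is why this route pays off for large satellite tiles.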

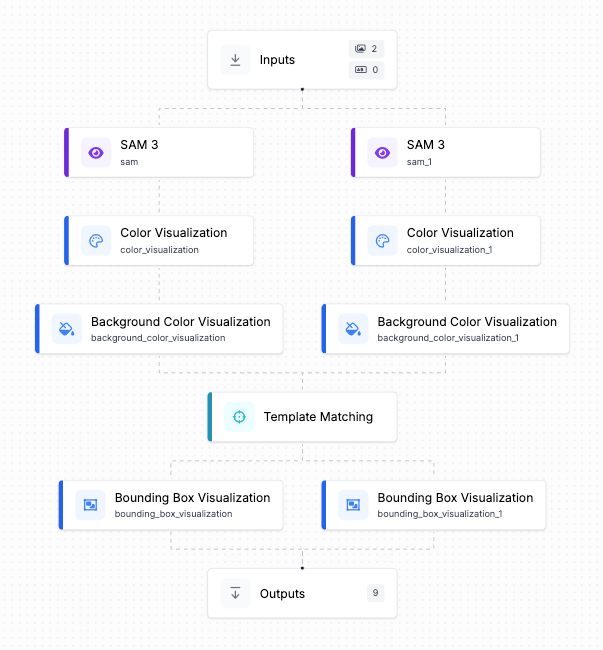

Roboflow Workflows abstracts this complexity into composable blocks. The entire GPS-denied localization pipeline becomes a visual graph:

- Image Input blocks ingesting the drone camera feed and satellite reference tile.

- SAM3 Inference blocks performing zero-shot segmentation on both inputs with consistent prompting.

- Color Visualization and Background Color Visualization blocks transforming segmentation predictions into binary masks suitable for classical processing.

- Template Matching blocks executing normalized cross-correlation and returning match coordinates with confidence scores.

Each block is easily configurable and modular. Swapping SAM3 for a different segmentation model requires changing one block. Deploying from cloud to a Jetson Orin edge device happens with a toggle.

Here is the deeper point: Roboflow Workflows doesn't just make GPS-denied localization easier. It makes the entire category of hybrid classical-modern pipelines accessible to engineers who shouldn't need to be experts in both paradigms simultaneously.

How to Localize a Drone Without a GPS Sensor Conclusion

GPS-denied localization exemplifies a broader pattern: the most robust solutions often combine techniques from different eras rather than betting everything on the latest architecture. Roboflow Workflows makes these hybrid approaches practical by abstracting modern and classical computer vision techniques into composable blocks with consistent interfaces, letting engineers focus on the problem rather than the plumbing connecting PyTorch to OpenCV. If you're working on challenges that require both semantic understanding and geometric precision, explore what Roboflow Workflows can do for your pipeline. Sometimes the best solution isn't choosing between old and new; it's combining them intelligently.


Cite this Post

Use the following entry to cite this post in your research:

Alessandro Dos Santos. (Jan 23, 2026). How to Localize a Drone Without a GPS Sensor. Roboflow Blog: https://blog.roboflow.com/localize-a-drone-without-a-gps-sensor/