Precision and recall are two key metrics that machine learning and computer vision practitioners use to evaluate how well a model works. With a firm understanding of both, you'll be better able to judge how well your trained model solves the problem you want to solve.

In this blog post, we're going to help you learn how to:

- Understand how precision and recall affect your model's performance

- Choose whether to optimize for precision or recall when time is limited

- Communicate to stakeholders how your computer vision solution relates to their problem

Let's get started!

What is Precision? What can we learn from Precision?

A Roboflow user trained a computer vision model to detect when their cat used the toilet. Whenever the cat is detected, a signal starts a timer that in turn triggers a toilet flush.

What do we lose with a missed detection versus a false detection?

The consequence of not detecting the cat is that the toilet doesn't flush, which is not too serious an issue.

A false detection will lead to unwarranted flushing, which results in wasted water, increased utility bills, and potentially scaring the cat away from using the toilet (which defeats the purpose of the project).

In this example, we need a model with high precision so that the toilet flushes only at appropriate times. That means focusing on reducing the number of false positives, since each false positive wastes water.

What is Recall? What does Recall tell us?

Let's imagine we operate a bootstrapped startup. We have a customer in need of a computer vision model capable of detecting signs of an oil pipeline bursting. These visual signals could be leaks coming out of the pipe, the walls of the pipe having unusual warping, and misaligned pipe connectors. We have a short amount of time and need to deploy quickly to win the contract for further development.

What decisions can we make to demonstrate that computer vision can feasibly solve this problem, and what do we lose when the model provides a false detection vs a missed detection?

In this example, detecting anything at all matters more than every detection being accurate. A false detection does relatively little harm (somebody has to check the pipe monitoring cameras, a one-minute operation), while a missed detection could lead to huge environmental damage and financial losses.

Initial work should therefore focus on creating a high-recall minimum viable product (MVP), with an emphasis on reducing the rate of false negatives, since a false negative represents a missed leak.

Now that we understand the intuitive relationship between precision and recall, let's break it down.

Precision vs Recall: Compared and Contrasted

A computer vision model's predictions can have one of four outcomes (we want to maximize the true outcomes and minimize the false ones):

- True Positive (TP): a correct detection and classification, "the model drew the right sized box on the right object"

- False Positive (FP): an incorrect detection and classification, "the model drew a box on an object it wasn't supposed to". This occurs most often when objects look similar to each other, and can be reduced by labeling the look-alike objects that confuse the model.

- True Negative (TN): The object was not in the image and the model did not draw a box, "there was nothing there and it didn't think there was something there".

- False Negative (FN): The object was in the image and the model did not draw a box, "there was something there but the model missed it." This occurs most often when the object appears at an unusual angle, in unusual lighting, or in a state not captured in the model's training data. It can be reduced by increasing how well the object is represented in the training data.
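To make the four outcomes concrete, here is a minimal Python sketch that tallies them for a simplified, image-level version of the problem: each image either contains the object or not, and the model either draws a box or not. The helper name `tally_outcomes` and the sample labels are made up for illustration. A real object detection evaluation would also check how well each box overlaps the object, which this sketch skips.

```python
def tally_outcomes(ground_truth, predictions):
    """Count TP, FP, TN, and FN for paired image-level labels.

    ground_truth[i] is True if the object is really in image i.
    predictions[i] is True if the model drew a box on image i.
    Hypothetical helper: box overlap is ignored in this sketch.
    """
    if len(ground_truth) != len(predictions):
        raise ValueError("ground_truth and predictions must be the same length")

    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for actual, predicted in zip(ground_truth, predictions):
        if predicted and actual:
            counts["TP"] += 1  # box drawn, object present
        elif predicted and not actual:
            counts["FP"] += 1  # box drawn, nothing there
        elif not predicted and actual:
            counts["FN"] += 1  # object present, model missed it
        else:
            counts["TN"] += 1  # nothing there, no box drawn
    return counts


# Made-up labels for six images.
ground_truth = [True, True, False, False, True, False]
predictions = [True, False, True, False, True, False]

outcomes = tally_outcomes(ground_truth, predictions)
print(outcomes)
```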

Let's compare recall and precision to see what each metric tells us.

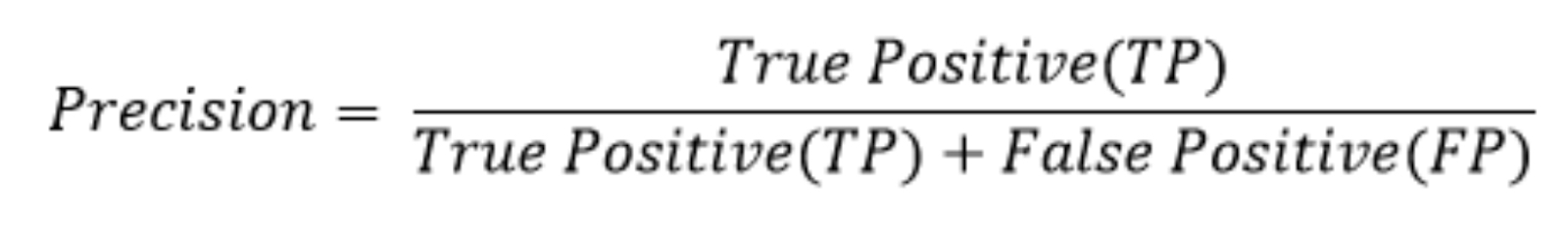

Precision formula

The formula for precision is True Positives divided by the sum of True Positives and False Positives: P = TP / (TP + FP).

Let's say we build an apple detection model and test it, counting 1,000 outcomes in total. If it correctly detects 500 apples (TP), incorrectly draws 300 boxes on things that aren't apples (FP), and fails to detect 200 apples (FN), we'd have a precision of 500/800, or 62.5%.
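The same arithmetic can be written as a short Python sketch. The function name `precision` is just a label chosen for this example, and returning 0.0 when the model makes no detections at all is an assumed convention, not something the formula itself defines.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP).

    Returns 0.0 when there are no detections at all
    (an assumed convention to avoid dividing by zero).
    """
    detections = tp + fp
    return tp / detections if detections else 0.0


# Counts from the apple example: 500 true positives, 300 false positives.
apple_precision = precision(tp=500, fp=300)
print(f"{apple_precision:.1%}")  # prints 62.5%
```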

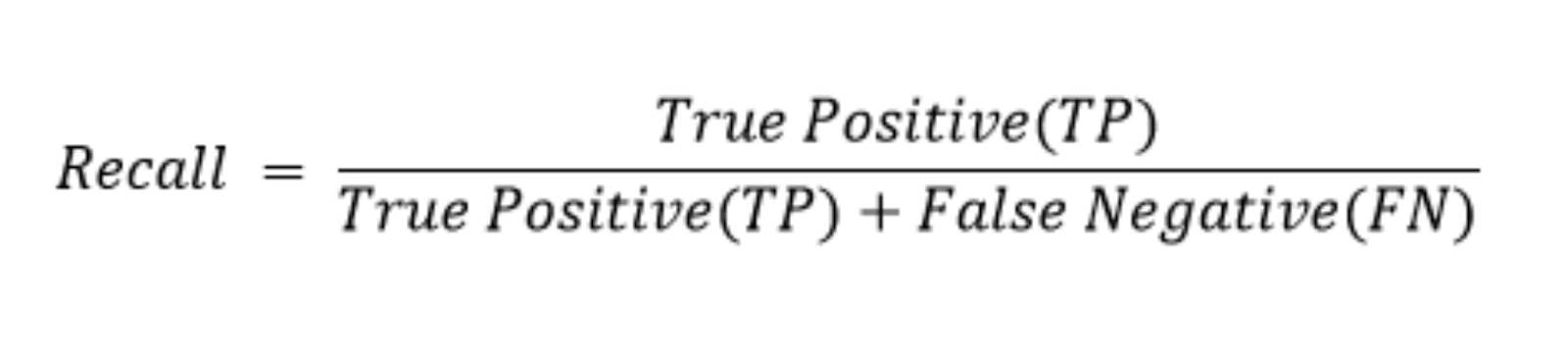

Recall formula

The formula for recall is True Positives divided by the sum of True Positives and False Negatives: R = TP / (TP + FN).

Using the same apple example from earlier (500 true positives and 200 false negatives), our model would have a recall of 500/700, or about 71%.
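Here is the matching sketch for recall, using the apple counts from the example (500 true positives, 200 false negatives). As before, the function name `recall` and the choice to return 0.0 when there are no real objects to find are assumptions made for this illustration.

```python
def recall(tp, fn):
    """Recall = TP / (TP + FN).

    Returns 0.0 when there are no real objects to find
    (an assumed convention to avoid dividing by zero).
    """
    actual_objects = tp + fn
    return tp / actual_objects if actual_objects else 0.0


# Counts from the apple example: 500 true positives, 200 false negatives.
apple_recall = recall(tp=500, fn=200)
print(f"{apple_recall:.1%}")  # prints 71.4%
```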

Evaluating precision and recall in context

A solution can only be measured relative to the problem it solves. Does an infinite energy solution with no feasible implementation solve the energy crisis?

Similarly, a computer vision model's performance metrics don't explain how well the model solves the problem it was created for.

The negative ramifications (that is, the cost) of missing a detection versus making a wrong detection provide the basis for how much to value precision versus recall.

Questions to be thinking about:

- When does precision matter and why?

- When does recall matter and why?

- If I only have enough time to optimize for one, which should I pick?
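One way to approach that last question is to put a rough cost on each kind of mistake and compare totals. The sketch below is only an illustration: the function name `total_error_cost`, the two candidate models, and every cost figure are invented, not taken from a real project. You would substitute your own estimates.

```python
def total_error_cost(fp, fn, cost_per_fp, cost_per_fn):
    """Total cost of a model's mistakes, given a cost for each error type."""
    return fp * cost_per_fp + fn * cost_per_fn


# Two hypothetical models evaluated on the same test set.
high_precision_model = {"fp": 20, "fn": 200}   # few false alarms, many misses
high_recall_model = {"fp": 300, "fn": 10}      # many false alarms, few misses

# Hypothetical costs: a false alarm costs 1 unit (someone checks a camera),
# while a miss costs 100 units (an undetected failure).
cost_per_fp = 1
cost_per_fn = 100

precision_first_cost = total_error_cost(
    **high_precision_model, cost_per_fp=cost_per_fp, cost_per_fn=cost_per_fn
)
recall_first_cost = total_error_cost(
    **high_recall_model, cost_per_fp=cost_per_fp, cost_per_fn=cost_per_fn
)

print("high-precision model cost:", precision_first_cost)  # 20020
print("high-recall model cost:", recall_first_cost)        # 1300
```

With these made-up numbers, misses are so expensive that the high-recall model is the cheaper choice. If the costs were reversed, the comparison would favor the high-precision model instead.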

If you have a robotic drone that drives around and picks up apples, getting any detection at all is probably a good thing, so you'd want to emphasize recall at the start of your dev cycle.

If you needed to hire seasonal workers and base your hiring decision on the number of apples detected, over-hiring would do more damage than under-hiring. False positives inflate the apple count, so you'd want to emphasize precision.

How to Increase Precision and Recall

Need to improve precision? We can reduce the FP rate by understanding what the model falsely identifies as an apple and adding that to the training set. If the green pears look like the green apples, label the pears!

Need to improve recall? Reduce the FN rate by adding more variations of apples at different angles and levels of proximity to the camera.

Where does mean average precision (mAP) fit into this?

Mean average precision (mAP) combines precision and recall into a single number. It tells us at a glance how well our model performs overall, and it serves as a good metric for relative performance when comparing models.

A model with 90% mAP generally performs better than a model with 60% mAP, but it's possible that the precision or recall of the 60% mAP model provides the best solution for your problem.
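As a rough illustration of how precision and recall get folded into one number, the sketch below computes average precision (AP) for a single class from a ranked list of detections, then averages AP across classes to get mAP. This is a simplified, non-interpolated variant. It assumes each detection has already been marked as a true or false positive (for example, by box overlap), and the exact procedure differs between benchmarks. The function names and data are invented for this example.

```python
def average_precision(ranked_hits, num_ground_truth):
    """Non-interpolated AP for one class.

    ranked_hits: list of bools sorted by descending model confidence,
                 True if that detection is a true positive.
    num_ground_truth: number of real objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0  # assumed convention when there is nothing to find

    true_positives = 0
    precision_sum = 0.0
    for rank, is_hit in enumerate(ranked_hits, start=1):
        if is_hit:
            true_positives += 1
            # Precision measured at the rank where recall increases.
            precision_sum += true_positives / rank
    return precision_sum / num_ground_truth


def mean_average_precision(per_class):
    """Average the per-class AP values; per_class maps name -> (hits, count)."""
    aps = [average_precision(hits, count) for hits, count in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0


# Made-up ranked detections for two classes.
per_class = {
    "apple": ([True, True, False, True], 4),  # 3 of 4 apples found
    "pear": ([True, False, False], 2),        # 1 of 2 pears found
}

apple_ap = average_precision(*per_class["apple"])
pear_ap = average_precision(*per_class["pear"])
map_score = mean_average_precision(per_class)
print(apple_ap, pear_ap, map_score)
```

Notice that false positives lower the precision terms, while missed objects keep the sum small relative to `num_ground_truth`, so both kinds of error pull the score down.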

For additional details on how mAP works, head over to our blog post on mean average precision.

Can we have it all?

In a perfect world, the computer vision models we build excel with high scores in mAP, precision, and recall--but oftentimes the iterative development process demands that we get a working solution out the door that addresses the immediate need.

How can we determine if a model performs well enough to meet the need it was developed for?

The fastest and most intuitive way would be to test the model on some new sample data. Does it properly detect the object with a tight bounding box? Does it succeed in a wide range of edge case scenarios such as the object being partially obstructed, far away, or positioned in an unusual perspective?

Given enough time and resources, you can most likely build a state-of-the-art model--but will it be ready today?

Use what you've learned in this blog post to express what you need to stakeholders and build solutions that work relative to the problems you face.

Cite this Post

Use the following entry to cite this post in your research:

Jay Lowe. (Mar 3, 2022). Precision and Recall in Machine Learning. Roboflow Blog: https://blog.roboflow.com/precision-and-recall/