Gathering a large, representative sample of images to use in a dataset for a computer vision application can be a time-consuming task, especially if you are starting from scratch. The sooner you can gather images, the sooner you can start annotating data and preparing a new version of your computer vision model.

Today we are announcing Roboflow Collect, a new application that enables you to collect data for computer vision applications. Roboflow Collect offers three ways to collect data:

- Using a webcam configured on an edge device (e.g., an NVIDIA Jetson);

- Using input from a YouTube livestream, and;

- Using a desktop webcam on macOS (for testing purposes).

You can deploy Roboflow Collect to the edge, point a camera at area(s) of interest, and task the application with collecting images at a specified interval.

In addition, you can use Roboflow Collect to gather only images that are semantically related to a text prompt or to an existing image in your dataset, using the CLIP computer vision model to measure similarity. For instance, if you only want to collect images at a rail yard where a train is present, you can do so by providing a “train” prompt.

Roboflow Collect will upload all of your data to Roboflow Annotate so you can search, manage, and annotate data for use in curating a dataset to train your model.

In this guide, we’re going to show you how to set up Roboflow Collect and use it for a computer vision application.

Without further ado, let’s get started!

Install Roboflow Collect

Before we begin, make sure you have Docker and Docker Compose installed on your system. Docker is required to run both Roboflow Collect and the inference server on which the project depends.

To install Roboflow Collect, first clone the project GitHub repository and install the required dependencies:

git clone https://github.com/roboflow/roboflow-collect

cd roboflow-collect

pip3 install -r requirements.txt

Roboflow Collect requires access to a Roboflow inference server. If you don't have one set up on your device, you can download the requisite Docker image and start a server using these commands:

CPU:

sudo docker pull roboflow/roboflow-inference-server-arm-cpu:latest

sudo docker run --net=host roboflow/roboflow-inference-server-arm-cpu:latest

GPU (NVIDIA Jetson):

sudo docker pull roboflow/inference-server:jetson

sudo docker run --net=host --gpus all roboflow/inference-server:jetson

Next, you’ll need to set some required configuration variables for use in the project. Using the export command, set environment variables for the following values:

- ROBOFLOW_PROJECT: The name of your project.

- ROBOFLOW_WORKSPACE: The name of the workspace with which your project is associated.

- ROBOFLOW_KEY: Your private workspace API key.

- INFER_SERVER_DESTINATION: The destination of the Roboflow inference server you want to use with the project.

You also need to set values for the following variables in your environment:

- SAMPLE_RATE: The interval between image samples, in seconds. Note that this number doesn't account for processing time. With a sample rate of 1 second and a processing time of 1 second, for example, an image is captured roughly every 2 seconds.

- COLLECT_ALL: Whether to collect an image at every sample interval, or only when a semantically relevant frame is captured by the camera associated with the container.

- STREAM_URL: The URL of the stream from which you want to collect images.

- CLIP_TEXT_PROMPT: A text prompt for use with CLIP, which will evaluate whether an image is semantically similar to your prompt. This will be used to decide whether an image should be uploaded to Roboflow.

These values are all optional, but you need to set at least one of them to start collecting data. We’ll talk through each of these four variables as we show examples later in this article.
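To make the split between required and optional variables concrete, here is a minimal sketch of how a script could read and validate them. The `load_config` and `parse_bool` helpers are hypothetical and are not taken from the Roboflow Collect source. The accepted boolean spellings are also an assumption.

```python
import os

REQUIRED = ["ROBOFLOW_PROJECT", "ROBOFLOW_WORKSPACE", "ROBOFLOW_KEY", "INFER_SERVER_DESTINATION"]
OPTIONAL = ["SAMPLE_RATE", "COLLECT_ALL", "STREAM_URL", "CLIP_TEXT_PROMPT"]


def parse_bool(value):
    """Treat "True", "true", "1", and "yes" as True (an assumed convention)."""
    return str(value).strip().lower() in {"1", "true", "yes"}


def load_config(env=None):
    """Read the variables described above into a plain dictionary."""
    env = os.environ if env is None else env

    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise ValueError("Missing required variables: " + ", ".join(missing))

    # At least one optional variable must be set to start collecting data.
    if not any(env.get(name) for name in OPTIONAL):
        raise ValueError("Set at least one of: " + ", ".join(OPTIONAL))

    config = {name: env[name] for name in REQUIRED}
    config["SAMPLE_RATE"] = float(env["SAMPLE_RATE"]) if env.get("SAMPLE_RATE") else None
    config["COLLECT_ALL"] = parse_bool(env.get("COLLECT_ALL", "False"))
    config["STREAM_URL"] = env.get("STREAM_URL") or None
    config["CLIP_TEXT_PROMPT"] = env.get("CLIP_TEXT_PROMPT") or None
    return config
```

Calling `load_config()` with no arguments reads from the current process environment, so the variables must be exported in the shell that launches the script.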

Collect Data at a Specified Interval

If you have a small dataset – or no images in your dataset – a good place to start is to collect all images that your camera captures at a specified interval. For example, you can collect images from a camera every second, every five seconds, etc.

Your workflow will be as follows: set up Roboflow Collect, move your camera to a place where you can gather data, then leave the script running until you have enough data for your project.

You can also use this workflow to gather more edge case images. For instance, if there is a certain location where you know you can gather images with edge cases, you can set up Collect so that your camera will gather images at a specified interval.

To collect data at a specified interval, set the following environment variables:

COLLECT_ALL=True

SAMPLE_RATE=3

This will instruct the container to collect an image every 3 seconds. The actual collection rate may be a bit slower because processing and uploading an image can take a second or two.
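The arithmetic behind that slowdown can be sketched as follows. This is an illustration only: the function names are hypothetical, and the 1.5 second processing time is an assumed figure, not a measurement from Roboflow Collect.

```python
import time


def effective_interval(sample_rate, processing_time):
    """Seconds between captures when the delay is added after processing."""
    return sample_rate + processing_time


def estimated_image_count(duration, sample_rate, processing_time):
    """Roughly how many images are collected in `duration` seconds."""
    return int(duration // effective_interval(sample_rate, processing_time))


def collect(capture, sample_rate, max_images, sleep=time.sleep):
    """Capture, then wait: the wait is added on top of the capture time."""
    for _ in range(max_images):
        capture()           # processing and upload time is spent here
        sleep(sample_rate)  # the fixed SAMPLE_RATE delay follows


print(effective_interval(3, 1.5))           # 4.5
print(estimated_image_count(3600, 3, 1.5))  # 800
```

Under these assumptions, a sample rate of 3 seconds yields about 800 images per hour rather than 1,200, which is worth factoring in when you estimate how long to leave the camera running.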

To start the application, run the following command:

python3 app.py

In the following video, we show all photos captured by the camera being uploaded to the Roboflow platform:

Collect Semantically Relevant Data Using CLIP

Roboflow Collect enables you to collect images related to other images in an existing dataset hosted on Roboflow. This is ideal if you want to improve representation of different features in your dataset.

Let’s say you are gathering photos of fruits for use in a fruit inventory management system. You can now set custom tags in Roboflow to indicate that you want to gather more images like a specific one – for example, you can gather more photos of bananas.

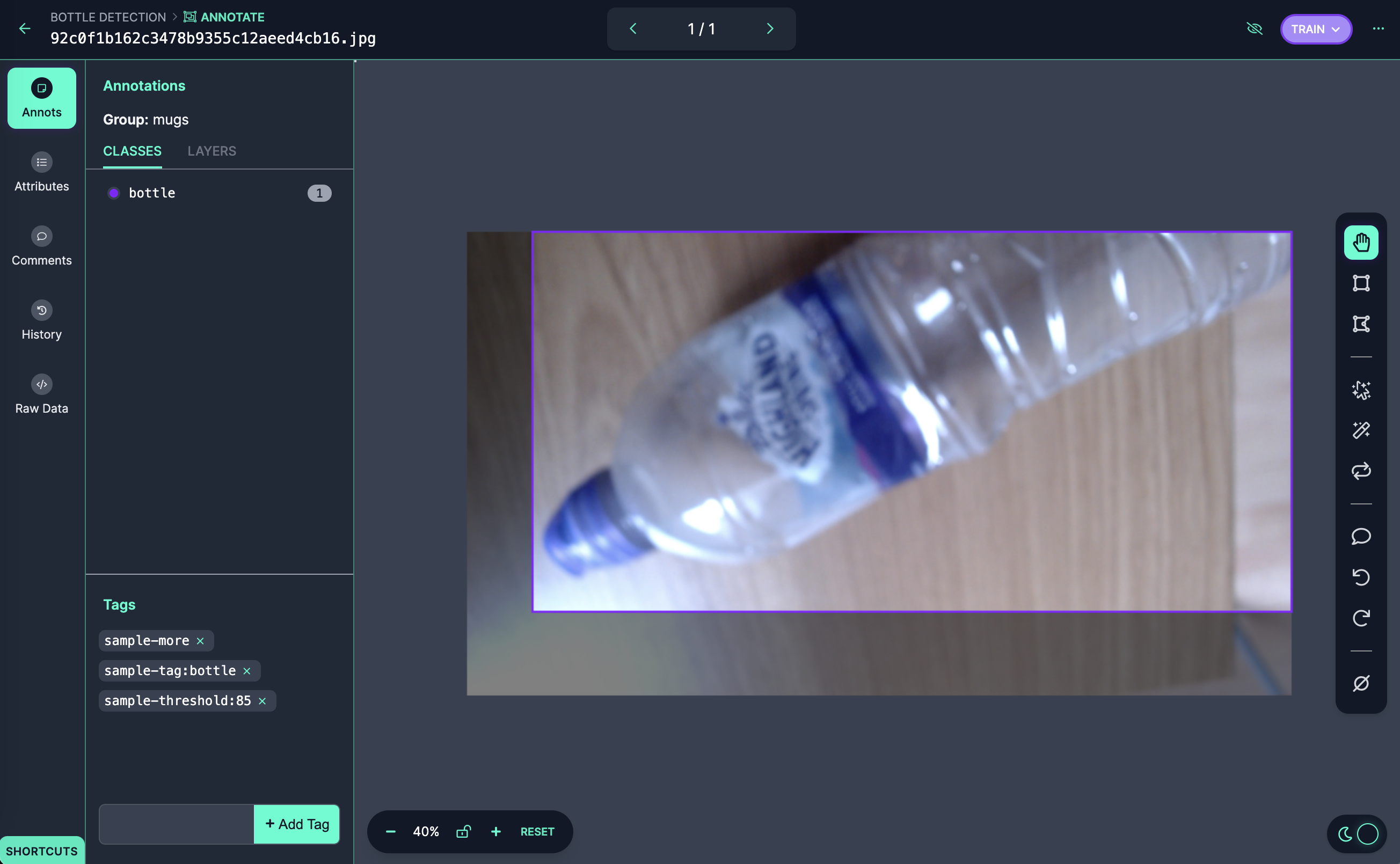

To gather semantically related images, first go to the Roboflow Annotate dashboard for your project. Then, select an image that is similar to the images you want to collect. You will see the annotation interface:

Next, click “Tags” in the sidebar. You will need to set three tags:

- sample-more: This tells Roboflow Collect you want to gather more images like the one specified.

- sample-threshold:85: This tells Roboflow Collect you only want to gather images when they are at least 85% similar to the image you have saved. We recommend starting with 85% and tuning as necessary.

- sample-tag:bottle: This instructs Roboflow Collect to add a tag called “bottle” to any images uploaded that are related to the one with which the tag is associated.

With these three tags, you will instruct Roboflow Collect to:

- Find images related to the one above (a bottle);

- Only upload images that are at least 85% similar to the image in your dataset;

- Add the tag “bottle” to any uploaded images.
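The three tags follow a simple `name` or `name:value` pattern. As a rough sketch of how such tags could be turned into a sampling rule, consider the hypothetical `parse_sampling_tags` helper below. It is not part of Roboflow Collect, and the dictionary keys are invented for illustration.

```python
def parse_sampling_tags(tags):
    """Turn tags like "sample-threshold:85" into a small rule dictionary."""
    rule = {"sample_more": False, "threshold": None, "tag": None}
    for raw in tags:
        # Split "name:value" on the first colon; value is "" when absent.
        name, _, value = raw.partition(":")
        if name == "sample-more":
            rule["sample_more"] = True
        elif name == "sample-threshold":
            rule["threshold"] = int(value) / 100  # 85 -> 0.85
        elif name == "sample-tag":
            rule["tag"] = value
    return rule


rule = parse_sampling_tags(["sample-more", "sample-threshold:85", "sample-tag:bottle"])
print(rule)
# {'sample_more': True, 'threshold': 0.85, 'tag': 'bottle'}
```

Unrelated tags on the same image are ignored, so existing tags in your project would not interfere with a parser written this way.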

Finally, we need to make sure the COLLECT_ALL variable is set to false:

COLLECT_ALL=False

This ensures that images will only be saved if they are semantically related to the one(s) selected in your dataset.
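This post does not specify how the similarity percentage is computed. A common approach with CLIP is to compare embedding vectors using cosine similarity, and the sketch below assumes that approach. The function names and the three-element toy vectors are hypothetical; real CLIP embeddings have hundreds of dimensions and come from the model itself.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as unrelated
    return dot / (norm_a * norm_b)


def should_upload(frame_embedding, reference_embedding, threshold=0.85):
    """Keep a frame only if it is similar enough to the reference image."""
    return cosine_similarity(frame_embedding, reference_embedding) >= threshold


reference = [1.0, 0.0, 0.0]  # stand-in for the tagged image's embedding
print(should_upload([0.9, 0.1, 0.0], reference))  # True
print(should_upload([0.0, 1.0, 0.0], reference))  # False
```

The same comparison would apply to a text prompt, since CLIP places text and images in a shared embedding space.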

Let’s run Roboflow Collect:

python3 app.py

When the application starts, you will see a few messages printed to the console that show the tags that can be added to images if they are relevant to the ones selected in the Roboflow dashboard.

Then, we'll start saving images that are semantically related to the ones for which we added tags in the Roboflow Annotate dashboard:

Collect Semantically Relevant Data Using a CLIP Text Prompt

You can use CLIP with Roboflow Collect for zero-shot classification. You can set a configuration option that tells Roboflow Collect you only want to find images that are semantically related to a given text prompt.

In the example earlier, we set custom tags on an image in our dataset to collect more images of bottles. This was ideal because our dataset already contained an image of a bottle. But if we had no images (or few representative images), this method of collection would not be appropriate.

Instead, we can set the following environment variable:

CLIP_TEXT_PROMPT="bottle"

This will tell Roboflow Collect to only collect images related to the “bottle” prompt. Note that this method may not always find images related to your prompt, especially if you are looking for specific, uncommon features. The prompt “a small scratch in the top left corner of a car” would likely not work as well as a more generic prompt such as “car”.

With this value set, we can run Roboflow Collect:

python3 app.py

Here’s a video showing images being added when a webcam is pointed at a bottle:

Collect Data from a YouTube Stream

Roboflow Collect supports retrieving data from both cameras and YouTube livestreams. This is useful for gathering more data for a dataset that you may otherwise be unable to collect.

For example, if you are building a large dataset for a model that monitors safety hazards at railway stations, you could use livestreams from multiple stations to help your model generalize to different railway station environments.

To use this feature, we first need a livestream with which to work. For this example, we’ll use a livestream from a York railway station. Next, we need to retrieve the livestream link associated with the stream. We can do this by copying the URL of the page, such as this one:

https://www.youtube.com/watch?v=1fwCoChGAUo

Then, rewrite it in this structure (youtu.be + the video ID):

https://youtu.be/1fwCoChGAUo

Next, we can set our new URL as an environment variable:

STREAM_URL=https://youtu.be/1fwCoChGAUo

You can use this configuration option with the COLLECT_ALL, CLIP_TEXT_PROMPT, and similarity tag features we discussed earlier. For this example, we’ll set COLLECT_ALL and a sample rate of 1 second. This will instruct Roboflow Collect to sample one frame every second (with a delay for processing, so actual sample times will be a bit further apart than 1 second) and save it:

SAMPLE_RATE=1

COLLECT_ALL=True

Here’s a video of the stream running, with Roboflow Collect collecting and uploading a new image to Roboflow every second:
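If you are working with several streams, the URL rewrite described earlier in this section (watch page URL to youtu.be + video ID) can be automated. The sketch below uses only Python's standard library. The `to_short_youtube_url` helper is hypothetical and is not part of Roboflow Collect.

```python
from urllib.parse import parse_qs, urlparse


def to_short_youtube_url(watch_url):
    """Convert a youtube.com/watch?v=ID URL into https://youtu.be/ID."""
    query = parse_qs(urlparse(watch_url).query)
    video_ids = query.get("v")
    if not video_ids:
        raise ValueError("No video ID found in URL: " + watch_url)
    return "https://youtu.be/" + video_ids[0]


print(to_short_youtube_url("https://www.youtube.com/watch?v=1fwCoChGAUo"))
# https://youtu.be/1fwCoChGAUo
```

The result is the value you would assign to STREAM_URL.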

Conclusion

Roboflow Collect provides a range of ways in which you can collect data for your computer vision models. Using Roboflow Collect, you can collect images:

- At a specified interval;

- Related to images already in your dataset;

- Using CLIP as a zero-shot classifier, and;

- From a YouTube live stream.

If you have any questions about how to use Roboflow Collect, or suggestions on how we can improve the project, we encourage you to leave an Issue on the project GitHub repository or on the Roboflow Discussion forums.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (May 12, 2023). Collect Images at the Edge with Roboflow Collect. Roboflow Blog: https://blog.roboflow.com/roboflow-collect/