Roboflow Inference is a drop-in replacement for the Hosted Inference API that you can deploy on your own hardware. Its InferencePipeline interface is built for streaming and is likely the best choice for real-time use cases. It is an asynchronous interface that can consume many kinds of video sources, including local devices (like webcams), RTSP video streams, and video files. With this interface, you define a video source and one or more sinks, which are callbacks that receive the predictions for each frame.
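InferencePipeline.init takes a single on_prediction callable as its sink. If you want several sinks (for example, on-screen rendering plus logging), one option is to wrap them in one function. The fan_out helper below is a hypothetical plain-Python sketch, not part of the inference package.

```python
from typing import Any, Callable

# A sink receives the predictions for one frame plus the frame itself.
Sink = Callable[[Any, Any], None]


def fan_out(*sinks: Sink) -> Sink:
    """Combine several sinks into one callable (hypothetical helper)."""

    def combined(predictions: Any, video_frame: Any) -> None:
        # Call every sink, in order, with the same inputs.
        for sink in sinks:
            sink(predictions, video_frame)

    return combined


if __name__ == "__main__":
    log = []
    on_prediction = fan_out(
        lambda p, f: log.append(f"display {p}"),
        lambda p, f: log.append(f"save {p}"),
    )
    on_prediction("preds", None)
    print(log)  # prints ['display preds', 'save preds']
```

The combined function can then be passed as on_prediction, so each frame's predictions reach every sink in the order listed.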

We have optimized InferencePipeline to get maximum performance from the NVIDIA Jetson line of edge AI devices by tailoring the drivers, libraries, and binaries to its CPU and GPU architectures.

This post walks through deploying computer vision models at the edge on a Jetson Orin Nano running JetPack 5.1.1, using Roboflow's InferencePipeline and the supervision library.

Flash Jetson Device

Make sure your Jetson is flashed with JetPack 5.1.1. To do so, download the Jetson Orin Nano Developer Kit SD Card image from the JetPack SDK page, then write the image to your microSD card following NVIDIA's instructions. Step-by-step instructions can be found here.

Once the Jetson Orin Nano has booted, you can use the jetsonUtilities repository to verify that JetPack 5.1.1 is installed:

```bash
git clone https://github.com/jetsonhacks/jetsonUtilities.git
cd jetsonUtilities
python3 jetsonInfo.py
```

Verify JetPack installation
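If you would rather check from a script, Jetson devices record the L4T (Linux for Tegra) release in /etc/nv_tegra_release. The sketch below parses it. It assumes the file's first line looks like "# R35 (release), REVISION: 3.1, ..." and that JetPack 5.1.1 corresponds to L4T R35.3.1; parse_l4t_version is a hypothetical helper, so treat this as a convenience check rather than a replacement for jetsonInfo.py.

```python
import re
from pathlib import Path
from typing import Optional, Tuple

# Assumed first-line format of /etc/nv_tegra_release, e.g.
# "# R35 (release), REVISION: 3.1, GCID: ..., BOARD: ..., EABI: aarch64, ..."
_PATTERN = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*(\d+)\.(\d+)")

# Assumption: JetPack 5.1.1 ships L4T R35.3.1
JETPACK_511_L4T = (35, 3, 1)


def parse_l4t_version(text: str) -> Optional[Tuple[int, int, int]]:
    """Return (major, minor, patch) of the L4T release, or None if not found."""
    match = _PATTERN.search(text)
    if match is None:
        return None
    major, minor, patch = (int(g) for g in match.groups())
    return (major, minor, patch)


if __name__ == "__main__":
    release_file = Path("/etc/nv_tegra_release")
    if release_file.exists():
        version = parse_l4t_version(release_file.read_text())
        print("L4T version:", version)
        print("Matches JetPack 5.1.1:", version == JETPACK_511_L4T)
    else:
        print("No /etc/nv_tegra_release found; is this a Jetson?")
```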

Configure the Environment to Use the NVIDIA GPU

To accelerate inference on the NVIDIA GPU with ONNX Runtime, you need to replace the default CPU-only onnxruntime package with NVIDIA's GPU build for Jetson. The commands below install inference, swap in the onnxruntime-gpu wheel, clean up the download, and install pandas. You can save them in a .sh file to install everything at once.

```bash
#!/bin/bash

# Install Roboflow Inference
pip3 install inference

# Uninstall onnxruntime if it's already installed
pip3 uninstall --yes onnxruntime

# Download the onnxruntime-gpu wheel built for Jetson (Python 3.8, aarch64)
wget https://nvidia.box.com/shared/static/v59xkrnvederwewo2f1jtv6yurl92xso.whl -O onnxruntime_gpu-1.12.1-cp38-cp38-linux_aarch64.whl

# Install the GPU-enabled onnxruntime wheel, plus OpenCV pinned below 4.3
pip3 install onnxruntime_gpu-1.12.1-cp38-cp38-linux_aarch64.whl "opencv-python<4.3"

# Clean up the downloaded wheel file and pip cache
rm -f onnxruntime_gpu-1.12.1-cp38-cp38-linux_aarch64.whl
rm -rf ~/.cache/pip

# pandas is used to write the CSV in the time-in-zone example
pip3 install pandas
```
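After the script finishes, it is worth confirming that Python picks up the GPU build of ONNX Runtime. onnxruntime.get_available_providers() lists the execution providers the installed build supports; on a working GPU install we would expect CUDAExecutionProvider (and possibly TensorrtExecutionProvider) to appear. has_gpu_provider is a hypothetical helper for this check.

```python
from typing import Iterable

# Execution providers that indicate GPU acceleration on Jetson
GPU_PROVIDERS = ("TensorrtExecutionProvider", "CUDAExecutionProvider")


def has_gpu_provider(providers: Iterable[str]) -> bool:
    """Return True if any GPU execution provider is in the list."""
    available = set(providers)
    return any(p in available for p in GPU_PROVIDERS)


if __name__ == "__main__":
    # Imported here so the helper above can be used without onnxruntime installed
    import onnxruntime as ort

    providers = ort.get_available_providers()
    print("Available providers:", providers)
    print("GPU acceleration available:", has_gpu_provider(providers))
```

If only CPUExecutionProvider is listed, the CPU package is probably still installed or shadowing the GPU wheel.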

How to Run Inference Pipeline

Use the example code below to deploy a COCO model on an RTSP stream. Roboflow supports model aliases, which are friendly model IDs (for example, yolov8n-640) that point to a Roboflow model ID (for example, coco/3). InferencePipeline's render_boxes sink has FPS monitoring built in, using supervision's FPSMonitor, to report throughput and latency on each frame. Documentation on accessing your API key can be found here.

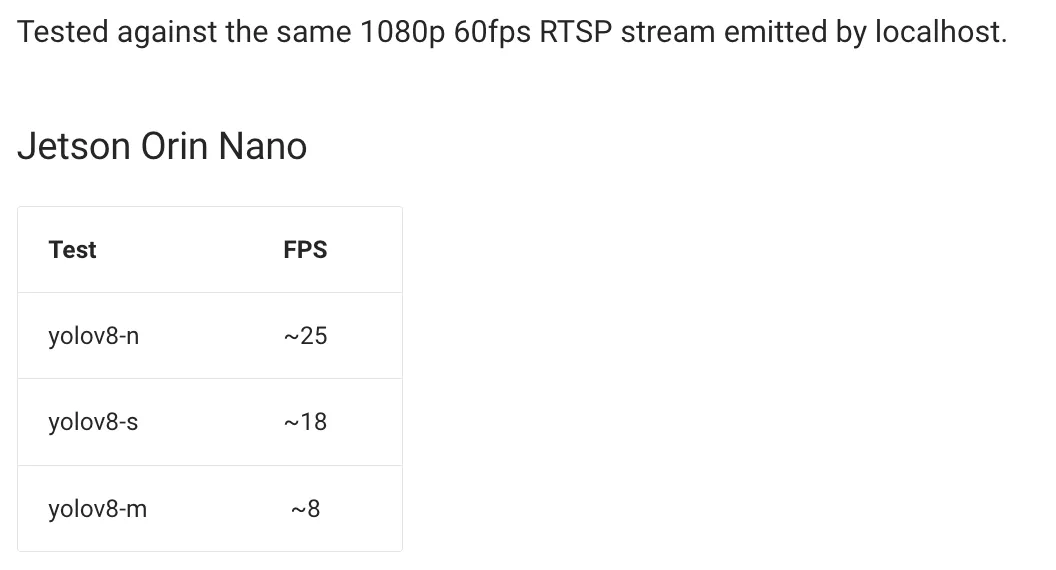

Inference benchmarks on the Jetson Orin Nano: 25 FPS with the YOLOv8n model.
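For context, 25 FPS leaves an average of 1000 / 25 = 40 ms per frame for decoding, inference, and any sink logic. The sketch below shows that arithmetic together with a simplified rolling FPS estimate of the kind an FPS monitor reports. frame_budget_ms and RollingFPS are hypothetical names for illustration; this is not supervision's FPSMonitor implementation.

```python
import time
from collections import deque
from typing import Deque, Optional


def frame_budget_ms(fps: float) -> float:
    """Average time available per frame at a given throughput, in milliseconds."""
    return 1000.0 / fps


class RollingFPS:
    """Throughput over the last `sample_size` frames (simplified illustration)."""

    def __init__(self, sample_size: int = 30) -> None:
        # Older timestamps fall off automatically once the deque is full
        self.timestamps: Deque[float] = deque(maxlen=sample_size)

    def tick(self, now: Optional[float] = None) -> None:
        """Record that one frame finished processing."""
        self.timestamps.append(time.monotonic() if now is None else now)

    def fps(self) -> float:
        """Frames per second = number of intervals / elapsed time."""
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        return (len(self.timestamps) - 1) / elapsed if elapsed > 0 else 0.0


if __name__ == "__main__":
    print(frame_budget_ms(25))  # → 40.0

    monitor = RollingFPS()
    for i in range(26):
        monitor.tick(i / 25)  # simulated frames finishing every 40 ms
    print(monitor.fps())  # → 25.0
```

Averaging over a window rather than timing a single frame smooths out per-frame jitter, which is why monitors like this report a rolling value.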

```python
import supervision as sv
from inference.core.interfaces.stream.inference_pipeline import InferencePipeline
from inference.core.interfaces.stream.sinks import render_boxes

# Create an FPS monitor to measure pipeline throughput
fps_monitor = sv.FPSMonitor()

# Model aliases: friendly names that point to Roboflow model IDs
REGISTERED_ALIASES = {
    "yolov8n-640": "coco/3",
    "yolov8n-1280": "coco/9",
    "yolov8m-640": "coco/8",
}

# Example alias
alias = "yolov8n-640"


def resolve_roboflow_model_alias(model_id: str) -> str:
    """Resolve an alias to its model ID; return the input unchanged if it is not an alias."""
    return REGISTERED_ALIASES.get(model_id, model_id)


# Resolve the alias to get the actual model ID
model_name = resolve_roboflow_model_alias(alias)


# Custom sink: wrap render_boxes so each frame displays FPS statistics
def on_prediction(predictions, video_frame):
    render_boxes(
        predictions=predictions,
        video_frame=video_frame,
        fps_monitor=fps_monitor,  # pass the FPS monitor object
        display_statistics=True,  # overlay throughput statistics on the frame
    )


pipeline = InferencePipeline.init(
    model_id=model_name,
    video_reference="RTSP_URL",  # replace with your RTSP stream URL
    on_prediction=on_prediction,
    api_key="API_KEY",  # replace with your Roboflow API key
    confidence=0.5,
)

pipeline.start()
pipeline.join()
```

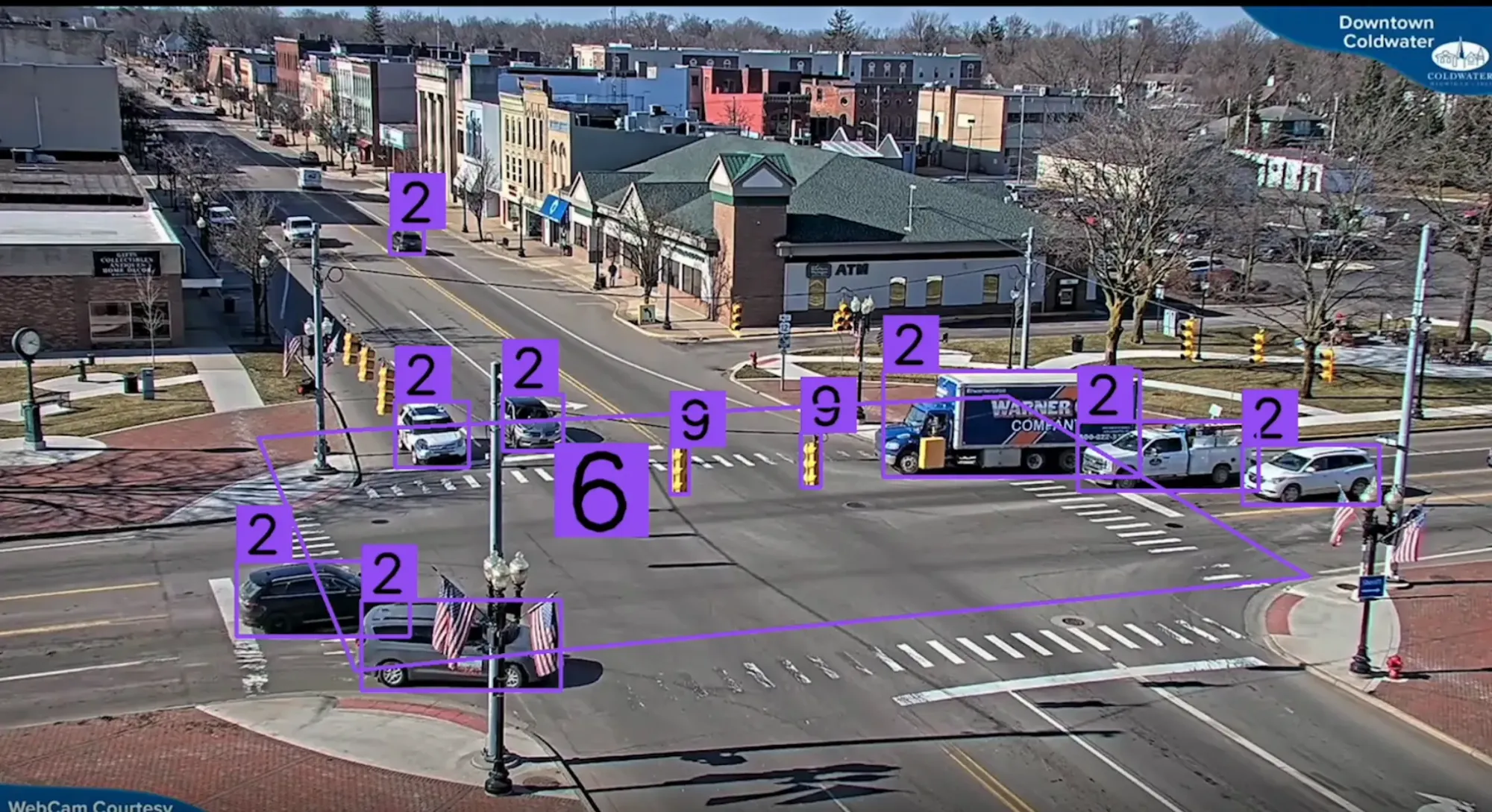

Results of running the script.
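If the Jetson runs headless (no monitor attached), a display-based sink like render_boxes is of little use. A minimal alternative sketch is a sink that prints per-class counts for each frame. It assumes the predictions dict follows Roboflow's JSON response format, with a "predictions" list whose entries carry a "class" field; count_classes and log_class_counts are hypothetical names.

```python
from collections import Counter
from typing import Any, Dict


def count_classes(predictions: Dict[str, Any]) -> Counter:
    """Count detections per class name in a Roboflow-style predictions dict."""
    return Counter(p["class"] for p in predictions.get("predictions", []))


def log_class_counts(predictions: Dict[str, Any], video_frame: Any) -> None:
    """Headless sink: print per-class counts instead of opening a window."""
    print(dict(count_classes(predictions)))


if __name__ == "__main__":
    sample = {"predictions": [{"class": "car"}, {"class": "car"}, {"class": "person"}]}
    log_class_counts(sample, None)  # prints {'car': 2, 'person': 1}
```

To use it, pass on_prediction=log_class_counts to InferencePipeline.init in place of the rendering sink.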

Time in Zone with Supervision

A common use case is using supervision's PolygonZone and ByteTrack to measure how long each unique object stays inside a specific zone. In this example, we also save each object's class, its entry and exit timestamps, and the number of seconds it spent in the zone to a CSV file that can later be loaded into a database.
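Since the end goal is to load the CSV into a database, here is a minimal sketch using Python's built-in sqlite3 module. It assumes the CSV columns used in the example below (trackerID, class_id, frame_count, entry_timestamp, exit_timestamp, time_in_zone); the time_in_zone table and the load_csv_into_sqlite function are hypothetical, and your production database and schema will likely differ.

```python
import csv
import sqlite3
from typing import Iterable

# Hypothetical table mirroring the columns written to demo.csv
SCHEMA = """
CREATE TABLE IF NOT EXISTS time_in_zone (
    trackerID INTEGER PRIMARY KEY,
    class_id INTEGER,
    frame_count INTEGER,
    entry_timestamp REAL,
    exit_timestamp REAL,
    time_in_zone REAL
)
"""


def load_csv_into_sqlite(lines: Iterable[str], conn: sqlite3.Connection) -> int:
    """Upsert rows from a time-in-zone CSV into SQLite; return the row count."""
    conn.execute(SCHEMA)
    rows = [
        (
            int(float(r["trackerID"])),  # float() tolerates values like "7.0"
            int(float(r["class_id"])),
            int(float(r["frame_count"])),
            float(r["entry_timestamp"]),
            float(r["exit_timestamp"]),
            float(r["time_in_zone"]),
        )
        for r in csv.DictReader(lines)
    ]
    # INSERT OR REPLACE keeps one row per tracker, so re-loading an updated CSV is safe
    conn.executemany("INSERT OR REPLACE INTO time_in_zone VALUES (?, ?, ?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    with open("demo.csv", newline="") as f:
        conn = sqlite3.connect("zones.db")
        print("rows loaded:", load_csv_into_sqlite(f, conn))
        conn.close()
```

Keying the table on trackerID matches the CSV, which holds one continually updated row per tracked object.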

Before you start, capture a frame from your RTSP stream and upload it to the PolygonZone tool to draw the polygon coordinates.

```python
import time
from collections import defaultdict

import cv2
import numpy as np
import pandas as pd
import supervision as sv
from inference.core.interfaces.stream.inference_pipeline import InferencePipeline

# ByteTrack assigns a persistent tracker_id to each object across frames
tracker = sv.ByteTrack()
frame_count = defaultdict(int)
colors = sv.ColorPalette.default()

# Polygon zone of interest, in pixel coordinates of the video frame
polygons = [
    np.array([
        [390, 543], [1162, 503], [1510, 711], [410, 819], [298, 551], [394, 543]
    ])
]

# Create zones, zone annotators, and box annotators from the polygons.
# frame_resolution_wh is (width, height); this assumes a 2560x1440 stream.
zones = [
    sv.PolygonZone(polygon=polygon, frame_resolution_wh=(2560, 1440))
    for polygon in polygons
]

zone_annotators = [
    sv.PolygonZoneAnnotator(
        zone=zone,
        color=colors.by_idx(index),
        thickness=4,
        text_thickness=8,
        text_scale=4,
    )
    for index, zone in enumerate(zones)
]

box_annotators = [
    sv.BoxAnnotator(
        color=colors.by_idx(index),
        thickness=4,
        text_thickness=4,
        text_scale=2,
    )
    for index in range(len(polygons))
]

# Columns for the CSV output
columns = ["trackerID", "class_id", "frame_count", "entry_timestamp", "exit_timestamp", "time_in_zone"]
frame_count_df = pd.DataFrame(columns=columns)

# First and most recent in-zone detection timestamps for each tracker_id
first_detection_timestamps = {}
last_detection_timestamps = {}


def render(predictions: dict, video_frame) -> None:
    detections = sv.Detections.from_roboflow(predictions)
    detections = tracker.update_with_detections(detections)

    # Draw on a copy so annotations from every zone accumulate on one image
    image = video_frame.image.copy()

    for zone, zone_annotator, box_annotator in zip(zones, zone_annotators, box_annotators):
        # Boolean mask of detections that fall inside this zone
        mask = zone.trigger(detections=detections)
        detections_filtered = detections[mask]

        image = box_annotator.annotate(scene=image, detections=detections, skip_label=False)
        image = zone_annotator.annotate(scene=image)

        for tracker_id, class_id in zip(detections_filtered.tracker_id, detections_filtered.class_id):
            frame_count[tracker_id] += 1

            # Record the entry time the first time this tracker_id is seen in the zone
            now = time.time()
            if tracker_id not in first_detection_timestamps:
                first_detection_timestamps[tracker_id] = now
            last_detection_timestamps[tracker_id] = now

            time_difference = last_detection_timestamps[tracker_id] - first_detection_timestamps[tracker_id]

            # One row per tracker_id; later frames overwrite it with updated values
            frame_count_df.loc[tracker_id] = [
                tracker_id,
                class_id,
                frame_count[tracker_id],
                first_detection_timestamps[tracker_id],
                last_detection_timestamps[tracker_id],
                time_difference,
            ]

    # Rewrite the CSV with the latest totals
    frame_count_df.to_csv("demo.csv", index=False)

    cv2.imshow("Prediction", image)
    cv2.waitKey(1)


# Initialize and deploy InferencePipeline
pipeline = InferencePipeline.init(
    model_id="coco/8",
    video_reference="RTSP_URL",  # replace with your RTSP stream URL
    on_prediction=render,
    api_key="API_KEY",  # replace with your Roboflow API key
    confidence=0.5,
)

pipeline.start()
pipeline.join()
```

In the code above, we define the polygon within which we track objects and measure how long each one stays. The polygon coordinates depend on your camera view and use case. To find them, you can use the PolygonZone web helper.
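Conceptually, a detection counts as inside the zone when an anchor point on its bounding box falls inside the polygon. We believe supervision's PolygonZone defaults to the bottom-center anchor, but its implementation differs from this sketch. The ray-casting functions below are a hypothetical illustration using the polygon from the example above.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Polygon from the time-in-zone example, as (x, y) pixel coordinates
ZONE = [(390, 543), (1162, 503), (1510, 711), (410, 819), (298, 551), (394, 543)]


def point_in_polygon(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: True if `point` lies inside `polygon`."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def bottom_center(xyxy: Tuple[float, float, float, float]) -> Point:
    """Anchor at the bottom-center of an (x1, y1, x2, y2) box."""
    x1, _, x2, y2 = xyxy
    return ((x1 + x2) / 2, y2)


if __name__ == "__main__":
    box = (700, 500, 800, 650)  # hypothetical detection
    print(point_in_polygon(bottom_center(box), ZONE))  # prints True
```

Using the bottom-center anchor approximates where an object touches the ground, which suits zones drawn on a road or floor.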

With this tool, you upload a frame of your video and draw the coordinates you need. Upload an actual frame from your video rather than a screenshot, because the coordinates are in pixels and depend on the image resolution. You can save a single frame from your InferencePipeline with OpenCV or Pillow.

Conclusion

Running inference on RTSP streams directly on edge devices like the NVIDIA Jetson Orin Nano expands the real-time use cases you can support without the latency of sending every frame to a remote server. In this guide, we walked through how to run inference on an NVIDIA Jetson Orin Nano using Roboflow Inference.

We deployed our model with Roboflow Inference, then used supervision to add logic that tracks vehicles in a video feed and measures how long each vehicle spends in a zone at an intersection.

For more resources on adding logic like counting objects in a zone to your computer vision project, refer to the supervision documentation. With supervision, you can count objects in a zone, track objects, smooth predictions, visualize predictions, and more.

Cite this Post

Use the following entry to cite this post in your research:

Ryan Ball. (Feb 15, 2024). Run Computer Vision Models on a RTSP Stream on a NVIDIA Jetson Orin Nano. Roboflow Blog: https://blog.roboflow.com/run-inference/