Treadmill-related accidents cause more than 24,000 emergency room visits in the United States each year. Can we reduce that number by removing the need for human input?

If you are familiar with treadmills, you may recall the emergency clip at the bottom of the console that you are meant to attach to your clothing while you run. The clip is attached to a string that, when pulled, typically flips a lever or disconnects a magnet, stopping the treadmill instantly if you get too far from the console.

Wearing the clip will not prevent you from tripping and falling on a treadmill. However, stopping the treadmill as soon as you fall can prevent the injuries that occur when a belt running at full speed launches you off the machine. That said, computer vision can remove the decision of whether to wear the clip altogether.

In my Roboflow onboarding project, I used computer vision to identify when a runner’s shoes or knees are no longer detected while the treadmill is still running. That signal can then trigger a stop event that halts the treadmill.

In the video below, I talk through my project and how it works:

The System - Gist

The system for detecting the presence of knees and shoes consists of four steps:

- Taking a locally stored video on a machine and breaking it down into individual images;

- Establishing the labeling format for our classifications;

- Using a model trained in Roboflow to run inference on each image and check for trigger events; and

- Generating an output video made up of all the images up until the trigger event and adding on a final STOP frame.

Building a Model for the Application

I collected data by taping a phone to the bottom of a treadmill console with the camera facing the treadmill and recording the runner. I collected data on a couple of different models of treadmills as well as a couple of different runners.

I trained an object detection model, but several other approaches could also have worked. A classification model, for example, could have done a similar job of identifying whether a frame had a runner in it.

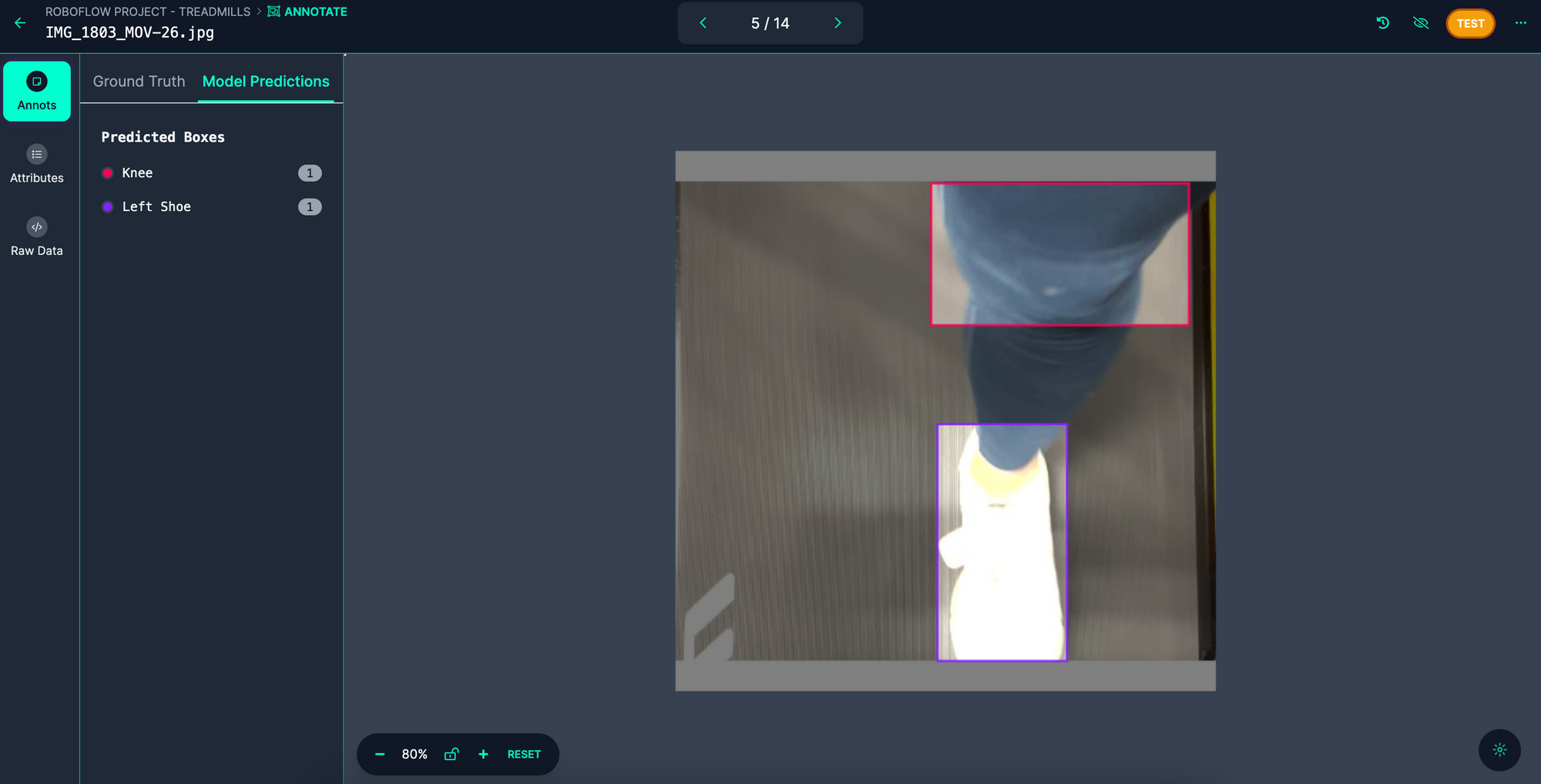

Here is an example of an annotated image that shows a knee and foot, the indicators that someone is on a treadmill:

Based on this model's performance, I would guess a segmentation model would have performed much better at recognizing shoes at different angles and visibilities as well as at distinguishing between left and right.

Preprocessing filters used:

- Auto-orient

- Static Crop

- Resize

Augmentation filters used:

- Rotation

- Blur

- Cutout

- Bounding Box Flip

After training, the model attained a mean average precision (mAP) of 90.8%.

Building the Application Logic

Next, I established the mechanism for recognizing when an object is no longer being detected and triggering an event. I did this by calling the Roboflow model via the API and running it on the set of extracted images.
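As a rough illustration of the per-image check, here is a minimal sketch. It assumes each API response has been parsed into a dictionary with a `predictions` list whose entries carry `class` and `confidence` keys. The class names `knee` and `shoe`, the 0.4 cutoff, and the helper name `runner_detected` are assumptions for illustration, not values taken from the project.

```python
# Hypothetical class names and confidence cutoff, chosen for illustration only.
RUNNER_CLASSES = {"knee", "shoe"}
MIN_CONFIDENCE = 0.4


def runner_detected(response: dict) -> bool:
    """Return True if any knee or shoe prediction clears the confidence cutoff."""
    for prediction in response.get("predictions", []):
        is_runner_part = prediction.get("class") in RUNNER_CLASSES
        is_confident = prediction.get("confidence", 0.0) >= MIN_CONFIDENCE
        if is_runner_part and is_confident:
            return True
    # No qualifying prediction: treat the frame as "runner not visible".
    return False
```

Reducing each response to a single boolean keeps the stop logic independent of how the predictions were obtained.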

When an object is detected in an image, the loop continues to run. When no object is detected, the system starts counting consecutive misses. If enough consecutive images contain no detections, the system stops and triggers an event. The counter matters because a single missed detection by the model should not cause a stop event.

The number of consecutive frames required to stop the system is a variable, which I set to the equivalent of a quarter of a second of video.
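The consecutive-miss counter can be sketched in a few lines. This is a minimal version under stated assumptions: the per-frame results are already reduced to booleans, and the function names `frames_for_duration` and `find_stop_frame` are hypothetical, not taken from the original script.

```python
import math
from typing import Iterable, Optional


def frames_for_duration(seconds: float, fps: float) -> int:
    """Convert a time window into a whole number of frames (at least 1)."""
    return max(1, math.ceil(seconds * fps))


def find_stop_frame(detections: Iterable[bool], max_misses: int) -> Optional[int]:
    """Return the index of the frame that triggers a stop, or None if none does."""
    misses = 0
    for index, detected in enumerate(detections):
        if detected:
            # Any detection resets the streak, so isolated misses are ignored.
            misses = 0
        else:
            misses += 1
            if misses >= max_misses:
                return index
    return None
```

For example, at an assumed 30 frames per second, `frames_for_duration(0.25, 30)` gives 8, so the stop would fire on the eighth consecutive frame without a detection.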

If a system like this were implemented on a treadmill, the trigger event would turn off the treadmill immediately. Since I could not control a treadmill directly, I built a text message system to illustrate what a stop event would look like.

Text message sent via Twilio

Message written to a CSV
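The CSV half of the stop notification could look something like the sketch below. The column names, the default message text, and the function name `log_stop_event` are assumptions for illustration. The text message step is left out because it depends on account credentials.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path


def log_stop_event(csv_path, frame_index, message="STOP: runner no longer detected"):
    """Append one stop event to a CSV file, writing a header row if the file is new."""
    path = Path(csv_path)
    is_new = not path.exists() or path.stat().st_size == 0
    row = [datetime.now(timezone.utc).isoformat(), frame_index, message]
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        if is_new:
            # Hypothetical column names; the original log format is not shown.
            writer.writerow(["timestamp_utc", "frame_index", "message"])
        writer.writerow(row)
    return row
```

Appending rather than overwriting keeps a running history, so repeated runs of the script add new rows to the same log.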

Finally, the script collects all of the images up until the trigger point and pieces them back together into a video. The output video is meant to serve as a visual example of what a camera would see and when it would respond.

Learnings

To get this system ready for production, I would need to improve both the model's accuracy and the hardware setup. As I mentioned above, training a segmentation model might be the best way to fill in the gaps in the model's performance moving forward.

This example, while certainly applicable to the world of gym equipment, can be abstracted to show how computer vision and trigger events can power products and processes in all kinds of industries. One industry that immediately comes to mind is physical security. A camera monitoring a room that detects a person who is not supposed to be there can trigger an alarm and send a warning notification to all security staff.

Thanks to Roboflow's user-friendly product, superb documentation and tutorials, and some help from ChatGPT, I was able to build this script without any technical proficiency. I would encourage anyone with a physical-world problem that computer vision may be able to solve to search through Roboflow's blog to find a jumping-off point and begin solving it.