We are excited to announce the launch of support for video processing in Roboflow Workflows. Roboflow Workflows is a web-based computer vision application builder. With Workflows, you can build complex, multi-step applications in a few minutes.

With the new video processing capabilities, you can create computer vision applications that:

- Monitor the amount of time an object has spent in a zone;

- Count how many objects have passed over a line; and

- Visualize dwell time and the number of objects that have passed a line.

In this guide, we are going to demonstrate how to use the video processing features in Roboflow Workflows. We will walk through an example that calculates how long someone spends in a zone.

If you want to calculate the number of objects crossing a line, refer to our Count Objects Crossing Lines with Computer Vision guide.

Here is an example of a Workflow running to calculate dwell time in a zone, using an example of skiers on a slope:

We also have a video guide that walks through how to build video applications in Workflows:

Without further ado, let’s get started!

Step #1: Create a Workflow

To get started, we need to create a Workflow.

Create a free Roboflow account. Then, click on “Workflows” in the left sidebar. This will take you to your Workflows home page, from which you will be able to see all Workflows you have created in your workspace.

Click “Create a Workflow” to create a Workflow.

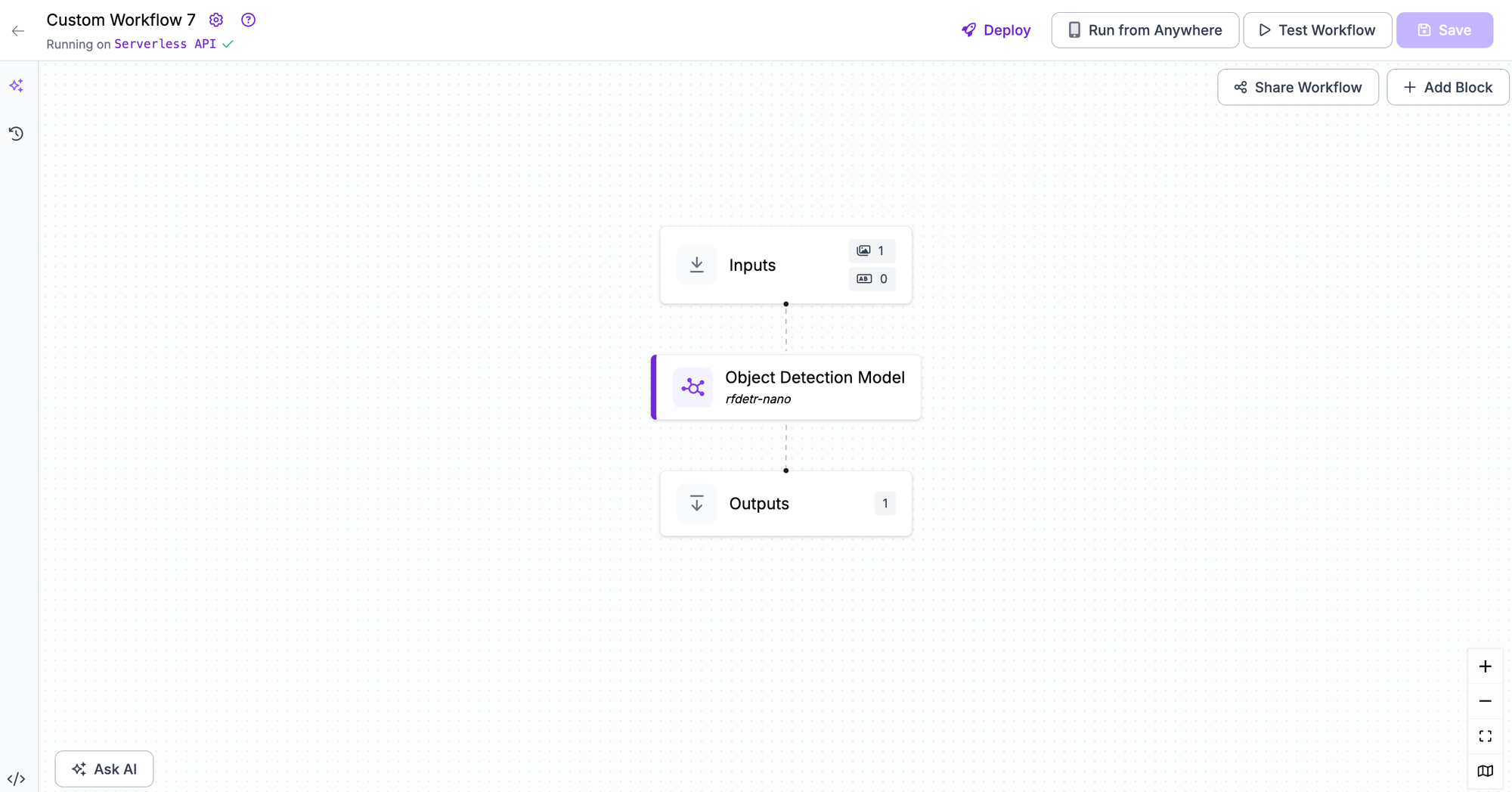

You will be taken into the Roboflow Workflows editor from which you can build your application:

We now have an empty Workflow from which we can build an application.

Step #2: Add a Video Input

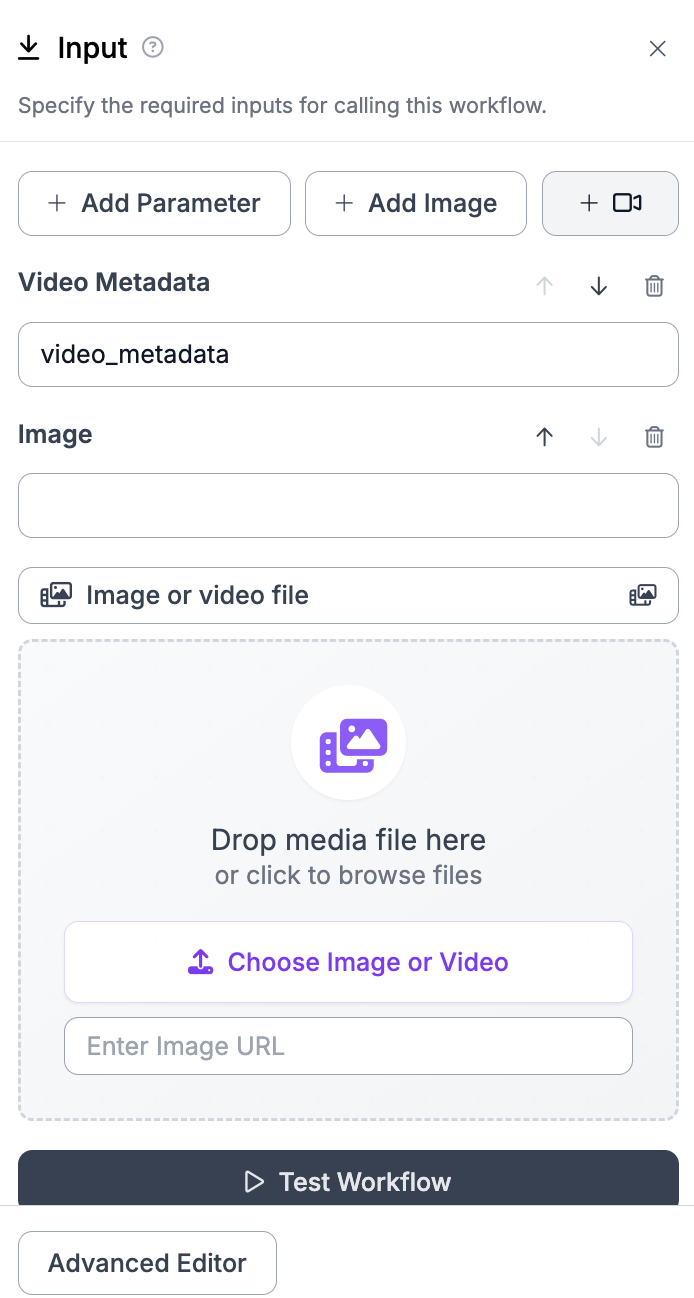

We will need a Video Metadata input. This input can accept webcam streams, video files stored on your device, or RTSP streams.

Click “Input” on your Workflow. Click the camera icon in the Input configuration panel to add video metadata:

This parameter will be used to connect to a camera.

Step #3: Add a Detection Model

We are going to use a model to detect skiers on a slope. We will then use video processing features in Workflows to track objects between frames so we can calculate time spent in a zone.

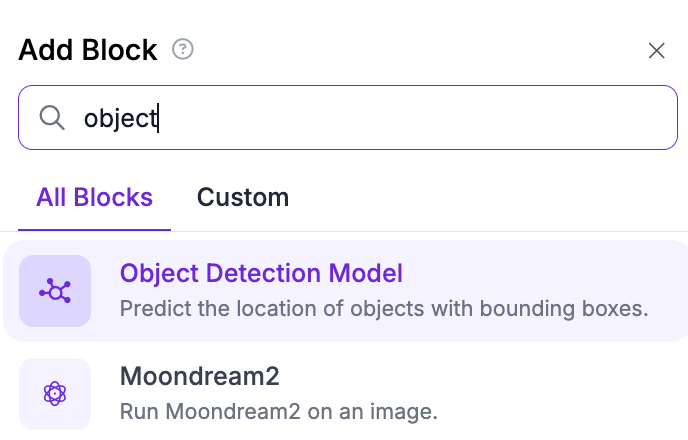

To add an object detection model to your Workflow, click “Add a Block” and choose the Object Detection Model block:

A configuration panel will open from which you can select the model you want to use. You can use models trained on or uploaded to Roboflow, or any model on Roboflow Universe.

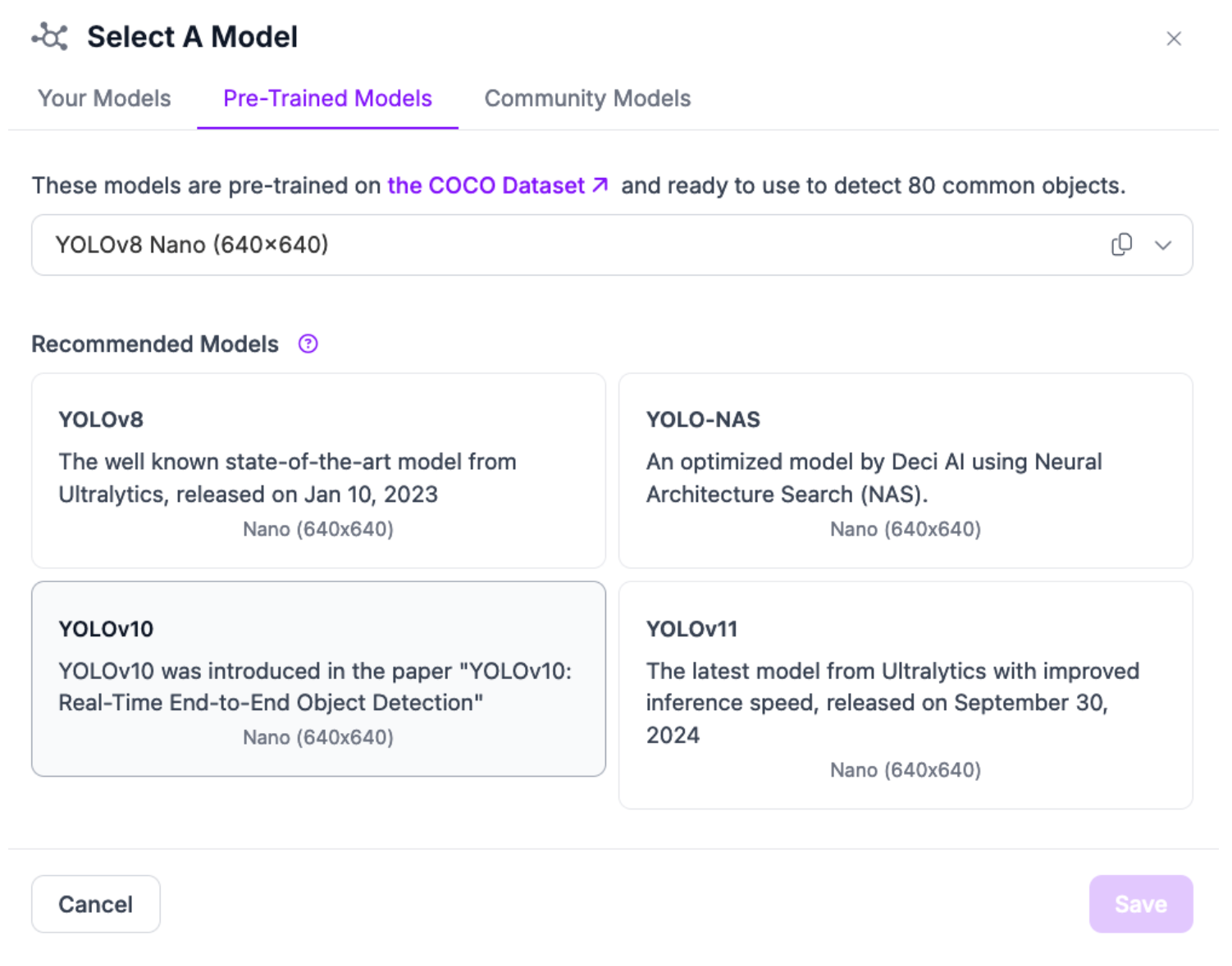

For this example, let’s use a YOLO model hosted on Universe that can detect people skiing. Click “Public Models” and choose YOLOv8 Nano:

Step #4: Add Tracking

With a detection model set up, we now need to add object tracking. This will allow us to track objects between frames, a prerequisite for calculating dwell time.
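To see why tracking is a prerequisite, here is a minimal sketch of how dwell time can be accumulated once every object has a stable ID. This is an illustration only, not the implementation used by Workflows. The `accumulate_dwell_time` function and the per-frame input format (a set of tracker IDs seen in the zone on each frame) are assumptions made for this example.

```python
from collections import defaultdict


def accumulate_dwell_time(frames, fps):
    """Return the seconds each tracker ID was observed inside the zone.

    `frames` holds one entry per video frame. Each entry is the set of
    tracker IDs that were inside the zone on that frame (hypothetical format).
    """
    frames_in_zone = defaultdict(int)
    for ids_in_zone in frames:
        for tracker_id in ids_in_zone:
            frames_in_zone[tracker_id] += 1
    # Convert frame counts to seconds using the video frame rate.
    return {
        tracker_id: count / fps
        for tracker_id, count in frames_in_zone.items()
    }


# Object 1 is in the zone for four frames, object 2 for two frames.
observations = [{1}, {1, 2}, {1, 2}, {1}]
dwell = accumulate_dwell_time(observations, fps=2)
print(dwell)
```

Without a tracker, detections on consecutive frames would have no shared ID, so there would be nothing to key the per-object counts on.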

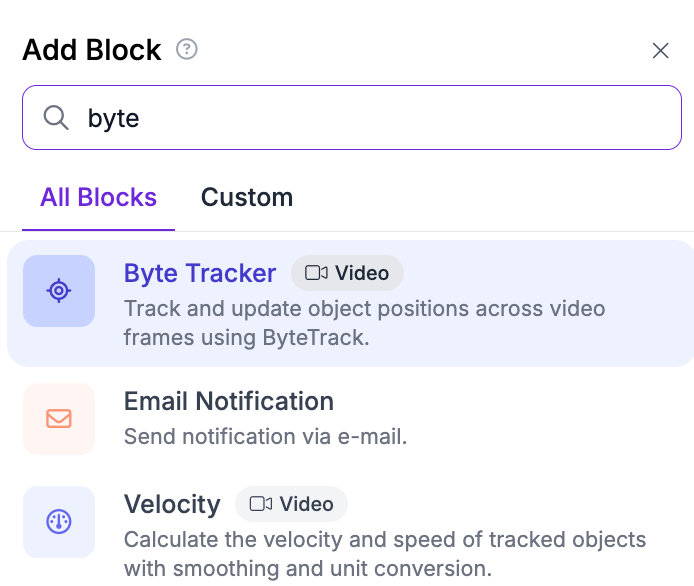

Add a “Byte Tracker” block to your Workflow:

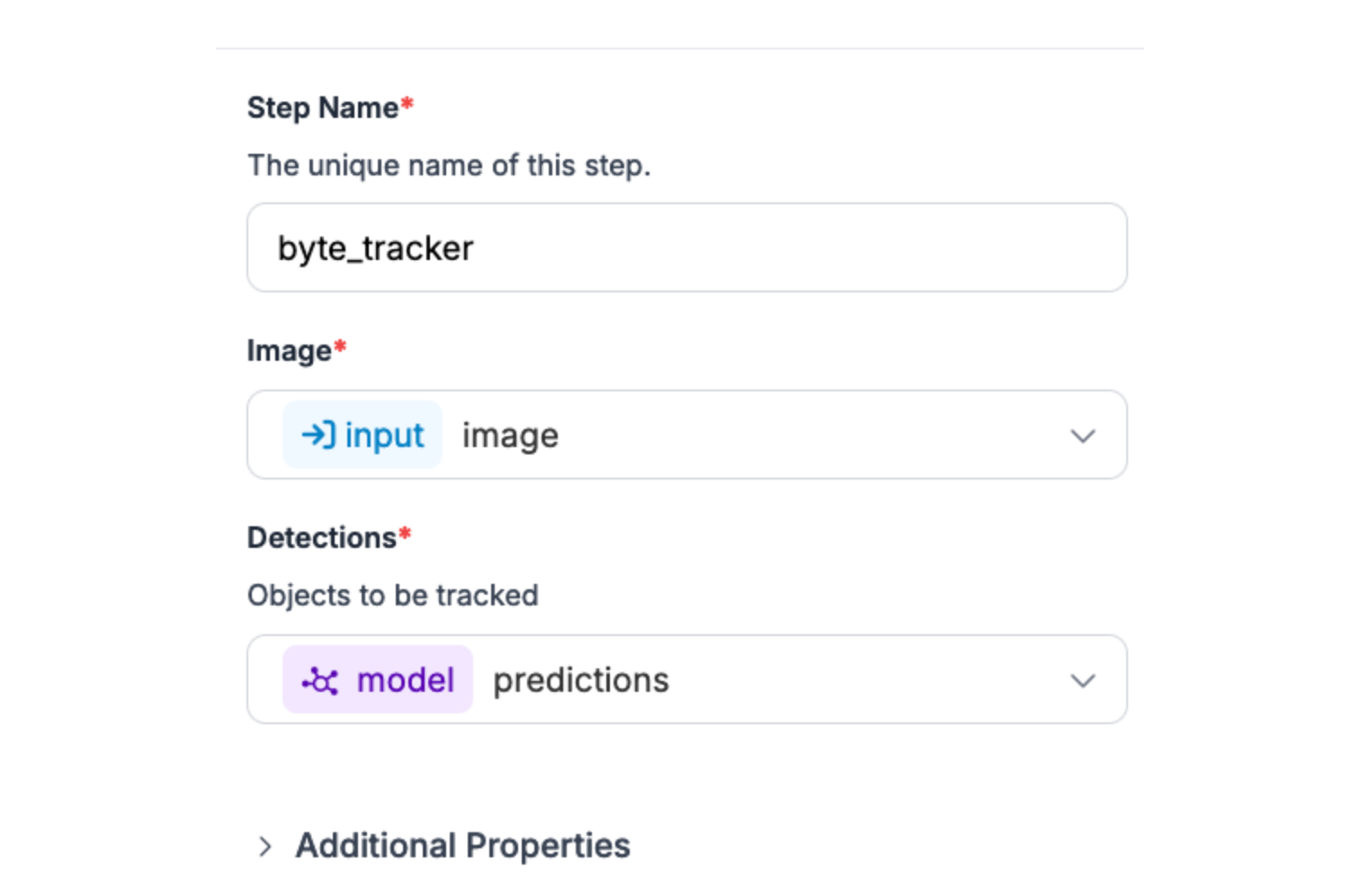

The block should be automatically connected to your object detection model. You can verify this by ensuring the Byte Tracker reads predictions from your model:

Step #5: Add Time in Zone

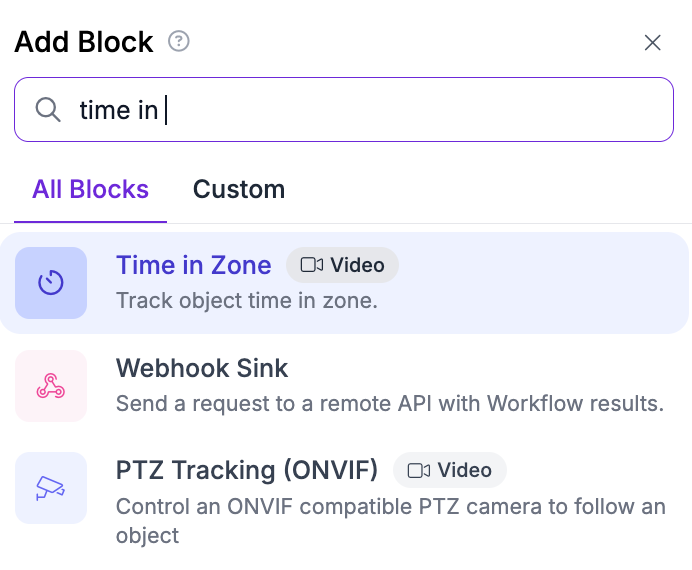

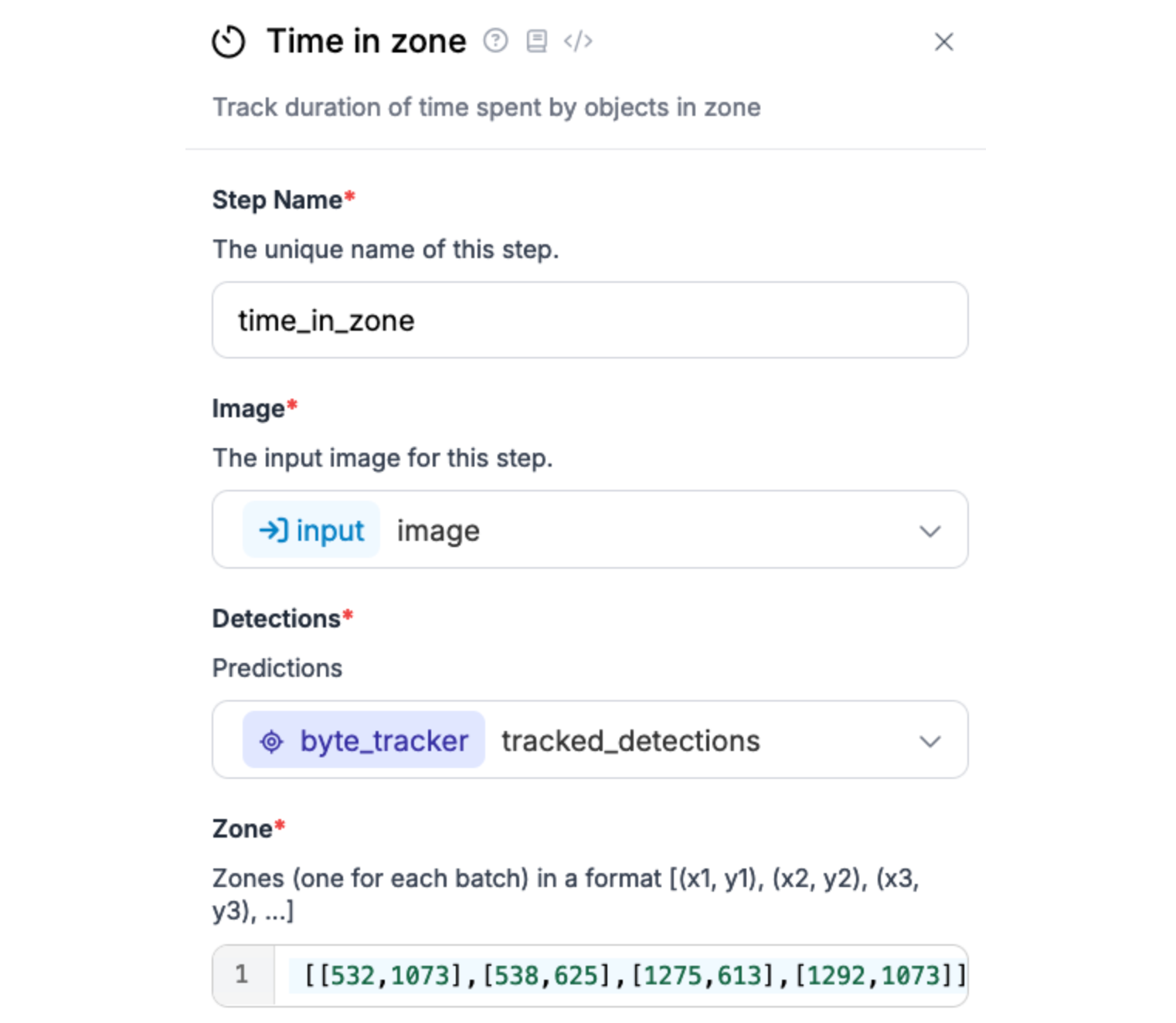

To calculate dwell time (also known as “time in zone”), we can use the Time in Zone block. Add the block to your Workflow:

A configuration panel will appear from which you can configure the Workflow block.

You will need to set the zone in which you want to measure dwell time.

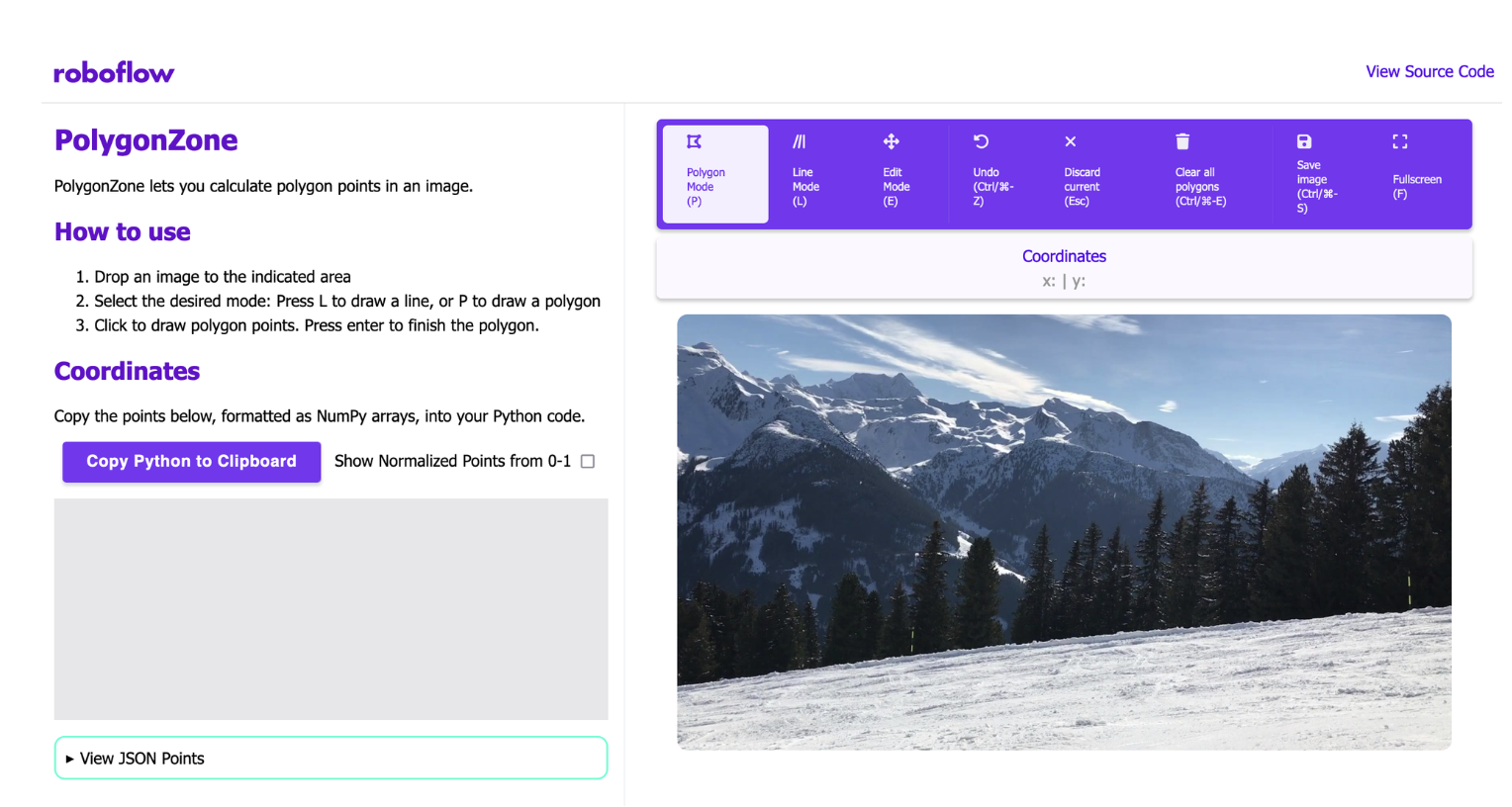

You can calculate the coordinates of a zone using Polygon Zone, a web interface for calculating polygon coordinates. Open Polygon Zone, then drag in an image of the exact resolution of the input frame from your video.

If you have a static video, you can retrieve a frame of the exact resolution with the ffmpeg command:

    ffmpeg -i video.mp4 -vf "select=eq(n\,0)" -q:v 3 output_image.jpg

Above, replace video.mp4 with the file you will use as a reference.

For example, if your input video is 1920x1080, the image you upload to Polygon Zone should also be 1920x1080.
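Using an image at the exact input resolution is the simplest option. If you have already drawn a zone on an image of a different size, one possible workaround is to rescale the points. The sketch below assumes the reference image and the video share the same aspect ratio. The `scale_polygon` helper is a hypothetical name introduced for this example.

```python
def scale_polygon(points, source_size, target_size):
    """Rescale [x, y] points drawn at source_size to target_size.

    Sizes are (width, height) tuples in pixels.
    """
    src_w, src_h = source_size
    dst_w, dst_h = target_size
    return [
        [round(x * dst_w / src_w), round(y * dst_h / src_h)]
        for x, y in points
    ]


# A zone drawn on a 640x360 preview, rescaled for a 1920x1080 video.
zone_on_preview = [[100, 50], [540, 50], [540, 310], [100, 310]]
zone_on_video = scale_polygon(zone_on_preview, (640, 360), (1920, 1080))
print(zone_on_video)
```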

Then, use the polygon annotation tool to add points. You can create as many zones as you want. To complete a zone, press the Enter key.

Polygon Zone returns the points of each zone as NumPy coordinates, listed as x, y pairs in the order x0, y0, x1, y1, and so on. You can copy these into the Workflows editor.
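To build intuition for what these points mean, here is a short sketch of a point-in-polygon check using ray casting. It is an illustration, not the logic used by the Time in Zone block, which may decide zone membership differently. The `point_in_polygon` function and the example coordinates are assumptions.

```python
def point_in_polygon(point, polygon):
    """Return True if point (x, y) lies inside a polygon of [x, y] vertices."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        # Check whether a horizontal ray from the point crosses this edge.
        if (y0 > y) != (y1 > y):
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                inside = not inside
    return inside


zone = [[300, 150], [1620, 150], [1620, 930], [300, 930]]
center_is_inside = point_in_polygon((960, 540), zone)
corner_is_inside = point_in_polygon((100, 100), zone)
print(center_is_inside, corner_is_inside)
```

An odd number of edge crossings means the point is inside the polygon. An even number means it is outside.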

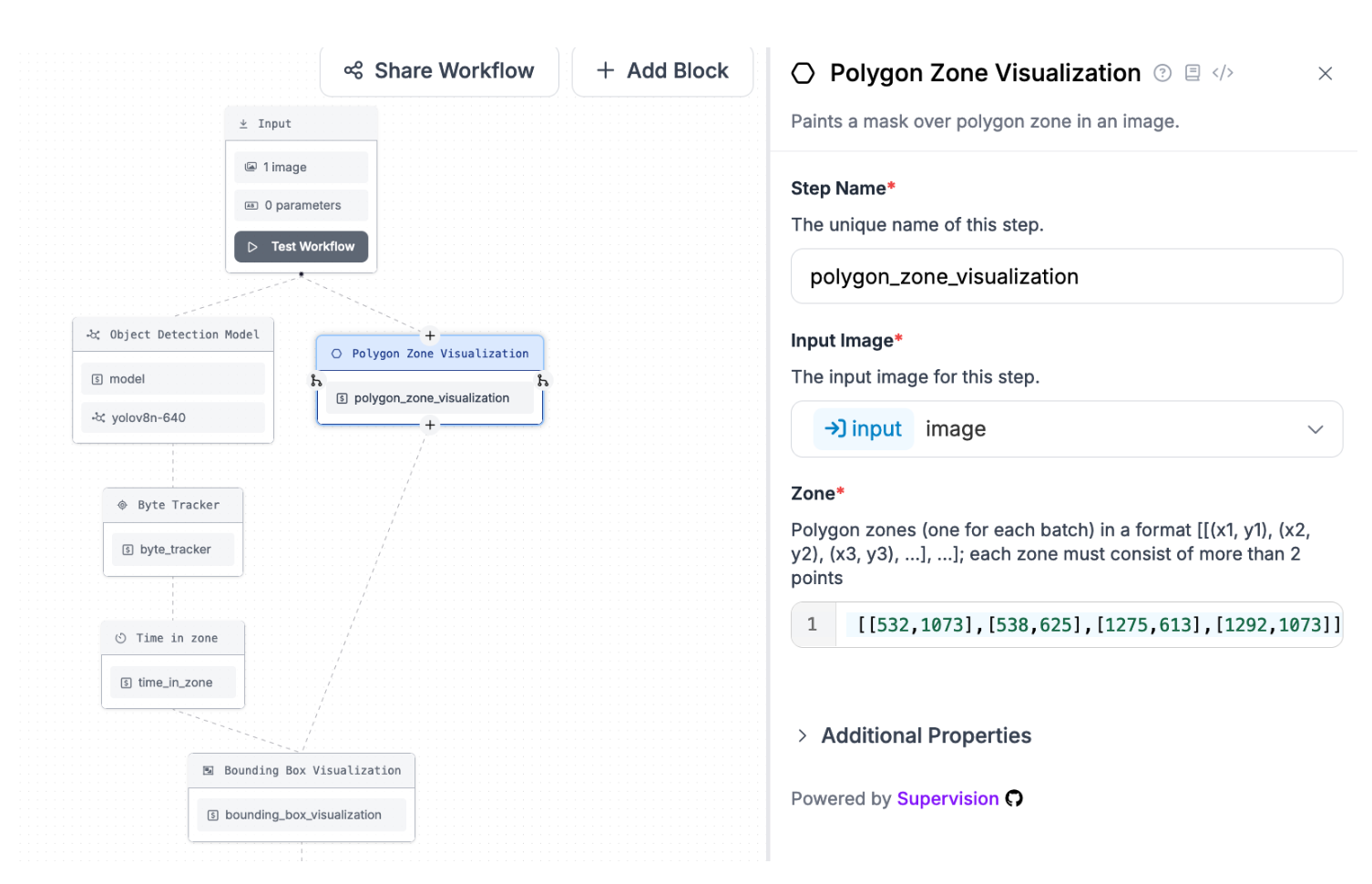

Step #6: Add Visualizations

By default, Workflows does not show any visual representation of the results from your Workflow. You need to add this manually.

For testing, we recommend adding three visualization blocks:

- Polygon zone visualization, which lets you see the polygon zone you have drawn.

- Bounding box visualization, which displays bounding boxes corresponding to detections from an object detection model.

- Label visualization, which shows the labels that correspond with each bounding box.

The polygon zone visualization should be connected to your input image, like this:

Add the coordinates of the zone you defined in the Time in Zone block to the Zone value in the polygon zone visualization.

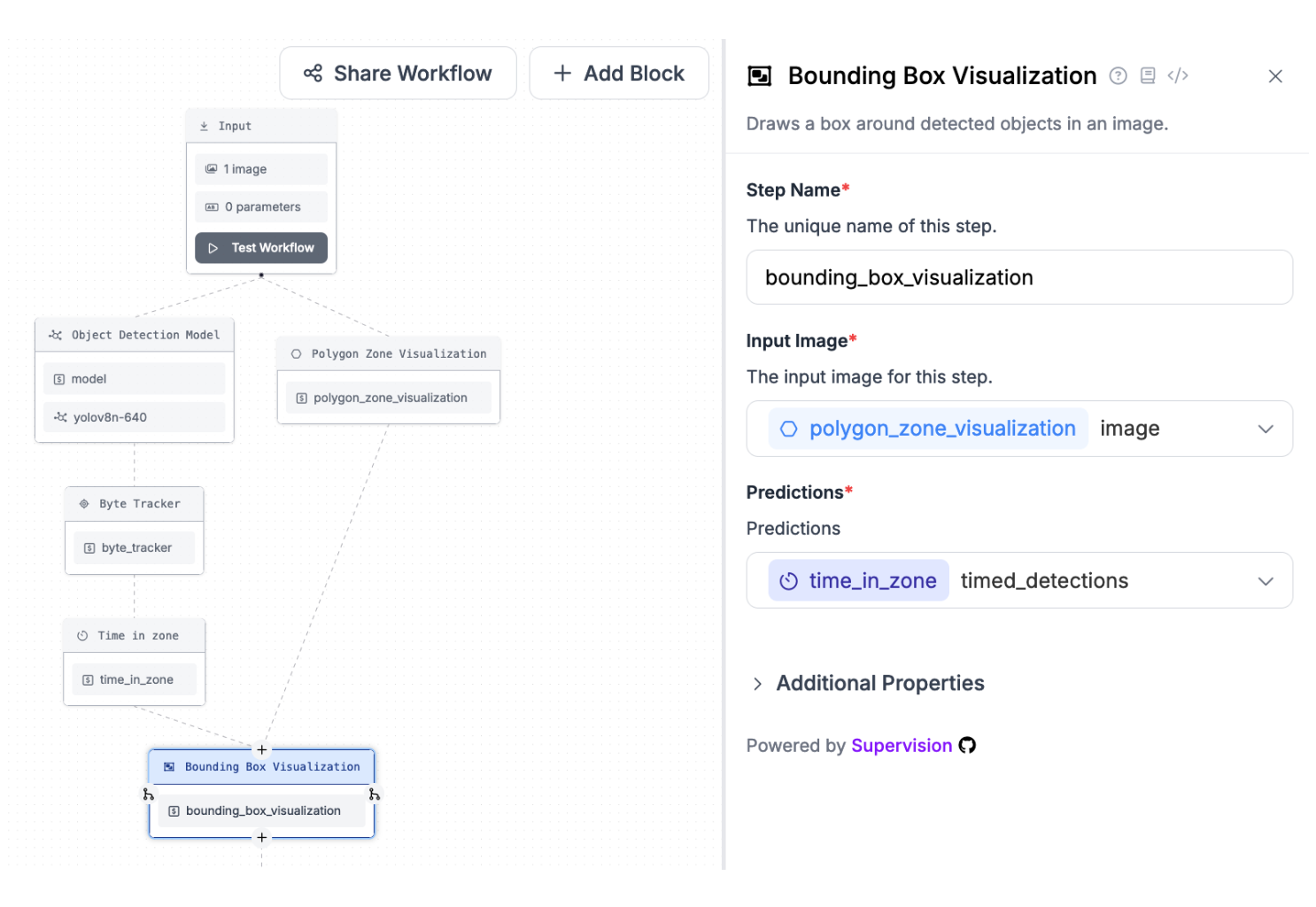

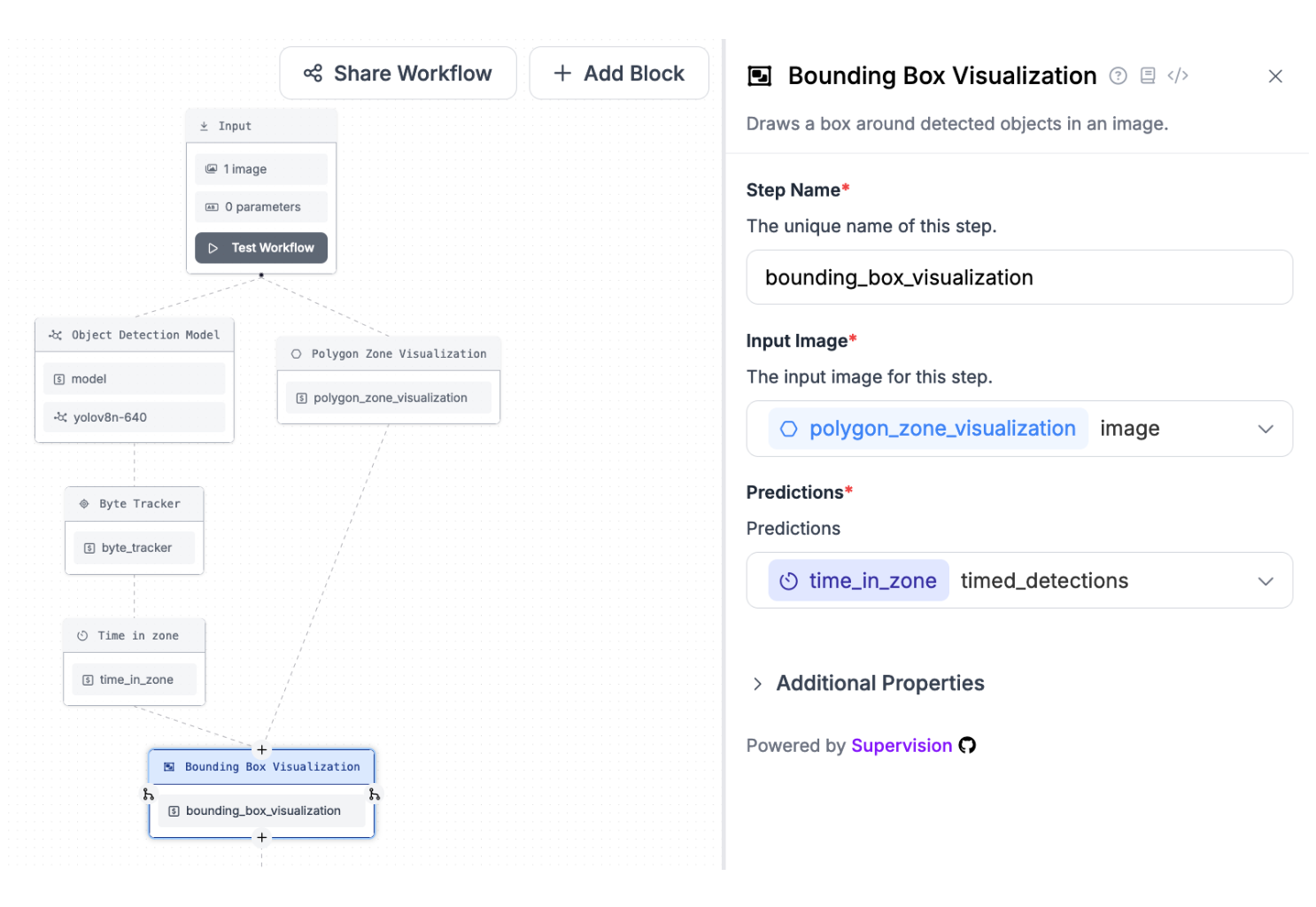

Set up a bounding box visualization to read the image from your polygon zone visualization and use the predictions from the time in zone feature:

Set up the label visualization to read the time in zone detections and use the bounding box visualization image:

Once you have added these visualizations, you are ready to test your Workflow.

Step #7: Test Workflow

To test your Workflow, you will need to run it on your own hardware.

To do this, you will need to install Roboflow Inference, our on-device deployment software.

Run the following command to install Inference:

    pip install inference

Then, create a new Python file and add the following code:

    import argparse
    import os

    from inference import InferencePipeline
    import cv2

    # Read the Roboflow API key from the environment.
    API_KEY = os.environ["ROBOFLOW_API_KEY"]


    def main(
        video_reference: str,
        workspace_name: str,
        workflow_id: str,
    ) -> None:
        pipeline = InferencePipeline.init_with_workflow(
            api_key=API_KEY,
            workspace_name=workspace_name,
            video_reference=video_reference,
            on_prediction=my_sink,
            workflow_id=workflow_id,
            max_fps=30,
        )
        pipeline.start()  # start the pipeline
        pipeline.join()  # wait for the pipeline thread to finish


    def my_sink(result, video_frame):
        # Assumes your Workflow has an output named "label_visualization".
        visualization = result["label_visualization"].numpy_image
        cv2.imshow("Workflow Image", visualization)
        cv2.waitKey(1)  # give OpenCV time to draw the frame


    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument("--video_reference", type=str, required=True)
        parser.add_argument("--workspace_name", type=str, required=True)
        parser.add_argument("--workflow_id", type=str, required=True)
        args = parser.parse_args()
        main(
            video_reference=args.video_reference,
            workspace_name=args.workspace_name,
            workflow_id=args.workflow_id,
        )

This code will create a command-line interface that you can use to test your Workflow.
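If you want a rough idea of how quickly frames are being processed while you test, one option is to wrap the prediction callback. The sketch below assumes the callback receives `(result, video_frame)`, as `my_sink` does in the script. The `with_frame_counter` helper is hypothetical and is not part of Inference.

```python
import time


def with_frame_counter(sink, report_every=30, clock=time.monotonic):
    """Wrap a sink so it prints a running frames-per-second estimate."""
    state = {"frames": 0, "started": None}

    def wrapped(result, video_frame):
        if state["started"] is None:
            state["started"] = clock()
        state["frames"] += 1
        if state["frames"] % report_every == 0:
            elapsed = clock() - state["started"]
            if elapsed > 0:
                fps = state["frames"] / elapsed
                print(f"{state['frames']} frames, {fps:.1f} FPS")
        # Always forward the prediction to the original sink.
        sink(result, video_frame)

    wrapped.state = state  # exposed so callers can inspect the counters
    return wrapped


# Stand-in sink for demonstration; it only records what it receives.
received = []
counted_sink = with_frame_counter(
    lambda result, video_frame: received.append(result),
    report_every=2,
)
for frame_number in range(5):
    counted_sink(frame_number, None)
```

In the script, you could then pass `on_prediction=with_frame_counter(my_sink)` when initializing the pipeline.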

First, export your Roboflow API key into your environment:

    export ROBOFLOW_API_KEY=""

Run the script like this:

    python3 app.py --video_reference=0 --workspace_name=workspace --workflow_id=workflow-id

Above, set:

- video_reference to the ID of the webcam on which you want to run inference. By default, this is 0. You can also specify an RTSP URL or the name of a video file on which you want to run inference.

- workspace_name to your Roboflow workspace name.

- workflow_id to your Roboflow Workflow ID.
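The three kinds of video reference described above can be told apart with a simple check. This is a sketch for illustration only. The `describe_video_reference` helper is hypothetical, is not part of Inference, and does not describe how Inference itself interprets the value.

```python
def describe_video_reference(video_reference: str) -> str:
    """Classify a --video_reference value as 'webcam', 'rtsp', or 'file'."""
    if video_reference.isdigit():
        # A bare number such as "0" refers to a webcam ID.
        return "webcam"
    if video_reference.lower().startswith(("rtsp://", "rtsps://")):
        return "rtsp"
    # Anything else is treated as a path to a video file.
    return "file"


for reference in ["0", "rtsp://192.168.1.10:554/stream", "skiers.mp4"]:
    print(reference, "->", describe_video_reference(reference))
```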

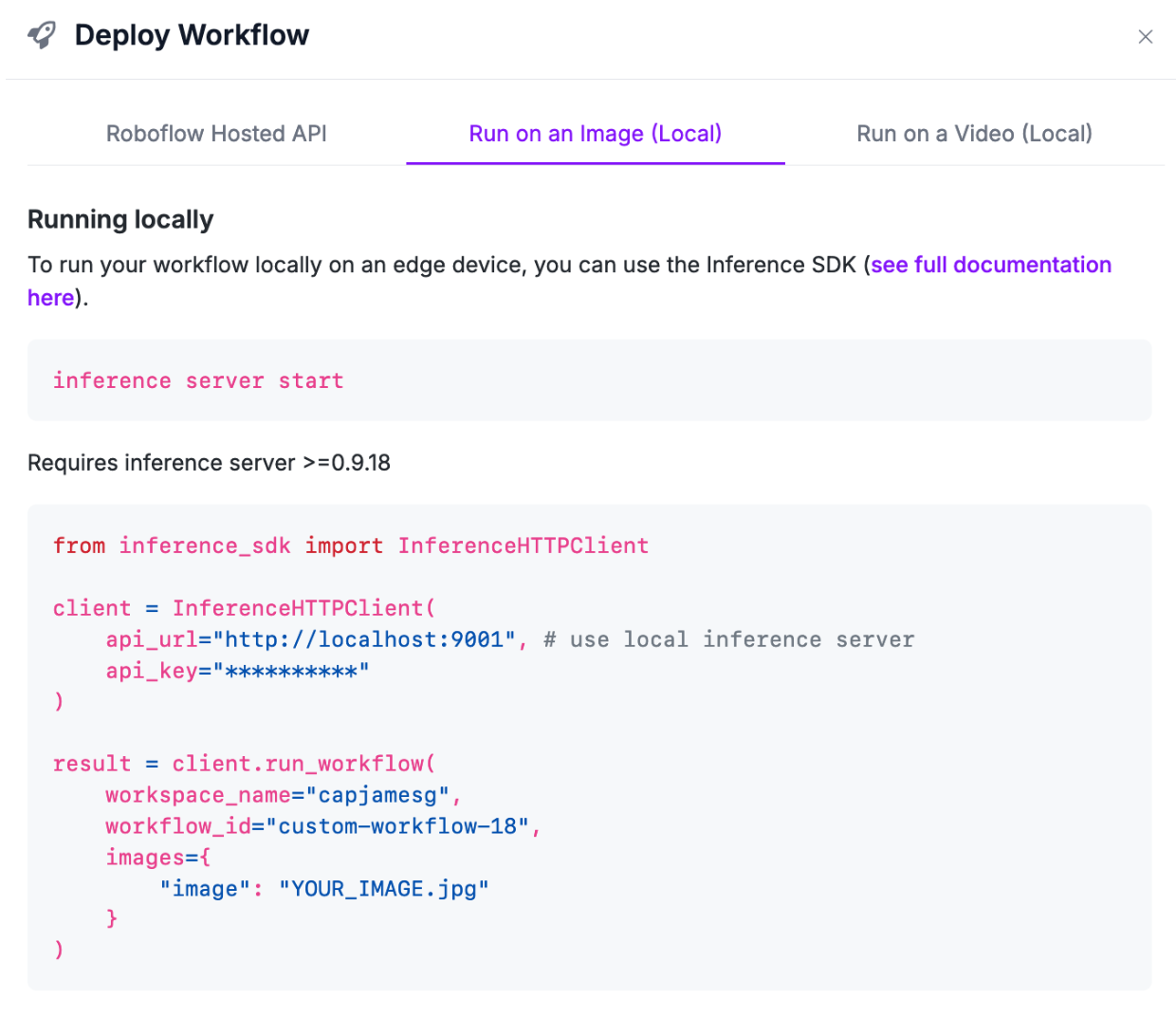

You can retrieve your Workspace name and Workflow ID from the Workflow web editor. To retrieve this information, click “Deploy Workflow” in the top right corner of the Workflows web editor, then copy these values from any of the default code snippets.

You only need to copy the workspace name and Workflow ID. You don’t need to copy the full code snippet, as we have already written the requisite code in the last step.

Run the script to test your Workflow.

Here is an example showing the Workflow running on a video:

Our Workflow successfully tracks the time each person spends in the drawn zone.

Conclusion

Roboflow Workflows is a web-based computer vision application builder. Workflows includes dozens of blocks you can use to build your application, from detection and segmentation models to prediction cropping and object tracking.

In this guide, we walked through how to build a Workflow that uses the new video processing features in Roboflow Workflows.

We created a Workflow, set up an object detection model, configured object tracking, then used the Time in Zone block to define a zone in which we wanted to calculate dwell time. We then created Workflow visualizations and ran the Workflow on our own hardware.

To learn more about Workflows and how to use Workflows on static images, refer to our Roboflow Workflows introduction.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Oct 18, 2024). Launch: Video Processing with Roboflow Workflows. Roboflow Blog: https://blog.roboflow.com/video-processing-roboflow-workflows/