In traditional image captioning, the goal is to assign a description that reflects the contents of a full image. Image captioning models are useful for a variety of purposes, from generating data for use in hybrid search systems to generating captions to accompany images in written text.

Dense image captioning, on the other hand, is concerned with generating rich descriptions of specific regions in an image.

In this guide, we are going to discuss what dense image captioning is and how you can generate dense image captions with Florence-2, an MIT-licensed multimodal vision model developed by Microsoft Research.

Without further ado, let’s get started!

What is Dense Image Captioning?

Introduced in the paper "DenseCap: Fully Convolutional Localization Networks for Dense Captioning" by Johnson, Justin and Karpathy, Andrej and Fei-Fei, Li, dense image captioning involves generating descriptions of specific regions in an image.

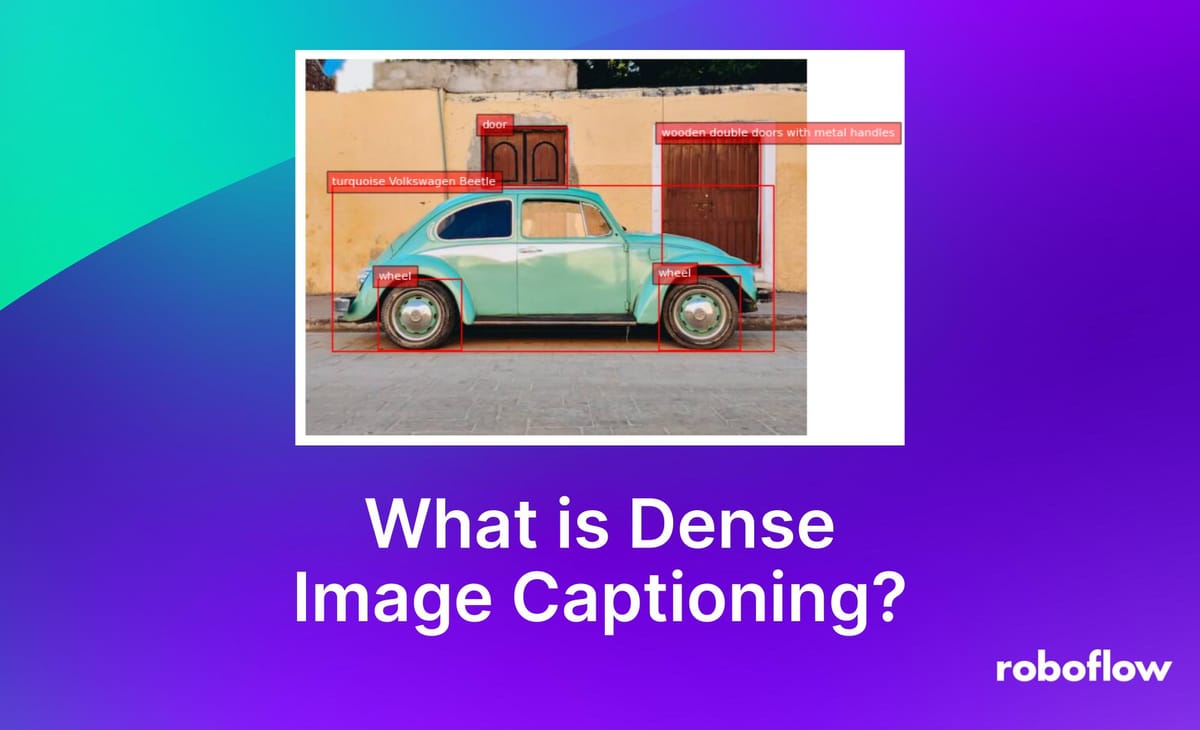

Consider the following image of a car on a street:

Let’s run this image through Florence-2, a multimodal vision model capable of both image captioning and dense image captioning. When prompted to generate a regular image caption, the model returns:

The image shows a vintage Volkswagen Beetle car parked on a cobblestone street in front of a yellow building with two wooden doors. The car is painted in a bright turquoise color and has a sleek, streamlined design. It has two doors on either side of the car, one on top of the other, and a small window on the front. The building appears to be old and dilapidated, with peeling paint and crumbling walls. The sky is blue and there are trees in the background.

In contrast, when prompted to generate a dense caption, the model returns:

The regular caption describes the whole image, whereas the dense caption localizes specific parts of the image.

The image of the dense caption results above shows the bounding boxes returned as part of the dense captions. We can infer extensive details about the image from both descriptions, but the dense captions give us localization information that we can use to further understand the image.

Dense image captioning is typically performed by multimodal models rather than traditional object detection models. This is because multimodal model architectures are able to generate rich descriptions without having to be specifically taught every possible class.

How to Generate Dense Image Captions with Florence-2

You can generate dense image captions with Florence-2, an open source multimodal vision model developed by Microsoft. Let’s walk through how to generate dense image captions.

Step #1: Install Required Dependencies

First, we need to install Transformers and associated dependencies, which we will use to run the Florence-2 model:

pip install transformers timm flash_attn einops -qWe will also need supervision, a Python package with utilities for processing computer vision model predictions:

pip install supervisionStep #2: Load the Model

Next, we need to import dependencies into a Python script and load the model. To do so, create a new Python file and add the following code:

from transformers import AutoProcessor, AutoModelForCausalLM

from PIL import Image

import requests

import copy

model_id = 'microsoft/Florence-2-large'

model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().cuda()

processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

def run_example(task_prompt, text_input=None):

if text_input is None:

prompt = task_prompt

else:

prompt = task_prompt + text_input

inputs = processor(text=prompt, images=image, return_tensors="pt")

generated_ids = model.generate(

input_ids=inputs["input_ids"].cuda(),

pixel_values=inputs["pixel_values"].cuda(),

max_new_tokens=1024,

early_stopping=False,

do_sample=False,

num_beams=3,

)

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

parsed_answer = processor.post_process_generation(

generated_text,

task=task_prompt,

image_size=(image.width, image.height)

)

return parsed_answer

Here, we load the model and its dependencies. The code above code is from the official Florence-2 notebook, written by Microsoft.

We can then run our model on an image using the following code:

task_prompt = "<DENSE_REGION_CAPTION>"

answer = run_example(task_prompt=task_prompt)

try:

detections = sv.Detections.from_lmm(sv.LMM.FLORENCE_2, answer, resolution_wh=image.size)

annotate(image.copy(), detections)

except Exception as e:

print(f"Exception: {e}")Here, we use the “<DENSE_REGION_CAPTION>” task type. This is the task type that generates dense image captions. We then load our detections into a supervision Detections object. With this object, we can use the supervision Annotators API to plot the dense captions on our image.

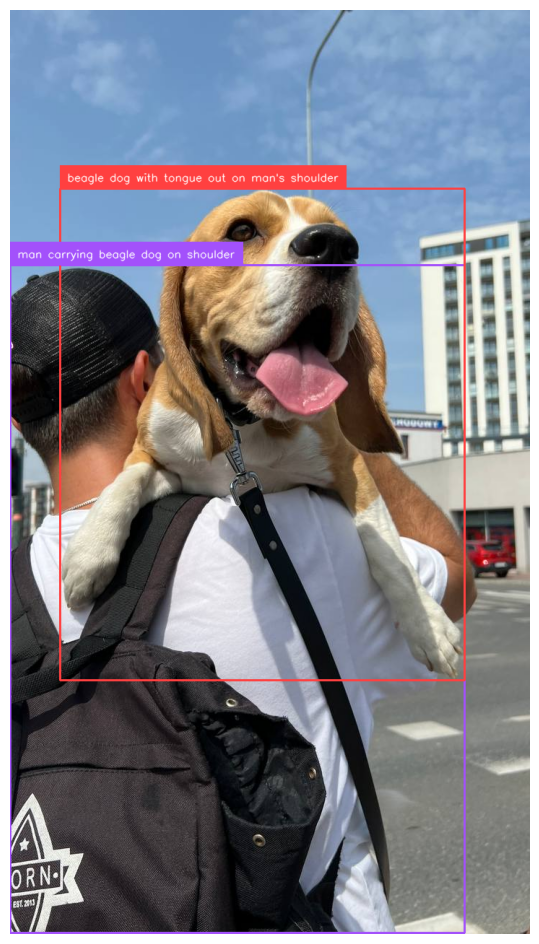

Let’s run our code on the following image of a dog:

Our model returns:

Our model successfully identified different regions of the image, and provided rich descriptions of those regions.

Since our model returns bounding boxes, we can programmatically evaluate the image as necessary. For example, we could check if a bounding box was in front of another one (i.e. if a car was parked in front of a door), check if two bounding boxes are side-by-side, and perform other checks that involve analyzing the relations of grounded regions in an image.

Conclusion

Dense image captioning is a computer vision task type that involves localizing objects in an image and generating captions for the localized regions.

In this guide, we walked through what dense image captioning is and how you can use Florence-2, a multimodal computer vision model, to generate dense captions of image regions.

To learn more about the Florence-2 model, refer to our guide to Florence-2.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Jul 10, 2024). What is Dense Image Captioning?. Roboflow Blog: https://blog.roboflow.com/what-is-dense-image-captioning/