Suppose you have a folder of videos in AWS S3 that you want to analyze using machine learning. Perhaps you want to identify timestamps for scenes in videos, run a custom object detection model on the videos, run OCR on frames in the video, or assign a moderation label to videos. These are all possible with the Roboflow Video Inference API.

With the Roboflow Video Inference API, you can run foundation vision models such as CLIP, as well as fine-tuned models that identify specific objects, on your videos.

In this guide, we are going to demonstrate how to run a football player detection model on a folder of videos from AWS S3. We will use this model to detect players, a football, and referees. The football player detection model we are using is available for you to use on Roboflow Universe, a community where over 50,000 vision models have been shared for public use.

Here is an example of the predictions from our model displayed on a video:

You can also use models you have trained privately on the Roboflow platform, as well as supported foundation models. Read our documentation on the Video Inference API to learn which foundation models are supported.

Without further ado, let’s get started!

Step #1: Select a Model

For this guide, we will use the football player detection model available on Roboflow Universe. That said, you can use any object detection model available on Universe, or any model you have trained privately on the Roboflow platform.

If you need a custom model fine-tuned for your use case, read our Roboflow Getting Started guide to learn how to train a model to identify custom objects.

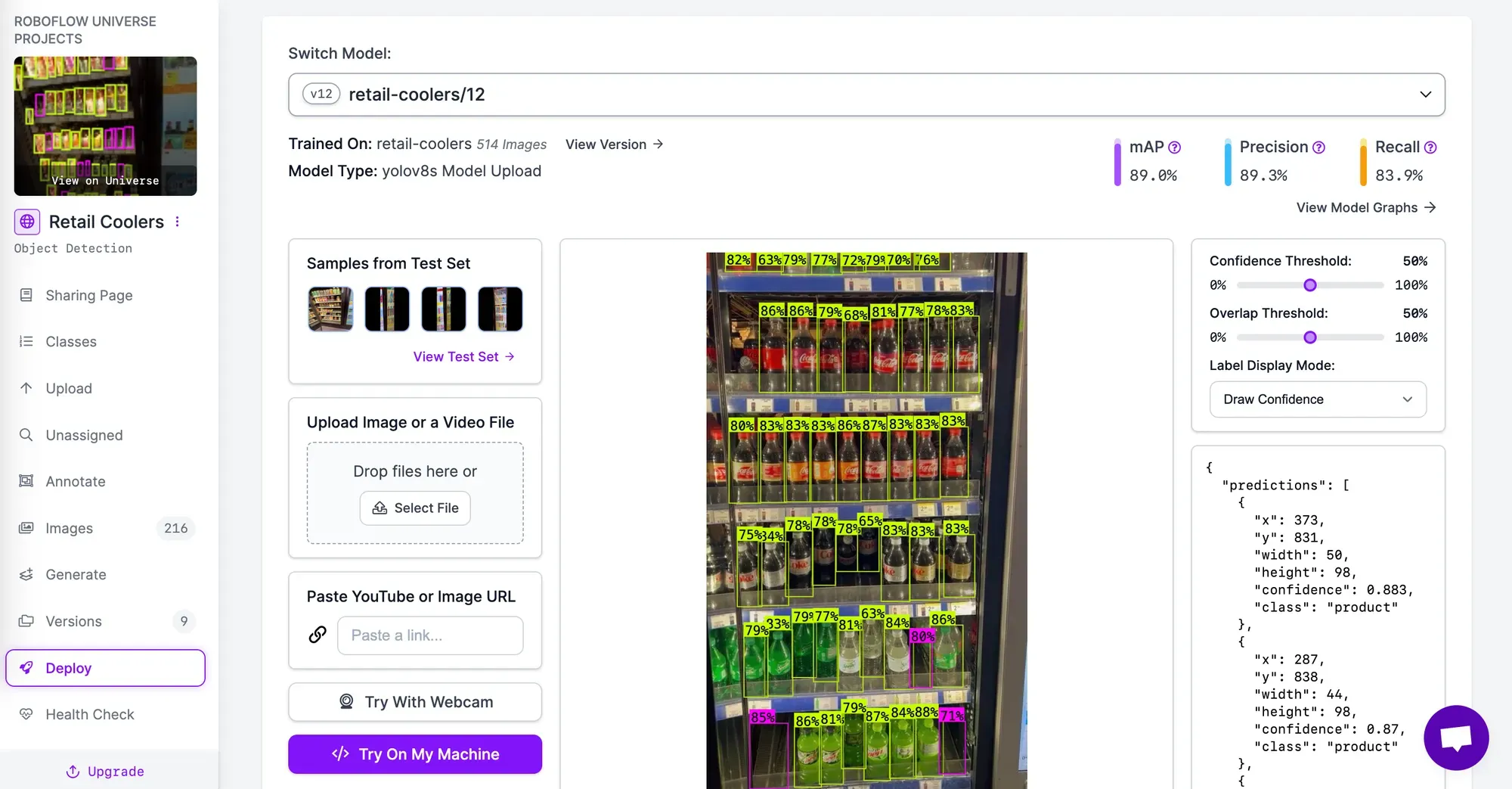

To explore public models, go to Roboflow Universe and search for a model related to the problem you are solving. You will need the model ID and your API key, both of which appear on the “Model” tab of every model on Universe.

Keep the model page open, as we will refer to it later.

If you are using a model you trained on Roboflow, go to your Roboflow dashboard and select the model you want to use to analyze your videos. Open the “Deploy” page, accessible from the left sidebar of your project page, to retrieve your model ID and version. These values appear in the “Switch model” section.
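The scripts in this guide take the project ID and the version number as two separate values, `PROJECT_ID` and `VERSION`. Assuming your model ID is shown as a single string in the form `project-id/version` (for example, `football-players-detection-3zvbc/2`), here is a minimal sketch of splitting it into those two values. `split_model_id` is a hypothetical helper, not part of the `roboflow` package.

```python
from typing import Tuple


def split_model_id(model_id: str) -> Tuple[str, int]:
    """Split an ID like "project-id/2" into ("project-id", 2).

    Hypothetical helper: assumes the "<project>/<version>" format.
    """
    project_id, sep, version = model_id.strip().rpartition("/")
    if not sep or not project_id or not version.isdigit():
        raise ValueError(f"Expected '<project>/<version>', got {model_id!r}")
    return project_id, int(version)


PROJECT_ID, VERSION = split_model_id("football-players-detection-3zvbc/2")
print(PROJECT_ID, VERSION)  # football-players-detection-3zvbc 2
```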

Once you have selected a model, you can start retrieving videos from AWS S3.

Step #2: Retrieve Videos from AWS S3

To retrieve videos from AWS S3, you will need the `aws` command line utility installed. You can use this command line utility to authenticate with AWS. To learn how to set up the AWS CLI and authenticate, refer to the official AWS CLI installation documentation.

You will also need to install boto3, the official AWS Python SDK:

```bash
pip install boto3
```

Finally, install `roboflow`, which we will use to analyze our videos, and `tqdm`, which we will use to display a progress bar showing the status of our analysis. We also need `supervision`, which we will use to plot predictions from the Roboflow Video Inference API onto a video.

```bash
pip install roboflow tqdm supervision
```

Once you have authenticated, you can retrieve your videos for processing with the Roboflow Video Inference API.

Create a new Python file and add the following code:

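```python
import json

import boto3
import roboflow
import tqdm

# Prompts you interactively for a Roboflow token the first time it runs.
roboflow.login()

PROJECT_ID = "football-players-detection-3zvbc"
VERSION = 2
AWS_BUCKET = "vision-rf"

rf = roboflow.Roboflow()
project = rf.workspace().project(PROJECT_ID)
model = project.version(VERSION).model

s3_client = boto3.client("s3")

# list_objects returns at most 1,000 keys per call, and the "Contents" key
# is missing entirely when the bucket is empty, so default to an empty list.
response = s3_client.list_objects(Bucket=AWS_BUCKET)
files = [file["Key"] for file in response.get("Contents", [])]

# One slot per video for the Video Inference API results.
file_results = {k: None for k in files}
```

Above, replace: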

- `PROJECT_ID` with your Roboflow project ID. Learn how to retrieve your model ID.
- `VERSION` with your Roboflow model version.
- `AWS_BUCKET` with the name of the AWS bucket in which your videos are stored.

The code above will load your Roboflow project and retrieve a list of all files in your bucket.

When you first run this code, you will be asked to generate an authentication token on the Roboflow platform. This process is interactive.
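Because a single `list_objects` call returns at most 1,000 keys, a large bucket needs pagination. Here is a minimal sketch that follows continuation tokens with boto3's `list_objects_v2` paginator and keeps only keys with common video extensions. The extension list and both helper names are assumptions for illustration; you could call `list_video_keys(AWS_BUCKET)` in place of the `files = ...` line in the script above.

```python
from typing import Iterable, List

# Assumed set of extensions to treat as videos; adjust for your bucket.
VIDEO_EXTENSIONS = (".mp4", ".mov", ".avi", ".mkv", ".webm")


def video_keys_from_pages(pages: Iterable[dict]) -> List[str]:
    """Collect keys that look like videos from ListObjectsV2 response pages."""
    keys = []
    for page in pages:
        # A page has no "Contents" key when it holds no objects.
        for obj in page.get("Contents", []):
            if obj["Key"].lower().endswith(VIDEO_EXTENSIONS):
                keys.append(obj["Key"])
    return keys


def list_video_keys(bucket: str) -> List[str]:
    """List every video key in a bucket, following pagination tokens."""
    import boto3  # imported here so the pure helper above works without boto3

    s3_client = boto3.client("s3")
    paginator = s3_client.get_paginator("list_objects_v2")
    return video_keys_from_pages(paginator.paginate(Bucket=bucket))
```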

Next, add the following code:

```python
for file in tqdm.tqdm(files, total=len(files)):
    # Signed URL the Video Inference API uses to download this video.
    # It expires 30 seconds after it is issued.
    url = s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": AWS_BUCKET, "Key": file},
        ExpiresIn=30,
    )

    # Submit the video for inference at five frames per second.
    # The first value returned is the job ID; the rest are not needed here.
    job_id, *_ = model.predict_video(
        url,
        fps=5,
        prediction_type="batch-video",
    )

    # Block until the job finishes, then store results under the S3 key.
    results = model.poll_until_video_results(job_id)
    file_results[file] = results

# Save the results for every video, keyed by file name.
with open("results.json", "w") as results_file:
    json.dump(file_results, results_file)
```

This code generates a signed URL that the Roboflow Video Inference API can use to retrieve your video for analysis. The URL expires 30 seconds after it is issued, which limits how long your data is exposed. The URL is then sent to Roboflow so your video can be processed. The `poll_until_video_results` function polls the Roboflow Video Inference API until results are available. The results are then saved to a “results.json” file, keyed by the name of the file that was processed.

The Video Inference API will run inference at five frames per second. You can adjust this rate by changing the `fps` value. Processing videos at a higher inference FPS costs more, since the model runs on more frames of each video.

This process will repeat for every video in the bucket. A progress bar will appear in your terminal that shows how many videos have been analyzed and how many are left to analyze.
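To get a feel for how the `fps` setting scales the amount of work, here is a rough estimate assuming one inference per sampled frame, so a video needs about duration × FPS inferences. Both function names are hypothetical, and the exact frame-sampling rule used by the API is an assumption here, so treat the numbers as approximations.

```python
from typing import Iterable


def estimated_inferences(duration_seconds: float, fps: int) -> int:
    """Approximate frames analyzed: one inference per sampled frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return round(duration_seconds * fps)


def estimated_folder_inferences(durations_seconds: Iterable[float], fps: int) -> int:
    """Sum the per-video estimates for every video in a folder."""
    return sum(estimated_inferences(d, fps) for d in durations_seconds)


# A 60-second clip at the 5 FPS used above versus 10 FPS.
print(estimated_inferences(60, 5))   # 300
print(estimated_inferences(60, 10))  # 600
# Ten two-minute videos at 5 FPS.
print(estimated_folder_inferences([120] * 10, 5))  # 6000
```

Doubling `fps` doubles the estimate, which is why higher inference rates cost more.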

To learn more about the structure of the response from the Video Inference API, refer to the Video Inference API documentation.
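The plotting script in Step #3 reads each video's entry in “results.json” as a `frame_offset` list plus a list of per-frame results stored under the model ID. Here is a minimal sketch of walking that layout, assuming the i-th per-frame result belongs to the i-th frame offset. `frames_with_predictions` is a hypothetical helper, and the sample data is made up for illustration. With real data, you would pass in `json.load(...)` of your “results.json” file.

```python
from typing import Dict, Iterator, List, Tuple


def frames_with_predictions(
    results: Dict[str, dict], video_key: str, model_id: str
) -> Iterator[Tuple[int, List[dict]]]:
    """Yield (frame_offset, predictions) pairs for one video."""
    video = results[video_key]
    # Assumption: per-frame results are in the same order as frame_offset.
    for offset, frame_result in zip(video["frame_offset"], video[model_id]):
        yield offset, frame_result["predictions"]


# Hypothetical, trimmed-down results for one video with two analyzed frames.
sample_results = {
    "video1.mp4": {
        "frame_offset": [0, 6],
        "football-players-detection-3zvbc": [
            {"predictions": [{"class": "player", "confidence": 0.91}]},
            {"predictions": []},
        ],
    }
}

for offset, predictions in frames_with_predictions(
    sample_results, "video1.mp4", "football-players-detection-3zvbc"
):
    print(offset, len(predictions))
# 0 1
# 6 0
```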

Step #3 (Optional): View Predictions on a Video

Let’s plot our object detections for a video so we can check that our video has been analyzed properly.

First, download one of the files from your S3 bucket to your computer. Do not change the name of the file, since we will use the file name to retrieve the predictions we saved earlier.

Then, create a new file and add the following code:

```python
import json

import numpy as np
import roboflow
import supervision as sv

VIDEO_NAME = "video1.mp4"
MODEL_NAME = "football-players-detection-3zvbc"
VERSION = 2

roboflow.login()

rf = roboflow.Roboflow()
project = rf.workspace().project(MODEL_NAME)
model = project.version(VERSION).model

with open("results.json", "r") as f:
    results = json.load(f)

model_results = results[VIDEO_NAME][MODEL_NAME]

# Remove tracker IDs before converting predictions to supervision Detections.
for result in model_results:
    for r in result["predictions"]:
        r.pop("tracker_id", None)

# Indices of the video frames the Video Inference API analyzed.
frame_offset = results[VIDEO_NAME]["frame_offset"]

# Newer supervision releases replace BoundingBoxAnnotator with BoxAnnotator;
# on older releases, use sv.BoundingBoxAnnotator() here instead.
box_annotator = sv.BoxAnnotator()
label_annotator = sv.LabelAnnotator()


def callback(scene: np.ndarray, index: int) -> np.ndarray:
    # Use predictions from the closest analyzed frame (an exact match is distance 0).
    nearest = min(frame_offset, key=lambda x: abs(x - index))
    result = model_results[frame_offset.index(nearest)]

    detections = sv.Detections.from_inference(result)
    # Detections keep the order of the predictions, so one label per prediction.
    labels = [p["class"] for p in result["predictions"]]

    annotated_image = box_annotator.annotate(scene=scene, detections=detections)
    annotated_image = label_annotator.annotate(
        scene=annotated_image, detections=detections, labels=labels
    )
    return annotated_image


sv.process_video(
    source_path=VIDEO_NAME,
    target_path="output.mp4",
    callback=callback,
)
```

Above, replace:

- `VIDEO_NAME` with the name of the file you downloaded from S3.
- `MODEL_NAME` with your Roboflow model ID.
- `VERSION` with your Roboflow model version.

This code will:

- Load the predictions from the Video Inference API that we saved to the “results.json” file in Step #2, for the video named in `VIDEO_NAME`.

- Plot our bounding box predictions onto the video.

- Save the results to a file called “output.mp4”.

Here is the result from the script above:

Bounding boxes that map to the predictions from the Video Inference API have been plotted on our video.
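The `callback` function in the script above finds the predictions to draw by scanning every entry in `frame_offset` for each video frame, which is linear in the number of analyzed frames. Assuming `frame_offset` is sorted in ascending order, a binary search with Python's `bisect` module finds the same nearest analyzed frame in logarithmic time. `nearest_offset_index` is a hypothetical helper, written so that ties go to the earlier frame, matching what `min()` returns on a sorted list.

```python
from bisect import bisect_left
from typing import Sequence


def nearest_offset_index(frame_offset: Sequence[int], index: int) -> int:
    """Return the position in frame_offset of the analyzed frame closest to index.

    Assumes frame_offset is sorted ascending; ties go to the earlier frame.
    """
    if not frame_offset:
        raise ValueError("frame_offset is empty")
    pos = bisect_left(frame_offset, index)
    if pos == 0:
        return 0
    if pos == len(frame_offset):
        return len(frame_offset) - 1
    before, after = frame_offset[pos - 1], frame_offset[pos]
    # Prefer the earlier neighbour when both are equally close.
    return pos - 1 if index - before <= after - index else pos


offsets = [0, 6, 12]
print(nearest_offset_index(offsets, 4))  # 1
print(nearest_offset_index(offsets, 3))  # 0
```

Inside `callback`, `model_results[nearest_offset_index(frame_offset, index)]` would then give the predictions for the current frame.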

supervision has a range of other utilities you can use to process videos. For example, you can filter detections by confidence, count predictions in a zone, count when predictions cross a line, and more. To learn more about using supervision for video processing, refer to the supervision documentation.
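In supervision, filtering by confidence is done by indexing `Detections` with a boolean mask, such as `detections[detections.confidence > 0.5]`. If you want quick statistics straight from “results.json” without loading any video, here is a plain-Python sketch that counts predictions per class above a confidence threshold. It assumes each prediction dictionary has `class` and `confidence` keys. The function name, the 0.5 default threshold, and the sample predictions are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def count_confident_classes(
    predictions: List[dict], min_confidence: float = 0.5
) -> Dict[str, int]:
    """Count predictions per class, skipping any below min_confidence."""
    confident = (p for p in predictions if p["confidence"] >= min_confidence)
    return dict(Counter(p["class"] for p in confident))


# Hypothetical predictions for one analyzed frame.
frame_predictions = [
    {"class": "player", "confidence": 0.91},
    {"class": "player", "confidence": 0.42},
    {"class": "referee", "confidence": 0.77},
    {"class": "ball", "confidence": 0.55},
]

print(count_confident_classes(frame_predictions))       # {'player': 1, 'referee': 1, 'ball': 1}
print(count_confident_classes(frame_predictions, 0.6))  # {'player': 1, 'referee': 1}
```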

Conclusion

You can now use the Roboflow Video Inference API to analyze videos stored in AWS S3. You can run fine-tuned vision models hosted on Roboflow or public models available on Roboflow Universe.

In this guide, we detected the location of balls, players, and referees in football videos stored on AWS. We used the boto3 SDK to list our videos and generate signed URLs for them, the Roboflow Video Inference API to run inference, and supervision to plot predictions on each frame.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Jan 12, 2024). How to Analyze a Folder of Videos from AWS S3. Roboflow Blog: https://blog.roboflow.com/analyze-video-aws-s3-roboflow/