On June 5th, 2023, at WWDC, Apple announced its biggest combination of hardware and software in years. This year’s “one more thing” announcement, the product Apple traditionally reveals toward the end of a keynote, was the Apple Vision Pro, a new spatial computing headset integrated with the Apple ecosystem.

Apple Vision Pro’s vertically integrated hardware and software platform will bring spatial computing, what previously might have been called augmented reality (AR) or mixed reality (MR), to market in 2024. The release combines never-before-seen hardware advances with new software capabilities to create a new paradigm in computing.

This post will cover how this new computing platform and computer vision will come together to deliver a new wave of use cases and applications. Let’s begin!

What is the Apple Vision Pro?

The Apple Vision Pro uses eye tracking, hand gestures, and voice as inputs to create a fully immersive experience.

The headset has a micro‑OLED display system with 23 million pixels (for reference, Vision Pro fits 64 pixels into the space of a single iPhone pixel). It pairs an M2 chip running visionOS with a new R1 chip dedicated to real-time processing of input from 12 cameras, 5 sensors, and 6 microphones; the R1 then streams images to the displays within 12 milliseconds.
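The pixel-density comparison is easier to picture with a little arithmetic. Pixels sit on a two-dimensional grid, so, assuming square pixels, fitting 64 pixels into the area of one iPhone pixel means 8 times as many pixels along each axis (here $A$ is the area of one pixel and $p$ its pitch, or side length). Apple describes the 23 million pixels as spread across two displays; assuming an even split with one display per eye, each eye gets roughly 11.5 million pixels.

```latex
\[
\frac{A_{\text{iPhone}}}{A_{\text{Vision Pro}}} = 64
\;\Rightarrow\;
\frac{p_{\text{iPhone}}}{p_{\text{Vision Pro}}} = \sqrt{64} = 8,
\qquad
\frac{23 \times 10^{6}\ \text{pixels}}{2\ \text{displays}} = 11.5 \times 10^{6}\ \text{pixels per display}
\]
```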

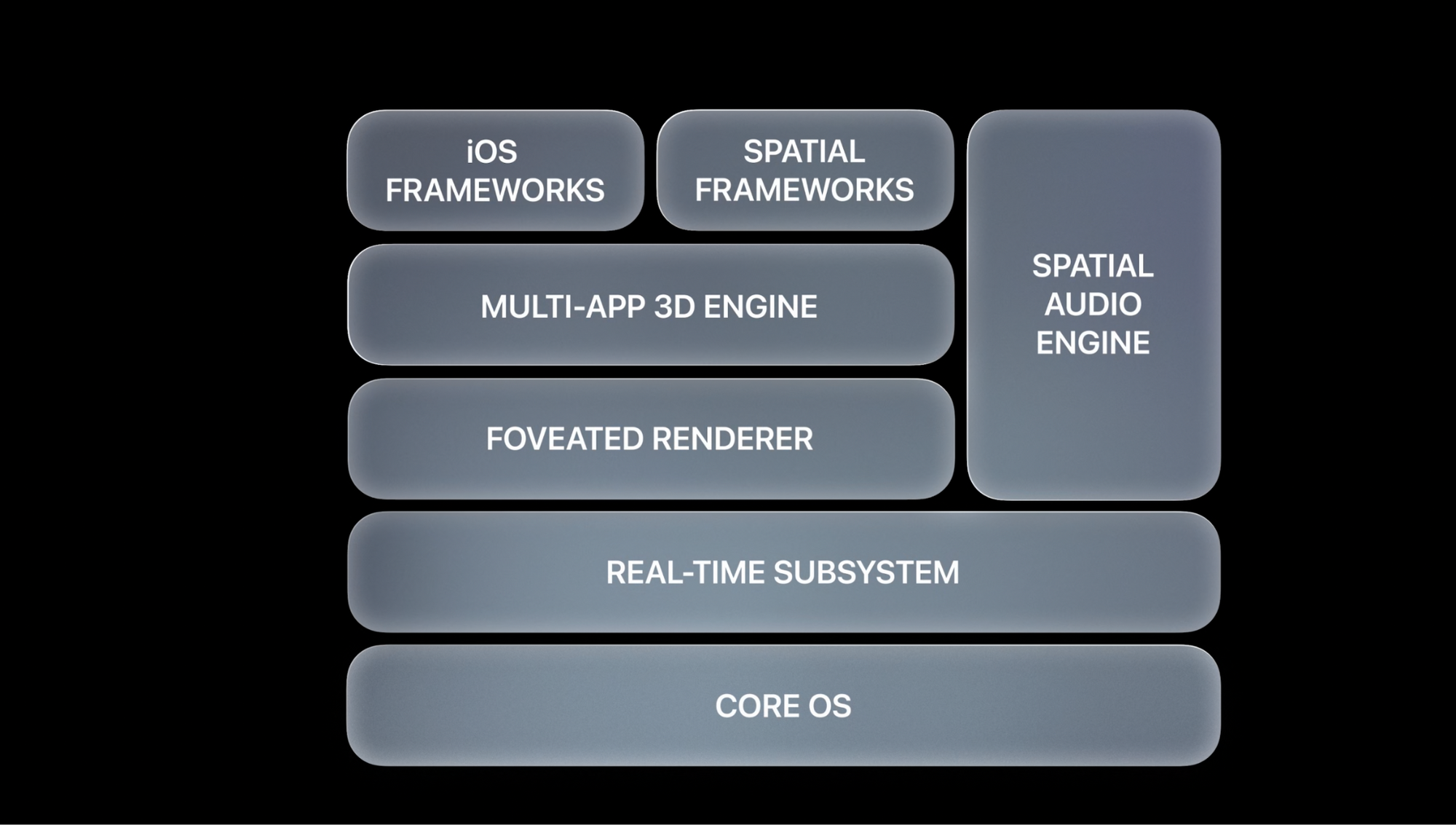

What is visionOS?

visionOS is the new operating system powering the Apple Vision Pro. It will let developers create purpose-built applications for the device using familiar Apple developer tools such as Xcode, SwiftUI, RealityKit, and ARKit, along with support for Unity and Reality Composer Pro, a new app for preparing 3D content.

People can interact with applications while staying connected to their surroundings. The visionOS SDK arrives later in June 2023, along with Xcode, the visionOS Simulator, Reality Composer Pro, documentation, sample code, design guidance, and more. In the meantime, you can read about how to prepare for visionOS and learn about the nuances of developing applications for spatial computing devices.

Computer vision developers will be able to use ARKit on visionOS to blend virtual content with the real world.

Apple Vision Pro, visionOS, and Computer Vision

Vision Pro already appears to have computer vision capabilities built into the platform, and we expect to learn more about the headset as developers start building applications for it. Below, we summarize some of the ways the headset used computer vision in Apple’s keynote.

Gesture Recognition

Hand gesture recognition is core to the way users interact with Vision Pro. Users pinch their thumb and index finger together to expand, move, and scroll through applications. Vision Pro appears to recognize hand gestures across a wide field of view, so users can interact with applications comfortably even when their hands are outside their line of sight.

Gesture recognition plays a key role in the spatial nature of the headset. No additional equipment, such as handheld controllers, is required to operate it; instead, users interact with applications using their hands and eyes.
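Apple has not said how Vision Pro decides that a pinch has happened. As a rough illustration only, a common approach in hand-tracking systems is to measure the distance between the thumb tip and the index fingertip and compare it to a threshold. The Python sketch below assumes some hand tracker already supplies 3D fingertip positions in meters; the `Point3D` and `PinchDetector` names and both threshold values are hypothetical, not Apple’s. Using two thresholds (hysteresis) keeps the gesture from flickering on and off when the fingertips hover near a single cutoff.

```python
import math
from dataclasses import dataclass


@dataclass
class Point3D:
    """A 3D position in meters (hypothetical hand-tracker output)."""
    x: float
    y: float
    z: float


class PinchDetector:
    """Threshold-based pinch detector with hysteresis (illustrative only)."""

    def __init__(self, start_threshold: float = 0.015, release_threshold: float = 0.03):
        # Assumed values in meters; not Apple's actual parameters.
        if release_threshold <= start_threshold:
            raise ValueError("release_threshold must exceed start_threshold")
        self.start_threshold = start_threshold
        self.release_threshold = release_threshold
        self.pinching = False

    def update(self, thumb_tip: Point3D, index_tip: Point3D) -> bool:
        """Feed one frame of fingertip positions; return whether a pinch is active."""
        gap = math.dist((thumb_tip.x, thumb_tip.y, thumb_tip.z),
                        (index_tip.x, index_tip.y, index_tip.z))
        if self.pinching:
            # Release only once the fingertips separate past the wider threshold.
            if gap > self.release_threshold:
                self.pinching = False
        elif gap < self.start_threshold:
            # Start a pinch when the fingertips come close enough together.
            self.pinching = True
        return self.pinching


if __name__ == "__main__":
    detector = PinchDetector()
    thumb = Point3D(0.0, 0.0, 0.0)
    # Simulated fingertip gaps over four frames: apart, touching, hovering, apart.
    for gap in (0.05, 0.01, 0.02, 0.04):
        print(gap, detector.update(thumb, Point3D(gap, 0.0, 0.0)))
```

With these thresholds the four frames report `False`, `True`, `True`, `False`: the 2 cm frame stays pinched because it has not yet crossed the 3 cm release threshold.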

Human Detection

A key message of the presentation was that the headset has features to keep people from being disconnected from those around them. In the keynote, Apple said “[Apple Vision Pro] seamlessly blends digital content with the physical world, while allowing users to stay present and connected to others”. Demo footage shows that when a person is detected near the user, that person comes into view through the content being displayed.

Device Detection

Seamless interaction with other Apple products was a major selling point, since it lets business users move between devices without breaking their workflow. The demo shows Vision Pro recognizing a MacBook and moving content from the laptop into the headset.

These examples show that Apple has built object recognition directly into the interface, because computer vision is a critical part of how users interact with the world around them.

Getting Started with Computer Vision and Apple Vision Pro

The visionOS SDK is set to be released in June 2023, and there is a lot you can do today to prepare for building visionOS applications that use computer vision. For all of the machine learning and vision content from WWDC23, head to the ML & Vision page.

For a crash course, read up on Create ML and Core ML to understand how you can build intelligence into applications with Apple’s machine learning features for vision, natural language, speech, and sound. Once you have an overview, explore the machine learning APIs and machine learning resources in more detail. Then dive into the vision documentation and the available Core ML models.

After getting an overview of what’s possible, start experimenting with applications like Cash Counter to see how these components work together to deliver augmented and mixed reality experiences on mobile. Cash Counter uses the Roboflow SDK, the open-source sample app repository, and an open-source dataset to deploy a custom computer vision model directly into an iOS application.
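To make the Cash Counter idea concrete, here is a hedged sketch of a post-processing step that could turn object detection output into a running total. It assumes the model returns one prediction per coin or bill as a dictionary with `class` and `confidence` keys, similar in shape to the JSON that Roboflow’s hosted inference API returns; the class names, the `DENOMINATIONS_CENTS` table, and the 0.5 confidence cutoff are all hypothetical and not taken from the Cash Counter repository. Values are kept in integer cents to avoid floating-point rounding.

```python
from typing import Iterable, Mapping, Optional

# Hypothetical class names mapped to values in cents (integers avoid float rounding).
DENOMINATIONS_CENTS = {
    "penny": 1,
    "nickel": 5,
    "dime": 10,
    "quarter": 25,
    "one-dollar": 100,
    "five-dollar": 500,
    "ten-dollar": 1000,
    "twenty-dollar": 2000,
}


def total_cents(predictions: Iterable[Mapping], min_confidence: float = 0.5) -> int:
    """Sum the value of each confident, recognized detection."""
    total = 0
    for pred in predictions:
        if pred.get("confidence", 0.0) < min_confidence:
            continue  # skip detections the model is unsure about
        value: Optional[int] = DENOMINATIONS_CENTS.get(pred.get("class", ""))
        if value is not None:
            total += value  # unknown classes contribute nothing
    return total


def format_dollars(cents: int) -> str:
    """Render a non-negative cent amount as a dollar string, e.g. 2025 -> '$20.25'."""
    return f"${cents // 100}.{cents % 100:02d}"


if __name__ == "__main__":
    sample = [
        {"class": "twenty-dollar", "confidence": 0.92},
        {"class": "quarter", "confidence": 0.81},
        {"class": "five-dollar", "confidence": 0.30},  # below the cutoff
        {"class": "button", "confidence": 0.99},       # not a denomination
    ]
    print(format_dollars(total_cents(sample)))  # prints $20.25
```

This assumes each coin or bill yields exactly one box, i.e. overlapping duplicates have already been removed (for example by non-maximum suppression); otherwise the total would double-count.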

Once you’re ready to get started, follow this step-by-step video tutorial on deploying a custom model into a mobile app.

Apple Vision Pro Use Cases for Enterprises

Vision Pro opens up a new landscape of computer vision use cases for enterprises. Its advances in hardware and software enable applications that previously would not have been possible.

The M2 and R1 chips provide the processing power and multimodal input required for broad adoption within the enterprise. The Vision Pro’s ultra-high-resolution display system offers the level of fidelity needed for truly hands-free applications that augment workers in enterprise environments. Let’s explore ways enterprises can use the Apple Vision Pro.

Quality Assurance and Inspection in Manufacturing

Intelligence augmentation using computer vision can act as a personal assistant for humans doing manual quality assurance or product inspection work. Vision Pro can give workers additional support in identifying quality issues or anomalies in the manufacturing process, creating an opportunity to reduce errors and speed up inspection to increase productivity.

For example, consider a worker being trained to understand defects flagged by a manufacturing pipeline. The Vision Pro could offer immersive training on different defects, with interactive feedback that helps workers recognize errors and know what to do when defects occur.

Assembly, Installation, and Repair in Field Services

Combining computer vision with Vision Pro to deliver detailed visual instructions can expand the breadth of knowledge of any individual field service employee.

When dispatching employees or contractors, enterprises can equip them with explicit visual instructions, allowing service professionals to increase the number of products they are able to service. Beyond servicing more products, employees can also improve the quality of their work, guided by visual cues and alerts during the job.

Training, Information, and Assistance Across Industries

A broad benefit of the Vision Pro is equipping employees with training, information, and assistance for any given task or situation.

With computer vision guiding them through tasks in a variety of scenarios, employees can become productive faster. They can be guided through new tasks, request information in environments they haven’t experienced before, and use the camera system to bring in experts for human guidance. Computer vision can help new employees become effective in their roles sooner and reduce errors during onboarding.

Conclusion

The Apple Vision Pro and visionOS have delivered a winning combination of features to bring mixed reality and computer vision into enterprise business for broad adoption.

The advanced hardware, with its multiple sensors and high-resolution displays, will enable hands-free experiences for employees in manufacturing, energy, transportation, logistics, field service, and healthcare.

Apple has already used computer vision to build native experiences for its new headset, and those experiences offer inspiration for how enterprises can start thinking about using the new computing platform to tackle some of their biggest problems.

To get started building computer vision applications for wearable devices, like the Apple Vision Pro, utilize one of the 50,000+ free pre-trained models in Roboflow Universe.

Frequently Asked Questions

What industrial use cases does the Apple Vision Pro headset have?

The Apple Vision Pro could be used to assist with employee training, quality assurance processes, installation and repair in field services, and more.

How much will the Apple Vision Pro headset cost?

Apple announced the headset will cost $3,499 at launch.