In May 2016, Joshua Brown died in what was widely reported as the first fatal crash involving Tesla's Autopilot. The crash was attributed to the self-driving car's system not recognizing the difference between a truck and the bright sky above it. The system did not recognize the difference between a truck and the sky?!

After you read this article, you will be able to understand why Joshua Brown died. You will better understand the genius and the risks that come with using deep learning technologies for self-driving cars... and much more!

Deep Learning Technology in Self-Driving Cars

In this article, we focus on the deep learning technology in self-driving cars. Of course, many other components are involved in deploying this core technology. The car is equipped with sensors, cameras, a compute engine, and decision algorithms that surround the deep learning technology. Assuming these surrounding technologies are present and functioning, how can we train a computer to recognize objects in the car's surroundings, as captured in a video feed?

Collecting Training Data for Driving

Deep learning systems in self-driving cars learn by supervision. That is, in order to train our computer system to recognize cars, people, bikes, and trucks, we need to provide labeled, real-life examples of these objects. We have a collection of datasets and models you can use for self-driving use cases.

If we were embarking on this project from scratch, we would collect these images ourselves by taking pictures and video as we drive through the streets. Then we would label our images with annotations for each object we are interested in detecting. This is an enormous task. Data collection and curation are often at the crux of the deep learning research process.

Luckily, if you are interested in starting to experiment on deep learning projects yourself, a lot of this legwork has already been done and we have public datasets for self-driving cars.

Deploying Object Detection for Autonomous Vehicles

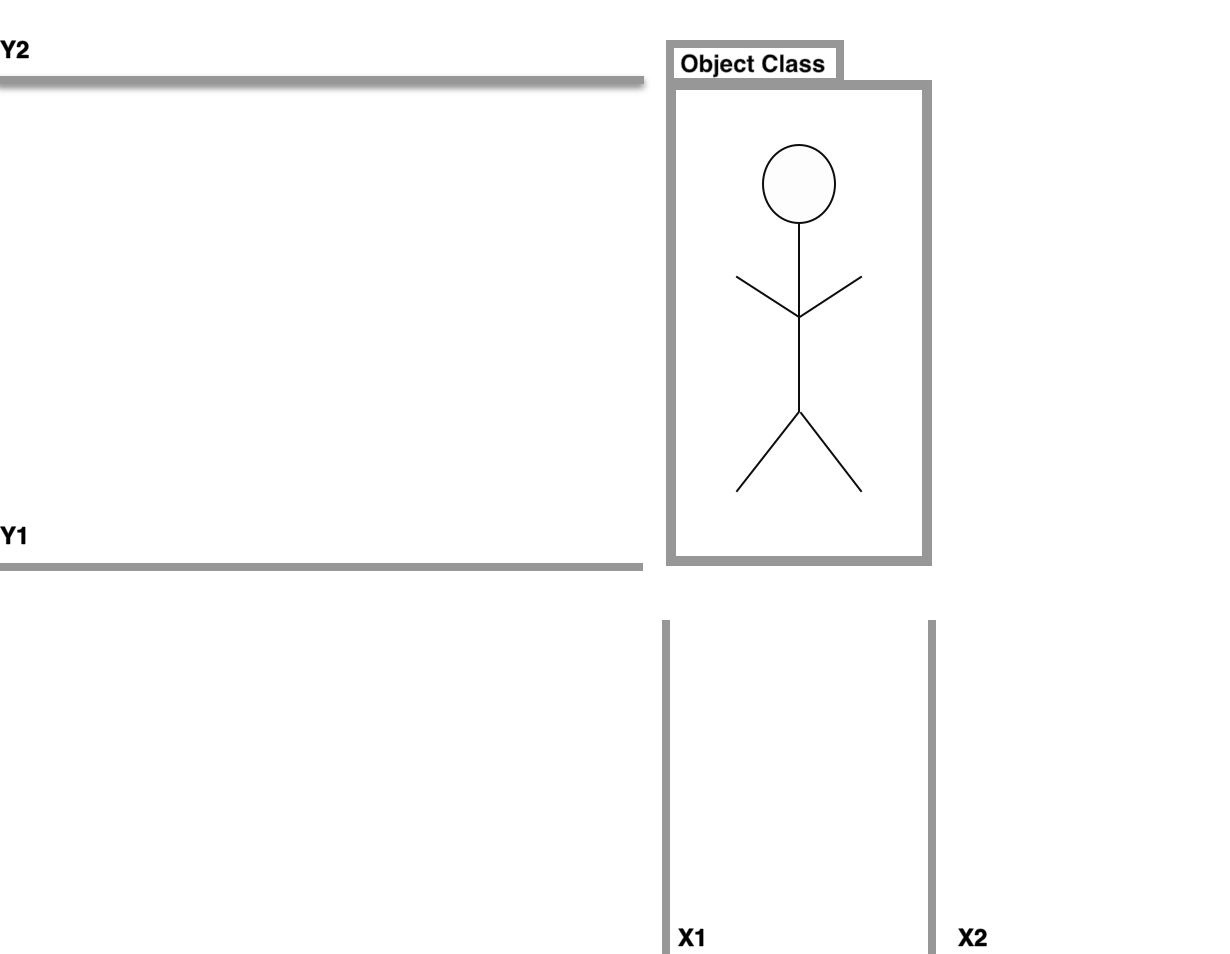

In order to train a model to recognize the cars, people, bikes, etc. in our images, we need to frame the problem in a way that can be expressed mathematically. In other words, we create a mathematical model that mirrors the decision logic we use with our own innate vision capabilities. Object detection is a popular task in computer vision. Most deep learning models for object detection break the problem into two parts:

- Locate each object by predicting its bounding box (regression problem)

- Classify the bounding box contents into relevant categories (classification problem)
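To make the two parts concrete, here is a minimal sketch of how one raw prediction might be turned into a detection. The `Detection` class, the `CLASSES` list, and the example numbers are hypothetical and are not taken from any particular model; real detectors produce many candidate boxes per image and add further post-processing.

```python
import math
from dataclasses import dataclass

# Hypothetical label set, chosen only for illustration.
CLASSES = ["car", "person", "bike", "truck"]


@dataclass
class Detection:
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels: the regression output
    label: str         # the winning category: the classification output
    confidence: float  # probability assigned to the winning category


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def decode(box, class_scores):
    """Combine a predicted box with its class scores into one Detection."""
    probs = softmax(class_scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return Detection(box=tuple(box), label=CLASSES[best], confidence=probs[best])


# Made-up model outputs for a single candidate object.
det = decode([48.0, 30.0, 212.0, 140.0], [2.0, 0.1, -1.0, 0.5])
print(det.label, det.box, round(det.confidence, 3))
```

The box coordinates are continuous numbers, which is why predicting them is a regression problem, while picking one label from a fixed list is a classification problem.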

There are many ways to model the object detection problem, and a deep dive on the neural network architectures is beyond the scope of this post. If you are curious to look into an example further, I have written a deep dive into the EfficientDet architecture and design.

The main takeaway is that we can use our training data to mathematically inform our model about the task at hand. We do this through a process called gradient descent, where each example "teaches" the model to make a more correct prediction by updating the model's parameters. Gradient descent minimizes a loss function that we define for our problem: a number that measures how wrong the model's predictions are (loosely, the opposite of accuracy). Here are a few lines of output from an object detection training run in my Colab notebook comparing EfficientDet and YOLOv3:

Epoch: 10/40. Cls loss: 0.18862. Reg loss: 0.41760. Batch loss: 0.60622 Total loss: 0.60955

Epoch: 11/40. Cls loss: 0.17679. Reg loss: 0.38587. Batch loss: 0.56266 Total loss: 0.58988

Epoch: 12/40. Cls loss: 0.19804. Reg loss: 0.38336. Batch loss: 0.58141 Total loss: 0.55659

Epoch: 13/40. Cls loss: 0.13830. Reg loss: 0.33150. Batch loss: 0.46980 Total loss: 0.55499
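In the log lines above, the batch loss appears to be the sum of the classification loss and the regression loss, which matches the two-part framing of the problem. The following sketch parses the four lines and checks that arithmetic. The regular expression is written for this exact log format, and the `1e-4` tolerance is an assumption that allows for rounding in the printed values.

```python
import re

# The four log lines quoted above, copied verbatim.
LOG = """\
Epoch: 10/40. Cls loss: 0.18862. Reg loss: 0.41760. Batch loss: 0.60622 Total loss: 0.60955
Epoch: 11/40. Cls loss: 0.17679. Reg loss: 0.38587. Batch loss: 0.56266 Total loss: 0.58988
Epoch: 12/40. Cls loss: 0.19804. Reg loss: 0.38336. Batch loss: 0.58141 Total loss: 0.55659
Epoch: 13/40. Cls loss: 0.13830. Reg loss: 0.33150. Batch loss: 0.46980 Total loss: 0.55499
"""

PATTERN = re.compile(
    r"Epoch: (\d+)/\d+\. Cls loss: (\d+\.\d+)\. Reg loss: (\d+\.\d+)\. "
    r"Batch loss: (\d+\.\d+) Total loss: (\d+\.\d+)"
)

rows = []
for line in LOG.splitlines():
    match = PATTERN.match(line)
    if match:
        epoch = int(match.group(1))
        cls, reg, batch, total = (float(g) for g in match.groups()[1:])
        rows.append({"epoch": epoch, "cls": cls, "reg": reg, "batch": batch, "total": total})

for row in rows:
    # Check whether classification loss + regression loss equals the batch loss.
    adds_up = abs(row["cls"] + row["reg"] - row["batch"]) < 1e-4
    print(row["epoch"], "cls + reg == batch:", adds_up, "| total:", row["total"])
```

The sketch does not try to interpret how the "Total loss" column is computed; it only prints the values so the downward trend is easy to see.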

The more examples the model sees, the more likely it is to tag objects correctly in the different scenarios it will encounter in production. In the log above, we can see the total loss decreasing. This is an encouraging sign that the model is learning, although only evaluation on images it has not yet seen can tell us how well it will generalize once it is deployed to production.
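Gradient descent itself can be illustrated on a much smaller problem than object detection. The sketch below is a toy, not the training code from the notebook: it fits a single parameter `w` so that `w * x` matches some made-up data, using mean squared error as the loss. The data, the learning rate, and the step count are all assumptions chosen so the example converges.

```python
# Toy data generated by y = 3x; the "model" has a single parameter w.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]


def loss(w):
    """Mean squared error between the predictions w * x and the targets y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(w):
    """Derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


w = 0.0               # initial guess
learning_rate = 0.01  # step size (an assumption for this toy problem)
history = []

for step in range(200):
    history.append(loss(w))
    w -= learning_rate * grad(w)  # move w a small step against the gradient

print("learned w:", round(w, 4))
print("first loss:", history[0], "last loss:", history[-1])
```

A real detector has millions of parameters instead of one, and it computes gradients on batches of images, but the update rule follows the same idea: nudge every parameter in the direction that lowers the loss.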

It is immensely important to be sure that training data is of high quality, especially in the context of self-driving cars. Recently, we discovered that a popular dataset for self-driving cars contains errors and flaws 😱.

If you are interested in getting your hands dirty with training object detection models, see our tutorials on training EfficientDet for object detection and how to train YOLOv3 on a custom dataset. Both of these tutorials step through the code required to train state-of-the-art object detection models.

Evaluating Object Detection Models for Self-Driving Cars

How do we know that a model will perform well after training? A neural network is like a black box, and it is difficult for us to peek inside to uncover the underlying logic. So rather than relying on an intuitive understanding of the model, deep learning researchers quantify the success of their models on a test set of images to see how well the model does outside of training. That is, if I train a model to recognize cars on one dataset, and then I test that model and find that it identifies 100% of cars on a completely different dataset, then it seems safe to assume that the model will do well when it is introduced to entirely new data in real life.

But this is a bold prospect! What if the test set that I have used does not contain the whole range of scenarios that might occur? In Joshua Brown's case, Tesla's training and testing sets apparently lacked images captured in extremely bright conditions, where the distinction between a truck and the sky is faint.
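The "identifies 100% of cars" measurement above corresponds to recall: the fraction of labeled objects that the model finds. Here is a minimal sketch of that calculation on a held-out image. It assumes a predicted box counts as a hit when its intersection over union (IoU) with a labeled box is at least 0.5, which is a common convention but still an assumption here, and the boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall(ground_truth, predictions, iou_threshold=0.5):
    """Fraction of labeled boxes matched by at least one distinct predicted box."""
    if not ground_truth:
        return 1.0
    used = set()  # indices of predictions that are already matched
    found = 0
    for gt in ground_truth:
        for i, pred in enumerate(predictions):
            if i not in used and iou(gt, pred) >= iou_threshold:
                used.add(i)
                found += 1
                break
    return found / len(ground_truth)


# Two labeled cars in a test image, but the model only found one of them.
labeled_cars = [(0, 0, 10, 10), (20, 20, 30, 30)]
predicted_cars = [(1, 1, 10, 10)]
print("recall:", recall(labeled_cars, predicted_cars))
```

A high recall on the test set only tells us about the kinds of scenes the test set contains. If no test image shows a white truck against a bright sky, this number says nothing about that scenario.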

Setting up a Data Stream for Autonomous Operation

Once we have trained an object detection model to identify objects of interest while our car is on the road and determined that it performs well on unseen data, we can set up a system to process images in a video feed as the car is driving. Watch some footage here:

You will notice that the bounding boxes around people and cars move and update as the model makes inferences on its surroundings in real time. New state-of-the-art models are quite small and can make predictions very fast!

In the upper left corner of each box, the object's classification is displayed. In the upper right corner, the model's confidence in the prediction is displayed. The confidence threshold is set at 20%, so the model must be at least 20% confident in a given object before its prediction is shown.
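Applying a confidence threshold is a simple filtering step. The sketch below shows the idea with the 20% threshold mentioned above; the detection dictionaries and their values are made up for illustration and do not come from the video.

```python
def filter_by_confidence(detections, threshold=0.20):
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]


# Hypothetical raw model output for a single video frame.
raw_detections = [
    {"label": "car", "confidence": 0.91},
    {"label": "person", "confidence": 0.35},
    {"label": "traffic light", "confidence": 0.12},
]

shown = filter_by_confidence(raw_detections)
for d in shown:
    print(d["label"], d["confidence"])
```

Lowering the threshold shows more boxes, including more mistakes. Raising it hides uncertain predictions, at the risk of missing real objects.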

However, the model is far from perfect. You will see that it often classifies signs as traffic lights, and will occasionally miss a person, especially if they are far in the distance.

Now we can see the whole picture. We have a deep learning system making inferences on objects in its surroundings in real time. If we were a car manufacturer, we could combine this technology with lidar sensors to detect object distances and ultrasonic sensors in the wheels to detect curbs and parked vehicles. Then, we would write algorithms that take in this information and send decisions to an actuator that operates the vehicle.
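As a rough illustration of that last step, the sketch below combines detections with distance estimates and returns a driving decision. Everything in it is hypothetical: the `distance_m` field, the 10 meter stopping distance, and the two possible actions are placeholders, and a real vehicle would rely on far more sophisticated planning and control logic.

```python
def choose_action(detections, stop_distance_m=10.0):
    """Return "brake" if any detected object is closer than stop_distance_m, else "continue"."""
    for det in detections:
        if det["distance_m"] < stop_distance_m:
            return "brake"
    return "continue"


# Hypothetical fused output: labels from the object detector, distances from lidar.
frame = [
    {"label": "car", "distance_m": 42.0},
    {"label": "person", "distance_m": 7.5},
]

print(choose_action(frame))      # the person is within 10 m
print(choose_action(frame[:1]))  # only the distant car remains
```

Note that a rule like this can only react to objects the detector actually reports, which is why missed detections matter so much.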

Should You Buy a Self-Driving Car?

The case for buying an autonomous or semi-autonomous vehicle will always be a statistical one, because deep learning technology is difficult to take apart and inspect under the hood.

I think the decision to trust a computer over yourself boils down to two key concepts:

1) A belief in the power of data and statistics to predict the future

2) A sense of humility

Because at the end of the day, you need to trust the system more than you trust yourself.

I will be buying one when regulations are passed 🚗 What do you think?

Cite this Post

Use the following entry to cite this post in your research:

Jacob Solawetz. (May 4, 2020). Breaking Down the Technology Behind Self-Driving Cars. Roboflow Blog: https://blog.roboflow.com/breaking-down-self-driving-cars/