Computer vision (CV) is reshaping industries like agriculture, healthcare, retail, and manufacturing by enabling machines to interpret visual data, from counting avocados in a market to detecting defects on a production line. Traditionally, building CV applications required years of expertise in machine learning, data annotation, and model deployment.

Now, large language models (LLMs) combined with Roboflow, a leading CV platform, democratize this process, allowing anyone, coders and non-coders alike, to create powerful vision apps in hours.

This guide explains how to leverage LLMs as coding assistants with Roboflow to build apps for tasks like object detection, image classification, or scene segmentation.

How to Build Computer Vision Applications with LLMs and Roboflow

You’ll learn to find pre-trained models by asking your LLM to search Roboflow Universe, use prompts to adjust settings like confidence thresholds via Roboflow’s hosted API, deploy apps to platforms like Vercel, and ensure compliance with model licensing to avoid legal pitfalls.

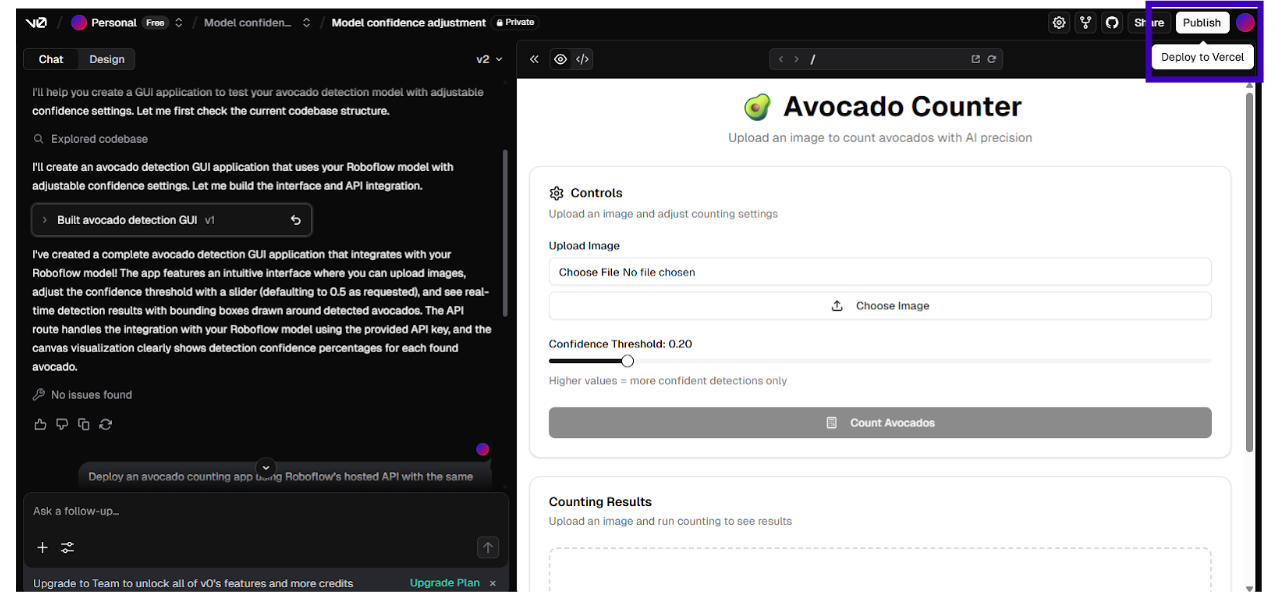

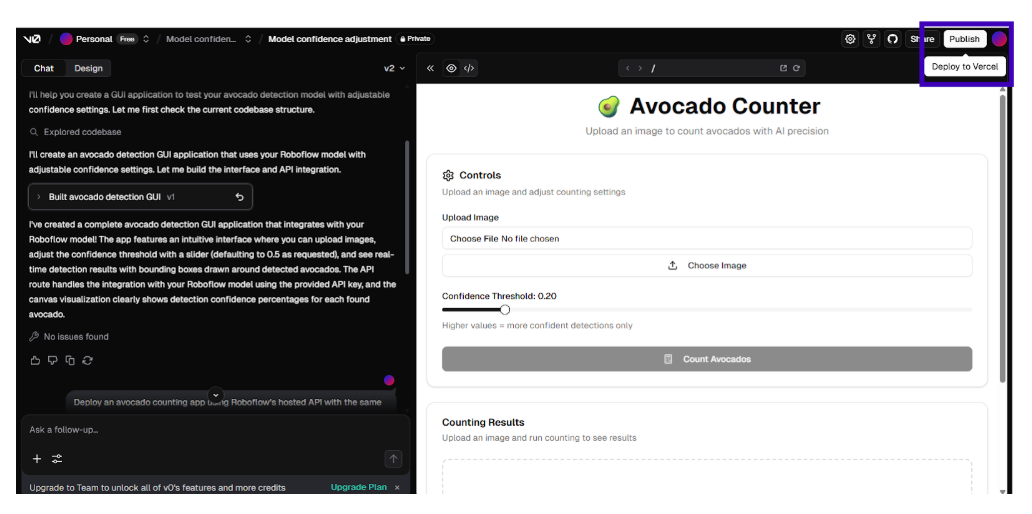

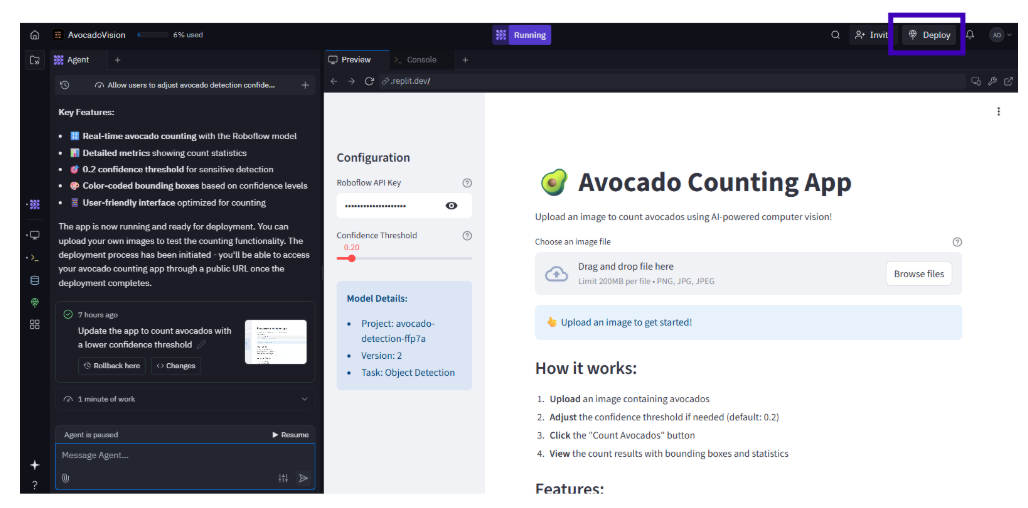

Here is an example application, which we will show you how to build, that counts the number of avocados in an image:

LLM Coding Assistants to Use with Roboflow

Roboflow’s intuitive, API-first ecosystem pairs seamlessly with LLM-powered coding assistants, enabling you to build vision apps using natural language prompts with little to no coding, making CV accessible to all skill levels.

Here’s a curated list of top LLM coding assistants compatible with Roboflow, each excelling at interpreting prompts, searching for models, generating API calls, or running apps directly:

- Cursor: Cursor is a VS Code-based, AI-enhanced editor that integrates models such as Claude 3.5 Sonnet and GPT-4o to deliver instant coding support, faster and smarter debugging, and context-aware programming assistance, all designed to maximize developer efficiency.

- Vercel v0: Vercel’s v0 is an AI tool that quickly builds and deploys web apps from natural language using React and Next.js, streamlining prototyping for all users.

- OpenAI GPT-5: GPT-5 is OpenAI’s newest advanced LLM, excelling in reasoning and coding for versatile applications.

- Anthropic Claude (Claude 4 Opus / Sonnet): Anthropic’s Claude 4 Opus and Sonnet are advanced, safety-focused LLMs designed for low-hallucination reasoning in coding, analysis, and creative tasks.

- Google Gemini (Pro + Flash): Google’s Gemini 2.5 Pro and Flash are multimodal LLMs. Pro excels at complex reasoning with a 1M+ token context window, while Flash is optimized for speed on everyday tasks. Both boost productivity in coding and integrate with Google apps.

- Meta’s Code Llama / Llama 3 Agents: Meta’s Code Llama and Llama 3 agents are open-source LLMs that excel at code generation and autonomous agent tasks, offering customizable, high-performance solutions for coding, multimodal processing, and enterprise workflows. Both are strong options for open-source projects.

- Cohere Command-R+ (RAG-optimized): Cohere’s Command-R+ is an efficient, RAG-optimized LLM that excels at accurate, verifiable responses for enterprise tasks, offering strong retrieval quality, multilingual support, and cost-effective scalability compared to larger models.

- Others: GitHub Copilot (IDE autocomplete), Amazon CodeWhisperer (now part of Amazon Q Developer; AWS integration), and Replit (interactive coding and deployment; runs apps from prompts without code).

These assistants work with Roboflow’s hosted API, so you can prompt them to find models, adjust settings (e.g., “set confidence to 0.5”), or run apps directly. v0 and Replit are particularly powerful because they execute and visualize apps without you writing code, unlike Claude, which is limited to text-based responses.

How to Use Roboflow and LLM Coding Assistants to Make Apps

Creating vision apps with Roboflow and LLMs is straightforward: ask your LLM to find a model in Roboflow Universe, open the model’s page to get its URL, obtain an API key, and prompt the LLM to use the hosted API for inference with customized settings like confidence thresholds. Optionally, ask it to generate a GUI as well.

Tools like v0 and Replit can run the app directly from your prompt without you writing code, delivering interactive outputs like web interfaces. Claude excels at model discovery but can’t execute code, so use it for finding models and switch to v0/Replit for running apps.

This section guides you through building an avocado counter, with examples using Claude Sonnet 4.0 for discovery and v0/Replit for execution, plus tips for optimization and common pitfalls.

Step 1: Get Your Roboflow API Key

Start by creating a free Roboflow account at roboflow.com.

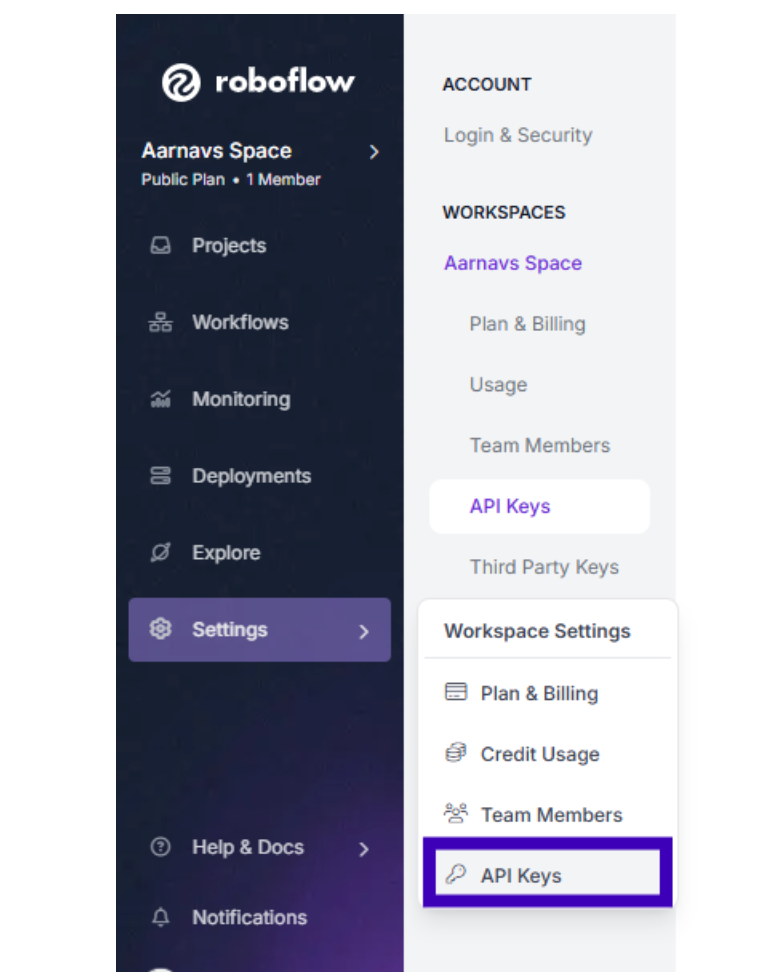

Navigate to Settings (top-right menu), then API Keys, and copy your private API key.

This key authenticates access to Roboflow’s hosted API for inference.

Provide the key securely to the app your LLM generates, for example as an environment variable or through the tool’s secrets settings. Never hardcode the key in scripts or share it publicly. Store it in a .env file during development, and use a secret manager for production applications.
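If the generated app is Python, keeping the key out of source code usually means reading it from the environment. The following is a minimal sketch of that pattern. The variable name `ROBOFLOW_API_KEY` is an assumption (use whatever name your tool sets up), and the tiny `.env` loader is a hypothetical stand-in for the `python-dotenv` package.

```python
import os
from pathlib import Path


def load_dotenv(path: str = ".env") -> None:
    """Minimal .env loader (hypothetical helper): copy KEY=VALUE lines into os.environ.

    Variables that are already set in the environment are not overwritten.
    """
    env_file = Path(path)
    if not env_file.exists():
        return
    for line in env_file.read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and malformed lines
        key, value = line.split("=", 1)
        os.environ.setdefault(key.strip(), value.strip().strip('"').strip("'"))


def get_api_key(var_name: str = "ROBOFLOW_API_KEY") -> str:
    """Read the Roboflow API key from the environment, failing loudly if it is missing."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set {var_name} in your environment or .env file")
    return key
```

Add `.env` to `.gitignore` so the key never reaches your repository.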

Step 2: Find a Model by Asking Your LLM (No Code Needed)

Roboflow Universe is a treasure trove of over 50,000 pre-trained models and 250,000 datasets, covering tasks like object detection (e.g., counting avocados), classification (e.g., identifying plant diseases), and segmentation (e.g., isolating objects in medical scans). Instead of coding, ask your LLM to search Universe, then visit the model’s page to confirm and copy its URL.

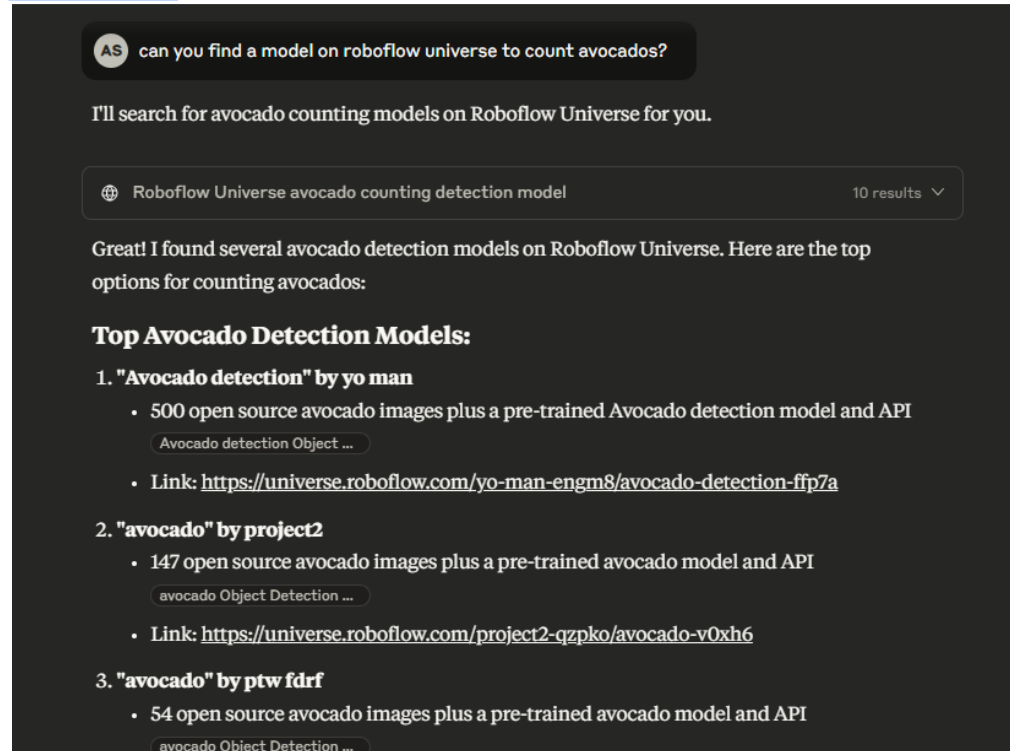

Example Prompt for Claude Sonnet 4.0: “Can you find a model on Roboflow Universe to count avocados?”

Example output:

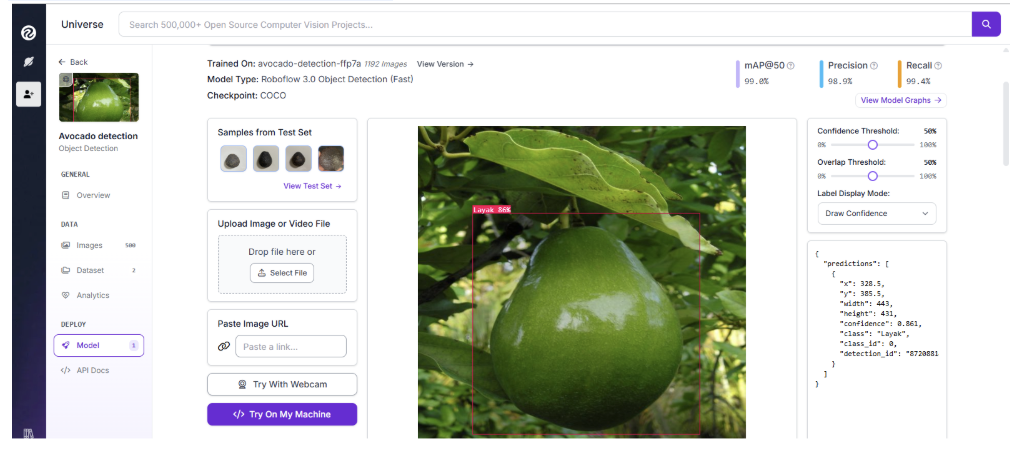

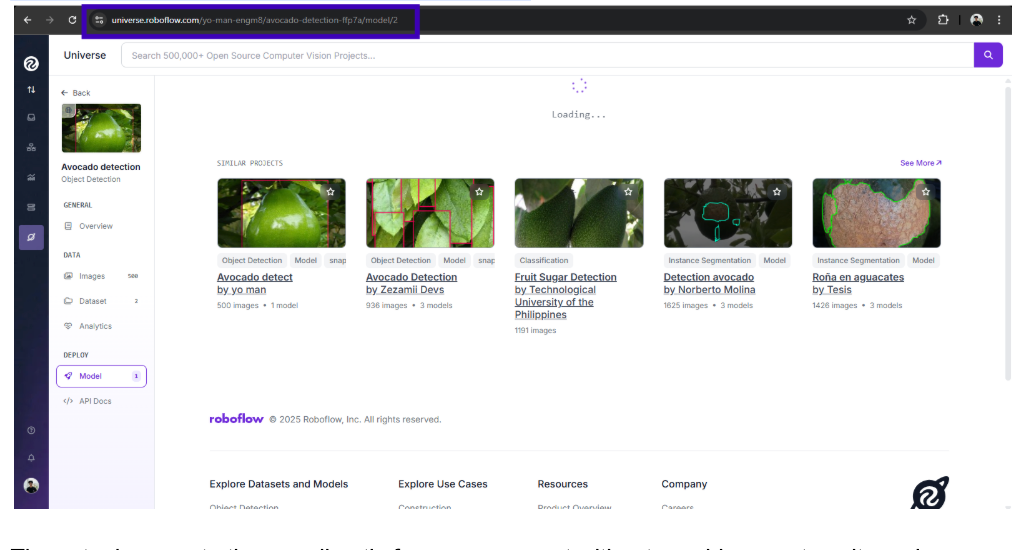

Visit the recommended model’s page (e.g., https://universe.roboflow.com/yo-man-engm8/avocado-detection-ffp7a), open the “Model” section, and copy the model URL or note the model ID (avocado-detection-ffp7a/2). Test the model by uploading a sample image (e.g., a photo of avocados on a table) in Universe’s web interface to verify its detections. No code is required: just ask the LLM and confirm on the website.

Tip: Use Universe’s filters (e.g., “Has a Model”) and check model metrics like mAP and precision to choose the best fit. For avocados, a model trained on a larger dataset (e.g., 500 images) typically gives better accuracy across lighting conditions and angles.
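The model ID is the project slug plus a version number. The sketch below shows how a model URL maps to that ID. It assumes the URL shape `https://universe.roboflow.com/<workspace>/<project>/model/<version>`, as in the model URL used in the prompts below. A URL without a `/model/<version>` suffix is rejected, because the version cannot be inferred from it.

```python
from urllib.parse import urlparse


def model_id_from_universe_url(url: str) -> str:
    """Turn a Universe model URL into a 'project/version' model ID.

    Assumes the path looks like /<workspace>/<project>/model/<version>.
    """
    parts = [p for p in urlparse(url).path.split("/") if p]
    # Expected parts: [workspace, project, "model", version]
    if len(parts) >= 4 and parts[2] == "model" and parts[3].isdigit():
        return f"{parts[1]}/{parts[3]}"
    raise ValueError(f"No model version found in URL: {url}")
```

For example, `https://universe.roboflow.com/yo-man-engm8/avocado-detection-ffp7a/model/2` yields `avocado-detection-ffp7a/2`.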

Step 3: Use the Model with Your LLM and Hosted API

With the model URL (as shown in the image) or model ID and your API key, prompt your LLM in tools like v0 or Replit to run inference using Roboflow’s hosted API, adjust settings such as confidence thresholds, and generate a GUI for your images.

These tools execute the app directly from your prompt without requiring you to write code, producing interactive outputs like web interfaces for uploading images and viewing results. Claude can’t run Python code on its platform, so while it’s great for finding models, it’s less suited to executing or visualizing apps. This demonstration uses v0 or Replit for seamless runtime and visualization.

Example Prompt for v0 or Replit: “Adjust the confidence of this model: https://universe.roboflow.com/yo-man-engm8/avocado-detection-ffp7a/model/2 to 0.5 using my api_key (insert your own API key). Let me run a GUI using my own images.”

The tool builds and runs the app directly (no code needed from you), producing an interactive GUI (e.g., a Next.js page in v0 or a Streamlit app in Replit) where you upload images and see avocado counts at a confidence threshold of 0.5. Both tools give you real-time interaction through a web-based interface. The GUI typically displays the number of avocados detected, a bounding box around each one, and its confidence score, making it easy to validate results.
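The generated app ultimately makes an HTTP call to the hosted API. The following is a rough Python sketch of that call, assuming the request style of Roboflow’s hosted detection endpoint: a POST to `https://detect.roboflow.com/<project>/<version>` with `api_key`, `confidence`, and `overlap` as query parameters and the base64-encoded image as the body. The 0–1 scale for `confidence` and `overlap` is an assumption, since older Roboflow docs describe a 0–100 scale, so check the current API reference before relying on it.

```python
import base64
import json
import urllib.parse
import urllib.request

HOSTED_API = "https://detect.roboflow.com"  # hosted endpoint named in this guide


def build_inference_url(model_id: str, api_key: str,
                        confidence: float = 0.5, overlap: float = 0.3) -> str:
    """Build a hosted-API URL for a 'project/version' model ID.

    Assumption: confidence/overlap are accepted on a 0-1 scale (older docs use 0-100).
    """
    query = urllib.parse.urlencode(
        {"api_key": api_key, "confidence": confidence, "overlap": overlap})
    return f"{HOSTED_API}/{model_id}?{query}"


def infer(image_path: str, model_id: str, api_key: str, **params) -> dict:
    """POST a base64-encoded image to the hosted API and return the parsed JSON."""
    with open(image_path, "rb") as f:
        body = base64.b64encode(f.read())
    request = urllib.request.Request(
        build_inference_url(model_id, api_key, **params),
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

A call such as `infer("market.jpg", "avocado-detection-ffp7a/2", key, confidence=0.5)` should return a dict whose `predictions` list holds one entry per detection, with box center `x`/`y`, `width`, `height`, `confidence`, and `class` fields.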

The confidence threshold of 0.5 ensures the model reports detections it’s at least 50% confident about, reducing false positives (e.g., mistaking limes for avocados) while capturing most true detections. For example, in a market stall image, this setting balances accuracy and noise.
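In code, the threshold is just a filter over the returned predictions. The sketch below counts detections at or above a threshold, assuming the response shape described for the hosted API (a `predictions` list whose items carry `confidence` and `class`). The class names `"avocado"` and `"lime"` used in the example are illustrative. Check the model page for the labels it actually outputs.

```python
from typing import Optional


def count_detections(response: dict, confidence: float = 0.5,
                     class_name: Optional[str] = None) -> int:
    """Count predictions whose confidence is at or above the threshold.

    Optionally restrict the count to a single class label.
    """
    return sum(
        1
        for p in response.get("predictions", [])
        if p["confidence"] >= confidence
        and (class_name is None or p["class"] == class_name)
    )
```

Passing `class_name` is a cheap guard when a model detects several fruits but you only want to count avocados.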

Tips and Pitfalls:

- Prompt Precision: Be specific (e.g., “use model ID avocado-detection-ffp7a/2” vs. just the URL) to avoid LLM confusion.

- Image Quality: Ensure images are clear; low-resolution or blurry photos may reduce detection accuracy. Prompt “Enhance image quality for inference” if needed.

- Testing: Always test on Universe’s web interface first to confirm the model suits your use case (e.g., avocados in a tree vs. a store).

- LLM Limitation: Don’t ask Claude, or other LLMs that can’t execute backend code, to run the app. They can only give you code or instructions as text. They can’t run the app or display backend results the way v0 or Replit can.

Step 4: Customize Inference Settings with LLM Prompts

Optimize your app’s performance by tweaking settings via prompts in v0, Replit, or other LLMs. These adjustments fine-tune the model for your specific needs, improving accuracy, speed, or robustness. Here are key parameters, why they matter, and how to adjust them:

- Confidence Threshold: Filters detections by score (0-1). Why? Reduces false positives in complex scenes (e.g., avocados mixed with other fruits). How: Prompt “Set confidence to 0.5.” Example: A 0.5 threshold ensures reliable avocado counts in a crowded market image.

- Model Sizes/Versions: Universe models have versions (e.g., /2 vs. /3). Why? Newer versions may improve accuracy or speed (e.g., faster inference for real-time apps). How: Prompt “Use the latest version of avocado-detection-ffp7a.”

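- Overlap Threshold (IoU): Suppresses duplicate boxes. When two boxes overlap by more than the threshold (e.g., overlap=0.3, measured as intersection over union), only the more confident one is kept. Why? Prevents double-counting avocados in dense clusters. How: Prompt “Set overlap to 0.3.”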

- Batch Processing: Processes multiple images. Why? Speeds up tasks like inventory counting across a farm. How: Prompt “Infer on 10 image URLs.”
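To see what the overlap setting does, here is standard greedy non-maximum suppression (NMS) on corner-format boxes `(x1, y1, x2, y2)`. Roboflow applies its `overlap` parameter on the server, and this generic sketch is not confirmed to match its exact implementation. It shows that a lower threshold suppresses more aggressively, which is why 0.3 helps in dense clusters.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.3) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it too much, repeat.

    Returns the indices of the kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes whose IoU with the kept box exceeds the threshold
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

Two avocados whose boxes overlap with an IoU of about 0.68 collapse into one detection at a threshold of 0.3 but both survive at 0.7. Tune the threshold against images where fruit actually touches.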

Example: “Adjust the avocado detection model to use a 0.6 confidence threshold and process 10 images.” The LLM in v0/Replit runs the updated app directly, displaying results (e.g., counts and visualizations) without you coding. For real-time apps, prompt “Optimize for video inference at 30 FPS” to adjust settings for speed.

Practical Example: Imagine you’re building an app for a grocery chain to count avocados in daily shipments. You’d prompt: “Use model ID avocado-detection-ffp7a/2 with confidence 0.6 and IoU 0.3 to count avocados in 50 warehouse images.” The LLM runs the app, showing counts and bounding boxes, streamlining inventory management.
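For a batch like the 50 warehouse images, the generated app needs to fan requests out and collect per-image counts. Below is one way to sketch that with a small thread pool. `infer_fn` is a hypothetical stand-in for whatever function calls the hosted API for a single image and returns its JSON. `max_workers` is kept small so that a burst of requests is less likely to hit API quotas.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def count_batch(image_paths: List[str],
                infer_fn: Callable[[str], dict],
                confidence: float = 0.6,
                max_workers: int = 4) -> Dict[str, int]:
    """Run inference on many images concurrently and return a count per image.

    infer_fn (hypothetical) takes an image path and returns the API's JSON as a dict.
    """
    def count_one(path: str) -> int:
        response = infer_fn(path)
        return sum(1 for p in response.get("predictions", [])
                   if p["confidence"] >= confidence)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        counts = list(pool.map(count_one, image_paths))  # map preserves input order
    return dict(zip(image_paths, counts))
```

Summing the returned dict's values gives the shipment total, while the per-image counts help you spot photos the model struggled with.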

Pitfalls to Avoid:

- Overly High Confidence: Setting confidence too high (e.g., 0.9) may miss valid detections in low-light images. Test thresholds between 0.3–0.6 for balance.

- Version Mismatch: Ensure the model version matches your prompt (e.g., /2 vs. /3). Check Universe for the latest version.

- Rate Limits: Free Roboflow accounts have API quotas. If you hit limits, prompt “Optimize API calls for efficiency” or upgrade to a paid plan.
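One way to test thresholds between 0.3 and 0.6 without spending extra API calls is to request predictions once at the lowest threshold, then re-filter locally. This sketch assumes the response was fetched with a confidence at or below the smallest value you want to compare. Otherwise, the low-confidence detections were never returned.

```python
from typing import Dict, Iterable


def sweep_thresholds(response: dict,
                     thresholds: Iterable[float] = (0.3, 0.4, 0.5, 0.6)) -> Dict[float, int]:
    """Count detections at several confidence thresholds from a single API response."""
    scores = [p["confidence"] for p in response.get("predictions", [])]
    return {t: sum(1 for s in scores if s >= t) for t in thresholds}
```

If the count drops sharply between two thresholds on a low-light image, the lower threshold is probably the safer default for that setting.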

Step 5: Common Use Cases for Vision Apps

To inspire your projects, here are popular use cases for Roboflow and LLM-powered vision apps, showcasing versatility across industries:

- Agriculture: Count crops (e.g., avocados, apples) or detect plant diseases. Prompt: “Find a model to detect tomato blight and build a GUI to analyze farm images.” Use case: Farmers monitor crop health to optimize yield.

- Retail: Track inventory or detect shoplifting. Prompt: “Create an app to count items on shelves with confidence 0.5.” Use case: Stores automate stock checks, reducing manual labor.

- Healthcare: Classify medical images (e.g., X-rays for fractures). Prompt: “Find a model for pneumonia detection and run it on chest X-rays.” Use case: Clinics speed up diagnostics.

- Manufacturing: Identify defects in products. Prompt: “Build an app to detect scratches on car parts with IoU 0.3.” Use case: Factories improve quality control.

- Security: Detect objects in surveillance footage. Prompt: “Create a real-time app to detect unauthorized vehicles.” Use case: Enhance safety in restricted areas.

Each use case leverages Universe’s pre-trained models and LLMs’ ability to customize and run apps with minimal effort, making CV practical for diverse applications.

How to Deploy with Your Coding Agent

“Deploying” a vision app means using Roboflow’s hosted API to run inference, with your LLM adjusting settings via prompts and generating deployable apps for platforms like Vercel. Tools like v0 and Replit create and deploy full-stack apps directly from your prompt without requiring you to write code, producing live, scalable apps with polished interfaces. This is the fastest, most beginner-friendly way to make your app accessible online, requiring no server setup.

Step 1: Deploy via Hosted API

Roboflow’s hosted API (https://detect.roboflow.com) handles inference for images, videos, or streams, scaling automatically to support high traffic. Prompt your LLM in v0 or Replit to create a deployable app using the API. Unlike Claude, which can’t execute code, v0 and Replit visualize and deploy apps seamlessly, making them ideal for this step.

Example Prompt for v0 or Replit: “Deploy an avocado counting app using Roboflow’s hosted API with model ID ‘avocado-detection-ffp7a/2’ and confidence 0.2 using my api_key.”

The tool builds and deploys the app directly (no code needed from you), producing a full-stack web app (e.g., a Next.js app from v0, or a Python web app such as Flask from Replit) ready to host. The app lets users upload images and view avocado counts with bounding boxes, and the tool provides instructions for hosting it globally.

Step 2: Integrate and Deploy

v0 and Replit generate deployable projects with Roboflow’s API integration. The prompt above yields a project structure (e.g., a Next.js app in v0) with a GUI for uploading images and displaying results. Follow the tool’s instructions to deploy to Vercel (v0 publishes there directly) or use Replit’s built-in deployments, enabling global access in minutes. For example, a grocery store could deploy this app to let employees check avocado stock online.
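Whatever the tool generates, one useful design choice is to call Roboflow from a server-side route so the API key never reaches the browser. The following is a rough Python sketch of such a route. It follows the `handler` class convention of Vercel’s Python runtime (a file under `api/`), which you should confirm against Vercel’s current docs. The `ROBOFLOW_API_KEY` variable name and the request format (the client posts a base64 image) are assumptions. The `summarize` helper also converts the API’s center-based boxes into corner coordinates for drawing.

```python
import json
import os
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler

MODEL_ID = "avocado-detection-ffp7a/2"  # model ID used in this guide
CONFIDENCE = 0.2                         # threshold from the example prompt


def summarize(response: dict, confidence: float = CONFIDENCE) -> dict:
    """Keep confident predictions and convert center-format boxes to corners."""
    boxes = []
    for p in response.get("predictions", []):
        if p["confidence"] < confidence:
            continue
        half_w, half_h = p["width"] / 2, p["height"] / 2
        boxes.append({
            "x1": p["x"] - half_w, "y1": p["y"] - half_h,
            "x2": p["x"] + half_w, "y2": p["y"] + half_h,
            "confidence": p["confidence"],
        })
    return {"count": len(boxes), "boxes": boxes}


class handler(BaseHTTPRequestHandler):
    """POST a base64-encoded image; get back JSON with the count and boxes."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        image_b64 = self.rfile.read(length)  # client sends the base64 image text
        try:
            query = urllib.parse.urlencode({
                "api_key": os.environ["ROBOFLOW_API_KEY"],  # assumed variable name
                "confidence": CONFIDENCE,
            })
            upstream = urllib.request.Request(
                f"https://detect.roboflow.com/{MODEL_ID}?{query}",
                data=image_b64,
                headers={"Content-Type": "application/x-www-form-urlencoded"},
                method="POST",
            )
            with urllib.request.urlopen(upstream, timeout=30) as resp:
                payload, status = summarize(json.load(resp)), 200
        except Exception as exc:  # report config or upstream failures as a 502
            payload, status = {"error": str(exc)}, 502
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

Keeping the key in the host’s environment variables, rather than in front-end code, follows the same rule as Step 1: never expose it publicly.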

Tips:

- Error Handling: Prompt “Add error handling for API failures” to ensure robustness (e.g., retry on network issues).

- Custom UI: Prompt “Style the GUI with a modern design” for a polished look.

- Testing: Test with varied images (e.g., avocados in different lighting) to ensure reliability.
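“Retry on network issues” usually means exponential backoff that retries only transient failures. Here is a generic Python sketch using the standard library’s `urllib` exceptions. It treats network errors, HTTP 429 (rate limited), and 5xx responses as transient, which is a common convention rather than anything Roboflow-specific. Other client errors, such as a bad API key, are raised immediately.

```python
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T], attempts: int = 3,
                 base_delay: float = 1.0) -> T:
    """Retry a flaky call with exponential backoff (base_delay * 1, 2, 4, ...)."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except urllib.error.HTTPError as err:  # must precede URLError (its base class)
            if err.code != 429 and err.code < 500:
                raise  # e.g. 401 from a bad API key won't fix itself
            last_error: Exception = err
        except urllib.error.URLError as err:  # DNS failures, refused connections, etc.
            last_error = err
        if attempt < attempts - 1:
            time.sleep(base_delay * 2 ** attempt)
    raise last_error
```

Wrapping an inference call looks like `with_retries(lambda: infer_fn(path))`, where `infer_fn` is whatever function your app uses to call the API.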

Step 3: Test and Scale

Test by uploading sample images or videos. Prompt “Add logging for detection counts” to track usage. The hosted API scales automatically, and Vercel/Replit’s infrastructure ensures global accessibility. If you hit API quotas, prompt “Optimize API calls for efficiency” or consider a Roboflow Pro plan.
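“Logging detection counts” can be as simple as appending one CSV row per inference. The following is a minimal sketch, where the column layout (UTC timestamp, image name, count, threshold) is an illustrative choice. It writes to any text stream, so the same function works with an open file or an in-memory buffer.

```python
import csv
from datetime import datetime, timezone
from typing import TextIO


def log_detection(stream: TextIO, image_name: str, count: int,
                  confidence: float) -> None:
    """Append one CSV row: UTC timestamp, image name, detection count, threshold."""
    writer = csv.writer(stream)
    writer.writerow([datetime.now(timezone.utc).isoformat(), image_name,
                     count, confidence])
```

Opening the log with `open("detections.csv", "a", newline="")` keeps rows accumulating across runs, giving you a simple usage history to compare against your API quota.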

This code-light approach ensures quick success, letting you focus on app functionality. For advanced needs (e.g., edge deployment on devices like Raspberry Pi), contact Roboflow support for guidance.

Model Licensing for Your Vision Apps

Licensing is critical for public or commercial vision apps to avoid legal risks. Using models with restrictive licenses can force you to open-source your entire app or face legal consequences, especially if deployed publicly.

Licensing Risks

Models like YOLOv8 (AGPL-3.0) can require you to share your source code, including modifications. AGPL-3.0 extends these obligations to users who interact with the software over a network, so serving an app publicly, not just distributing it, can trigger them. This is problematic for proprietary apps. GPL-3.0 imposes similar obligations when you distribute software, making both licenses risky for commercial use unless you’re prepared to comply fully. For example, deploying a YOLOv8-based app on Vercel without a commercial license could lead to legal notices or project shutdowns.

Recommended Licenses

Opt for permissive licenses that allow commercial use and modifications without forced disclosure:

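- Apache 2.0: Flexible for proprietary apps. Used by models like RF-DETR and by the YOLO-NAS code (YOLO-NAS’s pre-trained weights carry a separate, more restrictive license, so check it before commercial use). Ideal for startups building CV products.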

- MIT: Highly lenient, used in Roboflow 2.0 or TrOCR, perfect for open-ended development.

Roboflow’s Solution

Roboflow curates models with clear licensing information and offers commercial licenses for restrictive models (e.g., YOLOv8) through Pro or higher plans, ensuring compliance for public or commercial use. Universe models display licensing details on their pages. For example, a commercial license might allow you to use a YOLOv8 model in a paid app without open-sourcing your code. Visit roboflow.com/licensing for details or contact Roboflow sales for custom licensing needs.

Best Practice: Before deploying, verify the license on Universe or ask your LLM to confirm. If you’re building a commercial app, stick to Apache 2.0 or MIT models, or secure a Roboflow license for restricted ones to avoid surprises.
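If your deployment pipeline is scripted, you can encode this best practice as a coarse pre-deploy gate. The sketch below allows only the licenses this guide recommends, allows the copyleft licenses it flags only when a commercial license has been obtained, and fails closed on anything it doesn’t recognize. The license strings and helper are illustrative. This check doesn’t replace reading the license on the model’s page.

```python
PERMISSIVE = {"apache-2.0", "mit"}      # licenses this guide recommends
COPYLEFT = {"agpl-3.0", "gpl-3.0"}      # licenses this guide flags as risky


def license_ok_for_commercial(license_id: str,
                              has_commercial_license: bool = False) -> bool:
    """Return True if a model's license looks safe for a commercial deployment."""
    normalized = license_id.strip().lower().replace(" ", "-")
    if normalized in PERMISSIVE:
        return True
    if normalized in COPYLEFT:
        return has_commercial_license  # e.g. a license obtained via Roboflow
    return False  # unknown licenses fail closed until a human reviews them
```

Failing closed means a model with an unfamiliar license, such as a non-commercial Creative Commons variant, blocks the deploy until someone checks it.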

With LLMs and Roboflow’s hosted API, you can build compliant, scalable vision apps effortlessly, from avocado counters for farmers to defect detectors for factories. So start building!

Written by Aarnav Shah

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Aug 26, 2025). Build Computer Vision Applications with LLMs and Roboflow. Roboflow Blog: https://blog.roboflow.com/build-vision-applications-with-llms/