Building computer vision projects has never been easier. What once required advanced programming skills and large research teams can now be achieved with accessible tools, pre-trained models, and simplified workflows. Developers, students, and businesses alike can quickly prototype applications that analyze images, track objects, measure dimensions, or detect defects, all with minimal setup.

The rapid growth of Vision AI is transforming industries. Ready-to-use templates and open datasets let anyone explore ideas faster, while modern APIs and cloud frameworks make deployment seamless across edge and enterprise systems. This democratization of vision technology has accelerated innovation, enabling startups and enterprises to integrate intelligent vision into everyday workflows.

But what does this look like in action? Today we'll share some real-world projects that showcase how vision AI helps businesses reduce waste, improve precision, and boost productivity.

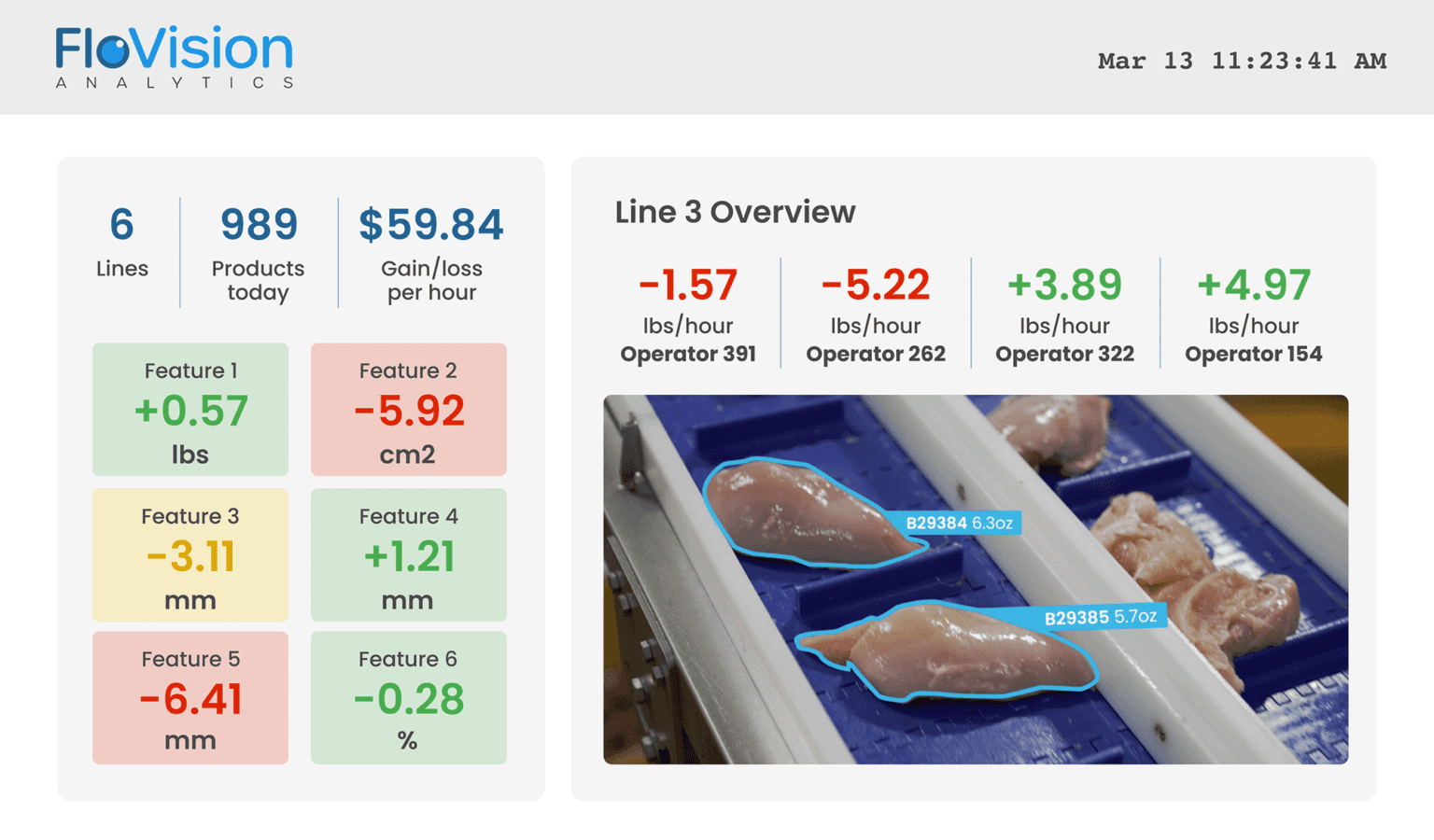

For example, FloVision uses vision AI to optimize food processing by analyzing production lines in real time.

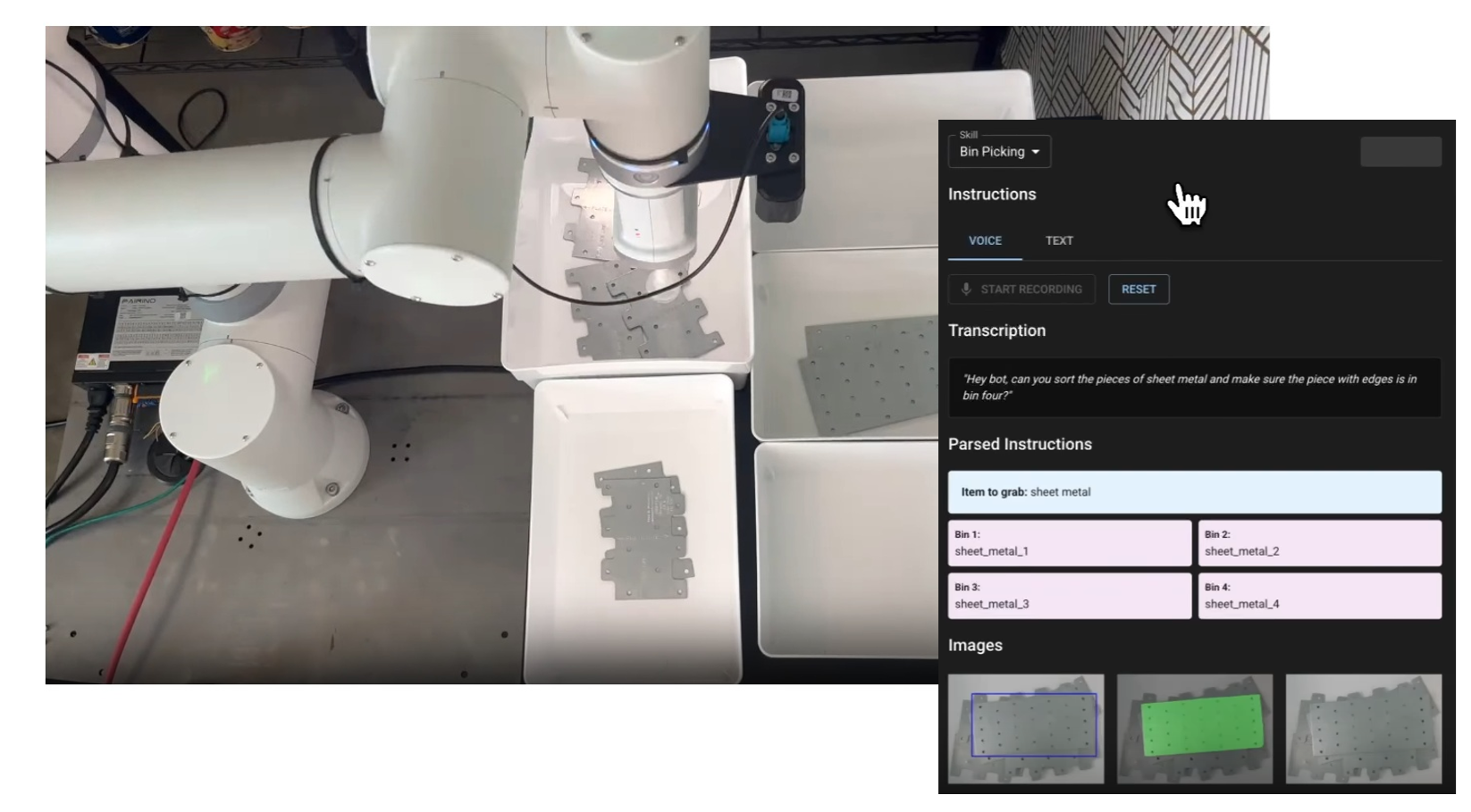

Similarly, Almond uses vision AI to enhance robotic automation in complex manufacturing environments.

Discover Computer Vision Projects

Let's explore projects that show how computer vision can be applied in real-world scenarios, from basic image processing to advanced automation, to help you get started with practical, high-impact ideas.

1. Stop Sign Detection

This project uses a computer vision model to automatically find and recognize stop signs in photos or video feeds. The system draws boxes around detected signs and labels them, allowing machines to “see” road signs just like humans do.

Why is it useful?

- Safer driving assistance: Helps driver-assist or autonomy systems notice stop signs.

- Smart mapping: Catalogs where signs exist for city inventories.

- Traffic studies: Counts/locates signs to analyze intersections and compliance.

How to implement it?

Visit Stop Sign Detection AI Template and click “Use This Free Template” to clone the workflow into your own workspace.

Difficulty Level

Beginner

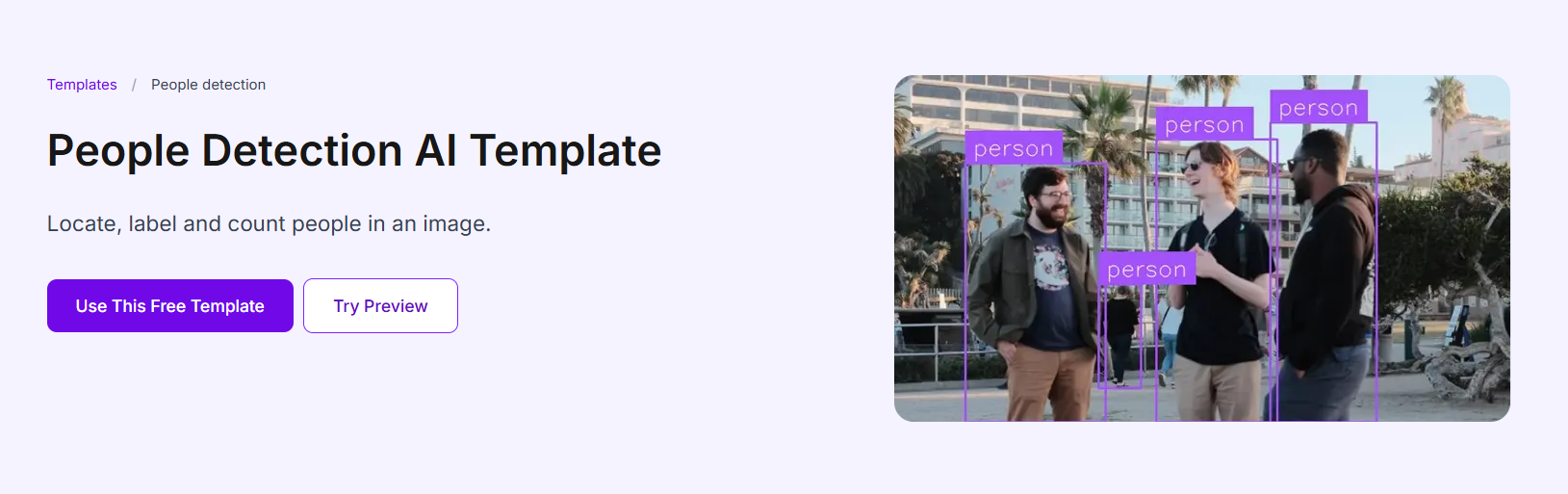

2. People Detection

This project uses a computer vision model to automatically locate, label, and count people in images or video frames. It marks each person with a bounding box and returns the count, enabling machines to “see” and quantify human presence in a scene.
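Once a detector returns predictions, counting people is mostly a filtering step. The sketch below assumes a hypothetical prediction format (a list of dicts with `class` and `confidence` keys, similar in spirit to typical detection JSON) and counts only confident `person` detections; the 0.5 threshold is an arbitrary starting point, not a template setting.

```python
from typing import Dict, List


def count_people(predictions: List[Dict], min_confidence: float = 0.5) -> int:
    """Count detections labeled 'person' at or above a confidence threshold."""
    return sum(
        1
        for p in predictions
        if p["class"] == "person" and p["confidence"] >= min_confidence
    )


# Hypothetical detector output for one frame (box coordinates omitted for brevity).
frame_predictions = [
    {"class": "person", "confidence": 0.91},
    {"class": "person", "confidence": 0.42},
    {"class": "dog", "confidence": 0.88},
    {"class": "person", "confidence": 0.77},
]

print(count_people(frame_predictions))  # 2
```

For occupancy management, the per-frame count can be compared against a room's capacity; lowering the threshold trades missed people for more false positives.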

Why is it useful?

Detecting people accurately is critical in many real-world systems. For example:

- Security or surveillance: Alert when people enter a restricted area.

- Retail or event spaces: Monitor footfall (how many people are in a store or venue) to optimize staffing and layout.

- Occupancy management: Enforce capacity limits in rooms or buildings.

- Smart cities or transport hubs: Assist crowd control and flow measurement.

How to implement it?

Visit People Detection AI Template and click “Use This Free Template” to clone the workflow into your own workspace.

Difficulty Level

Beginner

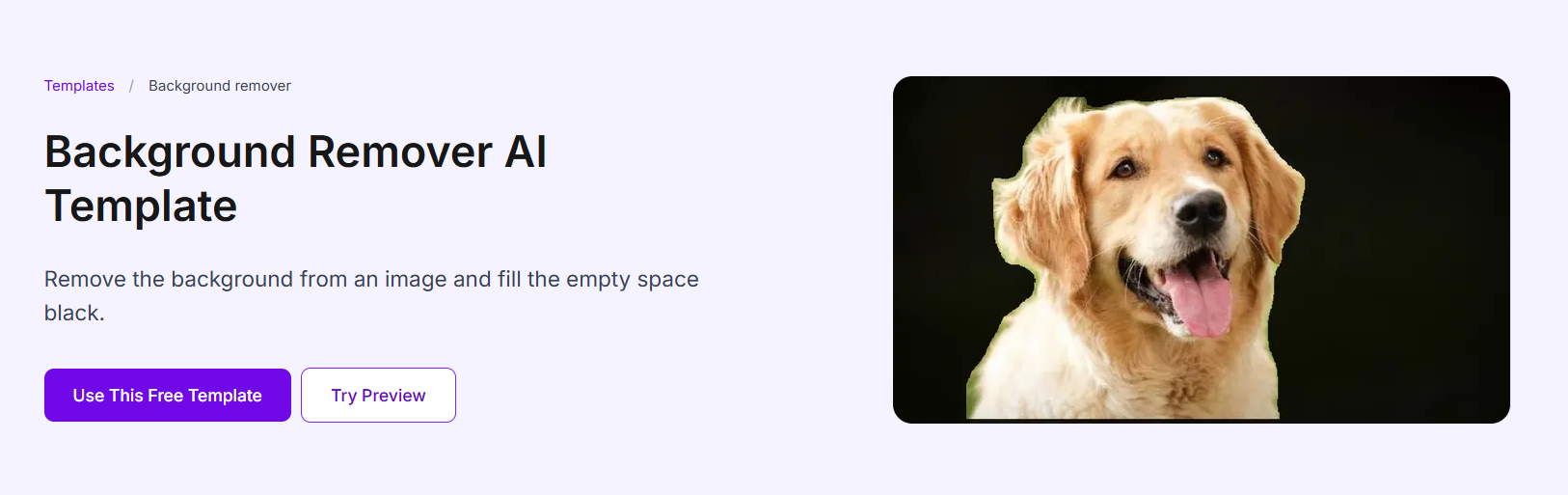

3. Background Remover

This project uses a vision model to automatically isolate the main subject in an image and remove its background, leaving just the foreground object (and making the background transparent or blank).

Why is it useful?

Background removal is widely used in practical applications where clean, distraction-free images are essential. For example:

- E-commerce: Online retailers use it to automatically remove photo backgrounds, creating uniform product images for listings without manual editing.

- Content creation: Designers and marketers use it to isolate subjects for thumbnails, banners, or social media posts.

- Virtual meetings or ID photos: The tool can replace messy or sensitive backgrounds with neutral ones for professional presentation.

- Augmented reality (AR): Developers can extract people or objects for overlay in AR scenes.

How to implement it?

Visit Background Remover AI Template and click “Use This Free Template” to duplicate the workflow in your own workspace.

Difficulty Level

Intermediate

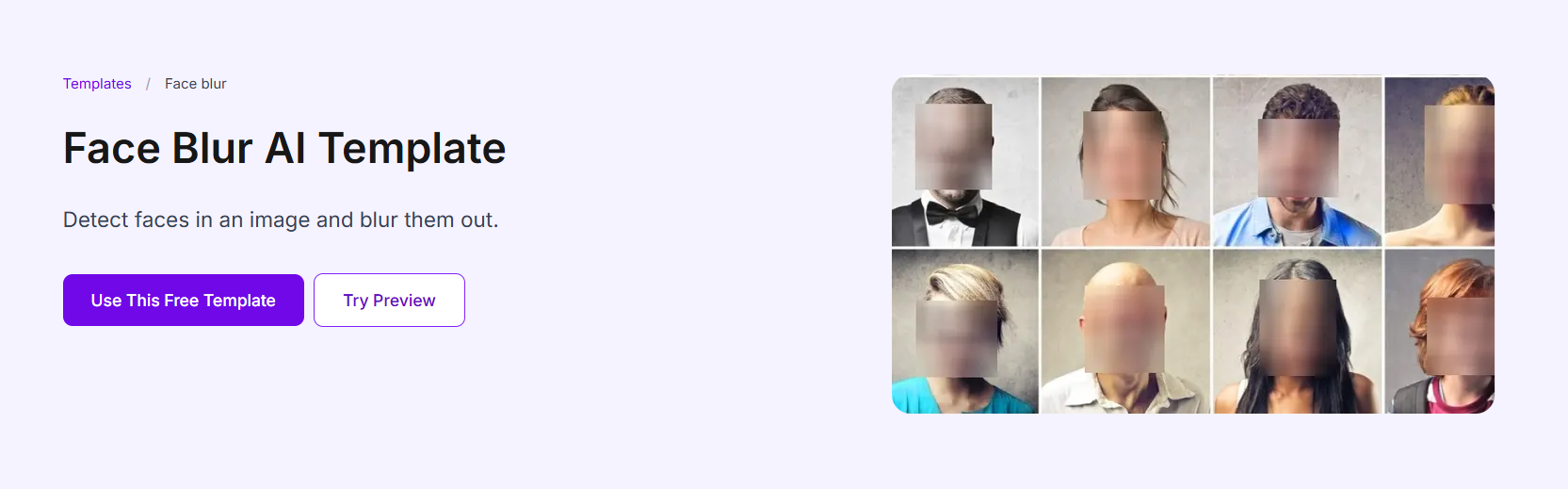

4. Face Blur

This project automatically detects all faces in an image and applies blurring (or pixelation) to them, so that identities are concealed while preserving the rest of the visual content.
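Pixelation works by replacing each small tile inside the face box with its average value. The sketch below assumes the face box `(x0, y0, x1, y1)` already comes from a face detector and lies inside the image; a plain list-of-lists grayscale image stands in for a real image array (in practice you would likely use NumPy or OpenCV).

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image: rows of pixel intensities


def pixelate_region(img: Image, box: Tuple[int, int, int, int], block: int = 8) -> Image:
    """Return a copy of img with box (x0, y0, x1, y1) pixelated.

    x1 and y1 are exclusive. Each block x block tile inside the box is
    replaced by the integer mean of its pixels; tiles at the box edge
    may be smaller than block.
    """
    out = [row[:] for row in img]  # leave the input image untouched
    x0, y0, x1, y1 = box
    for ty in range(y0, y1, block):
        for tx in range(x0, x1, block):
            ys = range(ty, min(ty + block, y1))
            xs = range(tx, min(tx + block, x1))
            tile = [img[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


image = [
    [0, 10, 50, 50],
    [20, 30, 50, 50],
    [5, 5, 5, 5],
    [5, 5, 5, 5],
]
blurred = pixelate_region(image, (0, 0, 2, 2), block=2)
print(blurred[0])  # [15, 15, 50, 50]
```

Larger blocks hide identity more reliably; a Gaussian blur over the same box is a common alternative to pixelation.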

Why is it useful?

Face blurring is vital wherever privacy, legal compliance, or anonymity matters. Some real-world scenarios:

- News media & journalism: When publishing photos of protests, crime scenes, or minors, outlets often blur faces to protect identities.

- Street or public imagery: As in mapping services, automatic face blur prevents individuals’ faces from being exposed in publicly shared visuals.

- Surveillance systems & security: When video feeds are shared, blurring faces lets teams analyze the footage while respecting privacy regulations.

- Social media / user content: Apps can let users blur bystanders’ faces before posting images or videos.

- Legal & compliance: In some jurisdictions, explicit consent is required to publish identifying imagery; face blur helps comply with privacy or data protection laws.

How to implement it?

Visit Face Blur AI Template and click “Use This Free Template” to clone the template into your workspace.

Difficulty Level

Intermediate

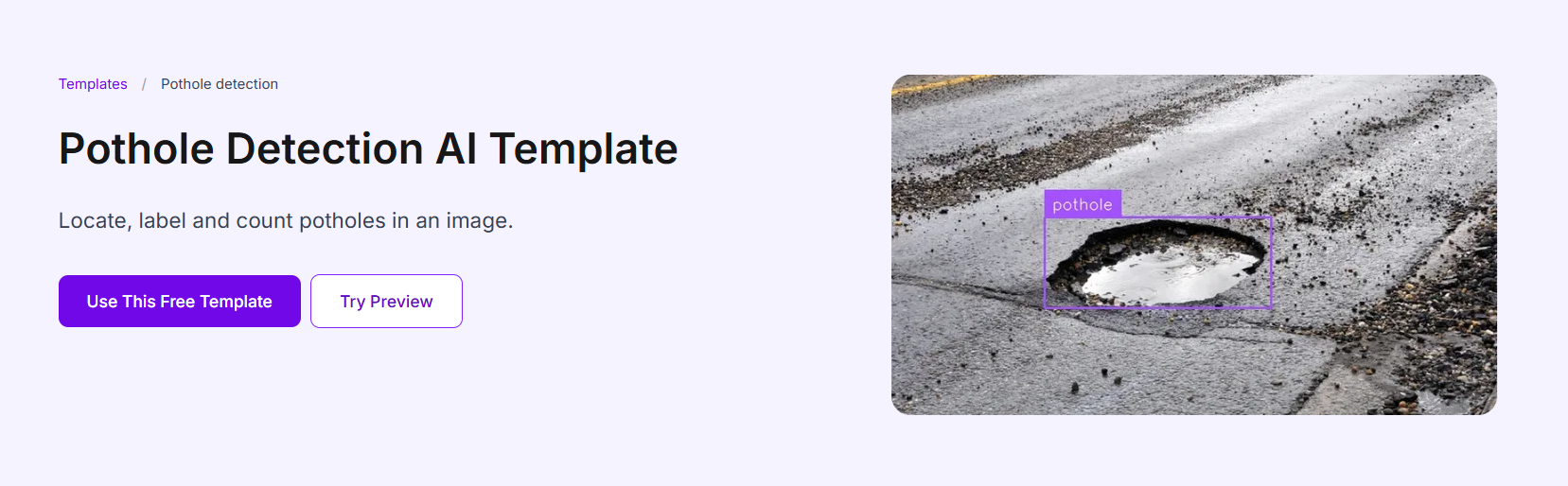

5. Pothole Detection

This project uses a computer vision model to automatically identify potholes on road surfaces. The model draws bounding boxes around sections of pavement with potholes and returns their locations, enabling systems to “see” and flag damaged road areas.

Why is it useful?

The pothole detection project can be used in several ways:

- Municipal & road maintenance: City agencies can use it to scan road networks, detect potholes early, and prioritize repair schedules before damage worsens.

- Autonomous driving & driver-assist systems: Vehicles can detect road defects ahead, slow down, or reroute to avoid pothole damage.

- Crowdsourced road health monitoring: Apps can allow users (or mounted cameras on vehicles) to contribute data, creating maps of road conditions.

How to implement it?

Visit Pothole Detection Template and click “Use This Free Template” to clone the template into your workspace.

Difficulty Level

Intermediate

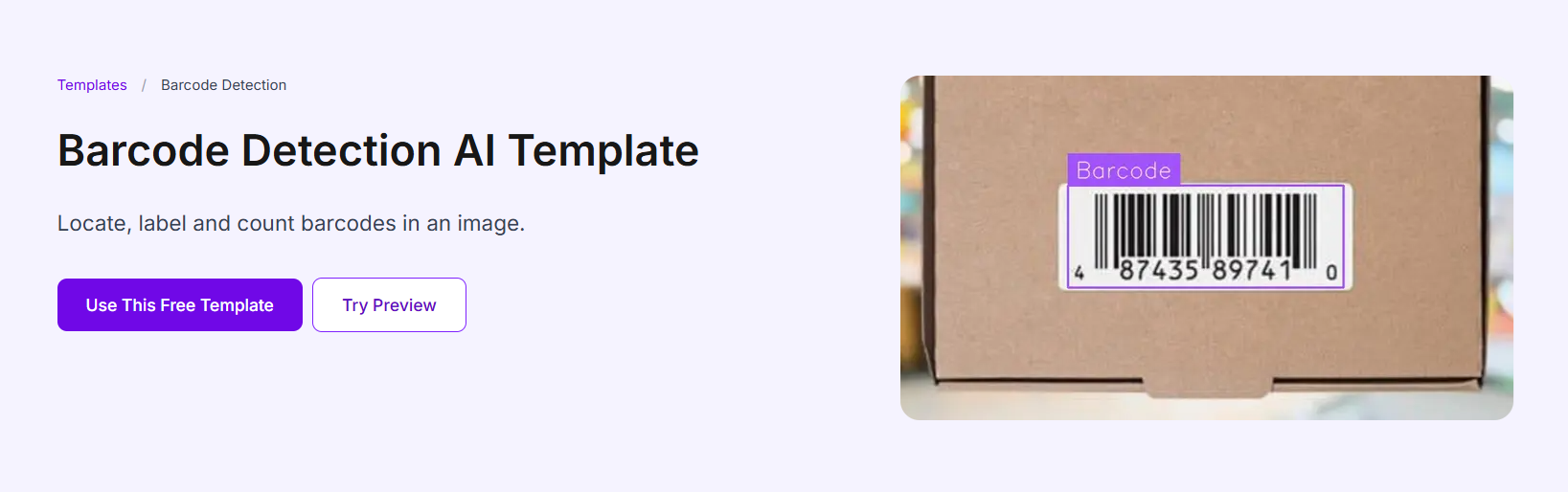

6. Barcode Detection

This project uses a computer vision model to locate, label, and count barcodes in images. It can detect multiple barcodes in a single photo, regardless of orientation or partial occlusion, and output bounding boxes around each detected code.

Why is it useful?

While this template detects the position of barcodes in an image, integrating it with a barcode reader AI takes it a step further by automatically decoding the barcode values. This combination can transform how industries handle tracking and verification tasks. For example:

- Retail and Warehousing: Detecting and decoding barcodes from shelves or pallets enables automated inventory checks, restocking alerts, and price validation.

- Logistics and Delivery: A mounted camera system can scan multiple barcodes on parcels simultaneously, identify package IDs, and cross-check them with shipping databases for faster dispatching.

- Healthcare: Hospitals can detect and read barcodes on medication packaging or patient wristbands to ensure accurate tracking and prevent mix-ups.

- Manufacturing: Barcode reader AI can be paired with detection to trace parts through the production line, ensuring that each component is correctly logged at every stage.

How to implement it?

Visit Barcode Detection AI Template and click “Use This Free Template” to clone the template into your workspace.

Difficulty Level

Beginner

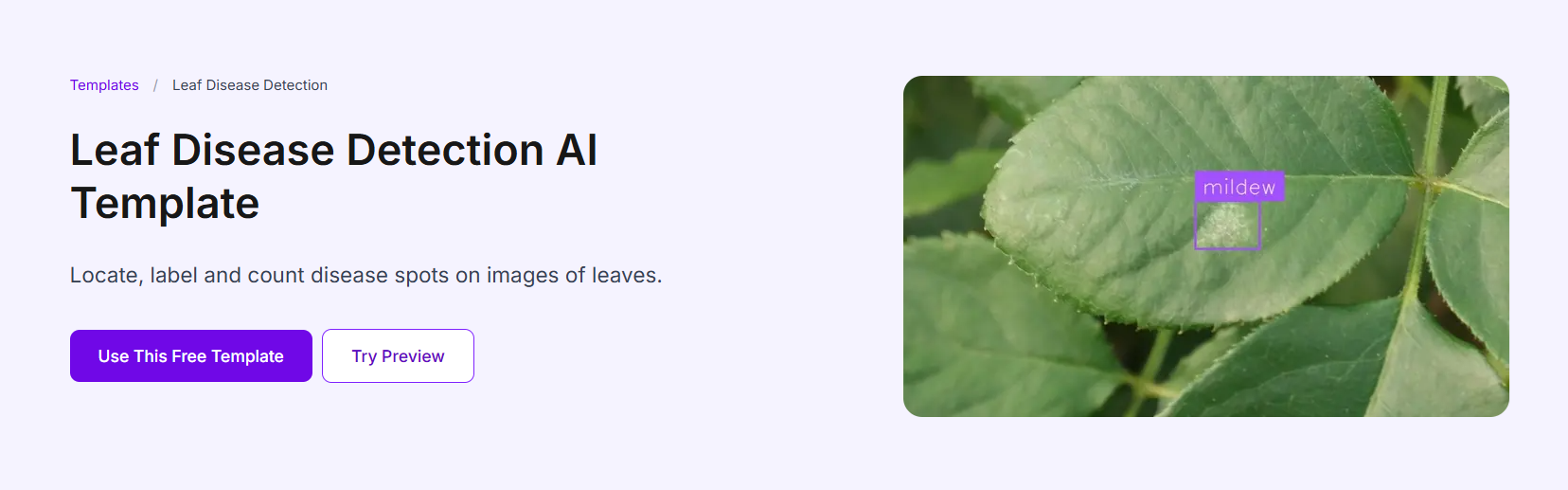

7. Leaf Disease Detection

This project uses a vision model to automatically locate, label, and count spots of disease on plant leaf images. It identifies disease regions (e.g. spots, lesions) against healthy leaf surfaces, making disease symptoms “visible” to machines. (Source: “Locate, label and count disease spots on images of leaves” on the template page.)

Why is it useful?

Agricultural scientists, crop consultants, and farmers often inspect leaves manually to monitor disease progression, a process that is time-consuming, subjective, and limited in scale. This template accelerates that process by automating detection across large datasets or in-field image captures. For example:

- Large farms or greenhouses: Enables early identification of disease outbreaks across many plants before visible symptoms spread.

- Crop trials or research settings: It helps compare disease treatments quantitatively by counting and classifying disease spots in controlled experiments.

- Precision agriculture systems: It can feed alerts when infection thresholds are reached, triggering targeted interventions (spraying, isolation, or further inspection).

- Agritech tools or mobile apps: Enables end users (farmers) to upload leaf images and get instant diagnostics, reducing the need for expert visits.

How to implement it?

Visit Leaf Disease Detection AI Template and click the “Use This Free Template” button to clone the workflow into your workspace.

Difficulty Level

Intermediate

8. Construction Safety Detection

This project uses a computer vision model to automatically detect and label safety-related elements on a construction site, such as people, helmets, and safety vests. The model draws bounding boxes around each object and identifies whether workers are following safety protocols, enabling automated site monitoring and compliance checks.

Why is it useful?

This template is useful in the following scenarios:

- Site compliance monitoring: Automatically verify whether workers are wearing required PPE (hardhats, vests, masks) and flag violations to safety officers in real time.

- Accident prevention: Detect risky behavior like a worker standing too close to heavy machinery without proper gear and trigger alerts before incidents occur.

- Access control & onboarding: Restrict site entry to individuals wearing full safety gear, or issue warnings at entry gates when someone lacks mandatory equipment.

How to implement it?

Visit Construction Safety Detection AI Template and click “Use This Free Template” to clone the workflow into your workspace.

Difficulty Level

Intermediate

9. Traffic Light Detection

This project uses a computer vision model to automatically detect traffic lights in an image or video frame. It identifies where traffic lights are located by drawing bounding boxes around them. Localizing the lights this way lets each one be analyzed further by additional models or logic.

Why is it useful?

Useful applications of this project in smart-city traffic management include:

- Smart traffic systems: Detect and map the positions of traffic lights at intersections for building intelligent traffic control systems.

- Autonomous driving: Identify the presence and location of traffic lights so self-driving or driver-assist systems can focus on relevant areas for signal color recognition.

- Infrastructure inventory: Automatically catalog traffic light locations across large city datasets for maintenance and mapping.

- Enhancement for signal analysis: After detecting each traffic light, you can crop the detected region and feed it into a separate classifier model to determine whether the light is red, yellow, or green, turning this into a complete signal recognition pipeline.
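The crop-then-classify step can be sketched as follows. It assumes boxes arrive as center `x`, `y` plus `w`, `h` (a common detection convention, assumed here), clamps the crop to the image, and uses a deliberately simple brightness heuristic as a stand-in for the separate classifier model: it assumes a vertical light with red on top and green at the bottom. A trained classifier would be far more robust to glare, horizontal lights, and arrows.

```python
from typing import List

Image = List[List[float]]  # grayscale brightness values, rows of pixels


def crop_center_box(img: Image, x: float, y: float, w: float, h: float) -> Image:
    """Crop a box given by its center (x, y), width, and height, clamped to the image."""
    rows, cols = len(img), len(img[0])
    x0, y0 = max(0, int(x - w / 2)), max(0, int(y - h / 2))
    x1, y1 = min(cols, int(x + w / 2)), min(rows, int(y + h / 2))
    return [row[x0:x1] for row in img[y0:y1]]


def guess_signal(crop: Image) -> str:
    """Heuristic placeholder for a color classifier.

    Assumes a vertical light (red top, yellow middle, green bottom) and
    returns the color of the brightest third of the crop.
    """
    n = len(crop)
    thirds = [crop[: n // 3], crop[n // 3 : 2 * n // 3], crop[2 * n // 3 :]]
    means = [
        sum(map(sum, part)) / max(1, sum(len(r) for r in part)) for part in thirds
    ]
    return ["red", "yellow", "green"][means.index(max(means))]


# A 9x3 light whose bottom section is lit, inside a dark 12x8 frame.
frame = [[0.0] * 8 for _ in range(12)]
for row in range(8, 11):
    for col in range(3, 6):
        frame[row][col] = 255.0
crop = crop_center_box(frame, x=4.5, y=6.5, w=3, h=9)
print(guess_signal(crop))  # green
```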

How to implement it?

Visit Traffic Light Detection AI Template and click “Use This Free Template” to clone the workflow and customize it.

Difficulty Level

Beginner

10. Bear Detection

This project uses a vision model to detect, label, and count bears in images or video frames. It draws bounding boxes around each bear it finds, identifying their presence and number.

Why is it useful?

The following are some use cases of this project:

- Wildlife monitoring & conservation: Automatically process camera trap images to track bear populations over time, helping researchers understand numbers, migration patterns, and habitat usage.

- Park safety & alerts: Detect bear presence near trails or visitor zones to issue warnings or trigger preventative measures to protect humans and wildlife.

- Conflict prevention: In regions where bears encroach into human settlements, detect them early to alert authorities and reduce dangerous encounters.

- Ecological research: Integrate counts with location data to map distribution shifts, study behavior, or correlate with environmental variables.

How to implement it?

Visit Bear Detection AI Template and click “Use This Free Template” to clone the workflow into your workspace.

Difficulty Level

Beginner

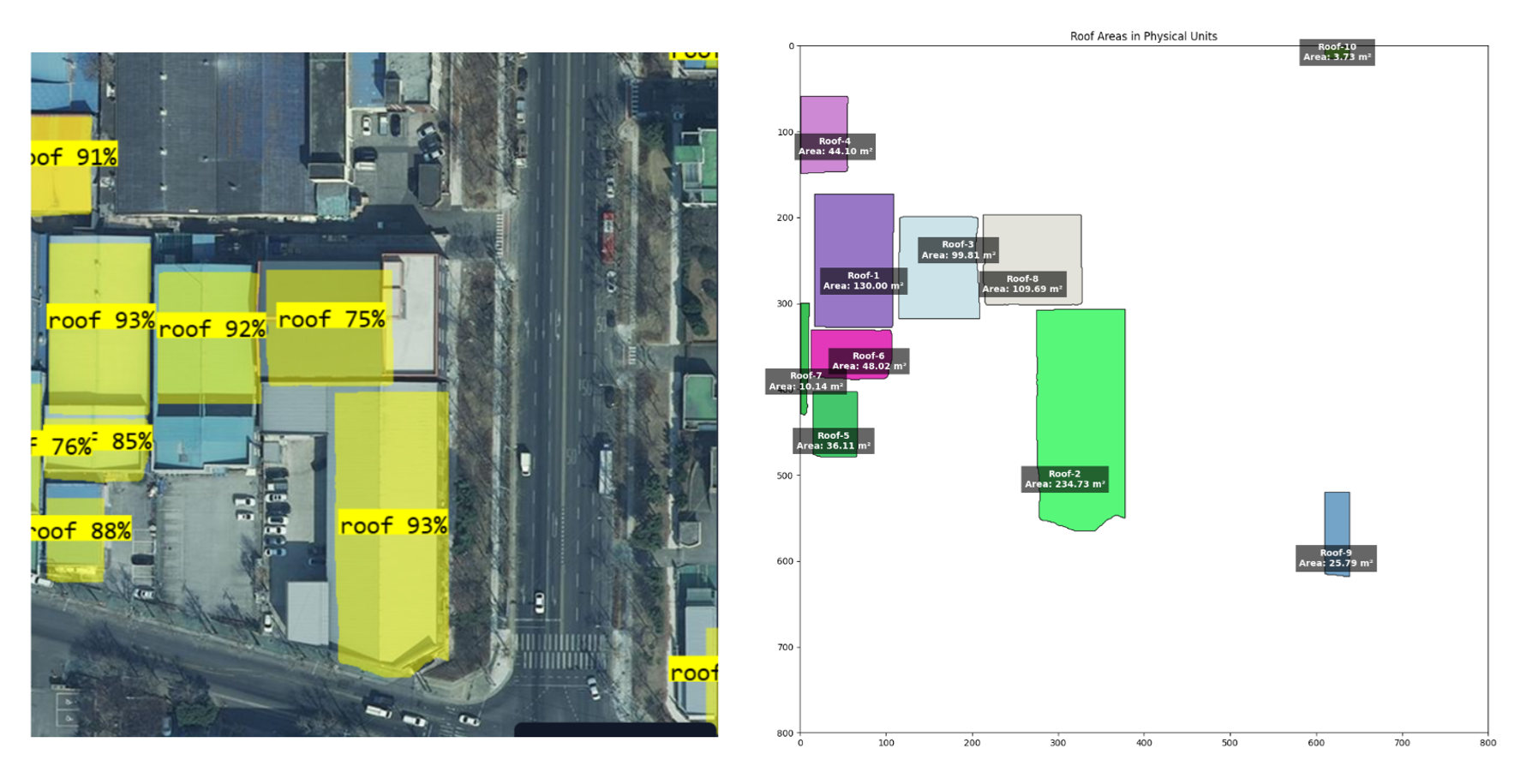

11. Solar Roof Measurement

This project uses computer vision to automatically detect roof areas from aerial or drone images, derive the polygon boundaries of each roof, and then compute the real-world surface area of the rooftop. The goal is to estimate how much space is available for solar panel installation.
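Once a roof polygon is available, its area follows from the shoelace formula, scaled by the imagery's ground sampling distance (GSD, meters per pixel). This sketch assumes top-down (orthorectified) imagery with a known, uniform GSD. The optional pitch correction, which divides the projected area by the cosine of the roof pitch, is an added assumption not covered in the description above, and the sketch ignores obstructions such as vents or chimneys.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area_px(points: List[Point]) -> float:
    """Shoelace formula: area of a simple (non-self-intersecting) polygon in px^2."""
    twice_area = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def roof_area_m2(points: List[Point], gsd_m_per_px: float, pitch_deg: float = 0.0) -> float:
    """Convert a roof polygon to square meters.

    Pixel area times gsd^2 gives the projected (top-down) area; dividing
    by cos(pitch) estimates the sloped surface area.
    """
    projected = polygon_area_px(points) * gsd_m_per_px ** 2
    return projected / math.cos(math.radians(pitch_deg))


# Hypothetical 200 x 100 px rectangular roof at 5 cm per pixel.
roof = [(0, 0), (200, 0), (200, 100), (0, 100)]
print(round(roof_area_m2(roof, 0.05), 2))  # 50.0
```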

Why is it useful?

- Solar feasibility planning: Helps solar installers determine how many panels can fit on a rooftop without physically visiting the site.

- Cost estimation & proposals: Enables more accurate quotes and solar system designs using area measurements rather than guesswork.

- Urban energy modeling: Municipalities or energy planners can map rooftop potentials across a city or region.

- Remote site assessment: Useful in regions where site visits are difficult, allowing remote measurement from aerial imagery.

How to implement it?

Follow the instructions in the blog post Solar Roof Measurement with Computer Vision.

Difficulty Level

Advanced

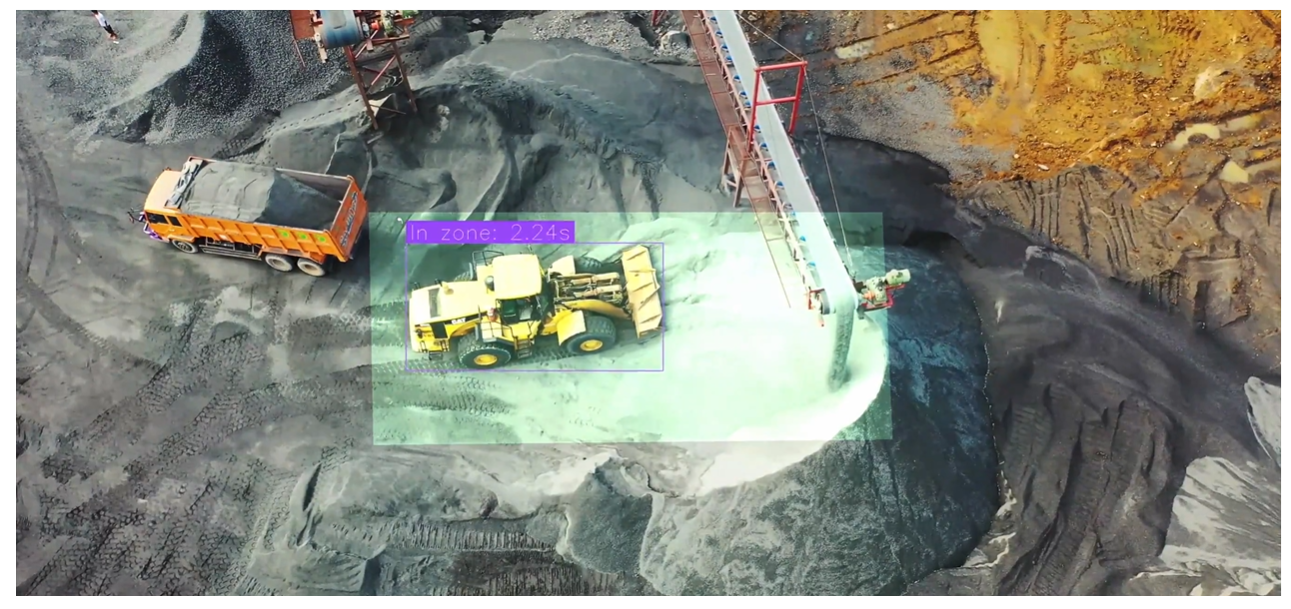

12. Red Zone Monitoring

This project tracks people and vehicles entering or lingering in designated “red zones” that are high-risk or restricted areas on an industrial site. The system draws bounding boxes around detected objects, records how long each stays in the zone, and flags periods of concern.
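The core logic (checking whether a tracked object is inside a zone polygon and accumulating time per object) can be sketched with a ray-casting point-in-polygon test. This assumes an upstream tracker assigns a stable ID to each object and that each object is reduced to one anchor point, for example the bottom-center of its box; the zone coordinates, frame rate, and time limit are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def inside(pt: Point, poly: List[Point]) -> bool:
    """Ray casting: toggle on each polygon edge crossed by a ray from pt to the right."""
    x, y = pt
    result = False
    for i, (xa, ya) in enumerate(poly):
        xb, yb = poly[(i + 1) % len(poly)]
        if (ya > y) != (yb > y):  # edge straddles the ray's height
            x_cross = xa + (y - ya) * (xb - xa) / (yb - ya)
            if x < x_cross:
                result = not result
    return result


def dwell_seconds(frames: List[Dict[int, Point]], zone: List[Point], fps: float) -> Dict[int, float]:
    """Total seconds each tracker ID spends inside the zone (frames in zone / fps)."""
    frames_inside: Dict[int, int] = defaultdict(int)
    for frame in frames:
        for track_id, anchor in frame.items():
            if inside(anchor, zone):
                frames_inside[track_id] += 1
    return {tid: n / fps for tid, n in frames_inside.items()}


def lingering(dwell: Dict[int, float], limit_s: float) -> List[int]:
    """IDs whose total time in the zone exceeds the limit."""
    return sorted(tid for tid, s in dwell.items() if s > limit_s)


red_zone = [(0, 0), (10, 0), (10, 10), (0, 10)]
frames = [
    {1: (5, 5), 2: (2, 8), 3: (15, 5)},
    {1: (5, 6), 2: (12, 8), 3: (16, 5)},
    {1: (6, 6), 3: (17, 5)},
]
dwell = dwell_seconds(frames, red_zone, fps=1.0)
print(dwell)  # {1: 3.0, 2: 1.0}
print(lingering(dwell, limit_s=2.0))  # [1]
```

This sums total time in the zone; logging individual visits for an audit trail would additionally need per-ID entry and exit timestamps.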

Why is it useful?

- Safety enforcement: Automatically detect when someone enters a dangerous area (e.g. near heavy machinery) and alert safety teams if they linger too long.

- Access control: Allow or deny passage based on whether people or vehicles cross into zones without authorization.

- Incident logging & audit trails: Maintain timestamps and records of red-zone entries for compliance, investigations, or accountability.

- Operational monitoring: Understand workflow bottlenecks by seeing which zones attract frequent traffic and for how long.

How to implement it?

Follow the instructions in the blog post How to Monitor Red Zones with Computer Vision.

Difficulty Level

Intermediate

13. Dimension Measurement

This project builds a system that measures the real-world dimensions (length, width, height) of an object from images. It first segments the object, then applies geometry, optionally combined with depth sensors, to compute its size. This enables automated dimension inspection in dynamic environments.
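A common simplification for the geometry step is a millimeters-per-pixel scale taken from a reference object of known size lying in the same plane as the measured object. The sketch below assumes a camera looking straight down, negligible lens distortion, and an axis-aligned object; it measures only length and width from a binary segmentation mask, since height would need the depth data mentioned above. The reference size is a hypothetical value.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = object pixel, 0 = background


def mm_per_pixel(ref_width_px: float, ref_width_mm: float) -> float:
    """Scale factor from a reference object of known physical width."""
    return ref_width_mm / ref_width_px


def mask_extent_px(mask: Mask) -> Tuple[int, int]:
    """Width and height in pixels of the axis-aligned extent of object pixels."""
    xs = [x for row in mask for x, v in enumerate(row) if v]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        raise ValueError("mask contains no object pixels")
    return max(xs) - min(xs) + 1, max(ys) - min(ys) + 1


def object_size_mm(mask: Mask, scale_mm_per_px: float) -> Tuple[float, float]:
    """Object width and height in millimeters."""
    w_px, h_px = mask_extent_px(mask)
    return w_px * scale_mm_per_px, h_px * scale_mm_per_px


# Hypothetical: a 100 mm reference marker spans 20 px, so 1 px = 5 mm.
scale = mm_per_pixel(ref_width_px=20, ref_width_mm=100)
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
width_mm, height_mm = object_size_mm(mask, scale)
print(width_mm, height_mm)  # 15.0 10.0
```

For pass/fail inspection, the resulting dimensions can be compared against a tolerance band; rotated objects would call for a minimum-area rotated rectangle instead of the axis-aligned extent used here.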

Why is it useful?

The following are some use cases of this project:

- Pass/fail inspections: In manufacturing or quality control, you can check if packages fall within acceptable size tolerances.

- Package sorting & logistics: Automate measurement of parcels to determine packing or shipping categories.

- Inventory & fulfillment: Estimate volume or footprint of goods automatically to manage storage or pallet allocation.

- Scanning prototypes: Capture dimensions of new packages without manual measurement tools.

How to implement it?

Follow the instructions in the blog post Dimension Measurement with Computer Vision.

Difficulty Level

Advanced

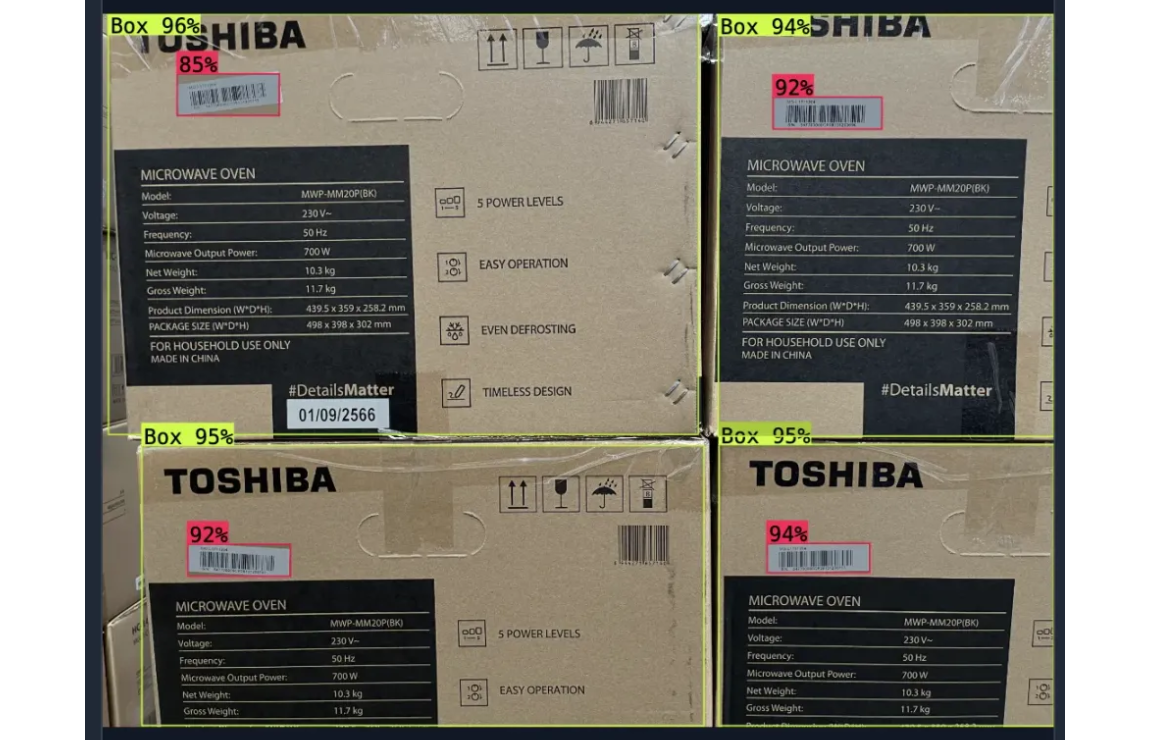

14. Label Placement Verification (on Packages)

This system uses computer vision to detect both the package’s box and its label, and then programmatically verify whether the label is placed in the correct region (for example, the top-left or bottom-right quadrant of the package). It flags misplacements that could cause scanner errors or misrouting.
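Once both boxes are detected, the verification step reduces to comparing box geometry. This sketch assumes both boxes are axis-aligned `(x_min, y_min, x_max, y_max)` in image coordinates (y grows downward), uses the label's center to decide its quadrant, and treats `top-left` as the required placement; a real rule set might instead specify a margin from the package edge.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max); y grows downward


def label_quadrant(package: Box, label: Box) -> str:
    """Quadrant of the package box that contains the label's center."""
    cx, cy = (label[0] + label[2]) / 2, (label[1] + label[3]) / 2
    mid_x, mid_y = (package[0] + package[2]) / 2, (package[1] + package[3]) / 2
    vertical = "top" if cy < mid_y else "bottom"
    horizontal = "left" if cx < mid_x else "right"
    return f"{vertical}-{horizontal}"


def label_ok(package: Box, label: Box, required: str = "top-left") -> bool:
    """True if the label lies fully on the package and in the required quadrant."""
    contained = (
        label[0] >= package[0] and label[1] >= package[1]
        and label[2] <= package[2] and label[3] <= package[3]
    )
    return contained and label_quadrant(package, label) == required


package = (100, 100, 500, 400)
print(label_ok(package, (120, 120, 220, 180)))  # True
print(label_ok(package, (350, 300, 450, 380)))  # False (bottom-right)
```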

Why is it useful?

This project can be used for the following use cases:

- Shipping & warehouse efficiency: Ensures that labels are consistently placed so barcode readers and automated sorting systems can reliably read them. If a label is misplaced, the package might get misrouted or unscanned.

- Label standardization in packaging lines: Enforce corporate or regulatory placement rules (e.g. always top-left) across production lines or facilities.

How to implement it?

Follow the instructions in the blog post Label Verification AI: How to Verify Label Placement on Packages.

Difficulty Level

Beginner

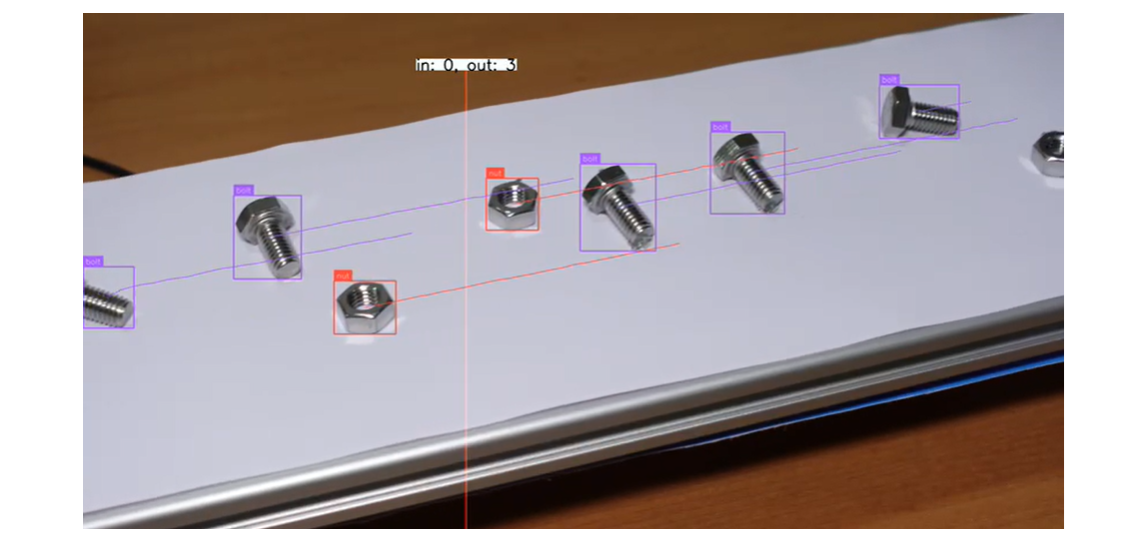

15. Count Objects on a Conveyor Belt

This project builds a vision system that detects objects (e.g., bolts, nuts, or small components) moving along a conveyor belt, and counts how many cross a defined region or pass a line.
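Counting objects that pass a line usually relies on a tracker so each part is counted only once. This sketch assumes a tracker supplies stable IDs with center points for every frame and that the belt moves left to right; an object is counted the first time its center moves from left of `line_x` to on or past it, so jitter back and forth across the line is not double-counted.

```python
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


def count_line_crossings(frames: List[Dict[int, Point]], line_x: float) -> int:
    """Count tracker IDs whose center crosses x = line_x moving left to right.

    Each ID is counted at most once.
    """
    last_x: Dict[int, float] = {}
    counted: Set[int] = set()
    for frame in frames:
        for track_id, (x, _y) in frame.items():
            prev = last_x.get(track_id)
            if prev is not None and prev < line_x <= x:
                counted.add(track_id)
            last_x[track_id] = x
    return len(counted)


frames = [
    {1: (10, 50), 2: (40, 50)},
    {1: (30, 50), 2: (60, 50)},  # ID 2 crosses x = 50
    {1: (55, 50), 3: (5, 50)},   # ID 1 crosses
    {1: (45, 50), 3: (20, 50)},  # ID 1 jitters back
    {1: (58, 50), 3: (35, 50)},  # ID 1 crosses again but is not recounted
]
print(count_line_crossings(frames, line_x=50))  # 2
```

For quality control or packaging verification, the running count can be compared against the expected count per batch.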

Why is it useful?

Use this project for the following use cases:

- Inventory management & throughput tracking: Automatically count parts as they pass through the line to monitor production volume in real time.

- Quality control: Detect missing or extra parts in a sequence by comparing the actual count against expected counts.

- Packaging verification: Ensure the correct number of components are packed before sealing or shipping.

- Process bottleneck detection: Identify slowdowns or jams if counting rate drops unexpectedly, alerting operators to intervene early.

How to implement it?

Follow the instructions in the blog post Count Objects on a Conveyor Belt Using Computer Vision.

Difficulty Level

Beginner

16. Ball Tracking in Sports

This project builds a computer vision system to detect and track a ball in sports like soccer or basketball. It identifies the ball’s position in each frame, follows its path over time, and visualizes its movement.
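Balls are small and fast, so detectors often miss them in some frames because of motion blur or occlusion by players. One simple way to keep the path continuous, assumed here rather than taken from the linked tutorial, is to linearly interpolate positions between two detections; `None` marks frames with no detection.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def fill_gaps(track: List[Optional[Point]]) -> List[Optional[Point]]:
    """Linearly interpolate missing ball positions between two detections.

    Gaps before the first or after the last detection stay None.
    """
    filled = list(track)
    known = [i for i, p in enumerate(track) if p is not None]
    for a, b in zip(known, known[1:]):
        (xa, ya), (xb, yb) = track[a], track[b]
        for i in range(a + 1, b):
            t = (i - a) / (b - a)  # fraction of the way from frame a to frame b
            filled[i] = (xa + t * (xb - xa), ya + t * (yb - ya))
    return filled


track = [None, (0, 0), None, None, (3, 6), None]
smooth = fill_gaps(track)
```

Linear interpolation ignores gravity and bounces, so long gaps are better left empty or handled with a motion model such as a Kalman filter.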

Why is it useful?

The following are some real-world use cases of this project:

- Game analytics & strategy: Coaches or analysts can study ball trajectories, possession durations, and passing patterns to derive tactical insights.

- Broadcast augmentation: Sports broadcasts can overlay ball highlights, trajectories, or predicted paths to enhance viewer experience.

- Player training & coaching tools: Athletes can review practice sessions with precise ball movement tracking to evaluate shots, passes, or movement patterns.

- Referee assistance & automated stats: The system can feed into automated decision support, counting touches, predicting goals, or flagging rule infractions.

How to implement it?

Follow the instructions in the blog post Ball Tracking in Sports with Computer Vision.

Difficulty Level

Intermediate

17. Aerial Fire Detection with Drone Imagery

This project uses drones equipped with camera sensors and computer vision models to monitor landscapes and detect fires (flames, smoke) early and autonomously. The system processes images or video from above, spots fire instances, and raises alerts.

Why is it useful?

This project can serve several purposes in forest monitoring:

- Early wildfire detection: By continually scanning remote or forested areas, the system can catch small fires before they spread.

- Rapid response & situational awareness: Alerts are sent immediately to fire control centers, helping teams plan interventions more efficiently.

- Coverage of inaccessible terrain: Drones can survey rugged or remote zones that are difficult for ground patrols to reach.

- Monitoring burn zones & controlled burns: The system can distinguish active fires from smoke or heat in controlled burn operations, improving safety.

- Disaster mitigation & prevention: Automated detection gives extra lead time, helping reduce the scale and damage of conflagrations.

How to implement it?

Follow the instructions in the blog post Aerial Fire Detection with Drone Imagery and Computer Vision.

Difficulty Level

Beginner

18. Detect & Segment Oil Spills

This project uses computer vision to identify, segment, and analyze oil spills in aerial or drone imagery. It performs instance segmentation to mark precise spill boundaries and classifies each region by thickness or color layer type (e.g. “Rainbow,” “Sheen,” “True Color”).

Why is it useful?

This project can be utilized for the following main purposes:

- Environmental monitoring & rapid response: Detect, measure, and map oil spills in remote or large maritime areas to guide cleanup efforts efficiently.

- Cleanup strategy optimization: Knowing the spill’s thickness and spread helps decide whether to use mechanical containment, chemical dispersants, or burning.

- Post-cleanup assessment: Comparing before/after images lets teams verify how effective a cleanup was and identify residual contamination.

- Cost & resource management: Precise measurement helps allocate cleanup crews, equipment, and materials more effectively.

- Regulatory & reporting compliance: Generates quantitative data for environmental agencies, legal cases, or public reporting.

How to implement it?

Follow the instructions in the blog post Detect and Segment Oil Spills Using Computer Vision.

Difficulty Level

Intermediate

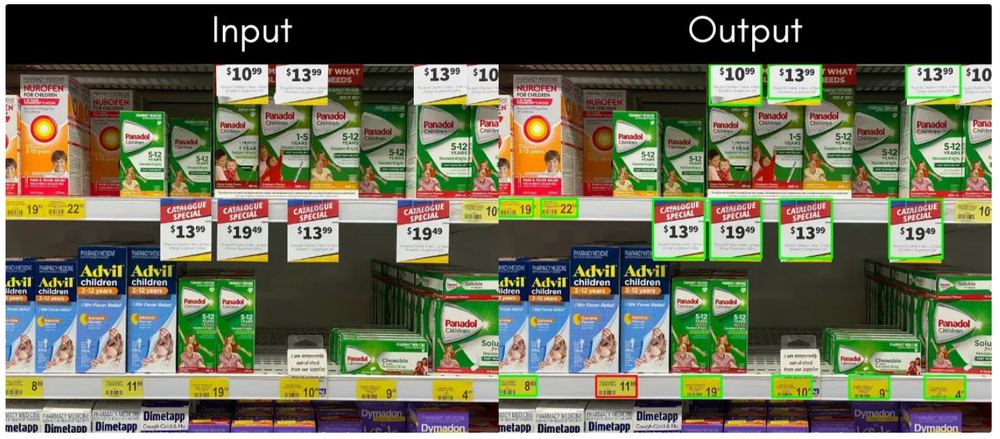

19. AI Shelf Price Verification: Matching Labels to POS Data

This project takes a photo of a store shelf, detects shelf label bounding boxes, reads the product names and prices using OCR, and then automatically checks those against a POS (Point-of-Sale) database. Labels matching the POS price are flagged as correct and the mismatches are highlighted.
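The matching step can be sketched as a lookup against POS prices once OCR has produced (product name, price text) pairs. The data below is hypothetical, names are normalized only by case and whitespace, and prices are compared as `Decimal` to avoid floating-point surprises; real OCR output would likely also need fuzzy name matching.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Dict, List, Optional, Tuple


def normalize_name(name: str) -> str:
    """Lowercase and collapse whitespace so 'Oat  Milk' matches 'oat milk'."""
    return re.sub(r"\s+", " ", name).strip().lower()


def parse_price(text: str) -> Optional[Decimal]:
    """Extract a price such as '$3.49' as Decimal('3.49'); None if unreadable."""
    cleaned = re.sub(r"[^0-9.]", "", text)
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


def verify_labels(ocr_labels: List[Tuple[str, str]], pos_prices: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (label name, status) for every OCR'd shelf label."""
    pos = {normalize_name(k): Decimal(v) for k, v in pos_prices.items()}
    results = []
    for name, price_text in ocr_labels:
        key, price = normalize_name(name), parse_price(price_text)
        if key not in pos:
            status = "unknown product"
        elif price is None:
            status = "unreadable price"
        elif price == pos[key]:
            status = "correct"
        else:
            status = "mismatch"
        results.append((name, status))
    return results


pos_db = {"Oat Milk": "3.49", "Rye Bread": "3.19", "Green Tea": "4.00"}
shelf = [("Oat  Milk", "$3.49"), ("Rye Bread", "$2.99"), ("Green Tea", "??")]
for name, status in verify_labels(shelf, pos_db):
    print(f"{name}: {status}")
```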

Why is it useful?

This project can be used for the following retail operations:

- Retail accuracy audits: Instead of staff manually checking each shelf label against the system, a single image can reveal multiple pricing errors at once.

- Error reduction: Quickly catch mislabeled or mispriced items that might lead to customer confusion or loss.

- Efficiency in store operations: Accelerate shelf audits and price checks across many aisles or stores with minimal staff effort.

- Consistency across branches: Help large retail chains maintain uniform pricing and reduce discrepancies between POS systems and shelf labels.

How to implement it?

Follow the instructions in the blog post AI-Powered Shelf Price Verification: Matching Labels to POS Data.

Difficulty Level

Beginner

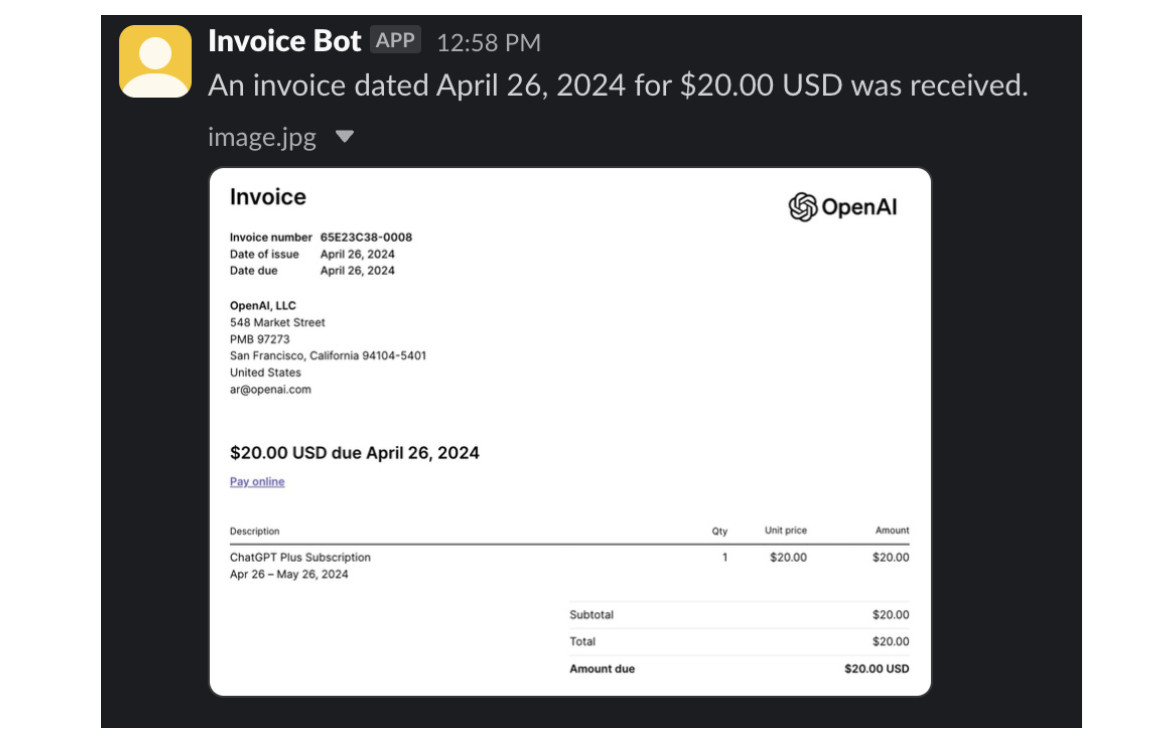

20. Invoice Reading with AI

This project builds a system that takes an image of an invoice (for example, a photograph or PDF screenshot) and uses AI to extract structured information from it, such as the sender name, invoice ID, total amount, issue date, and items. Rather than relying on optical character recognition (OCR) alone, it uses multimodal AI (vision + language) to understand and parse the invoice context.

Why is it useful?

This project offers the following benefits:

- Automated bookkeeping / accounts payable: Automatically parse invoices to reduce manual data entry, speed up processing, and avoid human errors.

- Notification systems: Trigger alerts (e.g. send a Slack message) when a new invoice arrives, summarizing key details.

- Digitization of legacy documents: Convert scanned or paper invoices into machine-readable records.

- Integrations with financial systems: Feed extracted invoice data into ERPs, accounting tools, or payment systems for end-to-end automation.

How to implement it?

Follow the instructions in the blog post How to Read an Invoice with AI.

Difficulty Level

Intermediate

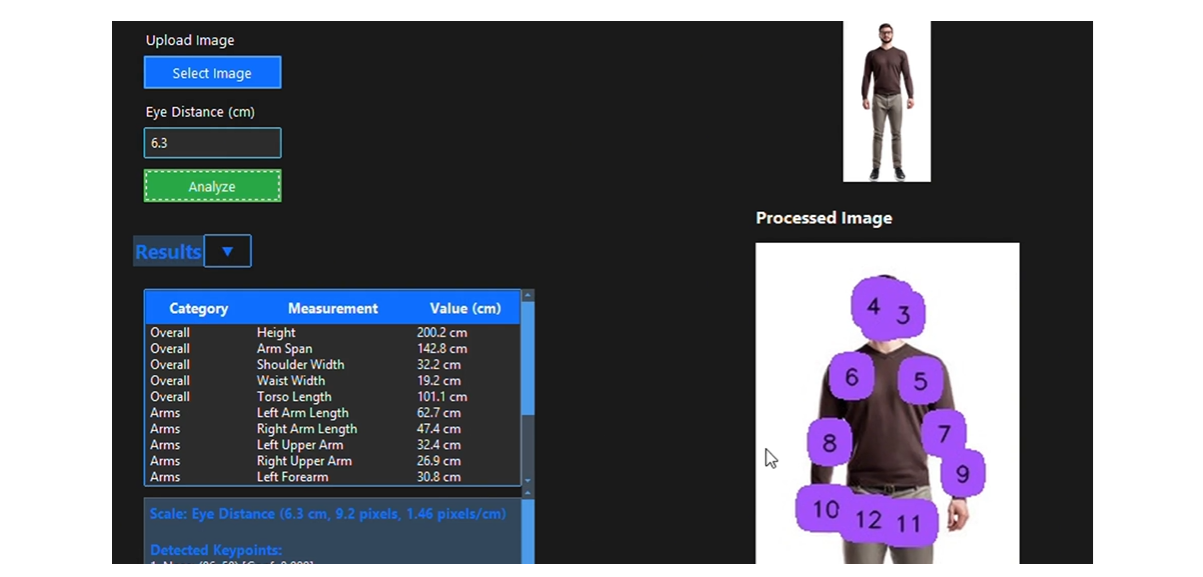

21. Automated Body Measurement

A vision system that detects keypoints (e.g. shoulders, hips, ankles, eyes) on the human body in an image, and then computes physical measurements (height, arm span, shoulder width, limb lengths) based on those keypoints.
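Turning keypoints into physical measurements needs a pixel-to-centimeter scale. This sketch assumes the person's real height is known (entered by the user) and that their height in pixels comes from the person's bounding box; keypoint names follow COCO-style naming (`left_shoulder`, `left_elbow`, and so on) as an assumption, and the coordinates are hypothetical. It also assumes a front-facing photo, since limbs angled toward the camera appear shorter, and distances between joint centers only approximate tape measurements.

```python
import math
from typing import Dict, Tuple

Keypoints = Dict[str, Tuple[float, float]]  # keypoint name -> (x, y) in pixels

# Pairs of keypoints whose distance approximates a body measurement.
SEGMENTS = {
    "shoulder_width": ("left_shoulder", "right_shoulder"),
    "hip_width": ("left_hip", "right_hip"),
    "left_upper_arm": ("left_shoulder", "left_elbow"),
    "left_forearm": ("left_elbow", "left_wrist"),
}


def body_measurements(kp: Keypoints, height_px: float, height_cm: float) -> Dict[str, float]:
    """Estimate segment lengths in cm, skipping segments with missing keypoints."""
    cm_per_px = height_cm / height_px
    return {
        name: round(math.dist(kp[a], kp[b]) * cm_per_px, 1)
        for name, (a, b) in SEGMENTS.items()
        if a in kp and b in kp
    }


keypoints = {
    "left_shoulder": (100, 200),
    "right_shoulder": (180, 200),
    "left_elbow": (90, 300),
}
# Hypothetical: a 170 cm person spans 850 px, so 1 px = 0.2 cm.
print(body_measurements(keypoints, height_px=850, height_cm=170))
# {'shoulder_width': 16.0, 'left_upper_arm': 20.1}
```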

Why is it useful?

The following are some important use cases of this project:

- Fitness & wellness apps: Provide users with body metrics (height, limb lengths) without manual measuring.

- Fashion & tailoring: Enable virtual fitting or sizing tools by estimating users’ body dimensions from a photo.

- Healthcare & rehabilitation: Track body measurements over time for growth, posture, or therapy progress.

- E-commerce / custom gear: Help buyers pick size-specific products (clothing) based on their body measurements.

How to implement it?

Follow the instructions in the blog post Automated Body Measurement with Computer Vision.

Difficulty Level

Intermediate

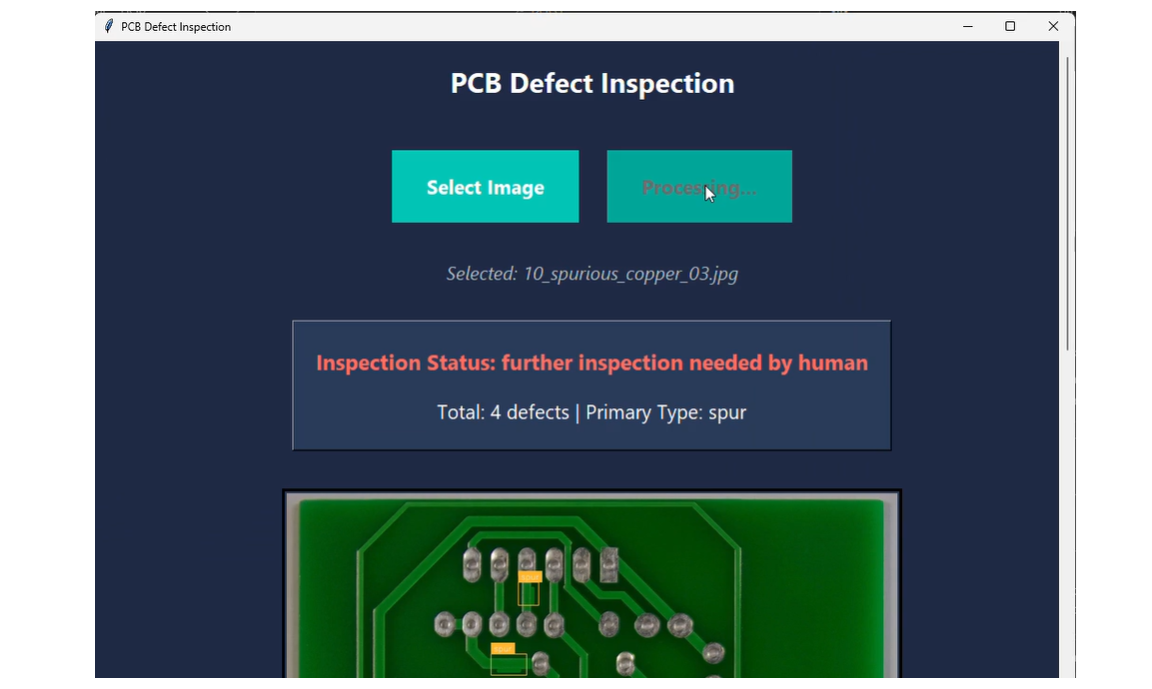

22. PCB Defect Detection

This project builds a real-time inspection system that uses computer vision to detect various types of defects on printed circuit boards (PCBs), such as open circuits, missing holes, short circuits, spurious copper, and other anomalies, and to classify whether each PCB passes or needs human review.

Why is it useful?

This project can be utilized for the following purposes:

- Quality control in electronics manufacturing: Automate defect detection to catch faulty PCBs before they go into devices.

- Throughput & cost savings: By flagging only defective units, the system reduces manual inspection time and improves yield.

- Tiered inspection decisions: Critical defects (e.g., open circuits) immediately trigger a “fail” decision whereas less severe ones (spurious copper) can be passed to human review.

- Traceability & audit logs: Store defect metadata (type, location, confidence) for traceability or for feedback to manufacturing processes.
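The tiered decision described above can be expressed as a small rule table. The sketch assumes hypothetical class names and confidence threshold; only the open-circuit (critical) and spurious-copper (less severe) assignments come from the text above, and the other severities are assumptions to adjust to your own quality standards.

```python
from typing import Dict, List

# Hypothetical severity table. Unlisted classes default to "minor" (human review).
SEVERITY = {
    "open_circuit": "critical",
    "short_circuit": "critical",  # assumption
    "missing_hole": "critical",   # assumption
    "spurious_copper": "minor",
}


def pcb_decision(defects: List[Dict], min_conf: float = 0.5) -> str:
    """Return 'fail', 'review', or 'pass' for one board's detected defects."""
    confident = [d for d in defects if d["confidence"] >= min_conf]
    if any(SEVERITY.get(d["class"], "minor") == "critical" for d in confident):
        return "fail"
    if confident:
        return "review"
    return "pass"


print(pcb_decision([{"class": "spurious_copper", "confidence": 0.81}]))  # review
print(pcb_decision([{"class": "open_circuit", "confidence": 0.93},
                    {"class": "spurious_copper", "confidence": 0.70}]))  # fail
print(pcb_decision([]))  # pass
```

Here low-confidence detections are simply ignored; a more conservative line might route them to human review instead.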

How to implement it?

Follow the instructions in the blog post Real-Time PCB Defect Detection with Computer Vision.

Difficulty Level

Intermediate

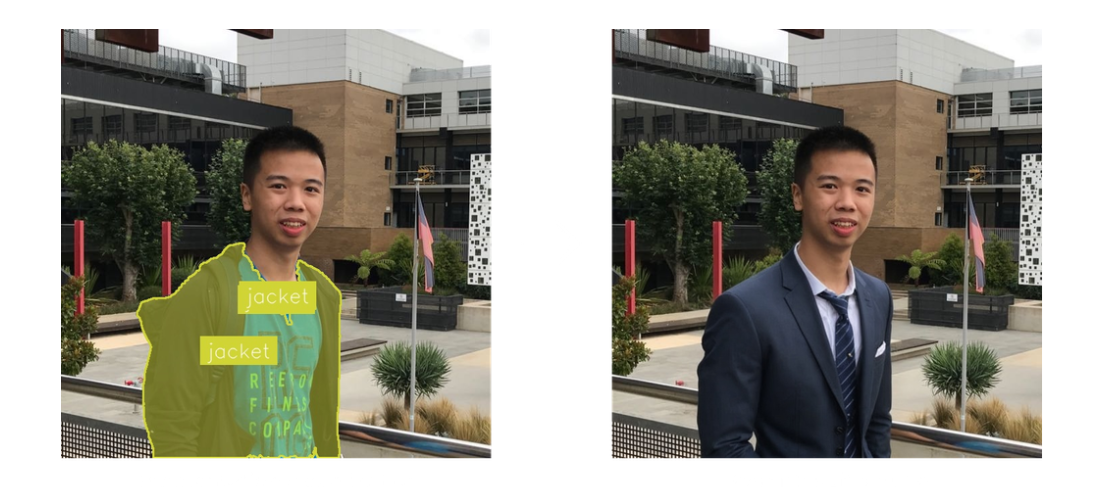

23. Transforming Outfits

This project builds a workflow that takes an input image of a person, detects clothing items (jackets, pants, dresses, etc.), segments their shapes precisely, and then uses generative inpainting to change or replace the outfit based on a user prompt (e.g. “80s fashion”, “summer dress” etc.).

Why is it useful?

This project can be used for the following use cases:

- Fashion try-ons & virtual styling: Users can preview new outfits on their own photo before buying.

- Content creation & marketing: Generate fashion visuals, promotional images, or style variations without needing photoshoots.

- Design prototyping: Designers can visualize how clothing variations or styles appear on models quickly.

- Personalization & social apps: Let users customize their avatars or photos with style filters or outfit transformations.

How to implement it?

Follow the instructions in the blog post Transforming Outfits with Roboflow Workflows and Generative AI.

Difficulty Level

Intermediate

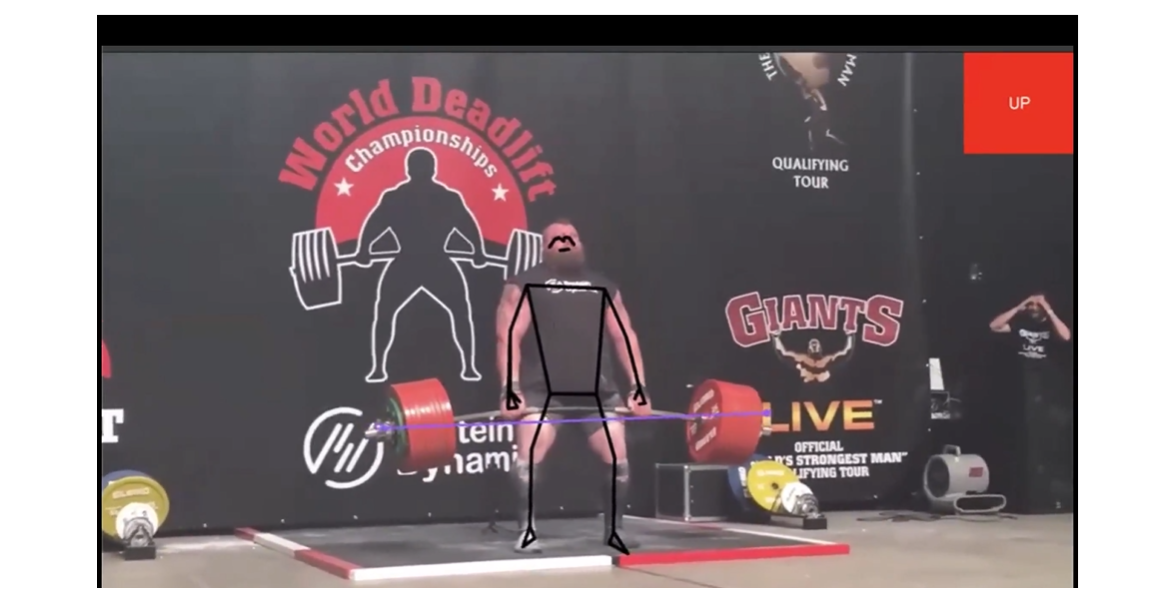

24. Workout Pose Correction Tool

This project builds an application that uses pose estimation (keypoint models) to analyze a person’s body posture during exercise. It compares detected joint positions or angles to ideal form to give feedback on whether the pose is correct.
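Comparing a pose to ideal form largely comes down to measuring the angle at a joint from three keypoints. This sketch computes the knee angle (hip, knee, ankle) with the dot-product formula; the 70 to 100 degree target range for the bottom of a squat is a hypothetical placeholder, not coaching advice or a value from the linked tutorial. It would raise `ZeroDivisionError` if two keypoints coincide.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees with the vertex at b, in the range [0, 180]."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def squat_feedback(hip: Point, knee: Point, ankle: Point,
                   target: Tuple[float, float] = (70.0, 100.0)) -> str:
    """Compare the knee angle at the bottom of a squat to a target range."""
    angle = joint_angle(hip, knee, ankle)
    low, high = target
    if angle > high:
        return f"go lower (knee angle {angle:.0f} deg)"
    if angle < low:
        return f"too deep (knee angle {angle:.0f} deg)"
    return f"good depth (knee angle {angle:.0f} deg)"


print(squat_feedback(hip=(0, 0), knee=(0, 1), ankle=(0, 2)))  # go lower (knee angle 180 deg)
print(squat_feedback(hip=(1, 1), knee=(0, 1), ankle=(0, 2)))  # good depth (knee angle 90 deg)
```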

Why is it useful?

The following are important use cases of this project:

- Fitness coaching & remote instruction: Gives users automatic feedback on form, reducing the need for an in-person coach.

- Training apps & platforms: Integrate it to offer pose correction in workout videos or AR/VR fitness systems.

How to implement it?

Follow the instructions in the blog post How to Create a Workout Pose Correction Tool.

Difficulty Level

Beginner

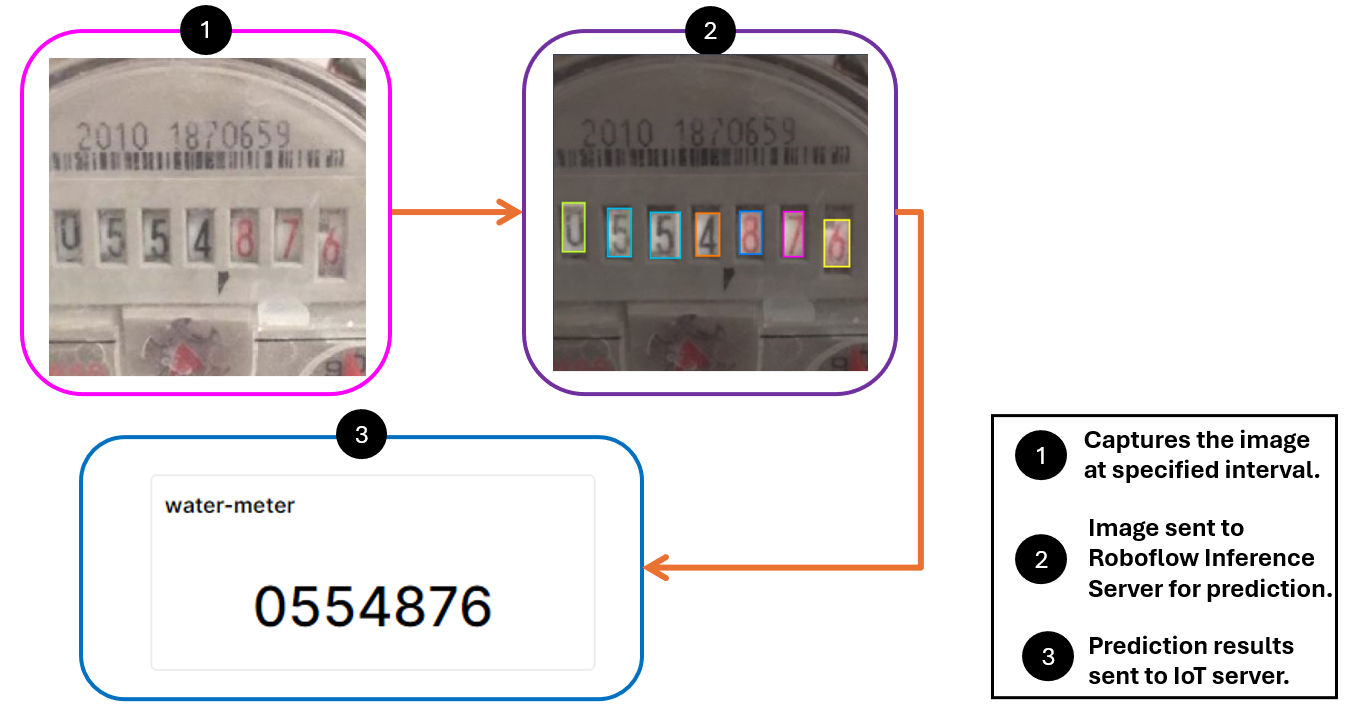

25. Automate Water Meter Reading

This project builds a computer vision system that reads the digits displayed on analog water meters from images, then transmits the readings remotely (e.g. via IoT) for monitoring or billing purposes.
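If the digit reader is an object detector that returns one box per digit (an assumption here; the linked tutorial may use a different model), the reading can be assembled by sorting digits left to right. The sketch also applies two hypothetical sanity checks relevant to leak detection and billing: a cumulative meter should never go backward, and a jump larger than a chosen limit is flagged. Requiring an exact digit count keeps a missed digit from silently shortening the number.

```python
from typing import Dict, List, Optional


def read_meter(detections: List[Dict], expected_digits: int, min_conf: float = 0.5) -> Optional[int]:
    """Assemble a reading from per-digit detections sorted left to right.

    Returns None unless exactly expected_digits confident digits are found.
    """
    digits = sorted(
        (d for d in detections if d["confidence"] >= min_conf), key=lambda d: d["x"]
    )
    if len(digits) != expected_digits:
        return None
    return int("".join(d["class"] for d in digits))


def check_reading(new: int, previous: int, max_increase: int) -> str:
    """Flag impossible or suspicious changes between consecutive readings."""
    if new < previous:
        return "suspect: reading went backward"
    if new - previous > max_increase:
        return "alert: unusually high usage (possible leak)"
    return "ok"


dets = [
    {"class": "4", "x": 30, "confidence": 0.90},
    {"class": "0", "x": 10, "confidence": 0.95},
    {"class": "7", "x": 20, "confidence": 0.88},
    {"class": "1", "x": 40, "confidence": 0.92},
]
reading = read_meter(dets, expected_digits=4)
print(reading)  # 741
print(check_reading(reading, previous=700, max_increase=25))  # alert: unusually high usage (possible leak)
```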

Why is it useful?

Important applications of this project include:

- Automated meter reading: Eliminates the need for manual inspections, saving time and labor costs.

- Improved accuracy: Reduces human errors in recording readings, especially in low-light or hard-to-reach areas.

- Real-time monitoring: Enables continuous tracking of water usage through IoT-connected cameras or sensors.

- Leak detection & alerts: Detect abnormal spikes or drops in readings to identify leaks early.

- Billing automation: Streamlines billing by sending verified readings directly to the utility’s database.

- Scalability: Ideal for smart cities or large housing complexes where thousands of meters need regular monitoring.

How to implement it?

Follow the instructions in the blog post Automating Water Meter Reading using Computer Vision.

Difficulty Level

Advanced

Computer Vision Projects Conclusion

The projects in this blog show how vision AI is becoming easier to implement and how widely it can be applied. Tools like Roboflow empower anyone to experiment, customize, and build powerful computer vision solutions quickly. Get started free today.

Not sure which project to begin with? Explore our Proven Framework ebook to follow simple steps to identify the best use case for your business.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Oct 6, 2025). Computer Vision Projects. Roboflow Blog: https://blog.roboflow.com/computer-vision-projects/