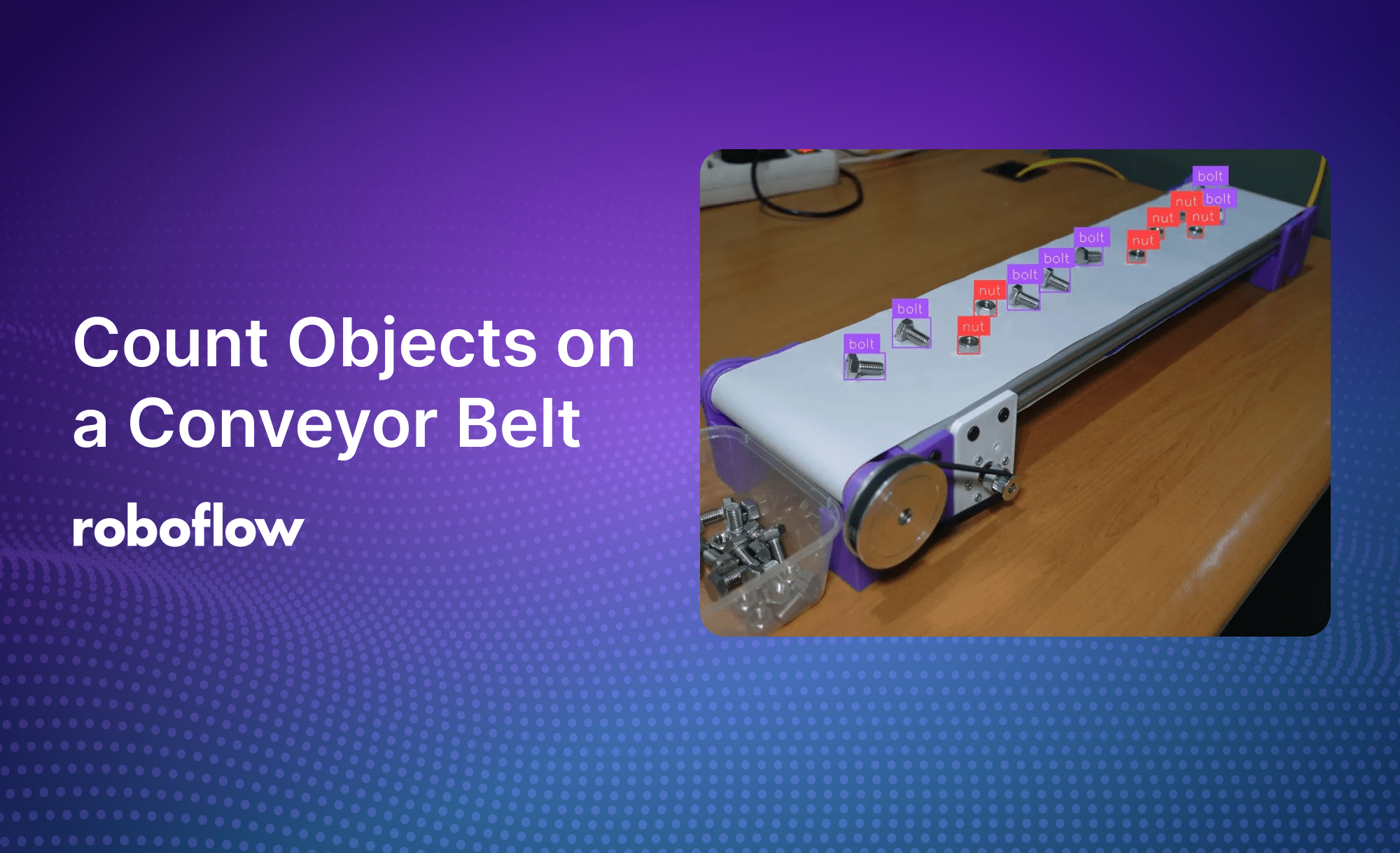

In many manufacturing environments, conveyor belts transport objects, especially small components such as bolts, nuts, and other fasteners, through various stages of production. Reliably counting these objects in real time improves inventory management, quality assurance, and overall efficiency.

Introduction

In this guide, we’ll walk through an end-to-end approach for detecting and counting objects on a conveyor belt using computer vision. The scenario we’ll focus on is counting bolts and nuts moving along a conveyor line, but the approach is adaptable to other objects and environments. This project involves:

- Collecting and annotating a dataset of bolts and nuts.

- Training a custom object detection model.

- Building a workflow application to count objects crossing a defined area.

- Deploying the solution locally to process real-time video streams or video files.

By following these steps, you’ll have a system that can scale into a robust, production-grade solution.

Annotating data for model training

Accurate object detection starts with well-annotated data. Your first step involves collecting images or video frames that represent real-world conditions.

Data collection:

- Record short videos: Capture 1–2 minute clips of the bolts and nuts (or the objects of your choice) from different angles and under different lighting conditions.

- Extract frames: Using Roboflow, automatically convert your video into frames. About 1–2 image frames per second is sufficient, balancing thorough coverage with manageable dataset size.
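
Roboflow performs the frame extraction for you, but the sampling idea is easy to see in isolation. The following is a minimal sketch, assuming a clip with a known frame count and frame rate; the function name `sampled_frame_indices` and its parameters are hypothetical and are not part of any Roboflow API. It only computes which frame indices would be kept for a target sampling rate.

```python
def sampled_frame_indices(total_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Return the indices of frames to keep so that roughly `target_fps`
    frames are retained per second of a video recorded at `source_fps`."""
    if total_frames < 0 or source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame count must be non-negative and rates must be positive")

    # Never sample faster than the source actually provides frames.
    step = max(source_fps / target_fps, 1.0)

    indices = []
    position = 0.0
    while int(position) < total_frames:
        indices.append(int(position))
        position += step
    return indices


# Example: a 2-second clip recorded at 30 fps, sampled at 2 frames per second.
print(sampled_frame_indices(60, 30.0, 2.0))  # [0, 15, 30, 45]
```

At 1 frame per second, a 2-minute clip recorded at 30 fps yields 120 images, which is a manageable amount to review and annotate.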

Automated data annotation:

- Automated annotation with auto labeling: Roboflow’s built-in auto-labeling feature uses Grounding DINO, a phrase grounding model, to suggest bounding boxes for objects based on simple text prompts. For example, use prompts like “bolt” and “hex nut” (“nut” on its own is ambiguous because it can also refer to other kinds of nut).

- Review, adjust, and approve: Review, accept, or adjust these predicted annotations to ensure accuracy.

- Dataset splitting: Split your annotated dataset into training (70%), validation (20%), and test (10%) sets.

- Pre-processing: Apply data augmentations—such as horizontal flips, brightness adjustments, or random cropping—to improve model robustness against real-world variability.
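
Roboflow handles the split in its interface, so no code is required for this step. For readers who want to see what a 70/20/10 split amounts to, here is a minimal sketch in plain Python. The function name `split_dataset`, its parameters, and the example file names are hypothetical, chosen only for illustration.

```python
import random


def split_dataset(items, train=0.7, valid=0.2, seed=0):
    """Shuffle `items` reproducibly and split them into train/valid/test lists.

    The test set receives whatever remains after the train and valid shares.
    """
    if train < 0 or valid < 0 or train + valid > 1:
        raise ValueError("train and valid must be non-negative and sum to at most 1")

    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable

    n_train = round(len(shuffled) * train)
    # Clamp so that rounding can never push the split past the end of the list.
    n_valid = min(round(len(shuffled) * valid), len(shuffled) - n_train)

    train_set = shuffled[:n_train]
    valid_set = shuffled[n_train:n_train + n_valid]
    test_set = shuffled[n_train + n_valid:]
    return train_set, valid_set, test_set


# Example with 100 hypothetical image file names.
images = [f"frame_{i:03d}.jpg" for i in range(100)]
train_set, valid_set, test_set = split_dataset(images)
print(len(train_set), len(valid_set), len(test_set))  # 70 20 10
```

Shuffling before splitting matters for frames extracted from video: neighbouring frames look alike, so an unshuffled split would place near-identical lighting and angles in a single set.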

Model training process

Once your dataset is annotated and curated, it’s time to train your model. Roboflow streamlines this process, removing much of the complexity that usually comes with setting up training pipelines.

Training process:

- Select a model: Choose the Roboflow 3.0 model with an MS COCO checkpoint as a starting point. This pre-trained checkpoint carries features learned from the MS COCO dataset of everyday objects (80 object categories), giving your model a head start.

- Initiate training: Kick off the training right from your browser. Roboflow handles cloud-based training infrastructure, allowing you to focus on the model’s performance.

- Monitor metrics: Within about 20 minutes, you’ll see performance metrics like mean average precision (mAP), loss curves, and a confusion matrix. Evaluate whether the model can reliably distinguish bolts from nuts, and use these insights to iterate if needed.

- Quick validation: After training, use Roboflow’s live inference tool—scan the QR code using your smartphone or upload an image—to test your model’s detection capabilities.
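
Roboflow computes the training metrics for you. As background, mAP is built on intersection over union (IoU), the overlap ratio used to decide whether a predicted box matches a labeled one. The sketch below is only an illustration of that ratio, assuming boxes given as `(x_min, y_min, x_max, y_max)` tuples; the function name `iou` is hypothetical and this is not Roboflow's evaluation code.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (if any).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped to zero when the boxes do not overlap.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# A prediction shifted by half a box width against its label.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

A prediction is typically counted as correct only when its IoU with a labeled box of the same class exceeds a chosen threshold, so loosely drawn annotations can lower the reported score even when detections look reasonable.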

Build tracking and counting logic

The detection model's output alone is not enough for accurate counting. The same object appears in many consecutive frames, so counting raw detections leads to duplicate counts. This is where Roboflow Workflows comes in: you can chain together post-processing steps to turn raw detections into a deployable application.

Creating a workflow:

- Add object detection: Start with your trained detection model as the first block in the workflow.

- Integrate ByteTrack for tracking: Add a tracking block (we'll use ByteTrack) that assigns consistent IDs to objects across frames. This prevents counting the same object multiple times as it moves along the belt.

- Line-based counting: Add a Line Counter block and define one or more virtual lines across the conveyor belt in the frame. When a tracked object crosses a line, the count is incremented.

- Visualization blocks: Include visualization blocks so you can see bounding boxes, object IDs, and count overlays in real-time.

You can also make a copy of this pre-built workflow using this link.
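
To make the tracking and line-counting idea concrete, here is a simplified sketch of how persistent IDs prevent duplicate counts. It assumes a tracker already supplies a stable integer ID and a box center for each object in every frame. The class `LineCounter`, the helper `side_of_line`, and the `count_in`/`count_out` fields are hypothetical names for this illustration; this is not the implementation of ByteTrack or of the Roboflow Line Counter block, and it treats the line as infinitely long.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]


def side_of_line(point: Point, line_start: Point, line_end: Point) -> float:
    """Signed value whose sign tells which side of the line `point` lies on."""
    return ((line_end[0] - line_start[0]) * (point[1] - line_start[1])
            - (line_end[1] - line_start[1]) * (point[0] - line_start[0]))


@dataclass
class LineCounter:
    line_start: Point
    line_end: Point
    count_in: int = 0
    count_out: int = 0
    # Last known side of the line for each tracker ID.
    _last_side: dict[int, float] = field(default_factory=dict)

    def update(self, tracked_centers: dict[int, Point]) -> None:
        """Process one frame: `tracked_centers` maps tracker ID to box center."""
        for tracker_id, center in tracked_centers.items():
            side = side_of_line(center, self.line_start, self.line_end)
            if side == 0:
                continue  # exactly on the line: decide on a later frame
            previous = self._last_side.get(tracker_id)
            # Count only when a known ID switches sides between frames.
            if previous is not None and (previous > 0) != (side > 0):
                if side > 0:
                    self.count_in += 1
                else:
                    self.count_out += 1
            self._last_side[tracker_id] = side


# One object (ID 1) moving left to right across a vertical line at x = 100.
counter = LineCounter((100.0, 0.0), (100.0, 200.0))
for x in (80.0, 90.0, 120.0, 130.0):
    counter.update({1: (x, 50.0)})
print(counter.count_in, counter.count_out)  # 0 1
```

The object is visible in four frames but is counted once, because the count changes only when the same ID moves from one side of the line to the other.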

Deploy your workflow locally

With your workflow defined and tested within Roboflow, it’s time to put it to use in the real world. For video inference, you can use either a Dedicated Deployment (which connects to a Roboflow cloud server allocated for your use) or Localhost. In this project we'll focus on Localhost so you can run the workflow on your local machine.

Local deployment steps:

- Set up the environment: Install Python and Docker, then run `pip install inference-cli && inference server start` as guided by the Roboflow Inference documentation.

- Pull the inference server: Pull the inference server using Roboflow Inference.

- Run your Python application: Copy and run the Python code below, which also saves the output as an .mp4 file. Don’t forget to change `workspace_name`, `workflow_id`, and `video_reference`.

```python
# Import the InferencePipeline object
from inference import InferencePipeline
import cv2

# Define the output video parameters
output_filename = "output_conveyor_count.mp4"  # Name of the output video file
fourcc = cv2.VideoWriter_fourcc(*"mp4v")  # Codec for the MP4 format
fps = 30  # Frames per second of the output video
frame_size = None  # Will be determined dynamically based on the first frame

# Initialize the video writer object
video_writer = None


def my_sink(result, video_frame):
    global video_writer, frame_size

    if result.get("output_image"):
        # Extract the output image as a numpy array
        output_image = result["output_image"].numpy_image

        # Dynamically set the frame size and initialize the video writer on the first frame
        if video_writer is None:
            frame_size = (output_image.shape[1], output_image.shape[0])  # (width, height)
            video_writer = cv2.VideoWriter(output_filename, fourcc, fps, frame_size)

        # Write the frame to the video file
        video_writer.write(output_image)

        # Optionally display the frame
        cv2.imshow("Workflow Image", output_image)
        cv2.waitKey(1)


# Initialize a pipeline object
pipeline = InferencePipeline.init_with_workflow(
    api_key="*******************",
    workspace_name="[workspace_name]",
    workflow_id="[workflow_id]",
    video_reference="[video_reference]",  # Path to video, device id (int, usually 0 for built-in webcams), or RTSP stream URL
    max_fps=30,
    on_prediction=my_sink,
)

# Start the pipeline
pipeline.start()
pipeline.join()  # Wait for the pipeline thread to finish

# Release resources
if video_writer is not None:
    video_writer.release()
cv2.destroyAllWindows()
```

Now you can start processing video frames in real-time. Observe counts updating automatically as objects pass through the line counter.
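
If you also want the counts as numbers rather than only as an overlay on the video, you can read them from the `result` passed to the sink. The sketch below assumes the workflow exposes the line counter values as outputs named `count_in` and `count_out`; those key names are an assumption, since output names depend on how the workflow is configured, and the helper `read_counts` is hypothetical.

```python
def read_counts(result, in_key="count_in", out_key="count_out"):
    """Return (in, out) counts from one workflow result.

    Missing or empty values are treated as 0. The key names are assumptions
    and must match the output names defined in your own workflow.
    """
    count_in = result.get(in_key) or 0
    count_out = result.get(out_key) or 0
    return int(count_in), int(count_out)


# Example with a stand-in result dictionary.
print(read_counts({"count_in": 3, "count_out": 1}))  # (3, 1)
```

Calling such a helper from inside the sink function would let you log the totals or send them to an inventory system alongside the saved video.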

Conclusion

By following these steps, you have seen how to:

- Annotate your dataset effortlessly, leveraging automated labeling tools to save time.

- Train a high-performing object detection model in the cloud without worrying about infrastructure.

- Build a Workflow that tracks and counts objects, ensuring accurate totals for inventory or quality control.

- Deploy your custom built solution locally.

This end-to-end approach streamlines the entire computer vision pipeline, and the same principles apply to a variety of applications, from inventory management to quality control.

Ready to build your computer vision project?

- Try Roboflow for free and explore our tutorials to get started.

- Join our community to share experiences, ask questions, and learn from others.

Cite this Post

Use the following entry to cite this post in your research:

Samuel A. (Dec 12, 2024). Count Objects on a Conveyor Belt Using Computer Vision. Roboflow Blog: https://blog.roboflow.com/counting-objects-conveyor-belt/