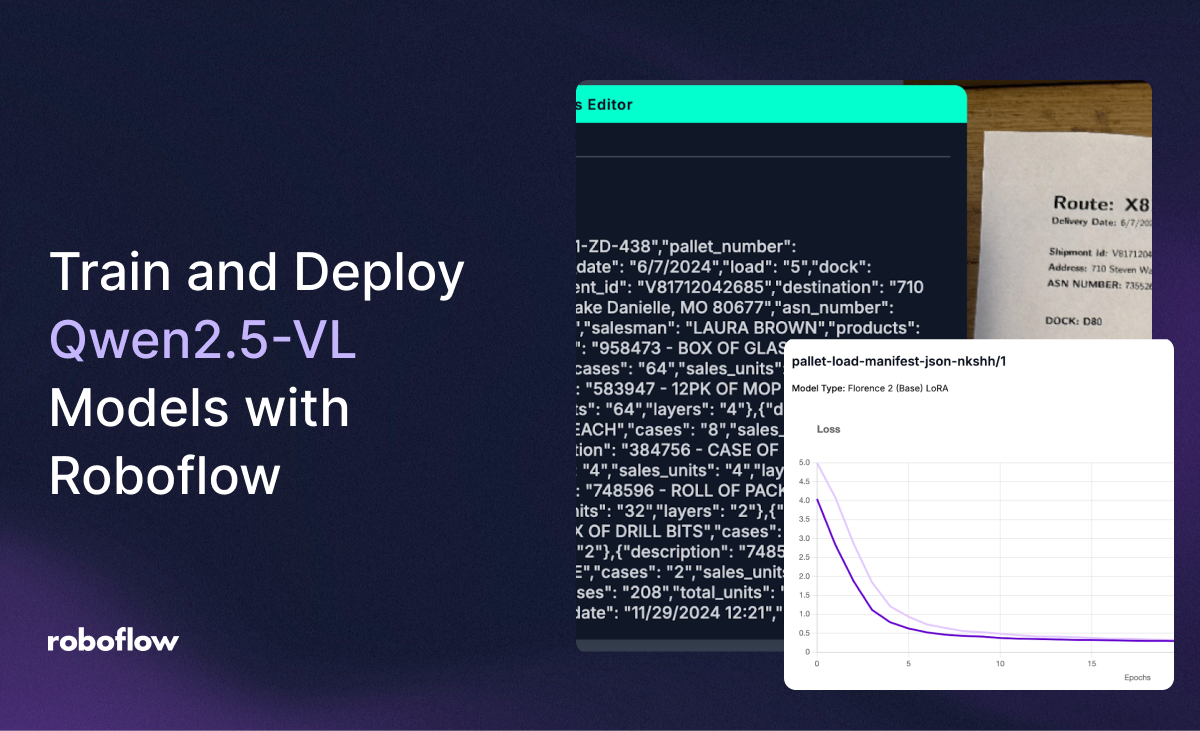

Qwen2.5-VL, released on January 28th 2025, is the latest vision-language model in the Qwen-VL series. You can use Qwen2.5-VL for a wide range of multimodal tasks, from visual question answering to OCR to extracting specific text from a document.

You can now fine-tune Qwen2.5-VL models with Roboflow, build applications with Qwen2.5-VL in Roboflow Workflows, and deploy your fine-tuned models on your own hardware using Roboflow Inference.

In this guide, we will walk through an example showing how to fine-tune and deploy a Qwen2.5-VL model that can read shipping pallet manifests. This model could be used as part of an industrial inventory management solution.

Without further ado, let’s get started!

Step #1: Create a Multimodal Project

To get started, first create a free Roboflow account.

For this guide, we are going to use an open shipping pallet dataset available on Roboflow Universe. This dataset contains images of shipping pallet manifests, each annotated with the text on the manifest. Here is an example of an image in the dataset with a corresponding JSON annotation of the data on the manifest:

To use this dataset in a project, go to the shipping pallet dataset page, then click “Fork Dataset” from the drop-down menu:

This will create a copy of the dataset that you can use to fine-tune a model.

If you want to fine-tune a model for your own use case with a custom dataset, you can create a “Multimodal” project from your Roboflow dashboard, then upload your image data.

If you have annotations in our supported JSONL format, you can upload those too. If you don’t have annotations, you can label your data using Roboflow Annotate once you have uploaded your images.

Your annotations can use whatever structure you want, as long as the data is in JSONL format.

For example, our shipping pallet dataset uses the following structure:

{"route": "Q967-KG-646","pallet_number": "6","delivery_date": "10/25/2024","load": "4","dock": "D16","shipment_id": "R40692490452","destination": "251 Fox Plaza, West Maria, KY 31250","asn_number": "6428483118","salesman": "GORDON COMPTON","products": [{"description": "495827 - CASE OF PLASTIC BAGS","cases": "4","sales_units": "8","layers": "4"},{"description": "485927 - BOX OF DRYING CLOTHS","cases": "64","sales_units": "64","layers": "4"},{"description": "836495 - BOX OF WINDOW WIPES","cases": "8","sales_units": "64","layers": "4"},{"description": "728495 - BOX OF STAIN REMOVERS","cases": "32","sales_units": "8","layers": "2"}],"total_cases": "108","total_units": "144","total_layers": "14","printed_date": "12/05/2024 11:29","page_number": "67"}

We have keys and values for the shipping route, pallet number, delivery date, dock, products on the manifest, and more.
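Because each annotation in this structure stores both line items and totals, a short script can catch labeling mistakes before you upload. The sketch below assumes one JSON object per line with the keys shown above and numbers stored as strings; the function names are hypothetical and the check is not part of Roboflow's upload process.

```python
from __future__ import annotations

import json

# Each total in the example annotation should equal the sum of one per-product field.
TOTAL_FIELDS = {
    "total_cases": "cases",
    "total_units": "sales_units",
    "total_layers": "layers",
}


def check_annotation(line: str) -> list[str]:
    """Return a list of problems found in one JSONL annotation line (empty if none)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(record, dict):
        return ["line is not a JSON object"]

    problems = []
    products = record.get("products", [])
    for total_key, item_key in TOTAL_FIELDS.items():
        if total_key not in record:
            problems.append(f"missing {total_key}")
            continue
        try:
            # Numbers are stored as strings in the example, so convert before summing.
            expected = int(record[total_key])
            actual = sum(int(p.get(item_key, 0)) for p in products)
        except (AttributeError, TypeError, ValueError):
            problems.append(f"non-numeric or malformed values for {total_key}")
            continue
        if expected != actual:
            problems.append(f"{total_key}={expected} but line items sum to {actual}")
    return problems


def check_jsonl(path: str) -> dict[int, list[str]]:
    """Check every non-empty line of a JSONL file; map line number to its problems."""
    report = {}
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if line.strip():
                problems = check_annotation(line)
                if problems:
                    report[lineno] = problems
    return report
```

For the example annotation above, `check_annotation` returns an empty list: the cases sum to 4 + 64 + 8 + 32 = 108, the sales units to 144, and the layers to 14. Running `check_jsonl` on your own (hypothetically named) `annotations.jsonl` file reports only the lines that need attention.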

Step #2: Create a Dataset Version

Once you have labeled your dataset, you can create a dataset version. A dataset version is a snapshot of a dataset at a given point in time. You can use dataset versions to train models on the Roboflow platform.

Click “Versions” in the left sidebar of your project to create a dataset version:

Apply two preprocessing steps:

- Auto-orient, and

- Resize (stretch) to 640x640.

For your first version, we recommend applying no augmentation steps. This will allow you to develop a baseline understanding of how your labeled dataset performs.
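To see what these two preprocessing steps do to image geometry, here is a small standard-library sketch. It relies on the EXIF orientation convention (values 5 to 8 include a 90- or 270-degree rotation) and on the fact that a stretch resize scales each axis independently; it illustrates the arithmetic only and is not Roboflow's implementation.

```python
from __future__ import annotations

# EXIF orientation values 5-8 all include a 90- or 270-degree rotation,
# so honoring them swaps the stored width and height.
ROTATING_ORIENTATIONS = {5, 6, 7, 8}


def oriented_size(width: int, height: int, orientation: int = 1) -> tuple[int, int]:
    """Image size after applying the EXIF orientation tag (the auto-orient step)."""
    if orientation in ROTATING_ORIENTATIONS:
        return height, width
    return width, height


def stretch_scale(width: int, height: int, target=(640, 640)) -> tuple[float, float]:
    """Per-axis scale factors for a stretch resize; aspect ratio is not preserved."""
    target_w, target_h = target
    return target_w / width, target_h / height


def preprocessed_scale(width: int, height: int, orientation: int = 1,
                       target=(640, 640)) -> tuple[float, float]:
    """Auto-orient first, then stretch to the target size."""
    return stretch_scale(*oriented_size(width, height, orientation), target=target)
```

For example, a 4032x3024 photo tagged with orientation 6 becomes 3024x4032 after auto-orient, so `preprocessed_scale(4032, 3024, 6)` shrinks the width by 640/3024 and the height by 640/4032: the manifest is squeezed more vertically than horizontally.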

Once you have configured your dataset, click “Create” at the bottom of the page.

Your dataset version will be generated, then you will be taken to a page where you can start training your model.

Step #3: Train a Qwen2.5-VL Model

To start training your Qwen2.5-VL model, click “Custom Train” on your dataset version page:

A window will appear in which you can configure your training job. Choose the Qwen2.5-VL training option:

Next, you will be asked to confirm the model size. We currently support one size: 3B.

Then, choose to train from a Public Checkpoint:

Click “Start Training” to start your training job.

A notification will appear with an estimate of how long training will take. This will vary depending on the number of images in your dataset. You can expect multimodal fine-tuning jobs to take several hours on average.

As your model trains, you can view the loss and perplexity over time from the model training page:

Step #4: Deploy the Trained Qwen2.5-VL Model

Once you have trained your model, you can deploy it on your own hardware with Roboflow Inference.

Since Qwen2.5-VL is a multimodal vision-language model, we recommend deploying it on a device with at least an NVIDIA T4 GPU for optimal performance.
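A rough way to see why a T4 (16 GB of GPU memory) is a sensible minimum is to estimate how much memory the weights alone occupy. The sketch below uses the nominal "3B" size as its parameter count, which is an approximation: the exact count of the checkpoint may differ, and activations, image tokens, and the KV cache all need memory on top of the weights.

```python
# Bytes needed to store one parameter in common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory for model weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


print(f"{weight_memory_gib(3e9):.1f} GiB")  # prints 5.6 GiB
```

At 16-bit precision, roughly 5.6 GiB of weights leaves headroom on a 16 GB card, while full 32-bit weights would take about twice as much.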

We are going to build a vision app with Roboflow Workflows, then deploy the model with Inference.

To get started, click "Workflows" in the left sidebar of your Roboflow dashboard. Then, create a new Workflow.

Click "Add Block", then search for Qwen2.5-VL:

Add the Qwen2.5-VL block. Click on the "Model ID" form field:

A window will pop up from which you can choose a trained model to use in your Workflow. Choose the model you just trained:

With your Qwen2.5-VL block set up, you can add additional logic to your Workflow. For example, you could send a Slack notification depending on whether the output of Qwen2.5-VL contains certain information.
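In Workflows this logic is assembled from blocks rather than written as code. To make the condition concrete, here is a hypothetical Python equivalent that decides whether a parsed manifest should trigger a Slack alert because required fields are missing. The field list and function names are assumptions for illustration, and sending the message itself is left out.

```python
# Hypothetical list of fields a downstream inventory system cannot work without.
REQUIRED_FIELDS = ("route", "shipment_id", "delivery_date")


def fields_needing_review(manifest: dict, required=REQUIRED_FIELDS) -> list:
    """Return required fields that are missing, null, or blank in a parsed manifest."""
    missing = []
    for key in required:
        value = manifest.get(key)
        if value is None or not str(value).strip():
            missing.append(key)
    return missing


def should_notify(manifest: dict) -> bool:
    """Decide whether to alert a person (e.g. via Slack) about this manifest."""
    return bool(fields_needing_review(manifest))
```

Returning the list of missing fields, rather than only a yes/no answer, lets the alert tell the reader exactly what to check on the physical manifest.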

For this guide, we'll stick with a one-step Workflow to run the model.

Here is our final Workflow:

We're now ready to deploy our model on our own hardware. You can also deploy your model to a dedicated cloud GPU server with Roboflow Dedicated Deployments.

Click "Deploy" in the top right corner of the Workflows web page. A window will pop up with deployment options. Select the "Run on an Image (Local)" tab.

First, make sure your device has Docker installed. If you don't already have Docker installed, refer to the official Docker installation instructions to get started.

Then, install Inference on your device and start an Inference server:

pip install inference-cli && inference server start

Then, create a new Python file and add the code snippet from the Workflows web application. The code snippet will look like this:

from inference_sdk import InferenceHTTPClient

# Connect to the Inference server running on this machine
client = InferenceHTTPClient(
    api_url="http://localhost:9001",  # use local inference server
    api_key="YOUR_API_KEY",
)

# Run the Workflow on a single image
result = client.run_workflow(
    workspace_name="YOUR_WORKSPACE",
    workflow_id="YOUR_WORKFLOW_ID",
    images={
        "image": "YOUR_IMAGE.jpg"
    },
)

print(result)

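Replace YOUR_API_KEY, YOUR_WORKSPACE, and YOUR_WORKFLOW_ID in the code above with your Roboflow API key, workspace name, and Workflow ID, and replace YOUR_IMAGE.jpg with the path to the image you want to process.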
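If the script cannot connect, the usual cause is that the Inference server is not running. The standard-library sketch below checks whether anything is accepting TCP connections on localhost:9001, the URL used in the snippet. It does not verify which service is listening or call any Inference endpoint; the function name is hypothetical.

```python
import socket


def server_is_listening(host: str = "localhost", port: int = 9001,
                        timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection tries every address the host name resolves to.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution errors.
        return False
```

You could call `server_is_listening()` before `client.run_workflow(...)` and, if it returns False, exit with a message reminding you to run `inference server start`.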

For this guide, let’s try the model on the following image from our test set:

The Workflow returns:

[
  {
    "qwen_vl": {
      "What is in the image?<system_prompt>": "{\"route\": \"W611-KB-718\",\"pallet_number\": \"4\",\"delivery_date\": \"6/12/2024\",\"load\": \"4\",\"dock\": \"D48\",\"shipment_id\": \"J826491437\",\"destination\": \"3373 Monroe Flat Suite 852, Ashleyhaven, MO 87156\",\"asn_number\": \"355699301\",\"salesman\": \"RONALD JAMES\",\"products\": [{\"description\": \"141421 - CASE OF LAMINATED SHEETERS\",\"cases\": \"4\",\"sales_units\": \"16\",\"layers\": \"5\"},{\"description\": \"357951 - 6PK OF HAND SANITIZER\",\"cases\": \"32\",\"sales_units\": \"64\",\"layers\": \"4\"},{\"description\": \"893975 - BOX OF LABEL SHEETS\",\"cases\": \"32\",\"sales_units\": \"64\",\"layers\": \"1\"},{\"description\": \"495867 - CASE OF SCRAPERS\",\"cases\": \"64\",\"sales_units\": \"64\",\"layers\": \"1\"},{\"description\": \"246810 - ROLL OF MASKING TAPE\",\"cases\": \"16\",\"sales_units\": \"4\",\"layers\": \"3\"},{\"description\": \"395847 - CASE OF FLOOR POLISHERS\",\"cases\": \"32\",\"sales_units\": \"16\",\"layers\": \"3\"},{\"total_cases\": \"180\",\"total_units\": \"228\",\"total_layers\": \"17\",\"printed_date\": \"11/29/2024 17:03\",\"page_number\": \"81\"}"
    }
  }
]

When the output string is parsed as JSON, the result is:

{
  "route": "W611-KB-718",
  "pallet_number": "4",
  "delivery_date": "6/12/2024",
  "load": "4",
  "dock": "D48",
  "shipment_id": "J826491437",
  "destination": "3373 Monroe Flat Suite 852, Ashleyhaven, MO 87156",
  "asn_number": "355699301",
  "salesman": "RONALD JAMES",
  "products": [
    {
      "description": "141421 - CASE OF LAMINATED SHEETERS",
      "cases": "4",
      "sales_units": "16",
      "layers": "5"
    },
    {
      "description": "357951 - 6PK OF HAND SANITIZER",
      "cases": "32",
      "sales_units": "64",
      "layers": "4"
    },
    {
      "description": "893975 - BOX OF LABEL SHEETS",
      "cases": "32",
      "sales_units": "64",
      "layers": "1"
    },
    {
      "description": "495867 - CASE OF SCRAPERS",
      "cases": "64",
      "sales_units": "64",
      "layers": "1"
    },
    {
      "description": "246810 - ROLL OF MASKING TAPE",
      "cases": "16",
      "sales_units": "4",
      "layers": "3"
    },
    {
      "description": "395847 - CASE OF FLOOR POLISHERS",
      "cases": "32",
      "sales_units": "16",
      "layers": "3"
    },
    {
      "total_cases": "180",
      "total_units": "228",
      "total_layers": "17",
      "printed_date": "11/29/2024 17:03",
      "page_number": "81"
    }
  ]
}

Qwen2.5-VL has successfully identified:

- The products on the manifest.

- The route number.

- The pallet number.

- The delivery date.

- The shipment ID.

- And more.
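Two details of the raw output are worth handling in code before you use it downstream: the string ends right after the totals object, without the `]` and `}` that close the `products` list and the outer object, and the totals sit inside `products` rather than at the top level as in the training annotations. The sketch below is one way to post-process it. It assumes the result structure shown above (a list with one entry per image, a `qwen_vl` key named after the block, and the prompt text as the inner key), and its bracket-balancing step is a simple heuristic that only repairs output cut off after a complete value. The function names are hypothetical; this is not a Roboflow or Inference API.

```python
import json


def close_unbalanced(text: str) -> str:
    """Append missing closing brackets/braces to JSON truncated after a complete value."""
    closers = {"{": "}", "[": "]"}
    stack = []
    in_string = False
    escaped = False
    for ch in text:
        if in_string:
            # Inside a string, brackets are literal; only track escapes and the end quote.
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch in closers:
            stack.append(closers[ch])
        elif ch in "}]" and stack:
            stack.pop()
    # Close the innermost open container first.
    return text + "".join(reversed(stack))


def parse_manifest(workflow_result: list) -> dict:
    """Extract and parse the Qwen2.5-VL output for the first image in a Workflow result."""
    # The block output is keyed by the prompt text, so take its (single) value.
    raw = next(iter(workflow_result[0]["qwen_vl"].values()))
    manifest = json.loads(close_unbalanced(raw))

    # Move any summary object (no "description") out of "products" to the top level.
    # Note: its keys overwrite top-level keys with the same name.
    products = []
    for item in manifest.get("products", []):
        if isinstance(item, dict) and "description" not in item:
            manifest.update(item)
        else:
            products.append(item)
    manifest["products"] = products
    return manifest
```

With the result shown above, `parse_manifest(result)["total_cases"]` returns `"180"` and `products` contains only the six line items. If you need stricter guarantees, validating the parsed object against the schema used in your annotations is more robust than repairing brackets.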

Conclusion

You can now fine-tune and deploy Qwen2.5-VL models with Roboflow. In this guide, we demonstrated how to prepare data for, fine-tune, and run a multimodal Qwen2.5-VL model for reading shipping manifests.

You can use the guidance above for any problem that vision-language models (VLMs) can solve, from structured data extraction to image captioning to visual question answering.

To learn more about labeling data for multimodal models with Roboflow, read our multimodal labeling guide.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Mar 13, 2025). Launch: Fine-Tune and Deploy Qwen2.5-VL Models with Roboflow. Roboflow Blog: https://blog.roboflow.com/fine-tune-deploy-qwen2-5-vl/