On February 27th, 2025, OpenAI announced GPT-4.5, initially released to Pro users via the web application and API. OpenAI has announced plans to release the model more broadly in the coming week. According to OpenAI, the model supports “function calling, Structured Outputs, vision, streaming, system messages, evals, and prompt caching.”

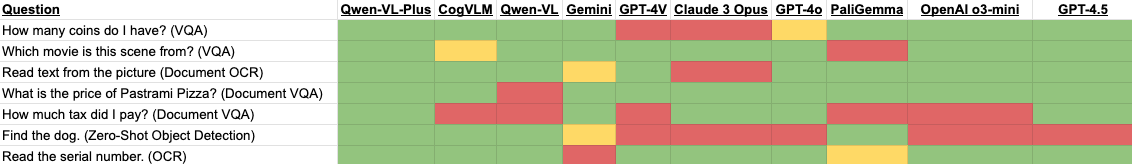

In this guide, we are going to walk through our analysis of GPT-4.5’s multimodal and vision features. We will use our standard benchmark images that we pass through new multimodal models. Curious to see how GPT-4.5 stacks up on our qualitative tests? Here is a summary of our results:

The model passed all of our tests, except for the object detection test. Object localization tasks continue to prove difficult for all foundation models.

Without further ado, let’s get started!

What is GPT-4.5?

GPT-4.5 is OpenAI’s “largest and best model for chat yet”, according to the model press release. OpenAI notes that responses from the model “feel more natural”.

In our testing, we found, anecdotally, that GPT-4.5’s responses for questions about images were more direct, concise, and informative than many other models. Whereas many models add superfluous information to their responses, GPT-4.5 has a tendency not to do this. We’ll show this throughout the examples later in this guide.

GPT-4.5 does not have reasoning capabilities built in. But, from OpenAI’s announcement it sounds like this model is a step in the direction for more unified reasoning capabilities in future models. On reasoning, OpenAI’s press release says:

Compared to OpenAI o1 and OpenAI o3‑mini, GPT‑4.5 is a more general-purpose, innately smarter model. We believe reasoning will be a core capability of future models, and that the two approaches to scaling—pre-training and reasoning—will complement each other.

GPT-4.5 is an expensive model to use. Whereas GPT-4o, one of OpenAI’s current flagship models, costs $2.50 / one million tokens for API use, GPT-4.5 costs $75.00 for the same number of tokens. This makes the model less practical to use programmatically. With that said, we expect the price to come down for future models.

You can read the OpenAI Models API reference for information on how to call the API. As of writing this post, you will need a Pro account to access the API.

Evaluating GPT-4.5 for Multimodal Tasks

We evaluated GPT-4.5’s multimodal support on several tasks, including:

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Zero-shot object detection

- Real-world OCR

Below, we show our results from each test.

Our tests were run using the web interface.

GPT-4.5 object counting

Object counting measures how well a model can count the number of instances of an object in an image. We provided GPT-4.5 the following image of coins and asked “How many coins do I have?”

The model returned:

You have 4 coins.

In contrast, GPT O3 Mini, OpenAI’s latest reasoning model, returned.

It looks like there are four coins in the photo.

These two outputs show the difference we have found to be consistent in how GPT-4.5 answers questions. GPT-4.5 is more direct and concise, whereas O3 Mini – and other models – are more verbose. See our full analysis of O3 Mini.

With the same image, GPT-4o returned:

From the image, I can see that there are four coins in total.

- Three of them are 1 złoty coins (silver-colored).

- One of them is a 2 złote coin (bi-metallic, with a gold-colored outer ring and a silver-colored center).

So in total, you have four coins amounting to 5 złoty (3 × 1 zł + 2 zł = 5 zł).

GPT-4o technically answered the question, but included a lot of additional context that was not explicitly asked for. See our full analysis of GPT-4o.

GPT-4.5 Visual question answering (VQA)

We asked GPT-4.5 to identify the movie from an image. We provided the prompt “Which movie is this scene from?” with the following image:

GPT-4.5 answered “This scene is from the movie "Home Alone" (1990).”

In contrast, O3 Mini answered:

It’s a still from the original Home Alone (1990).

And GPT-4o answered:

This scene is from the classic Christmas movie "Home Alone" (1990).

It features Kevin McCallister (played by Macaulay Culkin) sitting in a beautifully decorated living room with a Christmas tree, stockings over the fireplace, and festive decorations. This movie is a holiday favorite, known for its heartwarming and comedic story about Kevin, a young boy who is accidentally left home alone during Christmas and must defend his house from two burglars.

O3 Mini answered the question but used more complex language (“a still”). GPT-4o was significantly more verbose and included context that was not asked for in our prompt.

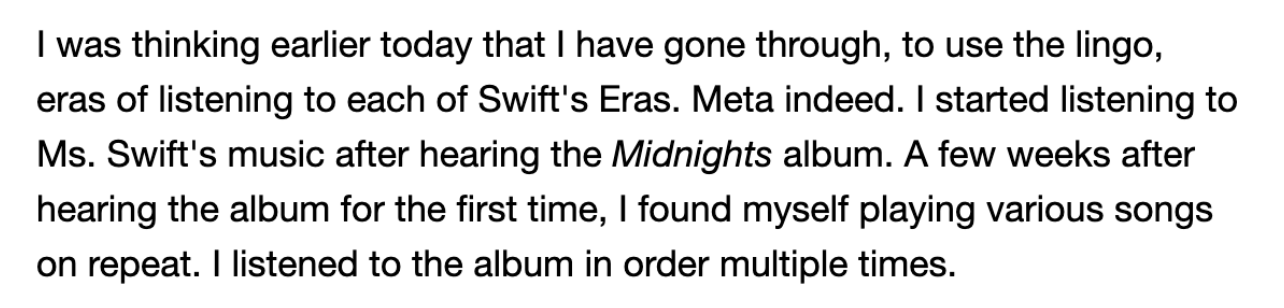

GPT-4.5 Document OCR

We provided GPT-4.5 with a screenshot of a paragraph of text and asked the model to “Read text from image.” Here is the screenshot we provided:

The model returned the text verbatim, including the italics on the word “Midnights”. For context, O3 Mini also returned the text, but did not add the italics on “Midnights”. GPT-4o read the text exactly.

GPT-4.5 Document question answering

We provided GPT-4.5 with the following photo of a menu and asked “How much does Pastrami Pizza cost?”:

GPT-4.5 returned:

The price of Pastrami Pizza is $27.

The model directly, and correctly, answered the question. The answer is the same as the one provided by GPT-4o. O3 Mini’s answer was slightly more verbose: “According to the menu in the image, the Pastrami Pizza costs $27.”

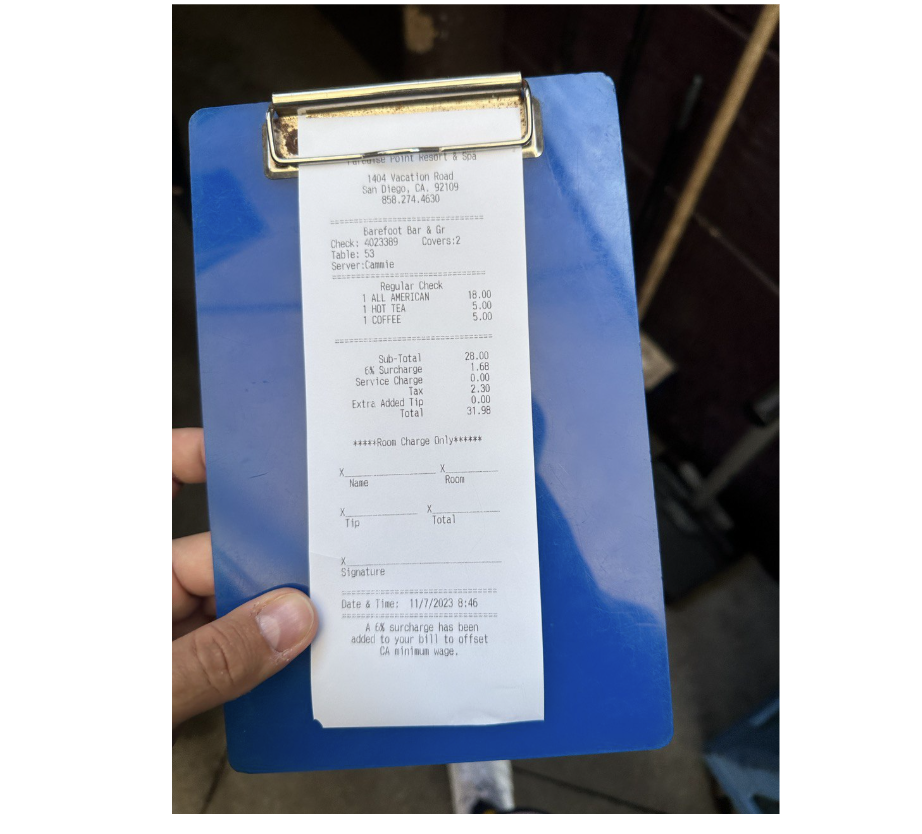

We also evaluated how the model performed at answering a question about a receipt. We uploaded the following receipt and asked “How much tax did I pay?”:

GPT-4.5 returned:

You paid $2.30 in tax.

This is correct. O3 Mini failed at this test, answering that the tax paid was $2.18. GPT-4o answered this question correctly with the same answer that GPT-4.5 returned.

GPT-4.5 Real-world OCR

We provided GPT-4.5 with a photo of a serial number and asked the model to read its contents with the prompt “Read the serial number.” Here is the photo we provided:

GPT-4.5 returned:

The serial number is: 3702692432

(Bold text copied directly from the result.)

This answer is correct. O3 Mini also answered this question correctly, but with more words: “It appears the serial number shown in the image is 3702692432.” GPT-4o’s answer was also correct and as concise as GPT-4.5’s answer.

GPT-4.5 Zero-shot object detection

GPT models have historically struggled with object detection and localization. You can fine-tune GPT-4 to identify objects with custom annotations. With that said, fine-tuning a model built with object detection in mind (i.e. YOLOv12, RT-DETR) will give you a model that will run significantly faster, can run on your own hardware, and will likely be more accurate.

We asked GPT-4.5 to identify a dog in an image, using the prompt “Detect the dog” and the following image as an input:

The model returned:

The dog in the image appears to be a Beagle.

Since we are looking for coordinates, we followed up with:> Return xyxy coordinates.The model returned:

The approximate bounding box coordinates (xyxy) for the dog in the image are:

- x1: 83

- y1: 535

- x2: 747

- y2: 882

Here is what these coordinates look like on our input image:

While the coordinates encapsulate some of the dog, the bounding box is not accurate. Much of the dog is not included in the bounding box.

The bounding box was more oblong than others we have seen from language models, so we transposed the box to be taller rather than wider. Here is the result:

The transposed bounding boxes encapsulate less background and more of the dog, but again fall short. A fine-tuned object detection model, on the other hand, has no problem at identifying and localizing objects.

Conclusion

GPT-4.5 is a new multimodal language model from OpenAI. This model, released in a research preview to Pro users, is accessible both from the OpenAI web interface and the API. The model is more terse and direct than OpenAI’s reasoning models like O1 and O3 Mini.

GPT-4.5 passed all of our tests except for the object detection one, a test at which previous GPT models have also failed.

GPT-4.5 is substantially more expensive than its predecessors, and was described by Sam Altman “a giant, expensive model.” With that said, we would expect either costs to come down over time or for another, more capable model to be made available that is cheaper.

You can learn more about GPT-4.5 in OpenAI’s model card or explore more multimodal models here

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 28, 2025). GPT-4.5 Multimodal and Vision Analysis. Roboflow Blog: https://blog.roboflow.com/gpt-4-5-multimodal/