Large Multimodal Models (LMMs) such as OpenAI’s GPT-4 with Vision achieve impressive performance across a range of vision tasks. For example, you can ask GPT-4 with Vision to retrieve the text in a document and receive accurate results.

Many analyses have been run on the performance of GPT-4 with Vision, but they share one notable limitation: the results are frozen in time. As new updates to the model are released, it is hard to track changes in performance without running the same tests again.

With that in mind, the Roboflow team is excited to announce GPT Checkup, an open source and automated analysis tool for GPT-4 with Vision.

GPT Checkup runs a set of standard tests on GPT-4 with Vision every day, covering common vision tasks such as document OCR, object counting, object detection, and more.

In this guide, we are going to talk about what GPT Checkup is, how it works, and how you can contribute to GPT Checkup. Without further ado, let’s get started!

What is GPT Checkup?

GPT Checkup is a website that runs standard tests on GPT-4 with Vision every day. The website displays the prompts sent to GPT-4 as well as the response returned by the model. The web application shows results from the last seven days so you can gauge how stable the model's performance is over time.

We are excited about multimodality and know that one-off tests are not the best way to evaluate multimodal models, especially if a model is closed source. Knowing how a model performs across a range of tasks over time is crucial to build confidence in using LMMs in production applications. Running these tests takes time, so we built an automated solution for the community.

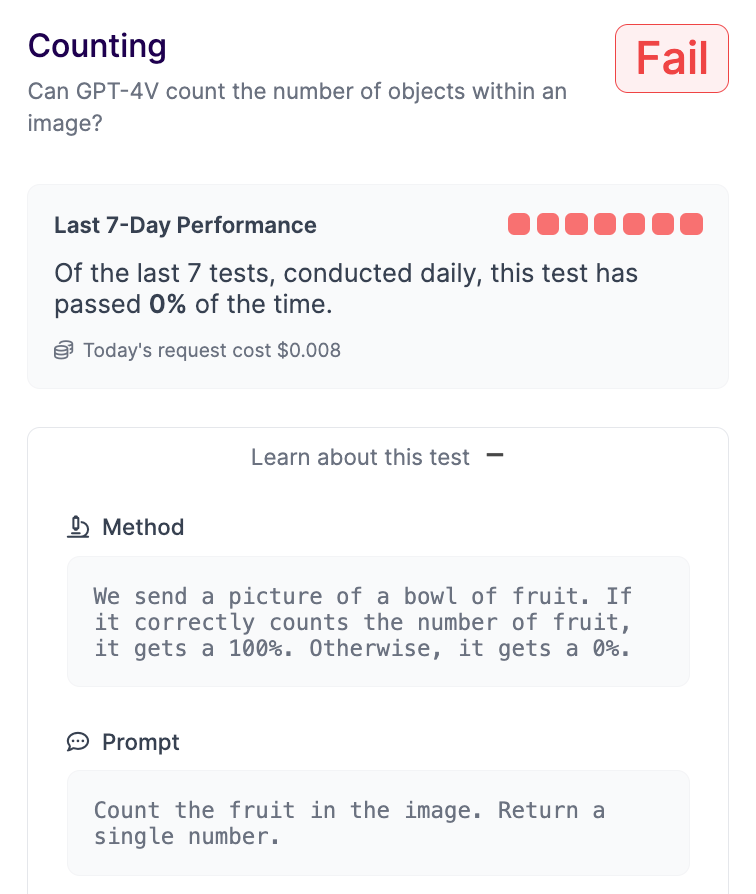

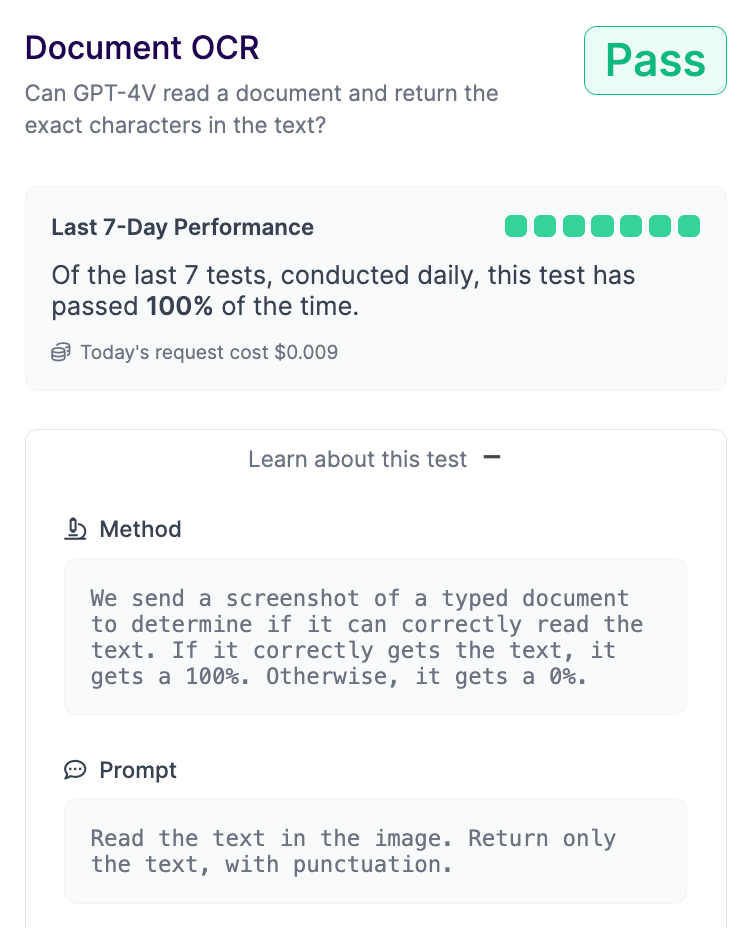

GPT Checkup allows you to understand how GPT-4 with Vision performs on various tasks. You can use this information to help judge whether GPT-4 with Vision could help you solve a particular problem. For instance, at the time of writing GPT Checkup reports that GPT-4 with Vision struggles with object counting but is able to accurately run OCR on a document.

We are excited to see how the site can be used to monitor changes in model behavior over time. Perhaps an update improves model performance on one task; perhaps an update causes performance on another task to deteriorate. GPT Checkup lets us monitor for such scenarios automatically.

At the time of writing, GPT Checkup analyzes the following capabilities:

- Object counting

- Handwriting OCR

- Object detection

- Graph understanding

- Color recognition

- Annotation quality assurance

- Object measurement

- Zero-shot classification

- Document OCR

- Structured data OCR

- Math OCR

We also calculate the average API response time across all of these requests and display it on the website.
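As a rough illustration, an average response time could be computed by timing each request and taking the mean. This is a minimal sketch, assuming each test is wrapped in a callable that performs one API request; the `timed_run` function and the test names are hypothetical and not taken from GPT Checkup's code.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple


def timed_run(tests: Dict[str, Callable[[], str]]) -> Tuple[Dict[str, str], float]:
    """Run each test callable, record its wall-clock latency, and return
    the responses plus the average response time in seconds.

    Hypothetical helper: each callable is assumed to make one API request.
    """
    responses: Dict[str, str] = {}
    latencies: List[float] = []
    for name, send_request in tests.items():
        start = time.perf_counter()
        responses[name] = send_request()
        latencies.append(time.perf_counter() - start)
    # Guard against an empty test suite, where mean() would raise.
    average = mean(latencies) if latencies else 0.0
    return responses, average


if __name__ == "__main__":
    # Stand-in callables; a real run would call the GPT-4 with Vision API here.
    fake_tests = {"document_ocr": lambda: "Invoice #123", "object_counting": lambda: "7"}
    results, avg_seconds = timed_run(fake_tests)
    print(f"Average response time: {avg_seconds:.3f}s")
```

Timing with `time.perf_counter()` measures wall-clock time on the client, so the figure includes network latency as well as model inference time.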

The results from the GPT model are displayed on the web page and archived into a GitHub repository. You can use the site to see how GPT has performed on the standard tests every day for the last week. You can use the archived GitHub data to look further back.
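The archive format isn't described in this post, so the sketch below assumes a hypothetical record layout: one entry per test run with an ISO `date`, a `test` name, and a boolean `passed`. Under that assumption, a seven-day view like the one on the site could be derived from archived data by filtering to the window and computing a pass rate per test.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, Iterable, Mapping


def pass_rates_last_week(records: Iterable[Mapping], today: date) -> Dict[str, float]:
    """Return the fraction of passing runs per test over the seven days ending `today`.

    Assumes a hypothetical record shape: {"date": "YYYY-MM-DD", "test": str, "passed": bool}.
    """
    window_start = today - timedelta(days=6)  # seven days, inclusive of today
    passed: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for record in records:
        run_date = date.fromisoformat(record["date"])
        if window_start <= run_date <= today:
            total[record["test"]] += 1
            passed[record["test"]] += int(record["passed"])
    return {test: passed[test] / total[test] for test in total}


if __name__ == "__main__":
    history = [
        {"date": "2024-01-05", "test": "document_ocr", "passed": True},
        {"date": "2024-01-04", "test": "document_ocr", "passed": True},
        {"date": "2024-01-05", "test": "object_counting", "passed": False},
    ]
    print(pass_rates_last_week(history, date(2024, 1, 5)))
```

Widening the `timedelta` is all it would take to look further back through the archive.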

How GPT Checkup Works

GPT Checkup features a set of standard prompts and images. These are sent to the GPT-4 with Vision API every day.
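To make "sending a prompt and image to the API" concrete, here is a minimal sketch that builds a request body in the OpenAI Chat Completions format, where an image is passed inline as a base64 data URL alongside the text prompt. The model name `gpt-4-vision-preview` reflects the model available around the time of writing and is an assumption; it may have changed. The `build_vision_request` helper is hypothetical, and the sketch only builds the payload rather than sending it.

```python
import base64
from typing import Any, Dict


def build_vision_request(
    prompt: str,
    image_bytes: bytes,
    model: str = "gpt-4-vision-preview",  # assumed model name; check OpenAI's current docs
    mime_type: str = "image/jpeg",
    max_tokens: int = 300,
) -> Dict[str, Any]:
    """Build a Chat Completions request body pairing a text prompt with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_vision_request("How many coins are in this image?", b"not-a-real-jpeg")
    print(body["messages"][0]["content"][0])
```

The resulting dictionary would be serialized to JSON and POSTed to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <API key>` header.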

Each test has an expected result against which we compare the API response. For example, in the OCR tests we check whether the GPT-4 with Vision output matches our manual transcription; in the object counting test, we compare the GPT-4 with Vision response to the count we know is correct.
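The comparisons described above could look something like the sketch below. It is an illustration under stated assumptions, not GPT Checkup's scoring code: the OCR check collapses whitespace before comparing (a strict check would simply be `response == expected`), and the counting check assumes the first integer in the response is the model's answer.

```python
import re
from typing import Optional


def normalize_whitespace(text: str) -> str:
    """Collapse runs of whitespace so line-break differences don't fail an OCR test.

    This normalization is an assumption; case and punctuation are left untouched.
    """
    return " ".join(text.split())


def check_ocr(response: str, expected: str) -> bool:
    """Pass if the model's transcription matches the manual transcription."""
    return normalize_whitespace(response) == normalize_whitespace(expected)


def parse_count(response: str) -> Optional[int]:
    """Return the first integer in the response, or None if there is none."""
    match = re.search(r"\d+", response)
    return int(match.group()) if match else None


def check_count(response: str, expected: int) -> bool:
    """Pass if the first number the model reports equals the known count."""
    return parse_count(response) == expected


if __name__ == "__main__":
    print(check_ocr("Hello   World\n", "Hello World"))
    print(check_count("There are 7 coins in the image.", 7))
```

Note that a response spelling the number out ("eight coins") fails this counting check; whether that should count as a failure is a design choice for the test author.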

Contribute to GPT Checkup

GPT Checkup is open source. Both the data from our tests and the code we use to run them are available on GitHub. You can add your own tests. To do so, refer to our instructions on how to contribute your own test to the site.

We will accept contributions that evaluate capabilities not already covered, or that add unique tests that otherwise add value to the site. For example, we welcome tests that evaluate industry use cases. You could add tests that:

- Evaluate GPT’s spatial awareness capabilities.

- Show GPT’s performance with Set-of-Mark prompting.

- Check whether GPT can identify several attributes of an object at once (e.g. the color, make, and model of a popular car).
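As an illustration of the last idea, a multi-attribute test could ask the model to answer in JSON and then score each attribute separately. This is a hypothetical sketch, not the format GPT Checkup uses for contributed tests: the JSON keys (`color`, `make`, `model`) and the case-insensitive comparison are assumptions.

```python
import json
from typing import Dict


def check_attributes(response: str, expected: Dict[str, str]) -> Dict[str, bool]:
    """Compare each expected attribute against a JSON object in the model response.

    Assumes the prompt asked for JSON such as {"color": ..., "make": ..., "model": ...}.
    Returns a per-attribute pass/fail map; unparseable output fails every attribute.
    """
    try:
        parsed = json.loads(response)
    except json.JSONDecodeError:
        return {key: False for key in expected}
    if not isinstance(parsed, dict):
        return {key: False for key in expected}
    return {
        key: str(parsed.get(key, "")).strip().lower() == value.strip().lower()
        for key, value in expected.items()
    }


if __name__ == "__main__":
    truth = {"color": "red", "make": "Ford", "model": "Mustang"}
    print(check_attributes('{"color": "Red", "make": "Ford", "model": "Focus"}', truth))
```

Scoring attributes individually shows which attribute the model gets wrong rather than reporting a single pass or fail. In practice, models sometimes wrap JSON in Markdown code fences, so a real test may need to strip those before parsing.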

Roboflow will cover the API costs to run the tests each day.

Conclusion

GPT Checkup is an online tool that evaluates GPT-4 with Vision. The site runs a standard set of tests so you can see how GPT-4 with Vision performs over time. These tests cover a range of tasks, from object detection to object counting to document OCR.

You can use GPT Checkup to understand how one state-of-the-art model, GPT-4 with Vision, performs on tasks that may be relevant to an application you are building. You can evaluate not only how the model performs on a task today, but also how it performed in the past.

There is one notable limitation with GPT Checkup: the site only reports results for the tests it runs. We encourage you to use GPT Checkup as one of many ways to explore multimodal models. Automated testing is no substitute for hands-on experience using your own data.

GPT Checkup is not affiliated with OpenAI.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Jan 5, 2024). Launch: GPT-4 Checkup. Roboflow Blog: https://blog.roboflow.com/gpt-4-checkup/