Roboflow offers extensive deployment options for getting your model into production. But sometimes you just want to get something simple running on your development machine.

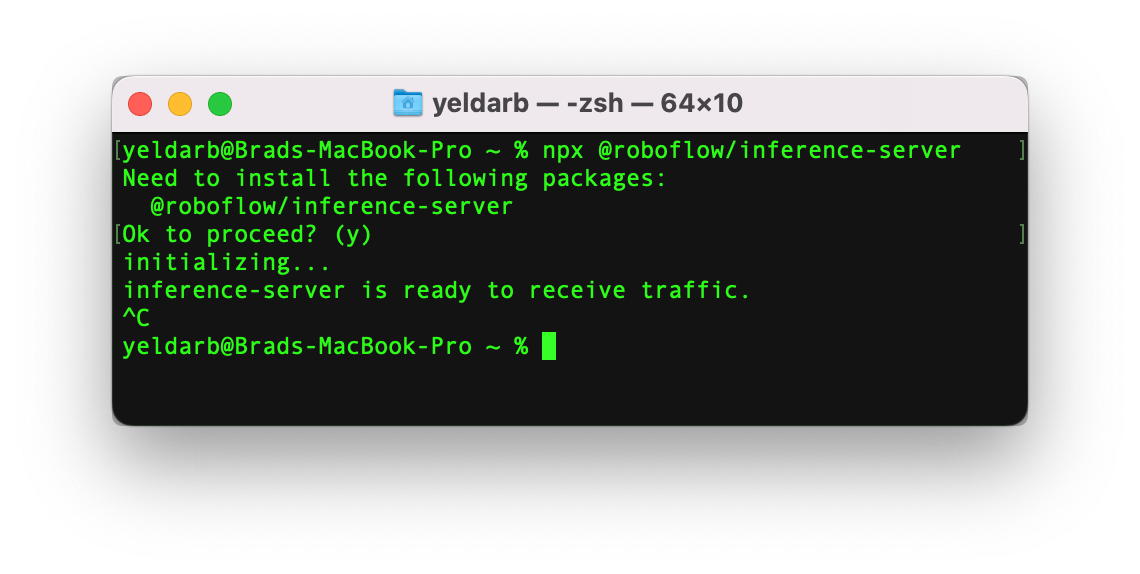

If you don't want to futz with installing CUDA and Docker, we've got you covered: the Roboflow inference server now runs via npx. On any machine that supports tfjs-node (including any modern 64-bit Intel, AMD, or Arm CPU, such as an M1 MacBook or a Raspberry Pi 4), simply run:

```shell
npx @roboflow/inference-server
```

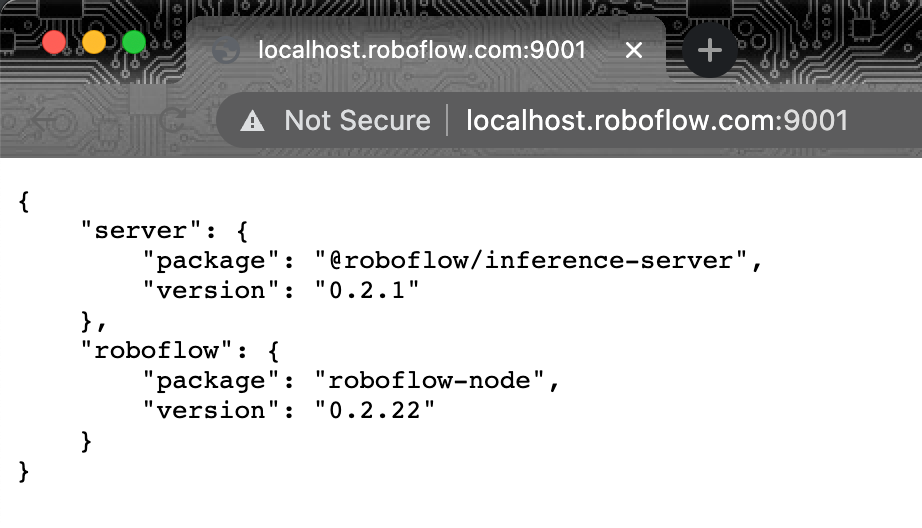

If this doesn't work, make sure you have Node.js installed on your machine (installation instructions). The command downloads and installs the inference server, then runs it locally on port 9001.
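If you'd like to check those prerequisites before launching, here is a minimal shell sketch. The function names `is_supported_arch` and `preflight` are hypothetical, and the sketch assumes the 64-bit Intel, AMD, and Arm CPUs mentioned above report themselves through `uname -m` as `x86_64`/`amd64` or `arm64`/`aarch64`. It only looks at what the kernel reports, so treat it as a rough check rather than a guarantee that tfjs-node will install.

```shell
#!/bin/sh
# Hypothetical preflight check before running `npx @roboflow/inference-server`.
# Assumption: 64-bit Intel/AMD CPUs report x86_64 or amd64 via `uname -m`,
# and 64-bit Arm CPUs (e.g. an M1 Mac) report arm64 or aarch64.

# Succeeds if the given machine name (default: this machine) looks like 64-bit x86 or Arm.
is_supported_arch() {
  case "${1:-$(uname -m)}" in
    x86_64|amd64|arm64|aarch64) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeeds if node and npx are on the PATH and the CPU architecture looks supported.
preflight() {
  for tool in node npx; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool not found: install Node.js first" >&2
      return 1
    fi
  done
  if ! is_supported_arch; then
    echo "unsupported CPU architecture: $(uname -m)" >&2
    return 1
  fi
  echo "node $(node --version) on $(uname -m): ready to run npx"
}
```

For example, `preflight && npx @roboflow/inference-server` only starts the server when the check passes.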

Now you can use any of our sample code or client SDKs to get predictions from your model.
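On the first run, npx has to download the package before the server starts listening, so a script that sends requests right away may want to wait for it. Below is a sketch of a hypothetical `wait_for_server` helper. It assumes that any HTTP response from port 9001, even an error status, means the server is up, since the post doesn't name a specific health-check endpoint.

```shell
#!/bin/sh
# Hypothetical helper: poll the local inference server until it answers, or give up.
# Assumption: any HTTP response (even an error status) means the server is listening.
wait_for_server() {
  url="${1:-http://localhost:9001/}"
  retries="${2:-60}"   # number of 1-second retries before giving up
  waited=0
  # Without -f, curl exits 0 on any HTTP response and non-zero when the
  # connection is refused, so its exit status tells us whether anything is listening.
  until curl -s -o /dev/null --max-time 2 "$url"; do
    if [ "$waited" -ge "$retries" ]; then
      echo "no response from $url after $retries retries" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "server is up at $url"
}
```

For example, `npx @roboflow/inference-server & wait_for_server http://localhost:9001/ 120` starts the server in the background and blocks until it responds or the retries run out.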

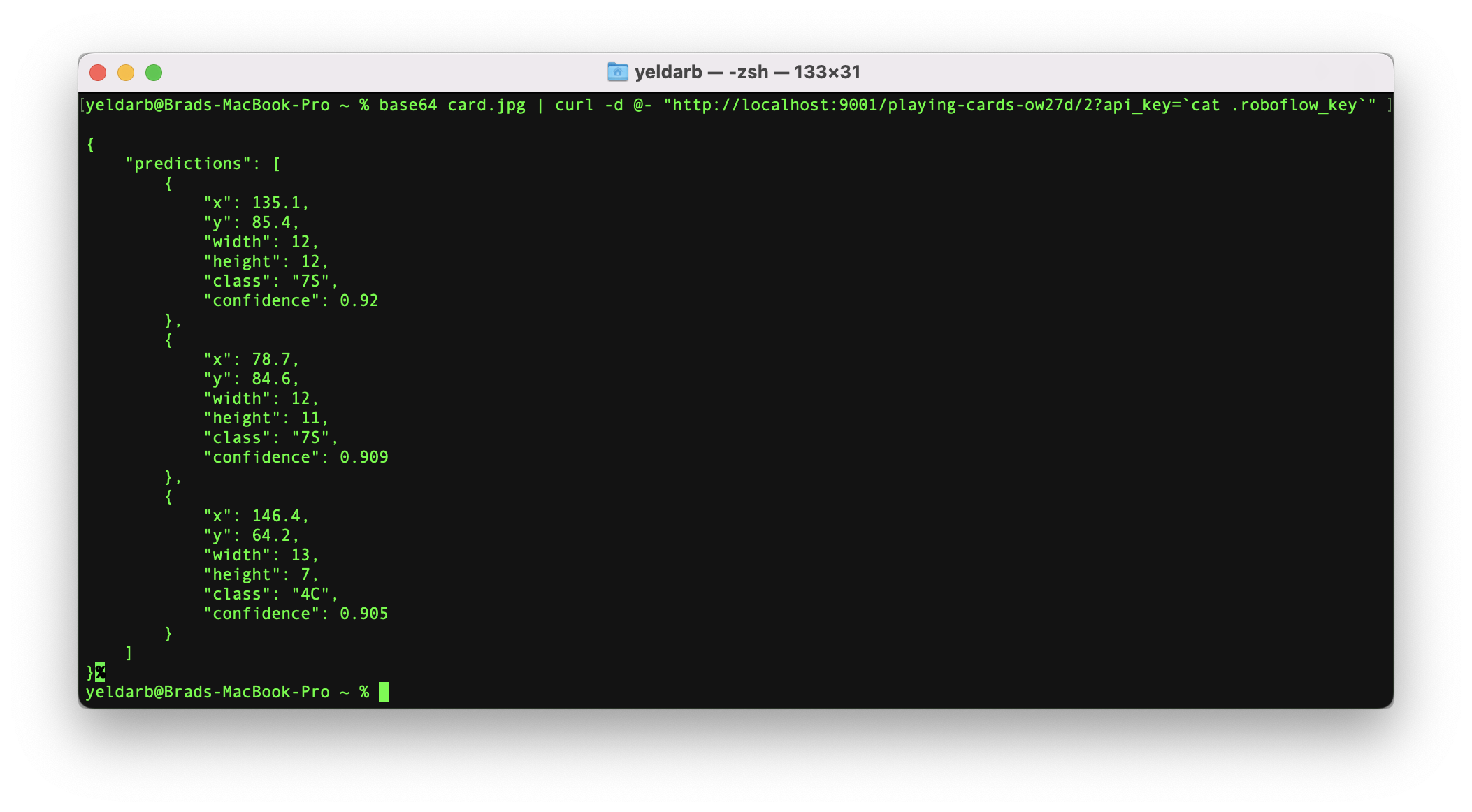

The simplest way to test it is with curl from the command line:

```shell
# save a picture of a playing card to card.jpg
# and put your Roboflow API key in a file called .roboflow_key
# then run:
base64 < card.jpg | curl -d @- "http://localhost:9001/playing-cards-ow27d/2?api_key=$(cat .roboflow_key)"
```
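If you plan to try several models, you could wrap that one-liner in a small function. This is a sketch, not part of Roboflow's tooling: `rf_infer` is a hypothetical name, and it assumes the URL pattern `<server>/<model-id>/<version>?api_key=<key>` generalizes from the example, where `playing-cards-ow27d` is the model ID and `2` is the version.

```shell
#!/bin/sh
# Hypothetical wrapper around the curl one-liner from the post.
# Usage: rf_infer IMAGE MODEL_ID VERSION [SERVER]
# Assumptions: the URL pattern <server>/<model-id>/<version>?api_key=<key>
# generalizes from the playing-cards example, and the API key lives in ./.roboflow_key.
rf_infer() {
  image="$1"
  model="$2"
  version="$3"
  server="${4:-http://localhost:9001}"
  if [ ! -f "$image" ]; then
    echo "image not found: $image" >&2
    return 1
  fi
  if [ ! -s .roboflow_key ]; then
    echo "put your Roboflow API key in .roboflow_key" >&2
    return 1
  fi
  # Strip whitespace such as a trailing newline left by an editor.
  key=$(tr -d '[:space:]' < .roboflow_key)
  # Send the base64-encoded image as the POST body; curl's -d @- reads it from
  # stdin and drops newlines, so base64's line wrapping is harmless.
  base64 < "$image" | curl -s -d @- "$server/$model/$version?api_key=$key"
}
```

Running `rf_infer card.jpg playing-cards-ow27d 2` sends the same request as the one-liner above.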

You can also use our Python package, or any of our example code in the language of your choice.

Finding Models to Use

You can train your own custom model or choose one of the thousands of pre-trained models shared by others on Roboflow Universe.

Build Computer Vision Applications

Now you can build a computer-vision-powered application! For an example, check out our livestream in which we build a blackjack strategy app.

Be sure to share what you build with us by tagging @roboflow on Twitter. If you build something neat, we might even send you some Roboflow swag.

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Sep 20, 2022). Launch: Test Computer Vision Models Locally. Roboflow Blog: https://blog.roboflow.com/locally-deploy-computer-vision/

Discuss this Post

If you have any questions about this blog post, start a discussion on the Roboflow Forum.