In the manufacturing sector, ensuring consistent product quality is paramount. However, traditional machine vision systems often struggle with the variability of real production environments, which makes that consistency hard to maintain.

I’m Dave Rosenberg, a solutions architect at Roboflow, and I have spent the last eight years in robotics and industrial automation. I have worked at a traditional machine vision company, and I have used machine vision both as a manufacturing engineer at one of the world’s largest electronics companies and as a deployment engineer for vision-guided robotics in the plastics industry. I know first-hand how frustrating and time-consuming it can be when a well-functioning system breaks down because of a lighting change from a dock door or vibration on a conveyor belt.

Many manufacturers have tried machine vision from incumbent vendors in the past, with mixed results. While traditional, rule-based vision systems can succeed at inspecting objects in a controlled environment, small factors such as changes in lighting, a camera bumped slightly out of position, or frequent product changeovers can cause failures or make these systems a poor fit for the application.

In this article, we are going to talk about how AI solutions that leverage state-of-the-art computer vision technologies can be used to solve tough visual inspection problems in manufacturing.

Limitations of Traditional Machine Vision Systems

Traditional machine vision refers to the use of cameras and predefined algorithms to automate visual inspection tasks in manufacturing, such as detecting defects, measuring dimensions, and guiding machines. These systems rely heavily on predefined rules and parameters, making them effective for structured, repetitive tasks in controlled environments, like inspecting parts with consistent dimensions, verifying the presence of components, and aligning objects on automated assembly lines. However, they struggle when faced with variability in lighting, product design, and positioning, often requiring recalibration and reprogramming to maintain accuracy. While these systems can be updated for design changes, doing so is time-consuming and often requires extended downtime for reprogramming and manual adjustment.

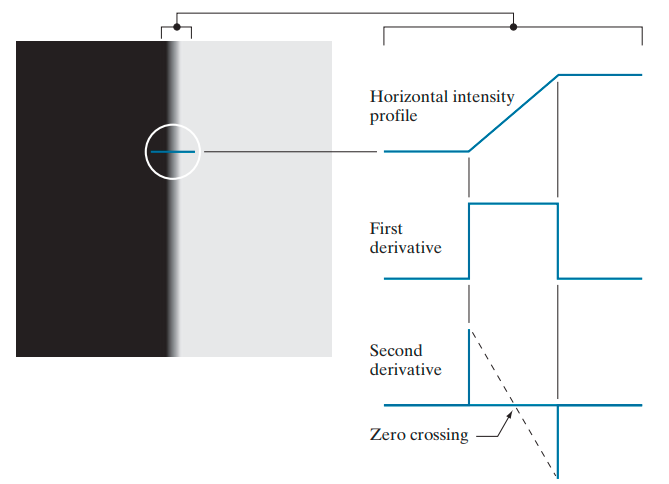

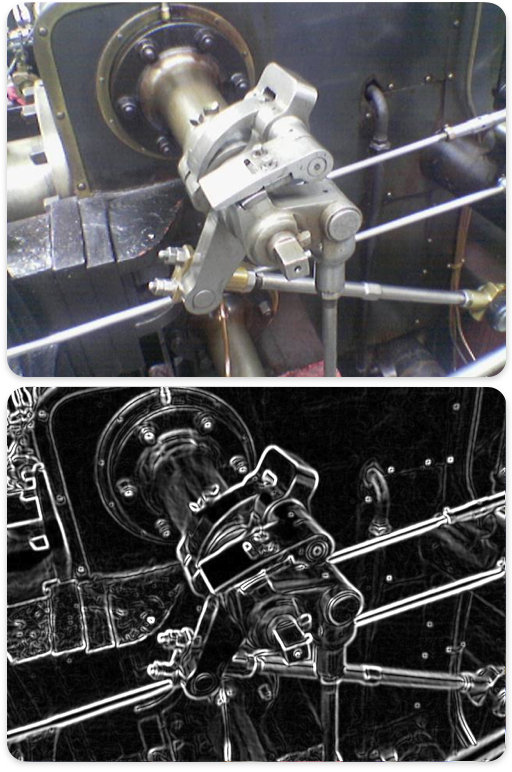

The images above demonstrate edge detection, a common rule-based machine vision method, including its application to a valve.

Image Left: (a) Two regions of constant intensity separated by an ideal vertical ramp edge. (b) Detail near the edge, showing a horizontal intensity profile together with its first and second derivatives. (Source: Digital Image Processing by R. C. Gonzalez & R. E. Woods) Images Right: A Sobel operator applied to a valve. (Source: Simpsons contributor, Edge detect)
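To make panel (b) and the Sobel result concrete, here is a minimal pure-Python sketch (no OpenCV required) that builds a hypothetical ramp-edge intensity profile, takes its first and second differences, and applies the standard 3x3 Sobel kernels to a small image made from that profile. In practice you would call a library routine such as OpenCV's `cv2.Sobel`; the pixel values here are illustrative assumptions.

```python
import math

# 3x3 Sobel kernels. SOBEL_X responds to horizontal intensity change,
# i.e. to vertical edges such as the ramp in panel (a).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def first_derivative(profile):
    """Forward difference f(x+1) - f(x) along a 1D intensity profile."""
    return [profile[x + 1] - profile[x] for x in range(len(profile) - 1)]


def second_derivative(profile):
    """Central second difference f(x+1) + f(x-1) - 2f(x) at interior points."""
    return [profile[x + 1] + profile[x - 1] - 2 * profile[x]
            for x in range(1, len(profile) - 1)]


def filter3x3(image, kernel):
    """Correlate a 3x3 kernel with the interior of a grayscale image.

    Border pixels are left at 0 to keep the sketch short.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(kernel[j][i] * image[y + j - 1][x + i - 1]
                            for j in range(3) for i in range(3))
    return out


def sobel_magnitude(image):
    """Gradient magnitude sqrt(gx^2 + gy^2) at every pixel."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return [[math.hypot(a, b) for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


# Hypothetical profile: dark region (10), linear ramp, bright region (190).
profile = [10, 10, 10, 70, 130, 190, 190, 190]
image = [list(profile) for _ in range(5)]  # the same row stacked 5 times

print(first_derivative(profile))   # [0, 0, 60, 60, 60, 0, 0]
print(second_derivative(profile))  # [0, 60, 0, 0, -60, 0]
print(sobel_magnitude(image)[2])   # [0.0, 0.0, 240.0, 480.0, 480.0, 240.0, 0.0, 0.0]
```

The first derivative is nonzero along the whole ramp, the second derivative spikes with opposite signs at the start and end of the ramp, and the Sobel magnitude peaks in the middle of the ramp. A rule-based inspection would then compare that magnitude against a fixed threshold, which is exactly the kind of hand-tuned parameter that breaks when conditions change.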

While traditional machine vision is ideal for stable environments, its limitations become apparent in dynamic production settings. Pairing traditional machine vision with deep learning models combines the strengths of both approaches: machine vision handles consistent, rule-based tasks, while deep learning handles complex variations and real-world conditions without constant reprogramming. This allows manufacturers to maintain high accuracy and efficiency even as production conditions change.

Common challenges include:

- Lighting Variations – Minor fluctuations in lighting can significantly impact inspection accuracy, because thresholds tuned under one lighting condition do not adapt when that condition changes.

- Positional Sensitivity – Slight shifts in camera positioning, due to vibrations or accidental bumps, can disrupt the system's ability to accurately assess products, leading to increased downtime for recalibration.

- Inflexibility to Design Changes – Modifications in product packaging or design often necessitate reprogramming of the system, resulting in operational delays and added costs.

- Limited Object Recognition – Traditional systems often struggle to accurately identify objects that vary in shape, color, or texture, especially when these variations were not anticipated during the system's initial programming.

- Scalability Issues – As production lines evolve, integrating new products or variations requires reprogramming and recalibration of traditional vision systems, hindering scalability.

- High Maintenance Requirements – Due to their sensitivity to environmental factors and rigid programming, traditional systems often demand regular maintenance and updates to remain functional, leading to increased operational downtime and expenses.
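A minimal sketch of why the first two challenges bite: a hypothetical rule-based presence check that averages pixel intensity inside a fixed region of interest (ROI) and compares it with a fixed threshold. The image, ROI, and threshold are made-up values chosen for illustration, not taken from any real system.

```python
BACKGROUND, LABEL = 40, 200
ROI = (3, 7, 3, 7)  # y0, y1, x0, x1 (end-exclusive), fixed at setup time
THRESHOLD = 120     # hypothetical value tuned on the baseline image


def make_image(size=10):
    """Dark background with a bright 4x4 'label' inside the ROI."""
    img = [[BACKGROUND] * size for _ in range(size)]
    for y in range(3, 7):
        for x in range(3, 7):
            img[y][x] = LABEL
    return img


def label_present(img):
    """Rule-based check: mean intensity in the fixed ROI above a fixed threshold."""
    y0, y1, x0, x1 = ROI
    pixels = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return sum(pixels) / len(pixels) > THRESHOLD


def dim(img, factor):
    """Simulate a global lighting change by scaling every pixel."""
    return [[p * factor for p in row] for row in img]


def shift_right(img, dx, fill=BACKGROUND):
    """Simulate a camera bump: the scene moves dx pixels to the right."""
    w = len(img[0])
    return [[row[x - dx] if 0 <= x - dx < w else fill for x in range(w)]
            for row in img]


baseline = make_image()
print(label_present(baseline))                  # True
print(label_present(dim(baseline, 0.5)))        # False: ROI mean drops to 100
print(label_present(shift_right(baseline, 3)))  # False: ROI mean drops to 80
```

In neither failing case is the label actually missing; both are false rejects caused by the rule's fixed assumptions about brightness and position.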

These limitations can lead to significant frustration among manufacturers, sometimes resulting in the abandonment of machine vision systems altogether.

How Deep Learning Models Solve These Challenges

Deep learning is a subset of artificial intelligence that utilizes neural networks to process and learn from extensive datasets, enabling systems to adapt and improve without explicit programming. Recent advancements, such as increased computational power, the availability of large datasets, and enhanced neural network architectures, have made deep learning more accessible and practical for industrial applications. In manufacturing, deep learning models can be retrained on new data as conditions change, and updated models can be trained and deployed quickly, even remotely. This adaptability reduces the need for constant reprogramming and enhances the system's robustness in handling complex and dynamic scenarios.

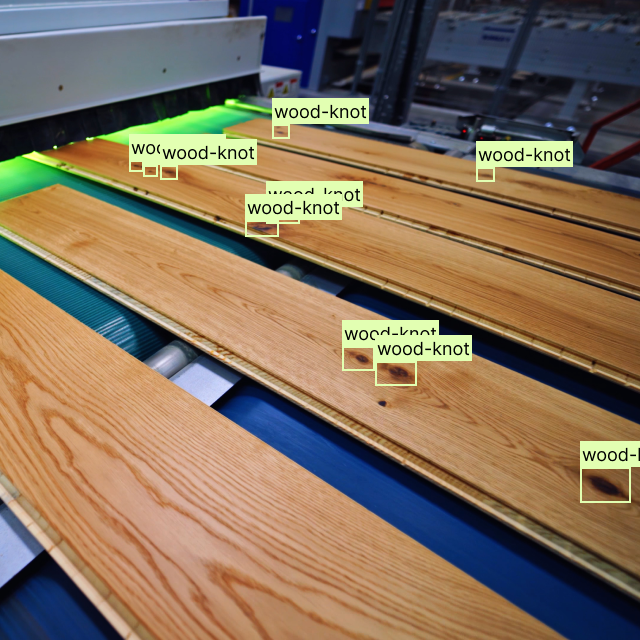

- Accurate Object Recognition – Whether your product is a plastic mold with subtle imperfections or a mechanical part with complex textures, Roboflow’s models can detect defects with high confidence, even if the product varies from batch to batch.

- Resistant to Lighting Changes – Roboflow’s deep learning models identify patterns and features rather than relying solely on raw pixel values. This makes them far more tolerant of lighting changes: accuracy can remain high in dim factory lighting or natural daylight, particularly when the training images include both conditions.

- Adaptable to Position Changes – Deep learning models can continue to perform even if the camera shifts due to vibration or an accidental bump, without needing recalibration. The two-stage processing in Roboflow Workflows is particularly effective: the first stage detects the object anywhere in the frame, while the second stage handles specific tasks such as optical character recognition (OCR) or presence detection.

- No Reprogramming for Product Changes – Deep learning models learn from large amounts of data (often millions to billions of data points) and don’t depend on a product’s exact position. This lets custom models detect the same defect across multiple product lines and packaging designs without manual updates, giving consistent performance even as products vary.

- Scalable and Easy to Update – Adding a new product or modifying an existing design is easy with Roboflow Workflows. You can train the model on the updated dataset and deploy it directly to production without complex coding or manual calibration.

- Lower Maintenance, Less Downtime – Roboflow’s models can be improved with new data instead of being recalibrated by hand, which reduces maintenance compared to traditional systems and lowers both costs and production interruptions. Predictions from the initial model can be reviewed and added to the training data, so accuracy improves with each model version.
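Reviewing predictions is often organized as a simple triage: confident results pass through, and uncertain ones are queued for a person to label before the next training run. The sketch below assumes a hypothetical `Prediction` record holding a detector score for a "crushed" class, and hypothetical cut-offs of 0.3 and 0.7. It is not Roboflow's API, only an illustration of the selection logic.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    image_id: str
    score: float  # hypothetical detector score for the "crushed" class, in [0, 1]


def select_for_review(predictions, low=0.3, high=0.7):
    """Return predictions whose score falls in the uncertain band [low, high).

    Scores >= high are treated as confident defects and scores < low as
    confidently defect-free; everything in between is the most useful
    material for a person to label and add to the next training set.
    """
    return [p for p in predictions if low <= p.score < high]


preds = [
    Prediction("img_001", 0.95),  # confident defect
    Prediction("img_002", 0.55),  # uncertain
    Prediction("img_003", 0.40),  # uncertain
    Prediction("img_004", 0.10),  # confidently fine
]
print([p.image_id for p in select_for_review(preds)])  # ['img_002', 'img_003']
```

Where to set the band is a judgment call: a wider band sends more images to reviewers but catches more of the model's blind spots.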

Deep Learning Approach: A Case Study

To illustrate the advantages of deep learning models, consider a scenario involving the inspection of packaging with varying box sizes and frequently changing design patterns.

Traditional systems would struggle under these conditions, requiring constant adjustments to maintain accuracy. Some contract manufacturers have avoided machine vision for this reason: even though the core product stays the same, the packaging changes frequently. Yet it’s still critical to ensure that crushed packaging, open or damaged boxes, and misaligned labels don’t make it out the door.

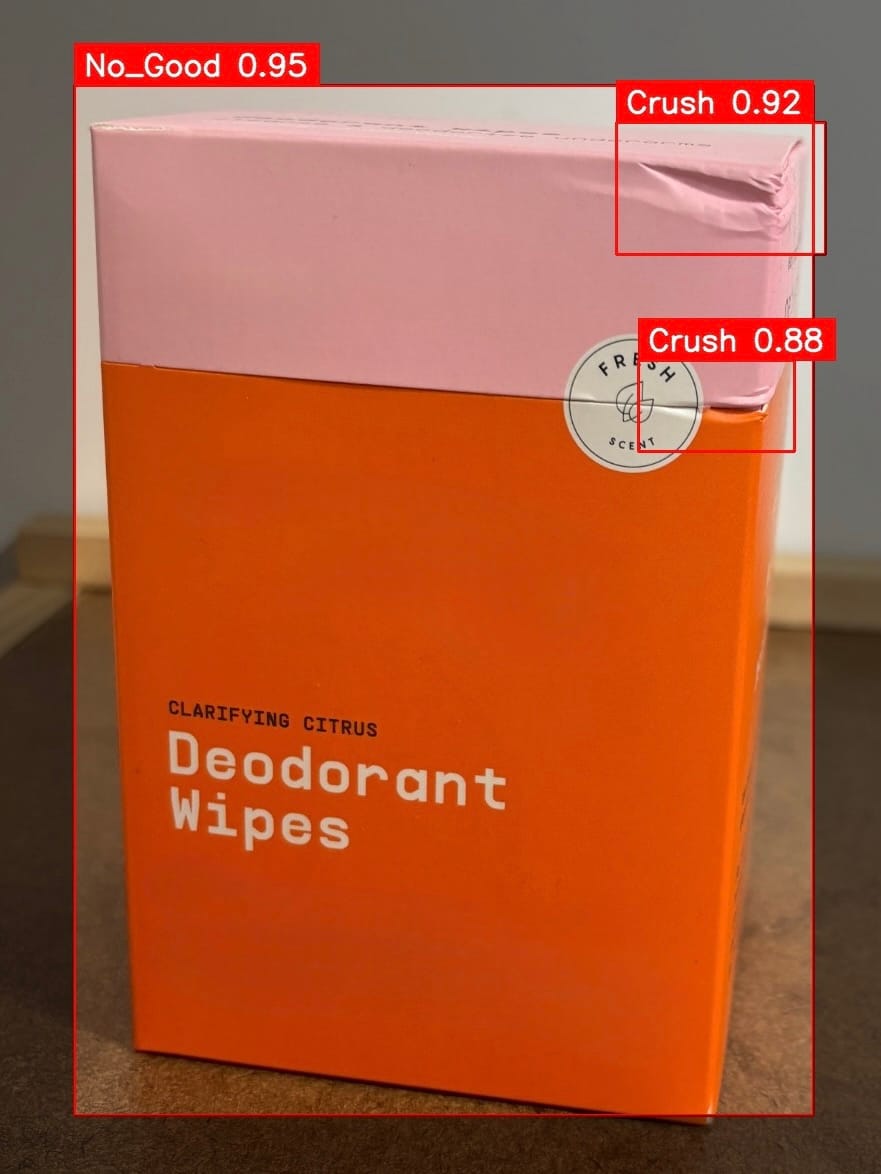

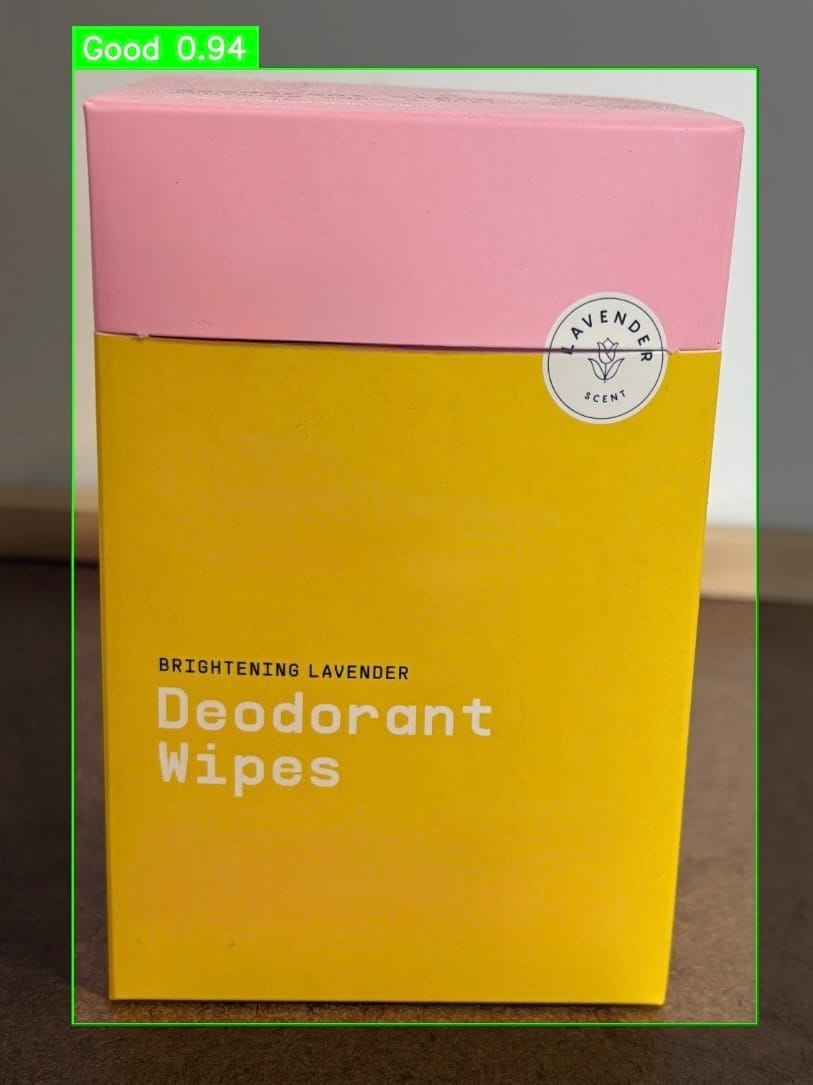

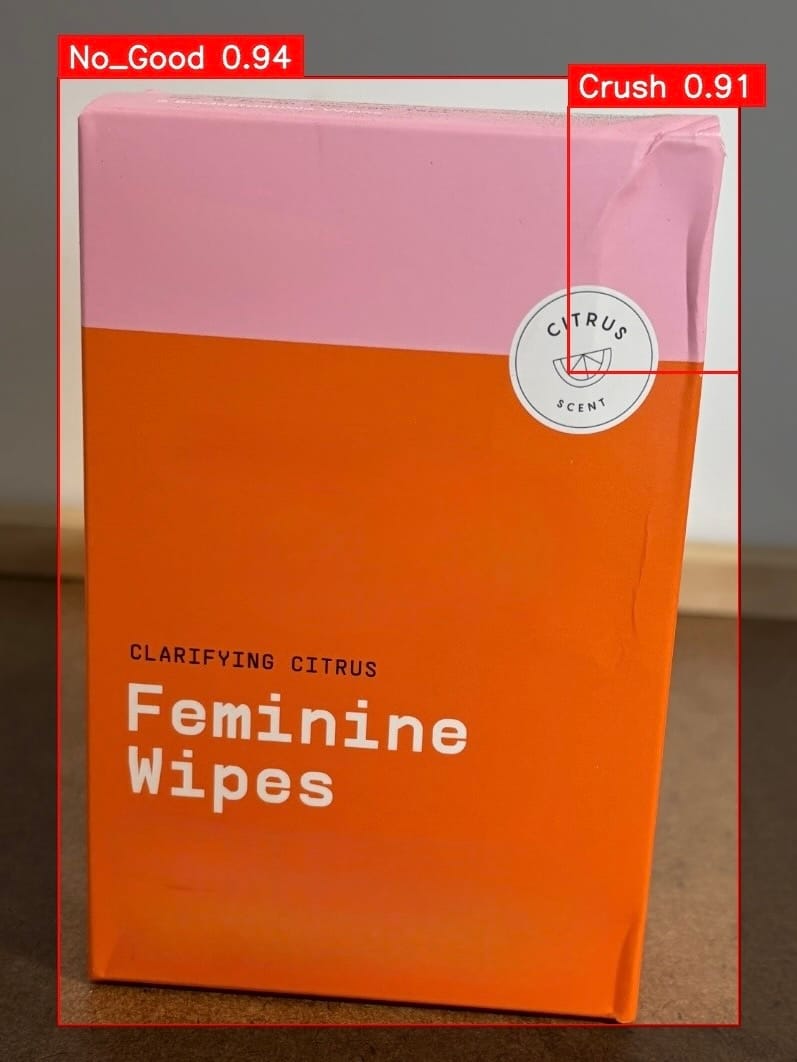

A Roboflow deep learning model was trained on variable packaging data to find crushed box tops. Unlike a traditional system, the model adapted, accurately identifying defects across different packaging designs, varying lighting, and different camera angles, all without continual reprogramming.

- False rejects decreased as the model learned to differentiate between acceptable variations and actual defects.

- Accuracy remained high even when new packaging designs were introduced.
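A false reject is a good unit the system flags as defective; the opposite error, a defective unit that passes, is often called an escape. Here is a minimal sketch of how the two rates are computed from inspection counts. The counts are hypothetical and are not results from this case study.

```python
def inspection_rates(good_rejected, good_passed, bad_rejected, bad_passed):
    """Return (false_reject_rate, escape_rate) from inspection counts.

    false_reject_rate: share of good units wrongly rejected.
    escape_rate: share of defective units wrongly passed.
    """
    good_total = good_rejected + good_passed
    bad_total = bad_rejected + bad_passed
    return good_rejected / good_total, bad_passed / bad_total


# Hypothetical counts for one shift; not data from the case study above.
frr, esc = inspection_rates(good_rejected=20, good_passed=980,
                            bad_rejected=48, bad_passed=2)
print(f"false reject rate: {frr:.1%}, escape rate: {esc:.1%}")
# false reject rate: 2.0%, escape rate: 4.0%
```

Tracking both rates matters because tightening a threshold to cut escapes usually raises false rejects, and vice versa.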

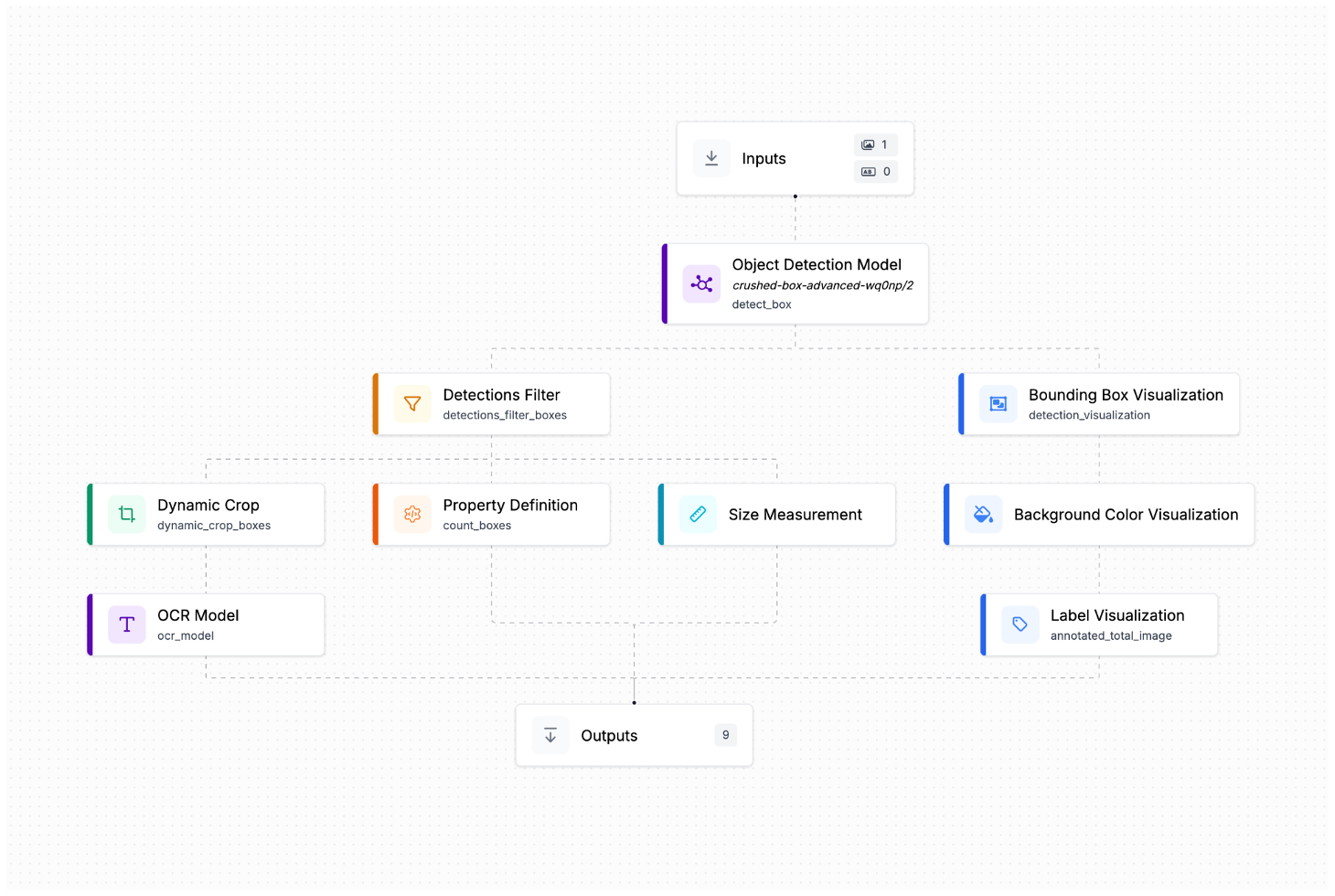

This model shows how crushed boxes can be detected with the same model across different packaging types. OCR was then run to identify which type of wipe box is damaged. (Wipe brand removed.)

In this Roboflow Workflow, the deep learning model identifies objects while a traditional machine vision step handles tasks like reading text, combining the strengths of both approaches for greater accuracy and adaptability.
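The detect-then-read pattern can be sketched in a few lines. The detector and OCR step below are hypothetical stand-ins (`fake_detector`, `fake_ocr`) for a trained model and a text reader, and the `Box` type and 0.5 confidence cut-off are assumptions. This shows the control flow only; it is not the Roboflow Workflows API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Image = List[List[int]]  # grayscale image as rows of pixels


@dataclass
class Box:
    x0: int
    y0: int
    x1: int
    y1: int
    label: str
    confidence: float


def crop(image: Image, box: Box) -> Image:
    """Cut the detected region out of the full frame (end-exclusive bounds)."""
    return [row[box.x0:box.x1] for row in image[box.y0:box.y1]]


def two_stage_inspect(image: Image,
                      detect: Callable[[Image], List[Box]],
                      read_text: Callable[[Image], str],
                      min_confidence: float = 0.5) -> List[Tuple[str, str]]:
    """Stage 1 finds objects anywhere in the frame; stage 2 runs on each crop."""
    results = []
    for box in detect(image):
        if box.confidence < min_confidence:
            continue  # drop weak detections before the second stage
        results.append((box.label, read_text(crop(image, box))))
    return results


# Hypothetical stand-ins for a trained detector and an OCR step.
def fake_detector(image: Image) -> List[Box]:
    return [Box(1, 1, 3, 3, "crushed_top", 0.91),
            Box(0, 0, 1, 1, "crushed_top", 0.20)]


def fake_ocr(region: Image) -> str:
    return f"{len(region)}x{len(region[0])} region"


frame = [[0] * 4 for _ in range(4)]
print(two_stage_inspect(frame, fake_detector, fake_ocr))
# [('crushed_top', '2x2 region')]
```

Because the second stage only ever sees the crop, it does not depend on where in the frame the box was found, which is one reason this design tolerates camera shifts.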

Expanding Opportunities with Deep Learning

The flexibility of AI models opens new avenues for automation in manufacturing environments that were previously considered unsuitable for machine vision systems. For instance:

- Contract Manufacturers – Facilities producing the same core product but with varying packaging designs can implement Roboflow's adaptive models to maintain stringent quality control, regardless of packaging changes.

- Dynamic Production Lines – Manufacturers with frequently changing product designs or customizations can leverage deep learning to ensure consistent inspection standards without extensive system overhauls. This would be ideal in the injection molding industry, where you need to identify the same molding defects, such as sink marks, short shots, or flash, while the molds change frequently, sometimes daily or weekly.

- Difficult-to-Inspect Products – Roboflow excels in identifying irregular shapes, complex textures, and varied colors, even for products that traditional systems fail to recognize.

How Roboflow is Enabling the Future of Machine Vision

If past experiences with traditional machine vision systems have led to skepticism, it's time to reconsider. Roboflow’s deep learning solutions address the core challenges that have historically hindered the effectiveness of machine vision in variable production environments.

Roboflow combines the adaptability of deep learning with the reliability of traditional machine vision, providing manufacturers with a system that not only works but evolves over time.

With Roboflow, you can:

- Apply traditional machine vision techniques – Roboflow supports applying any technique, from Kalman filters to edge detection, so you can use the right tool for the job. For example, Roboflow Workflows supports any of the 2,500 methods available in OpenCV.

- Apply deep learning AI to existing machine vision cameras – Existing machine vision cameras produce images in formats that Roboflow can read. For example, if you have a camera inspecting product labels, those same images can be used to train and deploy your own AI models for tasks like package inspection. Roboflow supports uploading images from almost any camera or video source.

- Train once, adapt forever – Roboflow’s deep learning models can keep improving: as new images from your line are added to your dataset, you can train updated model versions on them. If you decide to experiment with a new model architecture such as YOLOv12, you can retrain on your existing dataset and deploy the improved model without starting from scratch.

- Deploy fast, with minimal disruption – Roboflow’s cloud-based infrastructure makes it easy to deploy and update models without interrupting production, reducing downtime and increasing output.

- Achieve higher accuracy and lower manufacturing costs – By reducing false positives, improving defect detection, and eliminating constant reprogramming, Roboflow increases efficiency and reduces long-term costs.
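As an example of a classic technique from that toolbox, here is a minimal scalar Kalman filter sketch, assuming a random-walk model of a slowly drifting measurement, such as a part dimension read from each frame. The noise variances `q` and `r` and the sample readings are hypothetical and would be tuned per application; OpenCV also provides a general `cv2.KalmanFilter` class for multi-dimensional state.

```python
def kalman_1d(measurements, q=1e-3, r=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a slowly varying quantity (random-walk model).

    q: process noise variance (how fast the true value may drift)
    r: measurement noise variance (how noisy each reading is)
    x0, p0: initial estimate and its variance
    Returns the filtered estimate after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged; uncertainty grows by q.
        p = p + q
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical noisy width readings in millimetres.
readings = [50.3, 49.6, 50.9, 49.8, 50.2, 50.7, 49.5]
print([round(v, 2) for v in kalman_1d(readings, x0=readings[0])])
```

A small `q` relative to `r` makes the filter trust its running estimate more than any single reading, which smooths frame-to-frame noise at the cost of reacting more slowly to real changes.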

The Bottom Line

Traditional machine vision systems have long been effective for consistent, repetitive tasks in controlled environments. However, their reliance on predefined rules makes them less adaptable to variations in production settings. In contrast, AI-based vision solutions utilizing deep learning offer greater flexibility, learning from diverse data to handle variability without extensive reprogramming. This adaptability allows businesses to address previously challenging scenarios, enhancing efficiency and reducing downtime.

Explore Roboflow today and discover how deep learning-based machine vision can improve your accuracy, efficiency, and bottom line.

Cite this Post

Use the following entry to cite this post in your research:

Dave Rosenberg. (Mar 14, 2025). How Deep Learning Solves Machine Vision’s Biggest Frustrations. Roboflow Blog: https://blog.roboflow.com/machine-vision-limits/