NeuralHash is the perceptual hashing model that backs Apple's new CSAM (child sexual abuse material) reporting mechanism. It's an algorithm that takes an image as input and returns a 96-bit unique identifier (a hash) that should match for two images that are "the same" (apart from minor perturbations like JPEG artifacts, resizing, or cropping).

It's really important that the opposite is also true (two images that are not the same do not have matching NeuralHashes) because otherwise Apple's system could falsely accuse people of trading in CSAM.

TLDR: We found 2 distinct image pairs in the wild that have identical NeuralHashes. We've created a GitHub repository to track NeuralHash collisions found in real life.

It's mathematically impossible for that guarantee (different images never sharing a NeuralHash) to hold for every pair of images: by the pigeonhole principle, if you're trying to encode images that are larger than 96 bits into a 96-bit space, there are bound to be collisions. However, Apple has claimed that their system is robust enough that, in a test of 100 million images, they found just 3 false positives against their database of hashes of known CSAM images (I could not find a source for how many hashes are in this database).
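To put the pigeonhole argument in perspective, here is a rough back-of-the-envelope sketch. It assumes an idealized hash whose 96 bits are uniformly random, which NeuralHash is not (a perceptual hash is designed to make similar images collide), so treat the result as a baseline rather than a prediction. The function name is illustrative.

```python
from fractions import Fraction

HASH_BITS = 96  # NeuralHash output size, as described above


def expected_random_collisions(num_images: int, bits: int = HASH_BITS) -> float:
    """Expected number of colliding pairs among `num_images` images,
    ASSUMING an idealized hash with uniformly random output bits."""
    # Number of unordered pairs of distinct images.
    pairs = num_images * (num_images - 1) // 2
    # Each pair collides with probability 1 / 2**bits under the assumption.
    return float(Fraction(pairs, 2 ** bits))


# 100 million images compared against each other.
print(expected_random_collisions(100_000_000))
```

Under that assumption, chance collisions would be vanishingly rare at this scale, so any collisions seen in practice say more about how the model maps images to bits than about the size of the 96-bit space.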


Artificially Created NeuralHash Collisions

Yesterday, news broke that researchers had extracted the neural network Apple uses to hash images from the latest operating system and made it available for testing. Adversarial images with matching NeuralHashes were quickly created. Apple clarified that a second, independent server-side network verifies all matches before images are flagged for human review (and then escalated to law enforcement).

This photo of a girl was artificially modified to have the same NeuralHash as this photo of a dog.

We wrote yesterday about using OpenAI's CLIP model as a proof of concept for how this "sanity check" model might function to mitigate a DDoS attack against Apple's reviewers (or an attack on an individual user to frame them with benign images that appear to be CSAM to Apple's system).

Naturally Occurring NeuralHash Collisions

While the system's resistance to adversarial attacks is an important area to continue to probe, I was more interested in independently verifying Apple's stated false-positive rate on real-world images. Apple claims that their system "ensures less than a one in a trillion chance per year of incorrectly flagging a given account" -- is that realistic?

To test this, I searched the publicly available ImageNet dataset for collisions between semantically different images. I generated NeuralHashes for all 1.43 million images and searched for organic collisions. By taking advantage of the birthday paradox and a collision search algorithm that runs in O(n log n) time instead of the naive O(n^2), I was able to compare the NeuralHashes of over 2 trillion image pairs in just a few hours.

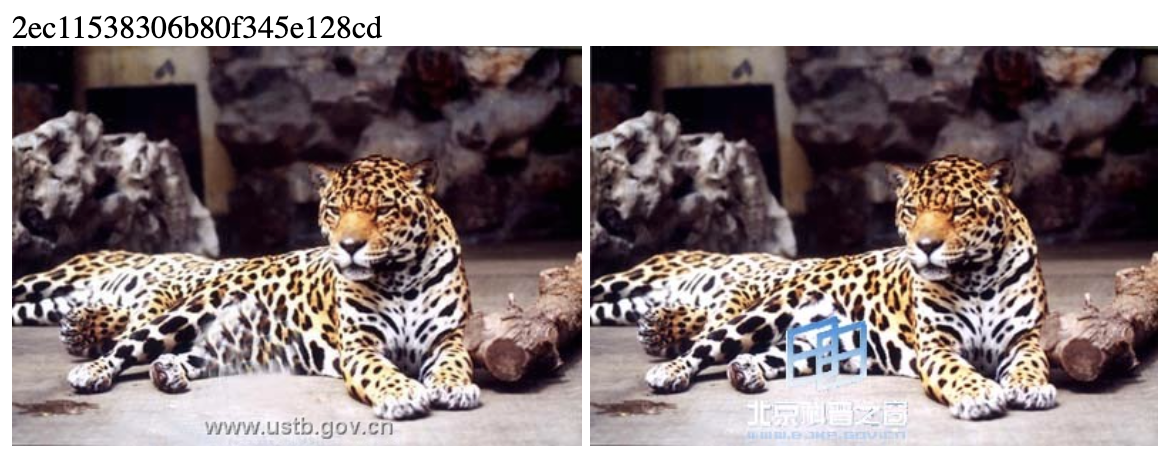
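The post does not say which collision search algorithm was used. One common way to avoid comparing every pair is to sort the hashes and scan for equal neighbors, sketched below. The function name and the example hashes are made up for illustration.

```python
from itertools import groupby
from operator import itemgetter
from typing import Dict, List, Tuple


def find_collisions(records: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Return {hash: [image_ids]} for every hash shared by 2+ images.

    `records` holds (neuralhash_hex, image_id) tuples.
    """
    # Sorting is the O(n log n) step; equal hashes end up next to each other.
    ordered = sorted(records, key=itemgetter(0))
    collisions: Dict[str, List[str]] = {}
    # A single linear pass then groups adjacent records with the same hash.
    for hash_hex, group in groupby(ordered, key=itemgetter(0)):
        image_ids = [image_id for _, image_id in group]
        if len(image_ids) > 1:
            collisions[hash_hex] = image_ids
    return collisions


# Made-up 96-bit hashes (24 hex characters), for illustration only.
example = [
    ("aaaaaaaaaaaaaaaaaaaaaaaa", "cat.jpg"),
    ("bbbbbbbbbbbbbbbbbbbbbbbb", "tree.jpg"),
    ("aaaaaaaaaaaaaaaaaaaaaaaa", "dog.jpg"),
]
print(find_collisions(example))
```

Grouping with a dictionary keyed on the hash would work just as well and avoids the sort; the sorted version is shown because it matches the O(n log n) cost mentioned above.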

I found many (8,272) truly duplicated images (their file contents matched bit for bit) and 595 collisions between files that were not byte-identical. Upon manual inspection, though, most of those were examples of the algorithm doing its job: it had successfully detected slight perturbations like re-encodings, resizes, minor crops, color changes, watermarks, etc.

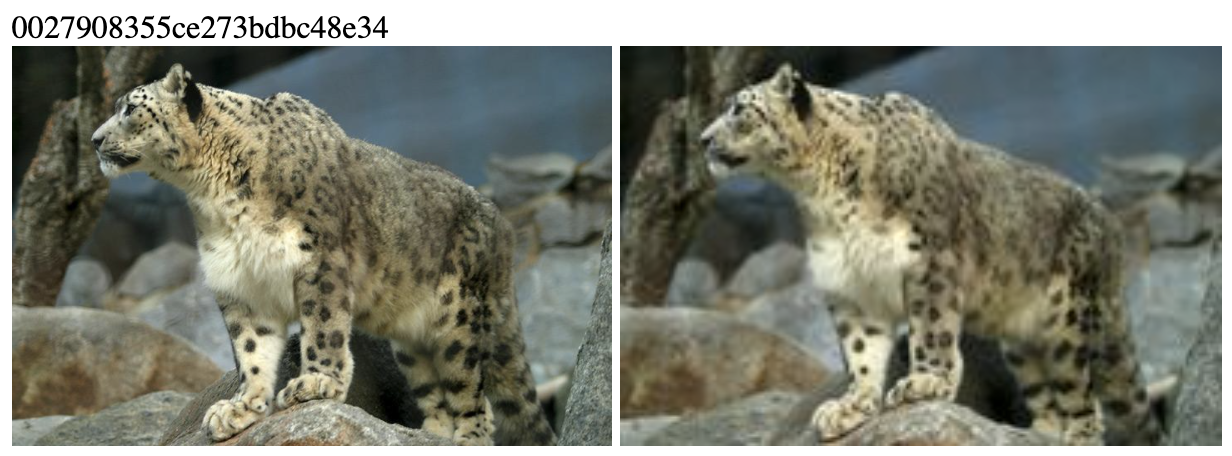

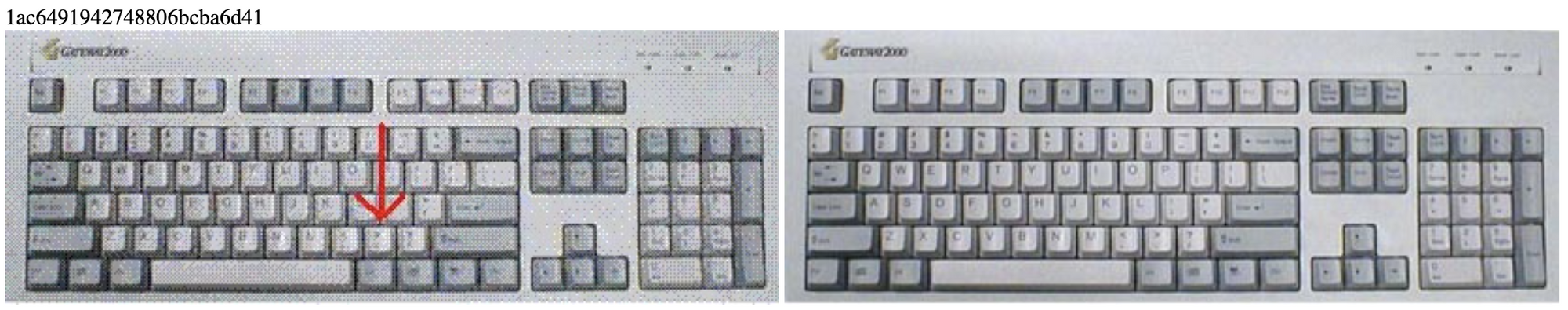

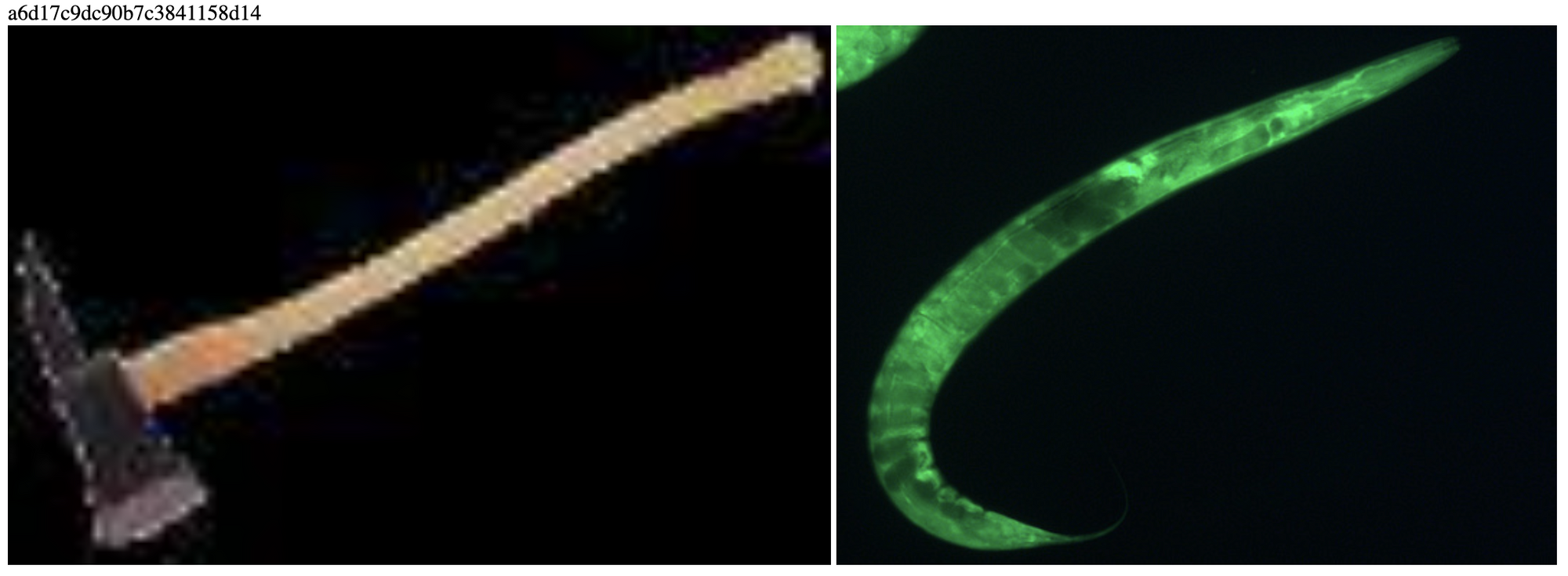
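The first triage step described above, separating byte-identical files from genuinely different files that share a NeuralHash, can be sketched with an ordinary cryptographic hash of the file contents. This is an assumption about how such a check could be done, not the author's code. The helper name is hypothetical, and the example uses in-memory bytes instead of real image files.

```python
import hashlib
from typing import Dict, List


def cluster_by_content(group: Dict[str, bytes]) -> List[List[str]]:
    """Partition images that share a NeuralHash into byte-identical clusters.

    `group` maps a file name to that file's raw bytes.
    """
    by_digest: Dict[str, List[str]] = {}
    for name, data in group.items():
        # Identical bytes always give the same SHA-256 digest.
        digest = hashlib.sha256(data).hexdigest()
        by_digest.setdefault(digest, []).append(name)
    return list(by_digest.values())


# Placeholder bytes standing in for image files with the same NeuralHash.
same_neuralhash = {"a.jpg": b"\x01\x02", "a_copy.jpg": b"\x01\x02", "b.jpg": b"\x03"}
print(cluster_by_content(same_neuralhash))
```

A group that collapses into a single cluster is an exact duplicate. A group with more than one cluster still needs manual inspection to decide whether the files are perturbed copies of one image or semantically different images.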

There were 2 examples of actual collisions between semantically different images in the ImageNet dataset.

This is a false-positive rate of 2 in 2 trillion image pairs (1,431,168^2). Assuming the NCMEC database has more than 20,000 images, this represents a slightly higher rate than Apple had previously reported. But, assuming there are fewer than a million images in that database, it's probably in the right ballpark.
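The comparison above can be reproduced with a few lines of arithmetic. The sketch below uses only figures quoted in this post. The size of the NCMEC database is unknown, so the code solves for the database size at which Apple's reported rate would equal the ImageNet rate. It also shows the result if pairs are counted as unordered (n(n-1)/2) rather than n^2, which is an alternative way of counting added here for comparison, not something the post itself does.

```python
IMAGENET_IMAGES = 1_431_168       # images hashed in this experiment
SEMANTIC_COLLISIONS = 2           # collisions between semantically different images
APPLE_TEST_IMAGES = 100_000_000   # size of Apple's reported test
APPLE_FALSE_POSITIVES = 3         # false positives Apple reported


def imagenet_rate(ordered_pairs: bool = True) -> float:
    """Collisions per image pair, counting pairs as n^2 or as n(n-1)/2."""
    n = IMAGENET_IMAGES
    pairs = n * n if ordered_pairs else n * (n - 1) // 2
    return SEMANTIC_COLLISIONS / pairs


def break_even_database_size(rate: float) -> float:
    """Database size at which Apple's 3-in-100M result implies `rate` per pair."""
    return APPLE_FALSE_POSITIVES / (APPLE_TEST_IMAGES * rate)


for label, ordered in (("n^2 pairs", True), ("n(n-1)/2 pairs", False)):
    rate = imagenet_rate(ordered)
    size = break_even_database_size(rate)
    print(f"{label}: rate = {rate:.2e}, break-even database size = {size:,.0f}")
```

The break-even size comes out on the order of 30,000 hashes with the n^2 count and about half that with unordered pairs, so the conclusion is sensitive both to how pairs are counted and to the unknown size of the database.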

Additionally, the collisions I found appear dissimilar to ones that might be present in the CSAM database – they both have solid colored backgrounds. The false-positive rate on images in the actual target domain may be even lower since they are likely to more closely match the training data.

Natural NeuralHash Collisions Found Elsewhere

I've started a GitHub repository of known real-world NeuralHash collisions. As of the time of publishing, I'm unaware of any other exact matches in the wild, but a Twitter thread by @SarahJamieLewis found some that vary by only 2-3 bits.

Please submit a PR with any you find in the wild (with their provenance and ideally with proof that they existed prior to the leak of the NeuralHash weights which led to the proliferation of artificially generated collisions).
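For near matches like the ones mentioned above, which differ by only a few bits, the usual measure is the Hamming distance between the two hashes. Here is a minimal sketch, assuming hashes are written as 24-character hex strings (96 bits). The example values are made up and are not real NeuralHashes.

```python
def hamming_distance(hash_a_hex: str, hash_b_hex: str) -> int:
    """Count the bit positions where two equal-length hex hashes differ."""
    if len(hash_a_hex) != len(hash_b_hex):
        raise ValueError("hashes must have the same length")
    # XOR leaves a 1 bit wherever the hashes disagree; count those bits.
    return bin(int(hash_a_hex, 16) ^ int(hash_b_hex, 16)).count("1")


# Made-up hashes that differ only in their two lowest bits.
first = "000000000000000000000000"
second = "000000000000000000000003"
print(hamming_distance(first, second))
```

A distance of 0 is an exact collision of the kind tracked in the repository; small nonzero distances are near misses.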

NeuralHash Collisions

Apple's NeuralHash perceptual hash function performs its job better than I expected, and the false-positive rate on pairs of ImageNet images is plausibly similar to what Apple found between their 100M test images and the unknown number of NCMEC CSAM hashes. More study should be done to determine the actual false-positive rate and calculate a realistic probability of innocent users being flagged. After all, Apple now has over 1.5 billion users, so even a low-probability event becomes more likely to occur somewhere in a pool that large.
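As a rough illustration of how a per-account probability scales with the number of users, the sketch below plugs in the two figures quoted in this post: Apple's "one in a trillion" per account per year and 1.5 billion users. It treats each user as one account and each account as independent, both of which are simplifying assumptions. The higher rate in the second call is purely hypothetical, included to show how sensitive the result is.

```python
import math


def expected_flagged(per_account_probability: float, accounts: int) -> float:
    """Expected number of wrongly flagged accounts per year."""
    return per_account_probability * accounts


def prob_at_least_one(per_account_probability: float, accounts: int) -> float:
    """Probability that at least one account is wrongly flagged in a year,
    ASSUMING accounts are independent: 1 - (1 - p)^N."""
    # log1p/expm1 keep the result accurate when p is tiny.
    return -math.expm1(accounts * math.log1p(-per_account_probability))


USERS = 1_500_000_000  # figure quoted above, treated as one account per user

for p in (1e-12, 1e-9):  # Apple's stated bound, then a hypothetical worse rate
    print(p, expected_flagged(p, USERS), prob_at_least_one(p, USERS))
```

The point of the exercise is that the outcome swings widely with the per-account rate, which is why an independently measured false-positive rate matters.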

The alternative attack vector of artificially created malicious images (benign images whose NeuralHash matches one of the CSAM images in NCMEC's database) should be explored further. Since we published our post yesterday, the quality of the artificial images has improved significantly – as yet, it's unclear how hard it would be to craft a single image that fooled both the client-side NeuralHash and Apple's server-side backup algorithm (while also appearing genuine to the human reviewers). It would not be good if the resilience of the system depended on the database hashes and the server-side model weights remaining secret.

Perhaps the most concerning part of the whole scheme is the database itself. Since the original images are (understandably) not available for inspection, it's not obvious how we can trust that a rogue actor (like a foreign government) couldn't add non-CSAM hashes to the list to root out human rights advocates or political rivals. Apple has tried to mitigate this by requiring two countries to agree to add a file to the list, but the process for this seems opaque and ripe for abuse.

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Aug 19, 2021). ImageNet contains naturally occurring NeuralHash collisions. Roboflow Blog: https://blog.roboflow.com/neuralhash-collision/