How do I build my application logic so that I can use my model at scale? That’s a great question.

To make the most of the Roboflow Serverless Hosted API and our Dedicated Deployment offerings, you can make parallel API requests. This involves making several requests at once rather than processing images sequentially.

In this guide, we are going to walk through how to scale vision inference requests in parallel with the Roboflow Serverless Hosted API or a Dedicated Deployment. These hosting offerings are ideal for running Roboflow Workflows that use models trained on or uploaded to Roboflow in scenarios where you need real-time results.

If you have a large volume of image or video data and do not need results in real time, you should try Batch Processing.

Without further ado, let’s get started!

Considerations for making API requests at scale

The Roboflow Serverless API scales with use. The more you use, the more our infrastructure scales. This allows you to integrate Roboflow as a dependable part of your system whether you are processing a consistent amount of data or need to work in bursts. When you use less, our infrastructure will scale down appropriately.

With that said, there are a few considerations to keep in mind when designing a system that uses a model or Workflow at scale.

First, there is a warm up time on API calls. When you first start using your Workflow with the Roboflow Serverless Hosted API, workers need to be spun up and initialised with your model and Workflow. The amount of time warm up takes varies depending on the complexity of your Workflow, but should take no more than a few seconds.

We recommend making a few dozen API call requests at the start of your scripts as “warm up” requests. These will be slower as infrastructure spins up.

Our Serverless API can handle up to 20 RPS. This means that you can make twenty requests per second with images to process. Our API will then process your request and return a result. If you make too many requests, you will receive a 429 error indicating that you should reduce the number of requests that you make.

All requests are metered. Your API use will show up in your billing dashboard.

If a request cannot be processed in ten seconds, the request will time out.

How to make parallel requests to a Workflow

Let’s walk through how to make parallel requests to a Workflow.

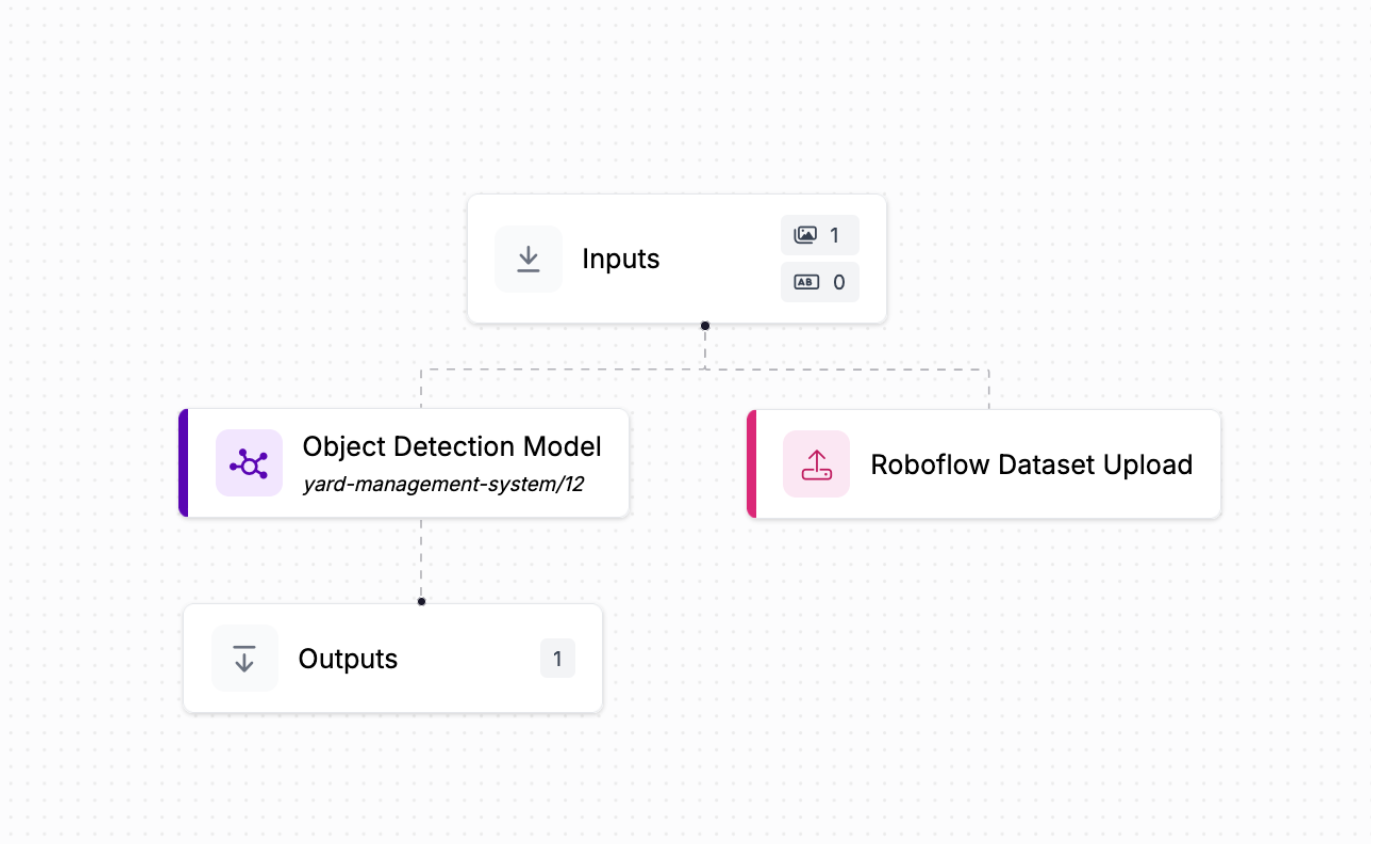

To get started, navigate to Roboflow Workflows. Create a new Workflow or open an existing one. For this guide, we are going to use a Workflow that calls an object detection model trained on Roboflow and returns the bounding box coordinates from the model. The Workflow will also send all images on which we run inference to a Roboflow dataset for active learning.

Every Workflow has a Hosted Serverless API endpoint that you can use to call the model. This is available from the “Deploy” button in the top right of the Workflows editor. Your code snippet will look something like this:

from inference_sdk import InferenceHTTPClient

client = InferenceHTTPClient(

api_url="https://detect.roboflow.com",

api_key="API_KEY"

)

result = client.run_workflow(

workspace_name="WORKSPACE_NAME",

workflow_id="WORKFLOW_ID",

images={

"image": "YOUR_IMAGE.jpg"

},

use_cache=True # cache workflow definition for 15 minutes

)

This script makes single requests at a time. We can modify it to make parallel requests.

To make concurrent requests, we can use the concurrent.futures Python package.

Create a new Python script and add the following code:

import concurrent.futures

from inference_sdk import InferenceHTTPClient

import os

import time

DATASET_LOCATION = "./data"

client = InferenceHTTPClient(

api_url="https://detect.roboflow.com",

api_key="API_KEY"

)

def run_workflow(image):

return client.run_workflow(

workspace_name="roboflow-universe-projects",

workflow_id="custom-workflow-7",

images={

"image": image

},

use_cache=True # cache workflow definition for 15 minutes

), image

def make_requests(images):

start = time.time()

with concurrent.futures.ThreadPoolExecutor(max_workers=10) as executor:

futures = [executor.submit(run_workflow, image) for image in images]

results = {}

for future in concurrent.futures.as_completed(futures):

result, image = future.result()

results[image] = result

print(f"Time taken: {time.time() - start}")

return results

make_requests(os.listdir(f"{DATASET_LOCATION}/valid/images"))

In this code, we define a few functions:

- run_workflow: Runs a Workflow with an input image. You can configure this to accept multiple inputs if relevant.

- make_requests: Accepts a list of images and processes them in parallel. The max_workers argument limits the number of concurrent requests that will be made to 10.

We then start making requests. The first requests will be a bit slower as our infrastructure is provisioned with your Workflow. Then, you can expect a consistent level of performance.

Note that you may be rate limited if you try to make too many requests at a time. The code above does not guard against rate limiting, although setting a max_workers value of 10 ensures you stay within the acceptable use limits of our API.

To ensure data integrity, we recommend implementing exponential backoffs.

Benchmarking performance

You can benchmark what performance you can expect after warm up using the Roboflow Inference CLI:

inference benchmark api-speed --api-key API_KEY --workflow-id=WORKFLOW_ID --workspace-name=WORKSPACE_NAME --host https://detect.roboflow.com --rps=10 --benchmark_requests=200 --warm_up_requests=50

Above, set your Roboflow API key, your Workflow ID, and your Workspace name. This command will make 50 warm up requests then 200 benchmark requests. The 200 benchmark requests will be made at 10 requests per second (RPS).

To benchmark on a Dedicated Deployment, replace the --host argument with the API URL associated with your Deployment.

As the script runs, you will be see several performance numbers, including:

- Average latency, measured in milliseconds.

- RPS that could be handled (this should be close to the –rps argument you provide in an input).

- The p75 and p90 latencies.

- The request error rate.

Conclusion

You can make parallel requests to the Roboflow Serverless Hosted API. This will allow you to process images concurrently instead of sequentially. Our infrastructure is designed to scale up and down with use. This means that our infrastructure can handle concurrent requests with ease, up to our acceptable use limits.

In this guide, we covered how to use the concurrent.futures library to make concurrent requests. We also discussed the “warm up time” you may observe when making your first few requests to our Serverless API, and the importance of setting a max_workers value to ensure you do not make too many requests.

If you have questions about scaling vision systems to hundreds of thousands or millions of API calls, contact the Roboflow sales team.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 19, 2025). Parallel Inference Requests with Roboflow. Roboflow Blog: https://blog.roboflow.com/parallel-inference/