GPT-5 is in #1 on our Vision Checkup test of 80+ hand-written prompts oriented around real-world tasks. The assessment covers everything from analyzing assembly lines for overhanging objects to analyzing and understanding complex grid layouts such as the position pieces on a chess board.

All of the first five places on our vision task leaderboard are occupied by OpenAI models, followed then by Gemini 2.5, Anthropic’s Claude 4 models, and more. This speaks to the quality of OpenAI’s training processes, especially for vision.

We published a blog post yesterday showing our initial results, but now that we have had time to play with the model more we have more reflections to share.

Our impressions of GPT-5, generally, are that the model does not represent a step-change increase in performance on visual tasks. Is the model a good daily driver for multimodal vision tasks? Yes! Does the model perform well at real-world tasks? Yes! Are there areas where the model hasn't improved? Yes. We'll explore our findings related to strengths and weaknesses below.

GPT-5 in Action: Strong Reasoning Potential

GPT-5 is consistently good at VQA problems and understanding how objects relate spatially. It shows limited performance on object detection, but the model was not explicitly trained for that task so the extent to which this is meaningful is somewhat limited.

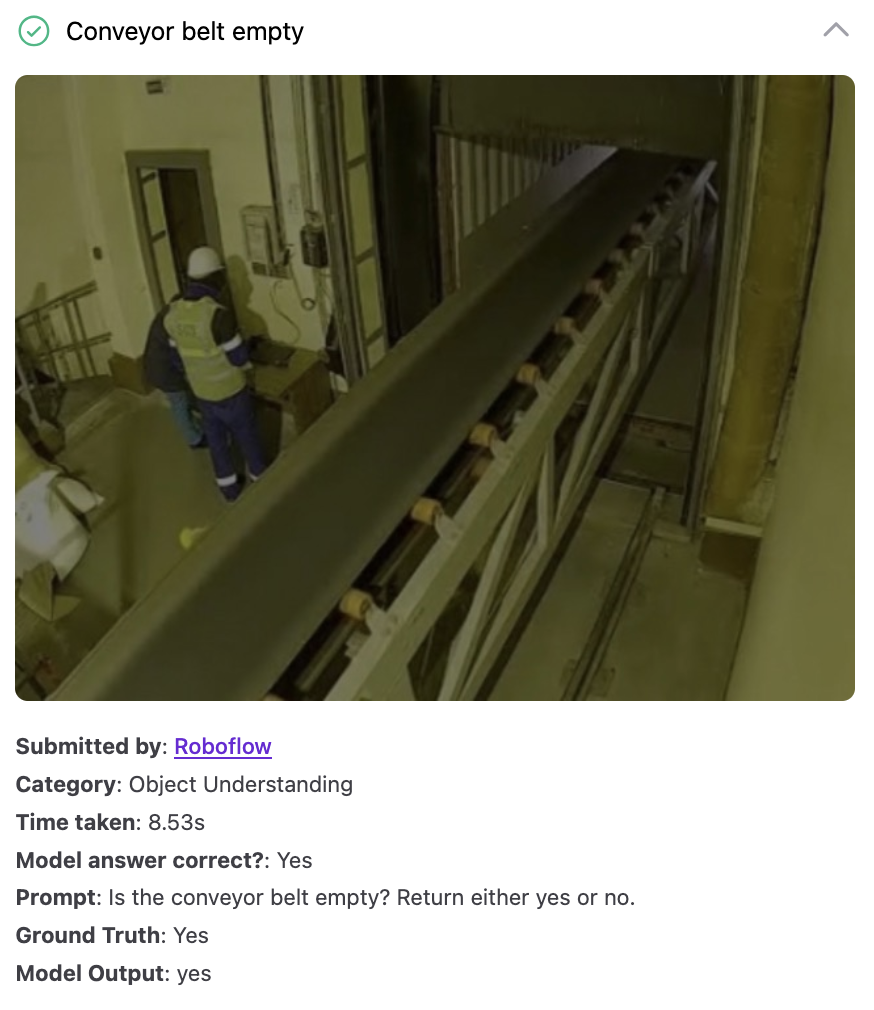

Two prompts where GPT-5 does well.

In the right example above, we ask a complex, multi-step question about the colour of the object that the robotic arm is closest to picking up. The model successfully answers "black". This is complex because there are several objects around the robotic arm. Success on this task shows the model's strong spatial reasoning capabilities.

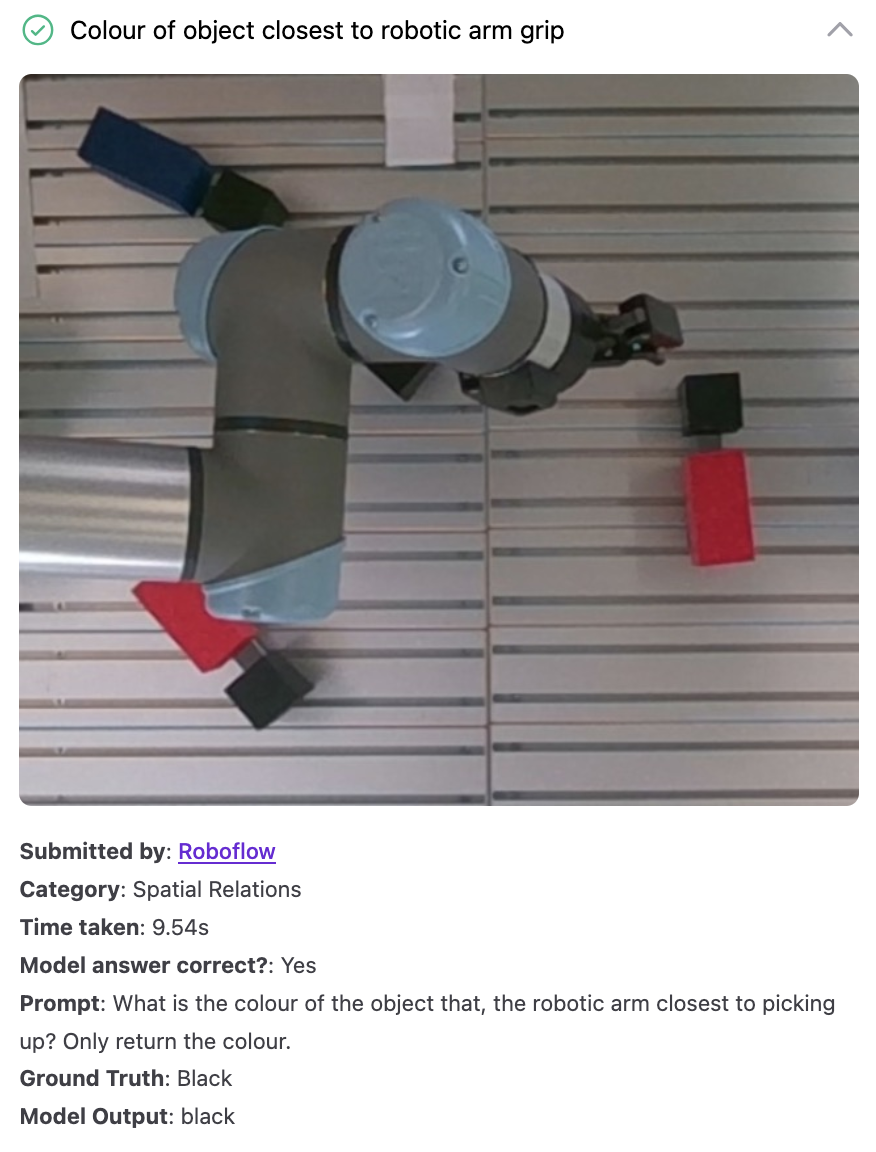

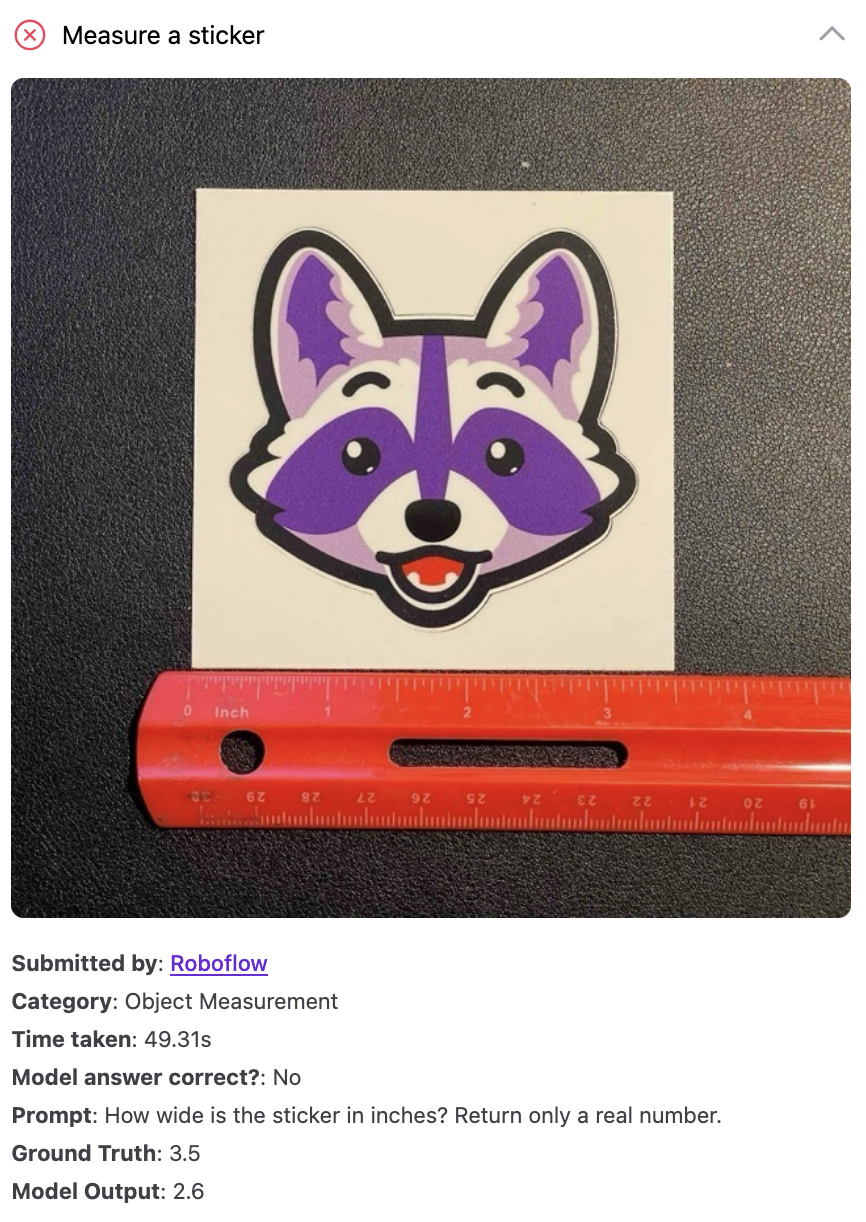

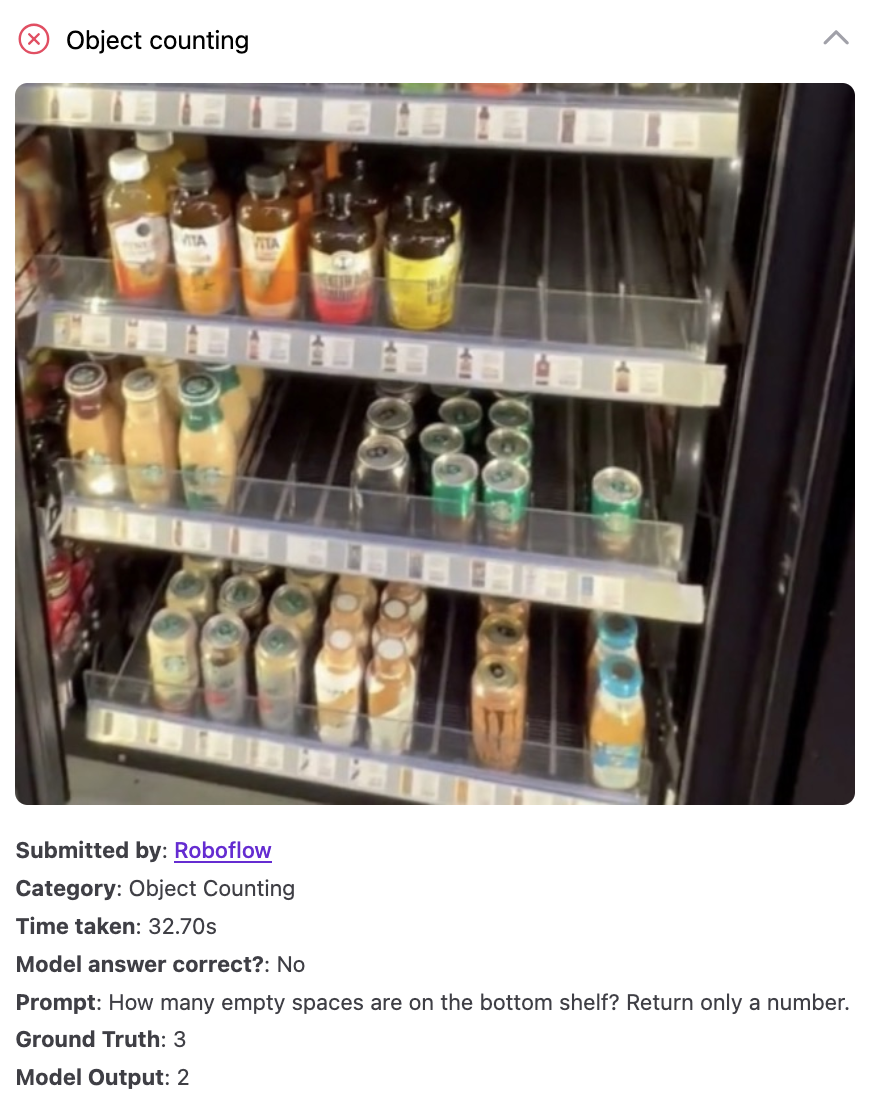

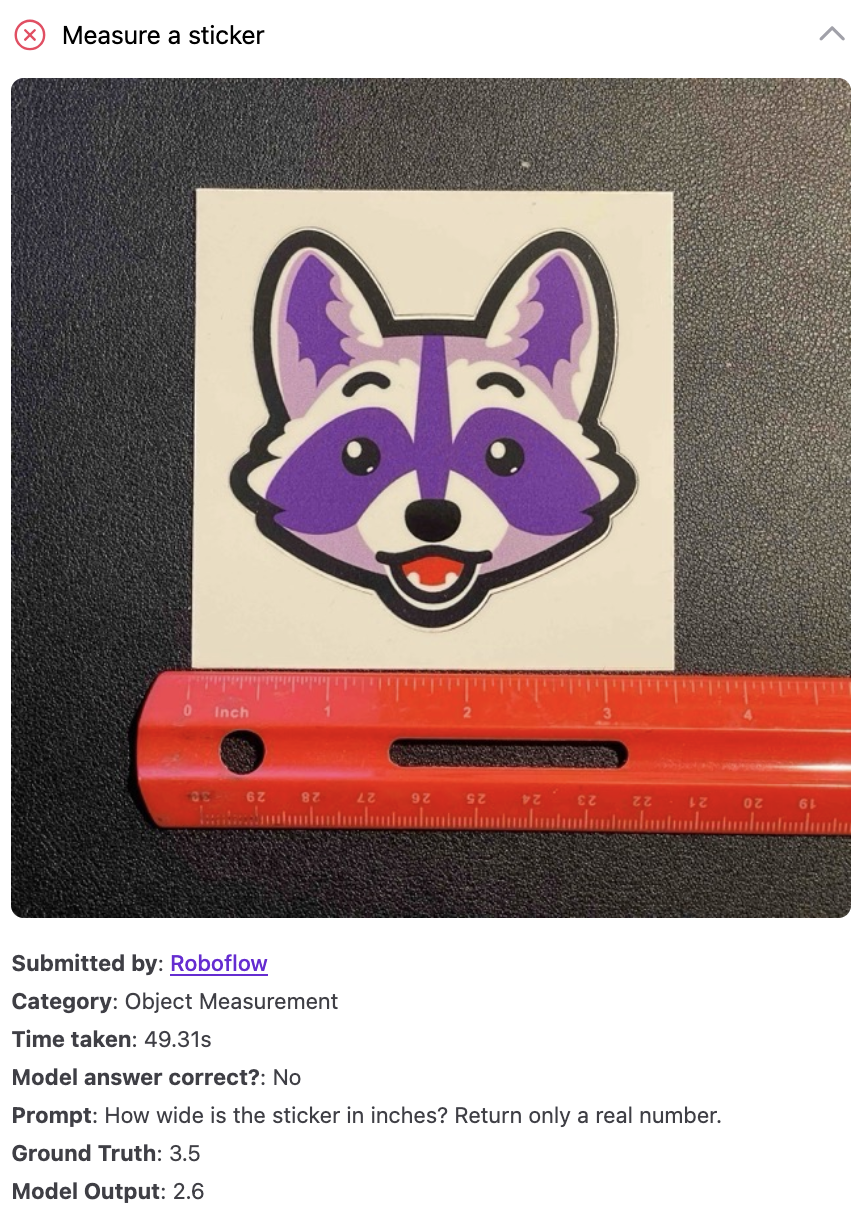

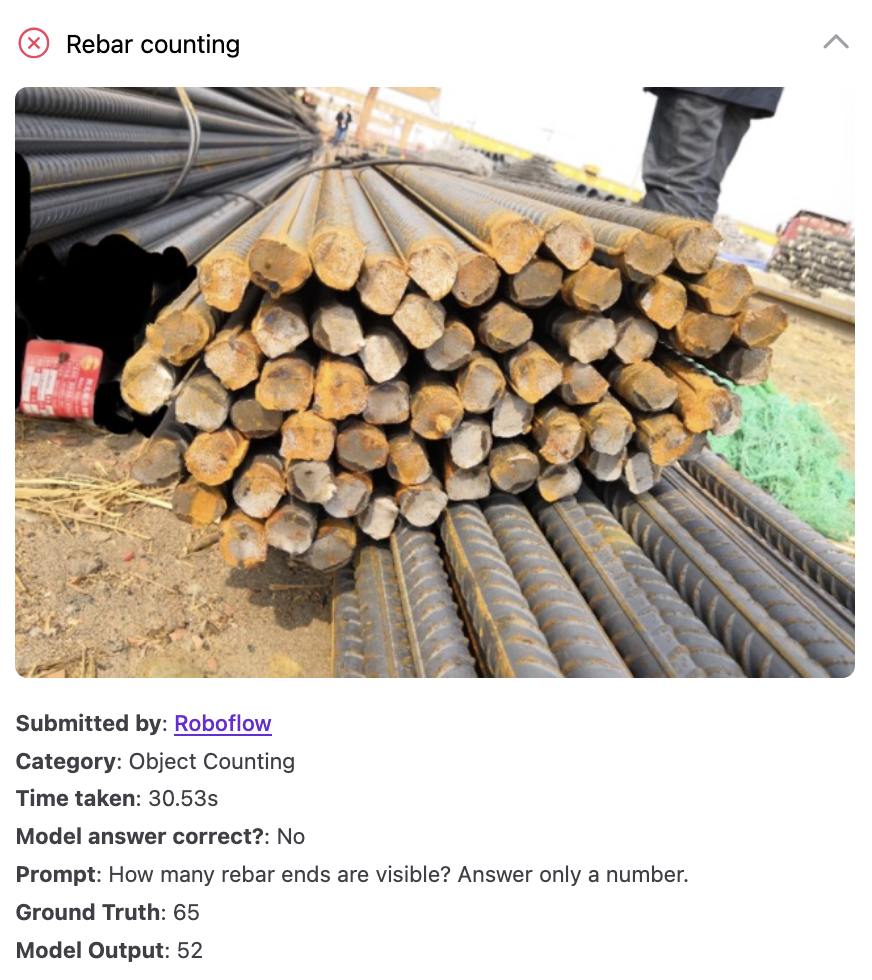

But the model also struggles with object counting (“how many screws are in this image?) and object measurement with a reference (“how wide is the sticker?”). These are tasks that we have consistently seen multimodal models struggle with.

Two tasks where the model struggles.

OpenAI’s announcement was more focused on the audio part of the model’s multimodal capabilities, as well as improvements in coding capabilities.

That is to say that while, from the announcement, we didn’t expect a huge lift in qualitative performance, we think the languishing performance on our object measurement and counting “vibe checks” show that there are still research or data strides that need to be made to improve performance on various vision tasks.

Progress in Visual Understanding: A History

Back in 2023, we tested GPT-4 with Vision, OpenAI’s GPT model with multimodal image capabilities. With the model, you could ask a question about the contents of an image. We were impressed, and our conviction in a trend we have followed for years came to fruition: that the breakthroughs in language modeling would soon come to computer vision.

By creating a language model with vision capabilities, GPT models are able to use the rich semantics they learn from text and the context that connects images and text to provide detailed answers to questions. A task like handwriting OCR went from extremely difficult without a complex pipeline in place to something anyone could do through a free application.

Progress in Visual Understanding

Since 2023, we have come a long way. GPT-4 with Vision had impressive OCR and some document understanding capabilities, but still failed at many tests like counting coins and reliably understanding receipts.

As new models came out – Gemini, Qwen-VL 2.5, GPT “o” reasoning models – our few hand-crafted tests became outdated. The models got better and better at our general VQA and OCR tasks.

With that said, a few areas stood out as being consistently difficult:

- Object counting

- Object measurement with a reference

- Object detection

Counting and measurement tests at which the model struggled.

The latter – object detection – was a particular blind spot with multimodal models not trained explicitly for this task. OpenAI released support for fine-tuning a GPT-4o model for object detection, but its performance was limited and not practical for production applications due to costs.

When benchmarked on RF100-VL, a multi-domain object detection benchmark, GPT-5 came behind Gemini 2.5 Pro. We reported the following results in our first impressions post after running GPT-5 against the benchmark:

we got the mAP50:95 of 1.5. This is significantly lower than the current SOTA of Gemini 2.5 Pro of 13.3.

In addition, we have also experienced variability with the performance of models. After GPT-4 with Vision came out, we set up a website that tracked the performance of the model, then improved OpenAI models. The website ran the same assessments every day. In some cases, the model would answer correctly one time, then incorrectly when prompted again.

This came to the fore when we tested GPT-5. We had to run our assessments several times due to varied results. While the small sample size of our tests – ~80 prompts – means incorrect answers causes noticeable variability, we noted that while GPT-5 was consistently good it was still, like other models, inconsistent on other tasks.

This leads us to a key learning we had working with GPT-5: as reasoning models become more widely available, benchmarking multiple times and ensuring the results are correct every time is essential for any task where you need reliable outputs (which, realistically, is most real-world tasks).

Where We Go from Here

GPT-5 is a strong model for multimodal understanding. With that said, the model does not have any new features like enhanced performance on object detection, counting, segmentation, or several other tasks (detection and segmentation being tasks that Gemini models in particular can be used for).

Our impressions match the general “vibes” we have read from the community: while the model is strong at many tasks, there are significant limitations. For vision in particular, similar prompts may give you two different answers, and the model struggles with counting and measurement.

We are always excited to test new models! OpenAI’s history in multimodal models demonstrates how far we can come in a short space of time. In two years our ideas of what is possible with multimodal models has fundamentally changed. This model wasn’t a step change for vision, but we have to ask: What about the next model? We’ll keep our eyes peeled and run our qualitative tests as new models come out, and as the community’s understanding of GPT-5 grows.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Aug 8, 2025). Reflections on GPT-5 Vision Capabilities. Roboflow Blog: https://blog.roboflow.com/reflections-on-gpt-5-vision-capabilities/