Today we are announcing the Serverless Video Streaming API, the simplest way to run AI on live video streams.

Previously, using AI to understand live video streams meant managing complex cloud infrastructure or configuring local hardware. The Serverless Video Streaming API removes those barriers, allowing you to run powerful vision models and workflows on real-time video streams immediately. You can test workflows in a browser and then deploy them into production in minutes.

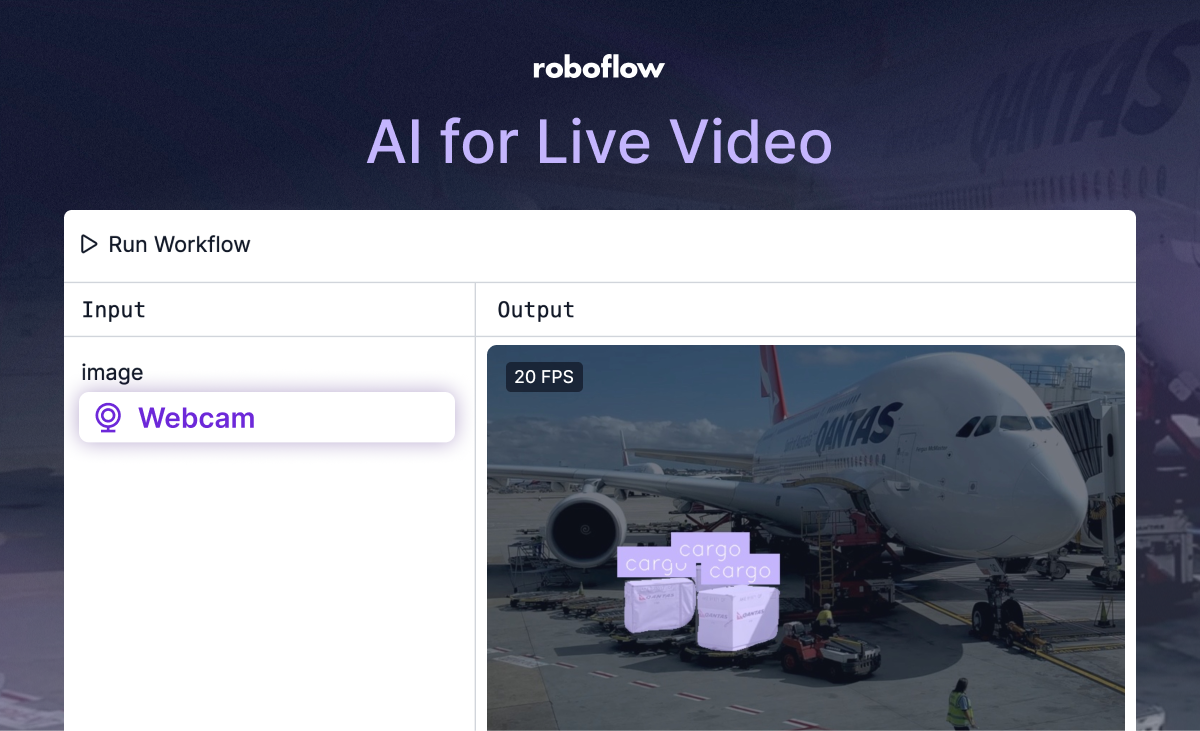

Testing a vision model and workflow with the Serverless Video Streaming API

What is the Serverless Video Streaming API?

Designed with real-time applications in mind, the Serverless Video Streaming API allows you to stream input from webcams, RTSP streams, or video files directly to the cloud via WebRTC without the need to configure an inference environment yourself.

This is the ideal choice to test and deploy vision applications if you want to get running quickly and avoid the costs of over-provisioning:

- No infrastructure management: Simply start running your vision workflows on live video with a few clicks. Because the compute instances are ephemeral and are destroyed as soon as the stream ends, no data persists on the server afterward.

- Automatic, infinite scalability: Unlike a dedicated server that requires manual scaling, this API scales infinitely to meet your needs. Whether you need to process one stream or a thousand, the system adjusts automatically.

- Cost efficiency: You only pay for the time the stream is processing. If you aren't streaming, you aren't paying.

Video: See the API in action

This video walks through the process and includes demonstrations of a mobile fitness app and multi-stream RTSP processing powered by the Serverless Video Streaming API.

Solving real-world deployment challenges

The Serverless Video Streaming API accelerates prototyping and deployment for computer vision workflows. Here are a few example scenarios:

1. Automatic scaling for bursty traffic

For applications with fluctuating workloads, the automatic provisioning of the Serverless Video Streaming API is often more cost-effective than standing up dedicated hardware. For example, a mobile application might have 100 concurrent users in the morning and 7,000 users during peak evening hours. This API allows you to handle these spikes without paying for idle servers during downtime.
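To make the trade-off concrete, the sketch below compares pay-per-use billing with a dedicated fleet sized for peak load. Only the 100 and 7,000 concurrent-user figures come from the example above. The hourly rates, the number of streams one server can handle, and the split of the day into quiet and peak hours are hypothetical placeholders, not actual pricing, so substitute your own figures.

```python
import math

# Hypothetical daily profile as (hours, concurrent streams). Only the 100 and
# 7,000 figures come from the example above; the hour split is an assumption.
PROFILE = [(20, 100), (4, 7_000)]

# Hypothetical prices and capacity, for illustration only.
SERVERLESS_RATE_PER_STREAM_HOUR = 0.10  # assumed pay-per-use rate
DEDICATED_RATE_PER_SERVER_HOUR = 1.00   # assumed always-on server rate
STREAMS_PER_SERVER = 20                 # assumed capacity of one server


def serverless_daily_cost(profile, rate):
    """Pay only for the stream-hours that are actually processed."""
    return sum(hours * streams * rate for hours, streams in profile)


def dedicated_daily_cost(profile, rate, streams_per_server):
    """Pay for a fleet sized for peak load, running for every hour in the profile."""
    peak = max(streams for _, streams in profile)
    servers = math.ceil(peak / streams_per_server)
    total_hours = sum(hours for hours, _ in profile)
    return servers * total_hours * rate


if __name__ == "__main__":
    print("serverless:", serverless_daily_cost(PROFILE, SERVERLESS_RATE_PER_STREAM_HOUR))
    print("dedicated: ", dedicated_daily_cost(
        PROFILE, DEDICATED_RATE_PER_SERVER_HOUR, STREAMS_PER_SERVER))
```

With these placeholder values the pay-per-use total comes out lower, because the peak-sized fleet sits mostly idle for 20 of the 24 hours. A flatter traffic profile can reverse the result, so it is worth rerunning the comparison with your own numbers.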

2. Unified processing for distributed feeds

Bring computer vision workflows to thousands of video sources without the need to manage computing resources for each individual feed. Instead of setting up hardware at every physical location, you can route streams from distributed devices – like mobile phones or security cameras – to a centralized cloud endpoint. This simplifies your architecture, allowing you to process simultaneous feeds without managing a fleet of physical servers or supporting different operating systems.
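On the client side, routing many feeds to one endpoint can be as simple as opening one session per source. The sketch below shows one possible way to do that with a thread pool. It does not call the SDK directly: `start_stream` is a hypothetical callable that you would write to open a streaming session for a single feed and block until it ends, and the feed names and URLs are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_feeds(
    feeds: Dict[str, str],
    start_stream: Callable[[str, str], None],
    max_workers: int = 8,
) -> Dict[str, str]:
    """Run one blocking stream session per feed and report how each one ended.

    `feeds` maps a camera name to its source URL. `start_stream` is a
    hypothetical callable that opens a session for one feed and blocks
    until that stream ends. At most `max_workers` feeds run at once;
    any remaining feeds wait for a free worker.
    """
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            name: pool.submit(start_stream, name, url)
            for name, url in feeds.items()
        }
        for name, future in futures.items():
            error = future.exception()  # waits for this feed to finish
            results[name] = "ok" if error is None else f"failed: {error}"
    return results
```

Collecting a per-feed result instead of letting the first exception propagate means one unreachable camera does not take down the other streams.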

3. Run larger models on live video feeds

Leveraging powerful cloud GPUs unlocks the ability to run larger vision models on live video streams or apply more complex logic in your workflows. For example, a media company broadcasting a live sports event might want to use a heavier model and workflow to track each player's movements and display real-time analytics.

4. Hybrid architecture for on-demand inference

Combining edge inference with the Serverless Video Streaming API unlocks new applications while optimizing costs. You can run a lightweight model locally to handle basic detection, then trigger the cloud API when deeper analysis is required. For example, a drone inspecting wind turbines might use a lightweight model to identify and navigate to an area of interest, like a turbine blade. From there, it can stream video to the cloud, where a defect-detection model identifies imperfections that the onboard hardware couldn't see.
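The hand-off between the edge model and the cloud can be sketched as a small state machine. The example below is an illustrative assumption rather than part of the API: it starts cloud streaming after several consecutive confident detections from the local model and stops after a run of frames with none, which avoids opening and closing sessions on every flicker. The class name, threshold, and window sizes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CloudTrigger:
    """Decide when to hand off from the edge model to the cloud stream.

    Streaming starts after `start_after` consecutive confident frames and
    stops after `stop_after` consecutive frames without a confident detection.
    """

    threshold: float = 0.6  # hypothetical confidence cut-off
    start_after: int = 3    # hypothetical debounce window, in frames
    stop_after: int = 10    # hypothetical cool-down window, in frames
    streaming: bool = False
    _hits: int = 0
    _misses: int = 0

    def update(self, confidence: float) -> bool:
        """Feed one edge-model confidence score; return whether to stream."""
        if confidence >= self.threshold:
            self._hits += 1
            self._misses = 0
        else:
            self._misses += 1
            self._hits = 0

        if not self.streaming and self._hits >= self.start_after:
            self.streaming = True
        elif self.streaming and self._misses >= self.stop_after:
            self.streaming = False
        return self.streaming
```

In an application, a change from `False` to `True` would be the point to open the streaming session, and the reverse change the point to close it, so you pay for cloud processing only while the area of interest is in view.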

How to get started

If you want to try out the Serverless Video Streaming API for prototyping an application or deploying to production, check out the resources below.

Testing in the browser

You can test your pipelines immediately without writing code.

- Log in to Roboflow and navigate to the Workflows tab.

- Select a Workflow and click Test Workflow.

- Choose a source, like Webcam or RTSP Stream.

- Modify additional options, like GPU or region.

- Click Run to start processing the stream.

Testing a webcam video stream in Workflows

Integrating the API with your applications

If you are ready to integrate the API with your application, check out the Serverless Video Streaming documentation, which includes instructions for getting started and links to example projects.

An exercise application powered by the Serverless Video Streaming API and JavaScript SDK

Using the Python and JavaScript SDKs, you can define your input source and start receiving inference data or annotated video streams with just a few lines of code.

- JavaScript SDK: For developers building full-stack JavaScript web applications, this SDK lets you build computer vision applications for the web or mobile (using React Native) while still using Python-native computer vision components like ByteTrack, without having to host your own Python backend.

- Python SDK: When integrating the API with a Python application, this SDK streamlines the process and lets you process video streams without configuring everything yourself.

For example, you can try out the API in your application with the template below:

```python
import cv2 as cv
from inference_sdk import InferenceHTTPClient
from inference_sdk.webrtc import VideoMetadata, StreamConfig, WebcamSource

API_KEY = "<your API key>"
WORKFLOW = "<your workflow>"
WORKSPACE = "<your workspace>"
STREAM_OUTPUT = "<stream output (taken from workflow outputs)>"
DATA_OUTPUT = "<data output (taken from workflow outputs)>"

client = InferenceHTTPClient.init(
    api_url="https://serverless.roboflow.com", api_key=API_KEY
)

source = WebcamSource()  # Other options: RTSPSource, VideoFileSource, ManualSource

config = StreamConfig(
    stream_output=[STREAM_OUTPUT],
    data_output=[DATA_OUTPUT],
    requested_region="us"
)

session = client.webrtc.stream(
    source=source,
    workflow=WORKFLOW,
    workspace=WORKSPACE,
    image_input="image",
    config=config,
)


@session.on_frame
def show_frame(frame, metadata):
    cv.imshow("WebRTC SDK - Webcam", frame)
    if cv.waitKey(1) & 0xFF == ord("q"):
        session.close()


@session.on_data()
def on_message(data: dict, metadata: VideoMetadata):
    print(f"Frame {metadata.frame_id}: {data[DATA_OUTPUT]}")


session.run()
```

Bring AI to your live streams today

The new Serverless Video Streaming API is the simplest way to run computer vision workflows on live video. By removing the need to manage complex infrastructure and offering instant browser-based testing, it drastically speeds up prototyping and reduces the time it takes to get your applications into production.

Ready to start streaming? Log in to Roboflow, visit the Workflows tab, and start testing streams from your webcam or RTSP feeds immediately.

Cite this Post

Use the following entry to cite this post in your research:

Patrick Deschere, Balthasar Huber, Grzegorz Klimaszewski. (Dec 22, 2025). AI for Live Video: Introducing the Serverless Streaming API. Roboflow Blog: https://blog.roboflow.com/serverless-video-streaming-api/