Announced on February 20, 2025, SmolVLM2 is a multimodal image and video understanding model developed by engineers on the Hugging Face TB (Textbook) Research team.

With SmolVLM2, you can ask questions about the contents of images or videos. For example, you can ask SmolVLM2 to count objects in an image, read line items on receipts, run optical character recognition, and more.

In this guide, we are going to walk through our findings after running qualitative tests on SmolVLM2. Our analysis covers object detection, object counting, OCR, and more. Our tests are specifically focused on the image capabilities of SmolVLM2.

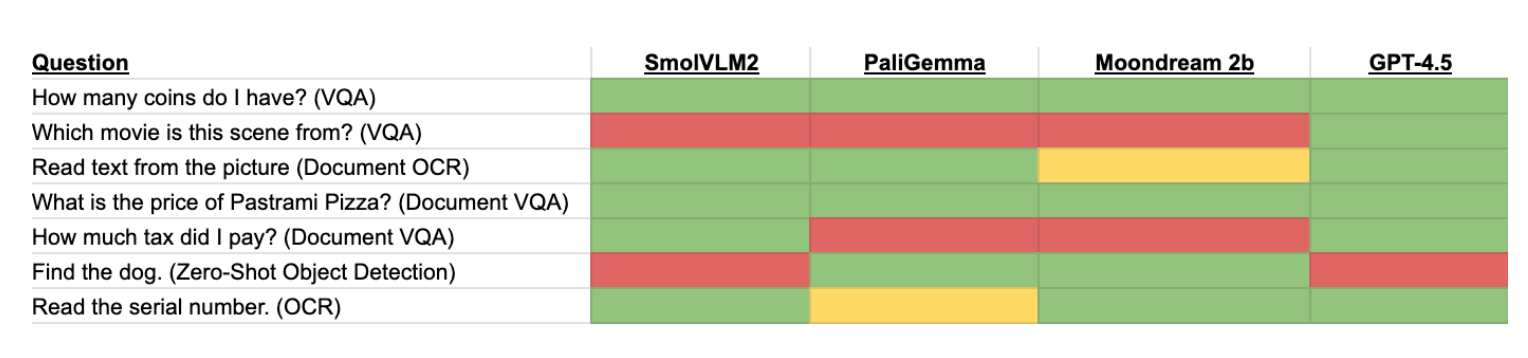

Curious to see our high-level results? Here is how SmolVLM2 compares to other multimodal models we have evaluated on our qualitative benchmarks:

Without further ado, let’s get started!

What is SmolVLM2?

SmolVLM2, developed by engineers on the Hugging Face TB Research team, is part of the “Smol Models” initiative. This initiative is focused on making “efficient and lightweight AI models [...] that can run effectively on-device while maintaining strong performance.” SmolVLM2 is capable of both image and video understanding.

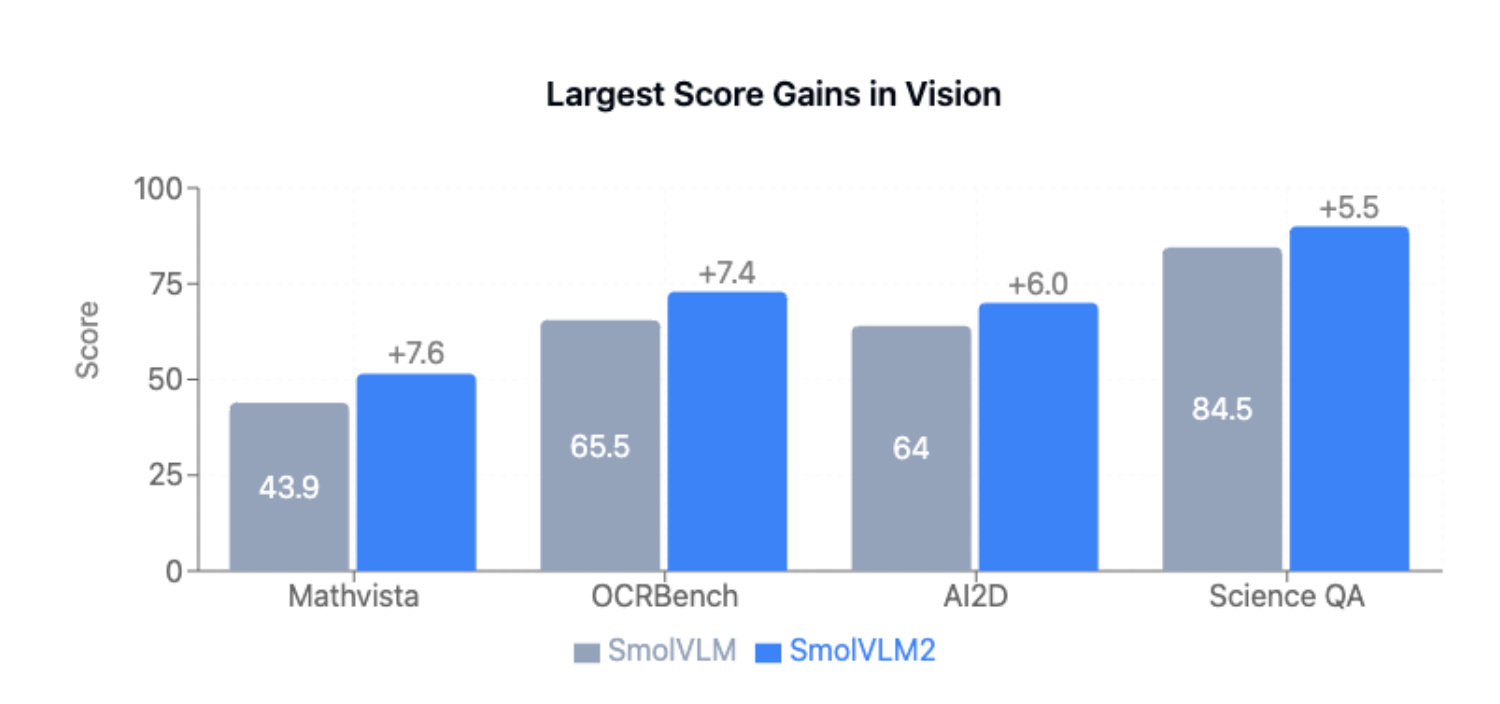

SmolVLM2 comes in three sizes: 256M, 500M, and 2.2B. The larger the model, the better performance you can expect on your tasks. When compared with the first SmolVLM model released in November 2024, SmolVLM outperformed on the Mathvista, OCRBench, AI2D, and ScienceQA benchmarks:

According to Hugging Face, the SmolVLM2 models “outperform any existing models per memory consumption. Looking at Video-MME (the go-to scientific benchmark in video), SmolVLM2 joins frontier model families on the 2B range and we lead the pack in the even smaller space.”

Evaluating SmolVLM2 for Multimodal Image Tasks

We evaluated SmolVLM2’s multimodal support on several tasks, including:

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Zero-shot object detection

- Real-world OCR

Below, we show our results from each test.

Our tests were run using the HuggingFaceTB/SmolVLM2-2.2B-Instruct checkpoint. We used a T4 GPU on Google Colab to run our tests.

SmolVLM2 object counting

We started our analysis by asking SmolVLM2 to count the number of objects in an image. We provided the following image of coins and asked SmolVLM2 “How many coins do I have?”

SmolVLM2 returned the answer:

4

The model successfully counted the number of objects. When compared to other models we have analyzed, this response is much more direct. The model returned exactly the information we requested, without additional information (i.e. the denomination of the currency).

SmolVLM2 Visual question answering (VQA)

We then asked SmolVLM2 to identify the movie from which a screenshot was taken. We asked “Which movie is this scene from?” and provided the following image:

SmolVLM2 returned:

The scene is from the movie "A Christmas Story."

This is incorrect. The correct answer is Home Alone. PaliGemma, another small, multimodal vision model also incorrectly answered this question. With that said, cloud models like those in the GPT series and Claude were able to answer this question accurately.

We then asked SmolVLM2 to read the contents of a menu. We provided the following menu and asked “What is the price of Pastrami Pizza?”:

SmolVLM returned:

The price of Pastrami Pizza is $27.

The model successfully read the menu and returned the price of the pastrami pizza.

SmolVLM2 Document OCR

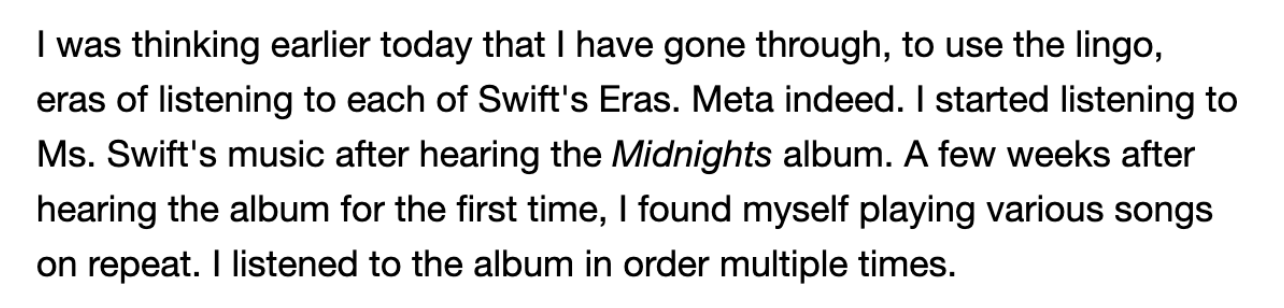

We then tested SmolVLM2’s performance on document OCR. We provided the following screenshot of a web page and asked SmolVLM2 to “Read text from the picture”.

The model returned:

I was thinking earlier today that I have gone through, to use the lingo, eras of listening to each of Swift's Eras. Meta indeed. I started listening to Ms. Swift's music after hearing the Midnights album. A few weeks after hearing the album for the first time, I found myself playing various songs on repeat. I listened to the album in order multiple times.

The response is missing the italics for the word “Midnights”, but is otherwise correct.

SmolVLM2 Document question answering

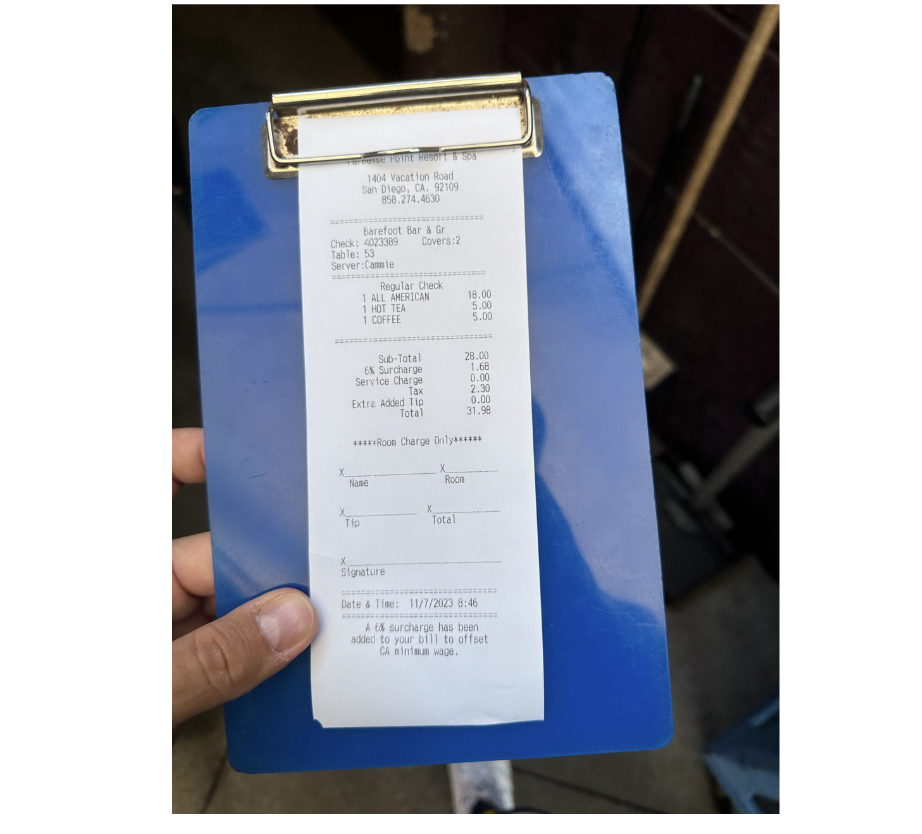

We then challenged SmolVLM2 to a task with which many models fail: receipt OCR. For example, Claude 3 Opus, GPT-4o, and GPT-4.5 have performed well at our receipt OCR test, but OpenAI o3-minI and PaliGemma failed the test.

We provided the following image of a receipt and asked SmolVLM2 “How much tax did I pay?”

SmolVLM returned:

2.30

This is the correct answer.

SmolVLM2 Real-world OCR

We provided the following image of a tire and asked SmolVLM2 to “Read the serial number”:

The model returned:

3702692432.

SmolVLM2 correctly identified all of the numbers in the serial number.

SmolVLM2 Zero-shot object detection

While SmolVLM2 does not mention support for zero-shot object detection, we evaluated the model on a zero-shot detection task. We provided the following image and asked the model to “Return xyxy coordinates for the dog in the image.”:

The model returned:

[19.5, 1.5, 19.5, 1.5]

These coordinates are invalid.

Conclusion

SmolVLM2 is a new, multimodal vision and video model developed by Hugging Face’s TB Research team. In this guide, we evaluated SmolVLM2’s capabilities on a range of image tasks, from object counting to OCR.

SmolVLM2 passed our object counting, document OCR, document VQA, and real-world OCR tasks. The model did not pass our zero-shot object detection and movie scene VQA tasks.

Given the model is so small, it may be ideal for running on the edge, or on smaller servers you run. For instance, you could deploy SmolVLM2 as a service for OCR.

Curious to learn more about how other multimodal models perform on our tests? Check out our list of multimodal model blog posts in which we analyze the capabilities of various new and state-of-the-art multimodal models.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Mar 11, 2025). SmolVLM2: Multimodal and Vision Analysis. Roboflow Blog: https://blog.roboflow.com/smolvlm2/