Introduction

Human beings experience the world through multiple senses. We see, hear, touch and read, and our brains integrate these diverse signals into a coherent understanding of the environment. Recent advances in artificial intelligence (AI) have tried to mimic this ability by developing multimodal deep‑learning models - systems that can learn collectively from images, text, audio or video.

These models power tasks such as image captioning, speech‑to‑text, video understanding and cross‑modal retrieval. However, to train such models large, well‑curated multimodal datasets is required. The quality of data directly influences a model’s performance, thus datasets that combine multiple modalities are a crucial resource for AI researchers and developers.

This blog post provides a detailed introduction to multimodal deep learning, and explains why multimodal datasets are so important. It covers ten of the most influential multimodal datasets used in computer vision and related fields. Each dataset description includes links to research papers and code repositories, a summary of modalities, size and licensing information, and guidance on where to access the data. You will also learn practical tips for using multimodal datasets and a list of resources for finding additional datasets. So, let’s get started.

What Is Multimodal Deep Learning?

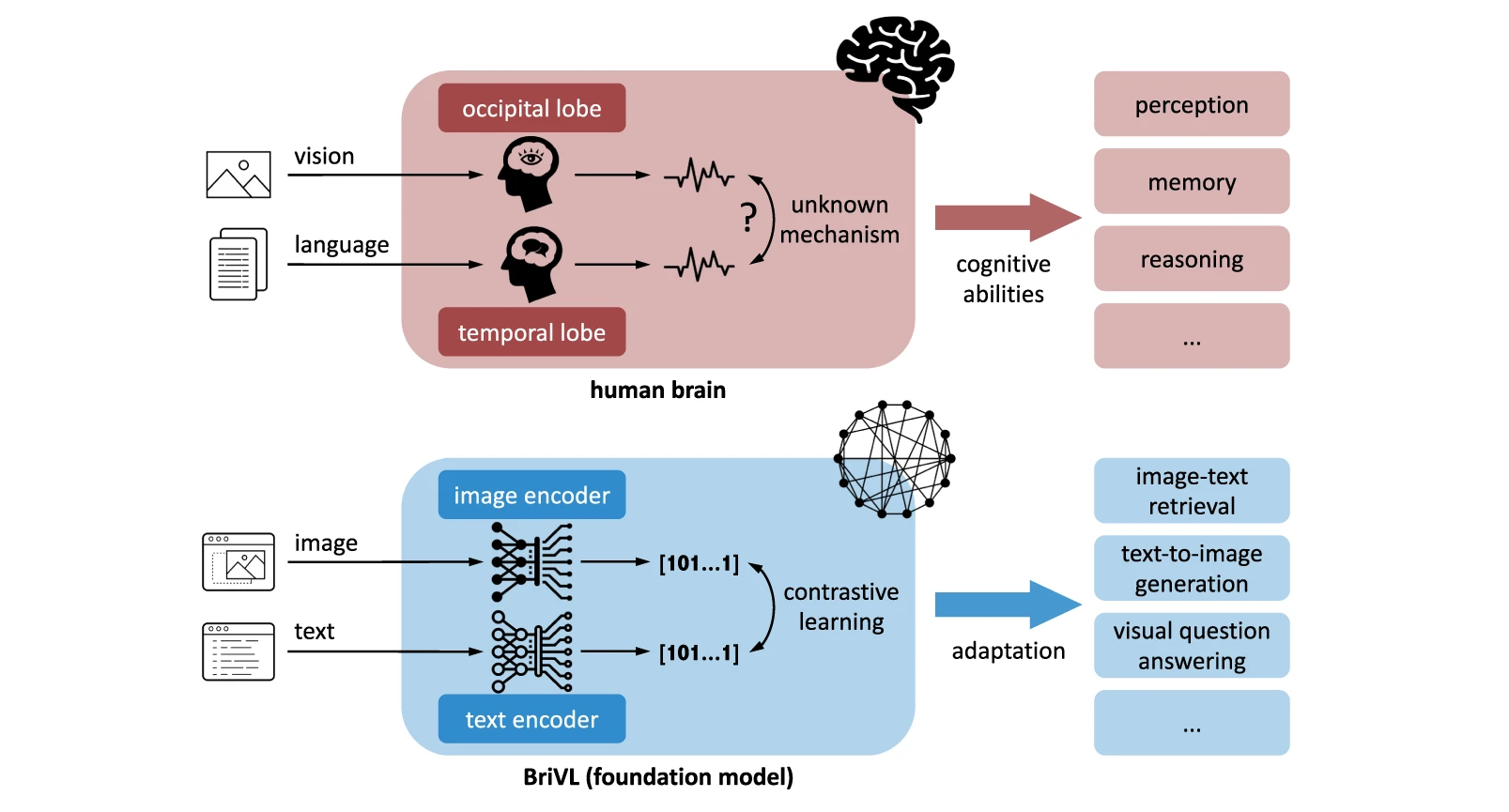

In traditional deep learning (a branch of machine learning), a model typically learns from a single type of data, such as images or text. Multimodal deep learning refers to models that combine two or more modalities, such as text, images, audio, video, sensor measurements, and more, and learn how they interrelate. With the goal of performing tasks more effectively than single-modality models. This replicates human cognition. Just as we use sight, sound, and touch to understand our world, AI systems learn richer, context-aware representations by fusing multiple modalities.

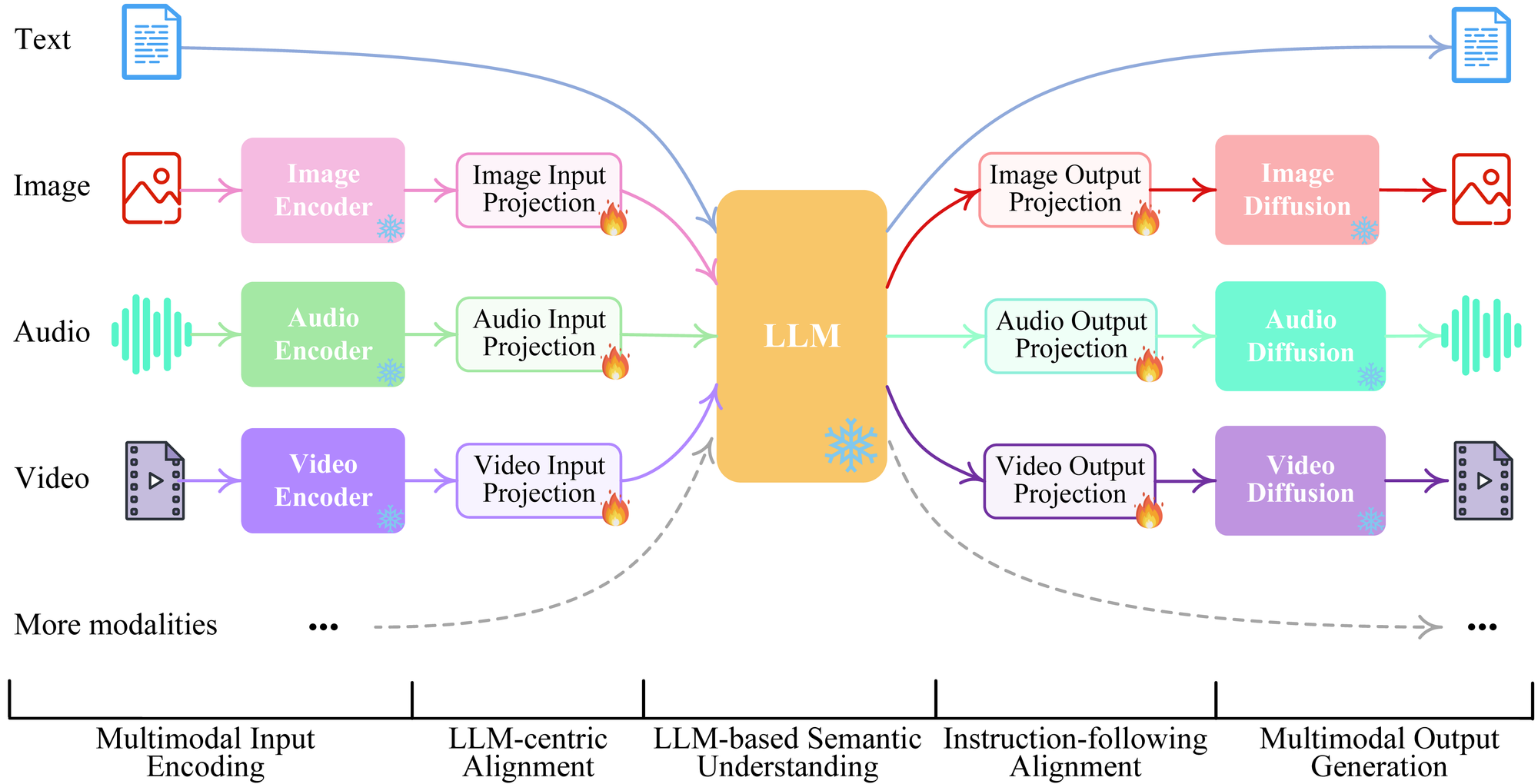

For example, NExT-GPT is an innovative “any-to-any” multimodal large language model (MM-LLM) presented at ICML 2024, designed to both understand inputs and generate outputs across arbitrary combinations of text, images, video, and audio.

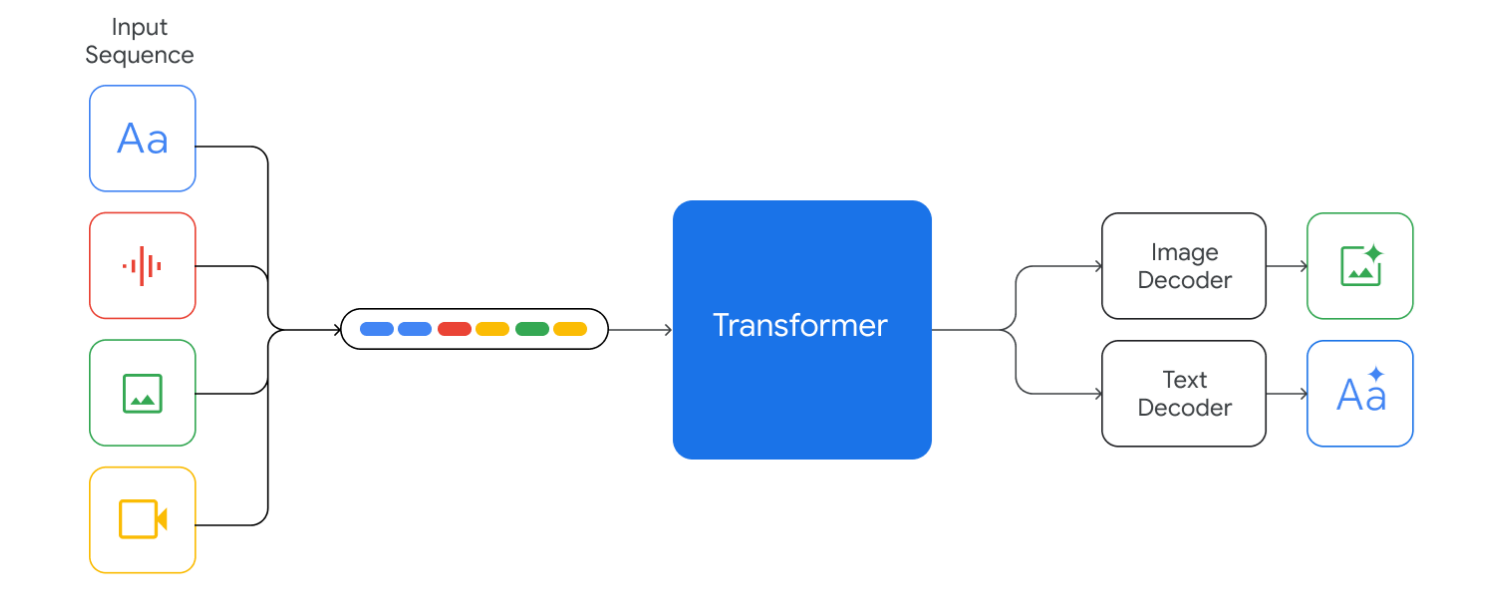

Another example is Gemini, a multimodal deep learning model by Google. It demonstrates strong understanding and reasoning across multiple input types such as image, audio, video, and text within a single foundational model.

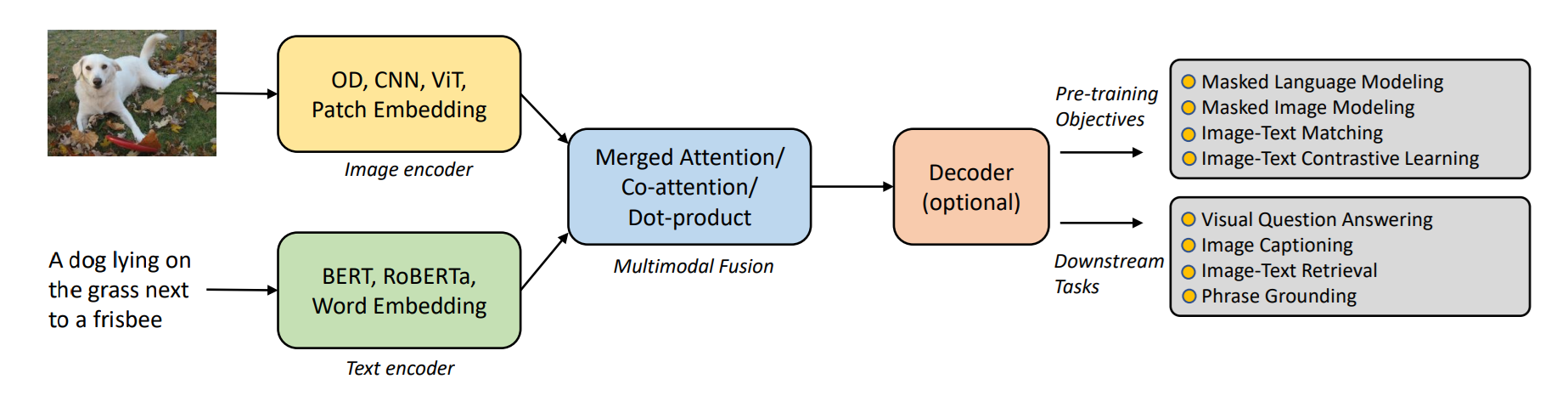

Similarly, by training on two modalities - images with associated text - a vision language model can learn to map visual patterns to semantic concepts and can generate captions or answer questions about images.

Multimodal learning not only improves performance on tasks that naturally involve multiple modalities but also enables zero‑shot generalization.

What Is A Multimodal Dataset?

A multimodal dataset is a dataset that contains more than one type of data (or "modality") aligned together for training and evaluating AI systems. Modalities can include:

- Text (captions, transcripts, documents)

- Images (photos, frames, visual scenes)

- Audio (speech, environmental sounds, music)

- Video (sequences combining vision + audio)

- Other sensor data (touch, motion, physiological signals, etc.)

The key feature is that these modalities are linked or synchronized, for example, an image with a caption, or a video with spoken dialogue and subtitles. Multimodal datasets enable AI systems to learn relationships between different types of inputs, much like humans integrate vision, hearing, and language.

Top 10 Multimodal Datasets

The following datasets span a range of tasks and modalities, including images, text, audio and video. Each dataset is briefly described, with key statistics, modalities, licensing information and access links. A summary table follows the descriptions.

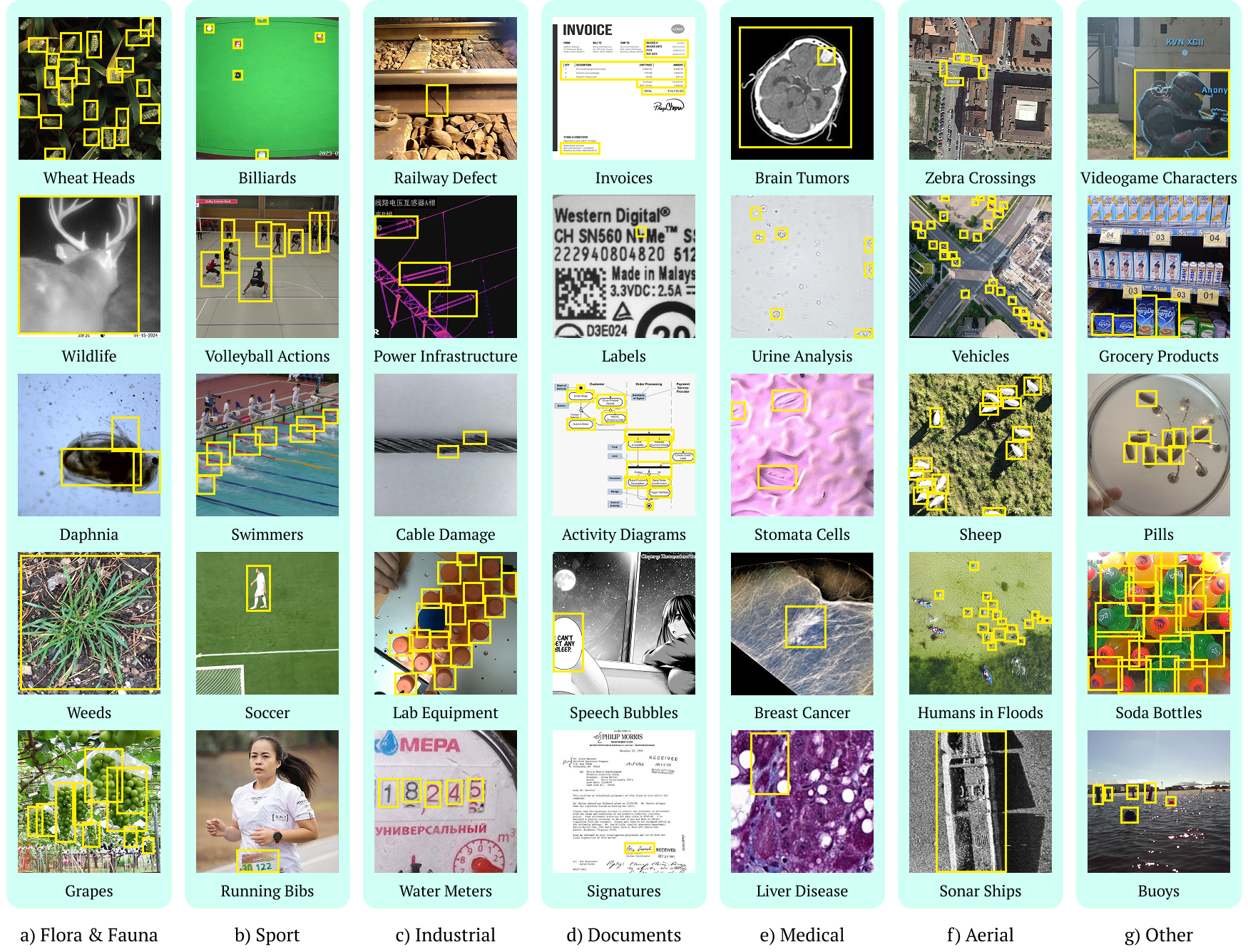

1. RF100-VL (Roboflow100 Vision-Language Benchmark)

RF100-VL is a large-scale multimodal benchmark designed to evaluate vision–language models (VLMs) on out-of-distribution object detection tasks. It is built from 100 diverse datasets on Roboflow Universe, covering domains such as aerial imagery, medical scans, industrial defects, wildlife monitoring, and documents. Each dataset provides images with bounding box annotations along with few-shot text and visual examples to test multimodal reasoning.

Following are the dataset features:

- Size: 164,149 images with 1,355,491, annotations across seven domains, including aerial, biological, and industrial imagery.

- Modalities: RF100-VL combines images with textual instructions and bounding box annotations. It also includes few-shot visual examples. These multimodal inputs support tasks like object detection, cross-modal retrieval, and few-shot generalization across diverse domains.

- License: Released for research benchmarking; sourced from public Roboflow Universe datasets.

RF100-VL exposes the limitations of current VLMs in real-world applications. Models like Qwen2.5-VL and GroundingDINO achieve very low zero-shot accuracy (<2% AP), showing the difficulty of transferring web-scale pretraining to specialized or unseen domains. By providing diverse tasks and multimodal cues, RF100-VL serves as a rigorous benchmark for few-shot adaptation, robustness, and generalization in multimodal AI.

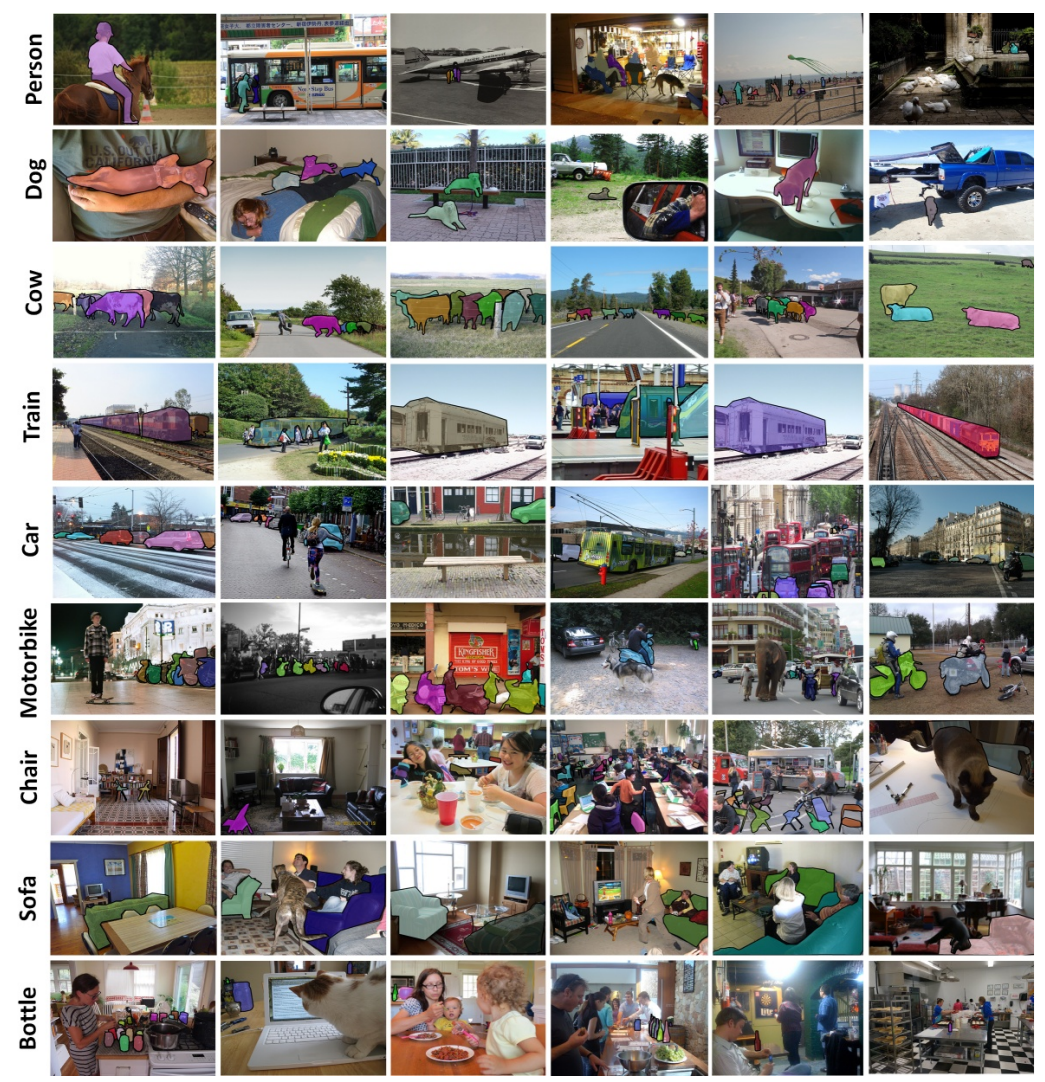

2. MS COCO (Common Objects in Context)

MS COCO is one of the most widely used datasets in computer vision. Developed by Microsoft Research, it contains images of everyday scenes with dense annotations for object detection, segmentation and captioning. The dataset consists of over 330,000 images, each annotated with 80 object categories and five captions describing the scene. The images depict common objects in their natural context, such as people interacting with objects at home or outdoors.

Following are the dataset features:

- Size: 330K+ images, 80 object categories, 91 stuff categories, 5 captions per image, 250,000 people with keypoints.

- Modalities: The primary modalities are images and text (captions). Additional annotations include bounding boxes, segmentation masks, keypoints for human poses, and panoptic segmentation labels. These multiple annotation types enable tasks like instance segmentation and pose estimation.

- License: The images are licensed under various Creative Commons licenses; annotation files are released under a Creative Commons Attribution 4.0 license. Users must respect the original Flickr licenses attached to each image.

COCO has become the default benchmark for image captioning and object detection due to its scale and diversity. It is often used for pre‑training vision models and evaluating multimodal tasks such as image captioning and VQA.

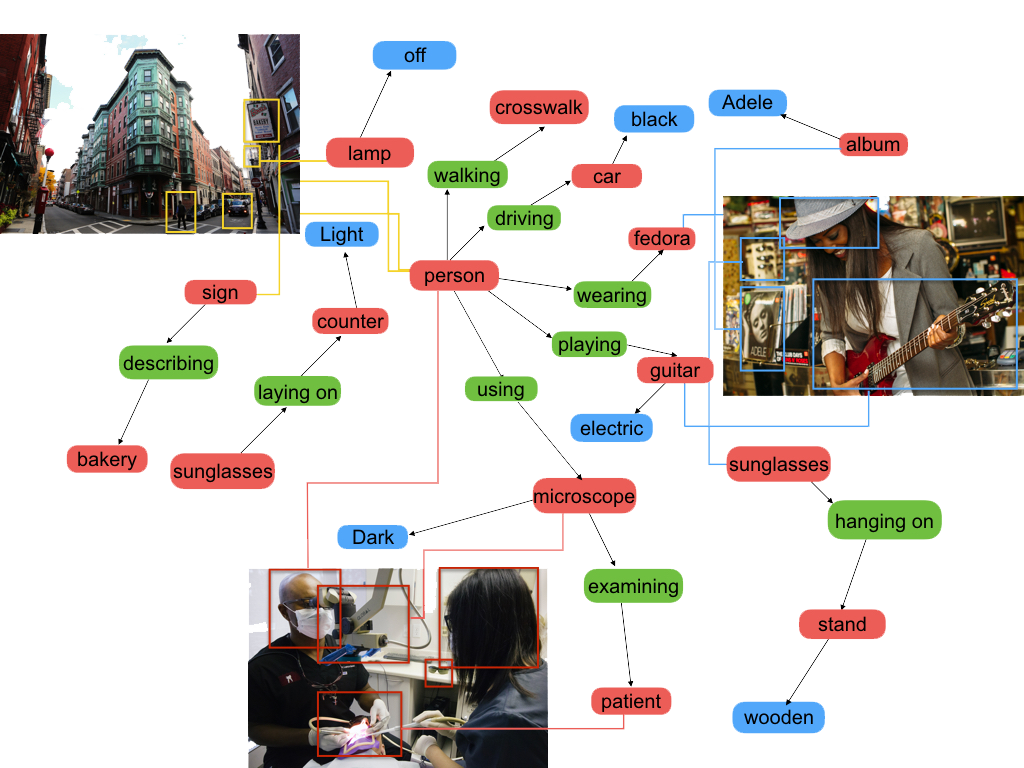

3. Visual Genome

Visual Genome bridges the gap between images and language by providing dense annotations of objects, attributes, relationships and region descriptions. The dataset contains 108,077 images (taken mostly from COCO) and includes 5.4 million region descriptions, 1.7 million question-answer pairs, 3.8 million object instances, 2.8 million attributes and 2.3 million relationships. Each image has on average, 35 objects, 26 attributes, and 21 pairwise relationships.

Following are the dataset features:

- Size: 108K images, 5.4 M region descriptions, 1.7 M question-answer pairs, 3.8 M object instances.

- Modalities: Images are annotated with textual descriptions at the region level (captions), attributes (e.g., red chair), relationships (e.g., person riding horse) and question-answer pairs. This rich annotation makes the dataset suitable for tasks requiring fine‑grained visual reasoning.

- License: Creative Commons Attribution 4.0 International (CC BY 4.0).

Visual Genome provides dense semantic information that goes beyond simple captions, enabling models to perform reasoning tasks like scene graph generation and VQA. Its CC BY 4.0 license facilitates widespread academic and commercial use.

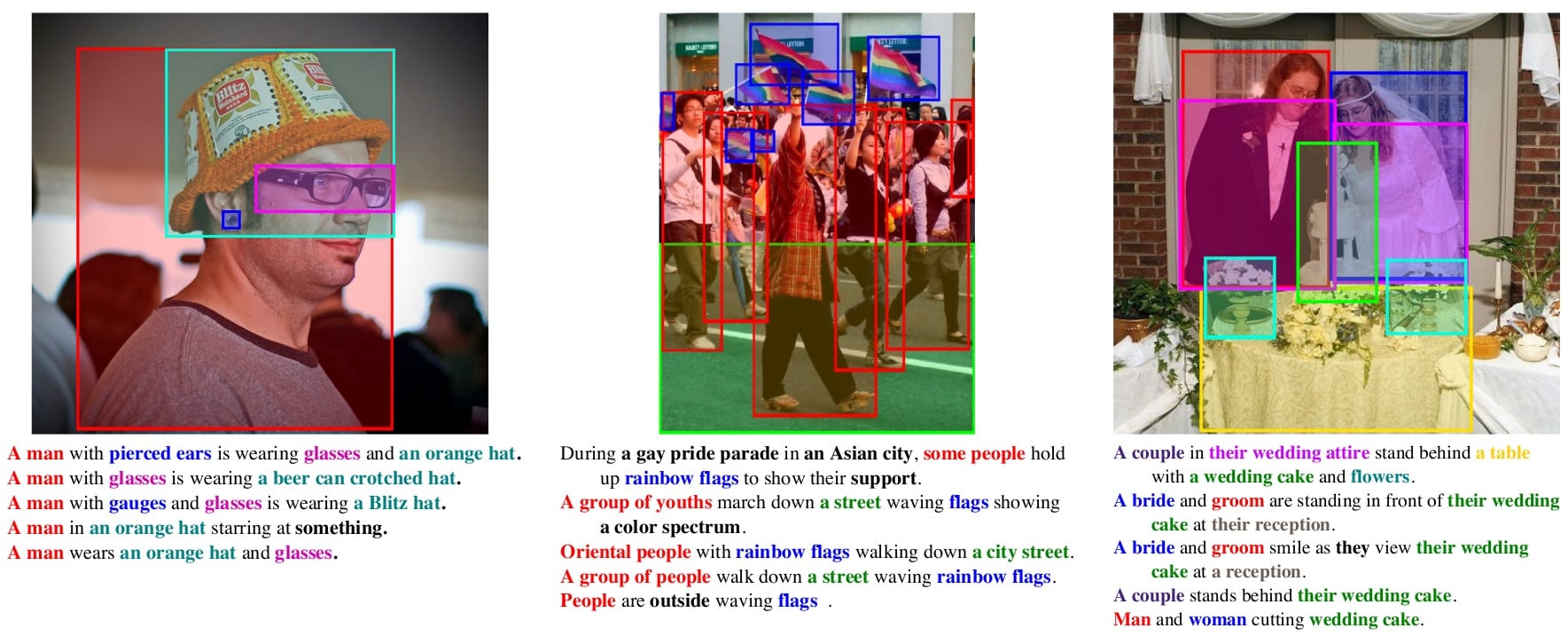

4. Flickr30K Entities

Flickr30K Entities extends the Flickr30K image-caption dataset by adding bounding box annotations and coreference chains for all noun phrases in the captions. The dataset contains 31,783 real‑world images collected from Flickr, with five crowd‑sourced captions per image (a total of 158,915 captions). Flickr30K Entities augments these captions with 276k manually annotated bounding boxes. The dataset is designed to improve grounded language understanding by linking phrases in captions to specific image regions.

Following are the dataset features:

- Size: 31,783 images, 158,915 captions, 276k manually annotated bounding boxes and 244k coreference chains.

- Modalities: Images and textual captions with bounding boxes and coreference annotations. Each caption is linked to objects via entity annotations.

- License: The dataset follows the original Flickr30K license, which allows research and academic use on non‑commercial projects. Users must respect Flickr’s terms of use.

Flickr30K Entities enables supervised learning of phrase grounding and improves performance on tasks such as grounding textual queries in images by linking textual phrases to image regions.

5. ImageBind (Meta AI)

ImageBind is a large-scale dataset for training unified multimodal embeddings. It links images with multiple modalities, including text, audio, depth, thermal, and IMU (motion), without requiring pairwise annotations for every combination.

Following are the dataset features:

- Size: Millions of examples across modalities, scale not fixed as it is built from multiple sources.

- Modalities: Images, text, audio, depth, thermal, IMU.

- License: Research use only.

ImageBind is first large-scale dataset enabling six modalities to be aligned into a single embedding space. It underpins state-of-the-art multimodal AI models for retrieval, classification, and cross-modal generation.

6. Wikipedia‑based Image Text (WIT)

WIT is a large‑scale, multilingual image-text dataset extracted from Wikipedia. It contains 37.6 million image-text examples (11.5 million unique images) covering 108 languages. Each example pairs an image with the surrounding contextual text from the corresponding Wikipedia article.

Following are the dataset features:

- Size: 37.6 M image–text pairs, 11.5 M unique images across 108 languages.

- Modalities: Images and multilingual text descriptions (captions, alt‑text, and contextual paragraphs). Examples span Wikipedia categories such as history, science, nature and art.

- License: Creative Commons Attribution‑ShareAlike 3.0 (CC BY‑SA 3.0).

WIT’s scale and multilingual coverage make it good choice for training cross‑lingual vision-language models and evaluation tasks such as multilingual image captioning and retrieval. Unlike previous datasets limited to English, WIT enables research on less‑represented languages.

7. LAION‑5B

Developed by the LAION non‑profit collective, LAION‑5B is one of the largest open multimodal datasets available. It contains 5.85 billion CLIP‑filtered image-text pairs extracted from the web, with 2.32 billion English pairs and the rest spanning multiple languages. The dataset democratizes access to large‑scale data used for training foundation models like CLIP and Stable Diffusion.

Following are the dataset features:

- Size: 5.85 billion image–text pairs.

- Modalities: Images scraped from the web paired with alt‑text or surrounding text. The data is filtered using CLIP to remove unrelated pairs and contains metadata such as image resolution, similarity score and license when available.

- License: Creative Commons Attribution 4.0 (CC BY 4.0). However, each image is individually licensed according to its source; the dataset includes a license field indicating the original Creative Commons license when available. Users must filter the dataset based on their use case and respect the original licenses.

LAION‑5B democratizes access to massive image-text data. Researchers use subsets of LAION‑5B to train models like Stable Diffusion and DALL‑E. Its scale helps training foundation models with billions of parameters. Nevertheless, users must be cautious about content quality and copyright, the dataset is non‑curated and may contain NSFW or copyrighted material.

8. MSR‑VTT

MSR‑VTT (Microsoft Research Video‑to‑Text) is a large video captioning dataset created to bridge the gap between video content and natural language. It contains 10,000 web video clips totaling approximately 41.2 hours, with 200,000 clip–sentence pairs. The videos were collected using 257 queries from a commercial video search engine; each query has about 118 videos. Each clip is annotated with about 20 captions by Amazon Mechanical Turk workers.

Following are the dataset features:

- Size: 10K video clips, 41.2 hours of footage, 200K captions with approximately 20 captions per video.

- Modalities: Video clips and natural language descriptions. Clips cover diverse categories such as sports, music, cooking, social events and animals. Each clip has multiple independent captions.

- License: Not explicitly specified. MSR‑VTT is released for research purposes; users should consult Microsoft’s terms of use or contact the authors for commercial usage.

MSR‑VTT has become a standard benchmark for video captioning and video‑to‑text tasks. The diverse set of clips and multiple captions per clip enable models to learn a variety of descriptions and evaluate generalization across topics.

9. Conceptual Captions

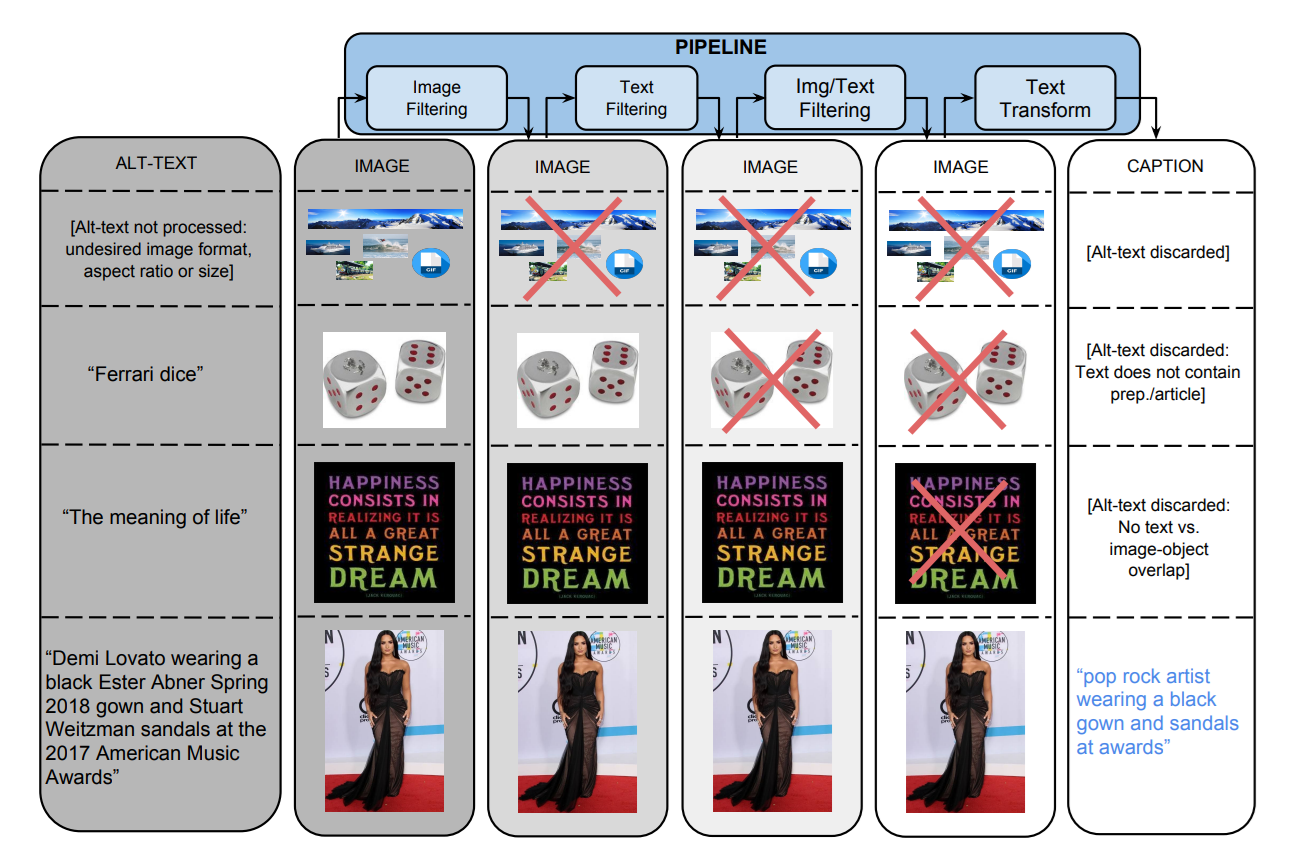

Google’s Conceptual Captions dataset (often abbreviated CC3M) contains approximately 3.3 million image-caption pairs extracted from the web. The dataset was created by automatically filtering alt‑text from billions of web pages to select high‑quality captions. Proper names and location references were replaced with more general words to help models learn general concepts.

Following are the dataset features:

- Size: ~3.3 million image–caption pairs.

- Modalities: Images paired with cleaned alt‑text captions. Each image has a single caption.

- License: The dataset may be freely used for any purpose, although acknowledgement of Google as the data source is appreciated. The license file specifies that the data is provided “as is” without warranty

Conceptual Captions provides a large‑scale, machine‑curated dataset that is diverse and less constrained than human‑annotated datasets like COCO. The dataset has been widely used to pre‑train image captioning models and is a precursor to larger datasets like LAION. Its license allows unrestricted use, making it attractive for research and industry.

10. VaTeX

VaTeX (Video And Text) is a multilingual video captioning dataset containing 41,250 videos and 825,000 captions (English and Chinese). The training set comprises 25,991 videos with 259,910 English and 259,910 Chinese captions, while the validation set includes 3,000 videos with 30,000 captions in each languageeric-xw.github.io. VaTeX aims to support tasks such as multilingual video captioning, video‑guided machine translation and video understanding.

Following are the dataset features:

- Size: 41,250 videos, 825,000 captions. Training set: 25,991 videos with 259,910 captions per language; validation set: 3,000 videos.

- Modalities: Videos (with YouTube IDs), English captions and Chinese captions. Each video has ten captions (five regular and five parallel translations) in both languages.

- License: Creative Commons Attribution 4.0 International (CC BY 4.0).

VaTeX offers one of the first large‑scale bilingual datasets for video captioning. The parallel English-Chinese captions enable research on video‑guided machine translation and cross‑modal multilingual learning. The dataset’s licensing facilitates open research and commercial use.

The following table shows the summary of our top 10 datasets.

| Dataset | Size / Scale | Modalities | License |

|---|---|---|---|

| RF100-VL (Roboflow100-VL) | 164,149 images, 1.35M annotations, 7 domains | Images, text instructions, bounding boxes, few-shot visual examples | Research benchmarking (public Roboflow Universe sources) |

| MS COCO | 330K+ images, 80 object categories, 91 stuff categories, 5 captions per image, 250,000 people with keypoints | Images, text (captions), bounding boxes, segmentation masks, keypoints, panoptic segmentation | Images: various CC licenses; annotations: CC BY 4.0 |

| Visual Genome | 108K images, 5.4M region descriptions, 1.7M Q&A pairs, 3.8M objects | Images with captions, attributes, relationships, question–answer pairs | CC BY 4.0 |

| Flickr30K Entities | 31,783 images, 158,915 captions, 276K boxes, 244K corefs | Images, text captions, bounding boxes, entity coreference annotations | Flickr30K license (research use, non-commercial; respect Flickr ToS) |

| ImageBind (Meta) | Millions of examples across multiple sources (scale varies) | Images, text, audio, depth, thermal, IMU | Research use only |

| WIT (Wikipedia Image-Text) | 37.6M image–text pairs, 11.5M unique images, 108 languages | Images with multilingual text (captions, alt text, contextual paragraphs) | CC BY-SA 3.0 |

| LAION-5B | 5.85B image–text pairs (2.32B English, rest multilingual) | Images with alt text/surrounding text, metadata (resolution, similarity) | CC BY 4.0 (respect original licenses per image) |

| MSR VTT | 10K video clips (~41.2 hrs), 200K captions | Video clips, multiple natural language captions | Research use; Microsoft terms of use apply |

| Conceptual Captions (CC3M) | ~3.3M image–caption pairs | Images with cleaned alt-text captions | Freely usable; attribution to Google appreciated |

| VaTeX | 41,250 videos, 825K captions (English + Chinese) | Videos, English captions, Chinese captions (parallel bilingual captions) | CC BY 4.0 |

Benefits of Multimodal Datasets in Computer Vision

Multimodal datasets are foundational for training and evaluating multimodal deep learning models. They provide richer and more contextual information than single‑modality datasets, which enable models to learn deeper semantic relationships. Following are the benefits of multimodal datasets in computer vision:

- Richer Contextual Understanding: Multimodal datasets provide more than one type of input (e.g., images plus text, or video plus audio). This allows models to capture context that a single modality might miss. For example, imagine an image of a dog sitting on a skateboard, without a multimodal dataset the model would detect only two objects “dog” and “skateboard”. Now, if the dataset also includes a caption like, “A dog riding a skateboard in the park,” the text provides extra context. The model can learn not only to recognize the objects, but also to connect them into a meaningful scene (the action of “riding”).

- Improved Robustness and Accuracy: By combining modalities, models can cross-check information. If an image is noisy or unclear, the text caption or audio signal can reinforce the correct interpretation. This redundancy reduces error rates and improves model reliability in real-world environments. For example, suppose you have a blurry photo where it’s hard to tell if the object is a “cat” or “dog.” A multimodal dataset that also provides a caption like “A brown dog sleeping on the couch” can guide the model toward the correct interpretation. The cross-check between vision and text reduces misclassification caused by poor image quality.

- Better Alignment with Human Perception: Humans naturally combine vision, hearing, and language when making sense of the world. Multimodal datasets allow AI systems to learn in a similar way, making them better suited for tasks such as video captioning, visual question answering, or human–robot interaction. For example, in a video of a cooking tutorial, the image shows someone stirring a pot, while the audio contains speech like “Now we add salt and mix well.” Just like humans combine what they see and hear to understand the full meaning, a multimodal dataset teaches the model to fuse both cues, enabling it to understand not only the action but also the intention (what’s being cooked, what step is happening).

- Enables Advanced Applications: Many emerging applications like autonomous vehicles, medical diagnostics, and assistive technologies require information from multiple sources (e.g., images + sensor data + natural language). Multimodal datasets are the foundation for training such systems. For example, in autonomous driving, the car uses cameras (images), LiDAR (depth sensing), and GPS + maps (structured text data). By training on multimodal datasets that combine these sources, the system can recognize a pedestrian crossing even in low-light conditions where a camera alone might fail. Here, multimodal integration enables safety-critical applications.

- Generalization Across Tasks: Training on multimodal data helps models transfer knowledge across domains. A model trained on an image-caption dataset like COCO can later be adapted to video-caption tasks. For example, after learning how to match “dog riding skateboard” with an image, the same model can generalize to recognizing “dog jumping off skateboard” in a video clip, even without retraining from scratch. This transfer across modalities and tasks makes multimodal datasets valuable for scalability.

These benefits underscore why investing in the creation and curation of multimodal datasets is crucial for advancing AI research.

Tips For Using Multimodal Datasets

Multimodal datasets are powerful but come with challenges. Here are some practical tips for working with them:

- Understand the license and usage terms: Every dataset comes with different license and terms how it can be used. Some are open for research but restricted for commercial use, while others may require special agreements. For example, the MIMIC-CXR medical dataset requires signing a Data Use Agreement, so you ca not just download and use it freely. On the other hand, LAION-5B is under CC BY 4.0, which allows broad use, but each individual image may still carry its own license. If you plan to use a dataset in a commercial project, you must first check and follow these license terms.

- Check data quality and annotation consistency: Datasets often have labelling errors or noise. Before training, you should clean them up by checking samples manually or using similarity checks. Using accurate, high-quality data makes the model learn better. For example, suppose you have an image of a cat paired with the caption “A dog playing in the park.” If you don’t fix this mismatch, the model will learn the wrong relationship between images and text. Filtering or correcting such errors ensures the model learns the correct cross-modal connections.

- Check alignment of modalities: In multimodal datasets, you must make sure different types of data line up properly. For example, if you have a dataset of images and captions, each caption should describe the right image, not a different one. Similarly, if you are working with video and audio, the speech should match the exact video segment, so the person’s lips and words are in sync.

- Handle imbalance and bias: Multimodal datasets don’t always represent all categories, modalities or languages equally. Some groups may be overrepresented while others have very little data. For example, in the WIT dataset, most captions are in English, but captions in low-resource languages are much fewer. If this imbalance isn’t handled, models may work well for English but poorly for other languages. Techniques like re-sampling, data augmentation, or balancing strategies can help reduce this bias and improve fairness.

- Preprocess each modality appropriately: Different types of data need different preparation steps before training. Images often need resizing and normalization, text requires tokenization and cleaning, and audio is usually converted into spectrograms. Therefore, it is important to pre-process data of each modality with proper technique.

- Augment with synthetic or small annotated data: If some categories or modalities are missing, you can fill the gap by creating synthetic data or adding a small set of manually labeled samples. Suppose your image-text dataset has plenty of photos of cars but very few of bicycles. You could generate synthetic bicycle images using tools like GANs or diffusion models, and pair them with short captions such as “A person riding a bicycle.” Alternatively, you could manually annotate a small set of real bicycle images with captions. This helps the model learn better balance across categories.

Where to Find Multimodal Datasets

Apart from the top datasets above, there are numerous resources where you can discover other multimodal datasets. Following are my favorite places to look for multimodal dataset:

- Roboflow Universe: Roboflow Universe is a community‑driven platform for sharing computer‑vision datasets. It hosts thousands of datasets, many of which are multimodal dataset. You can search for “multimodal” datasets on Roboflow Universe at. The platform provides dataset previews, annotation formats and export tools.

- Hugging Face Datasets: Hugging Face maintains an extensive library of datasets accessible through its Python API. You can search multimodal dataset based on tasks categories like “image-text-to-text” or “visual-question-answering” etc.

- Kaggle: Platforms like Kaggle host competitions and share the accompanying datasets. For example, the Flickr8k Image Captioning dataset is available on Kaggle. It contains 8,000+ images, each paired with captions describing the scene. Such datasets are widely used for training and fine-tuning image–text models when large-scale resources are not available.

- IEEE Dataport: A robust, research-oriented data platform developed by IEEE that allows researchers and institutions to store, share, access, and manage datasets across diverse disciplines. You can search for multimodal dataset there.

Multimodal Dataset Conclusion

Multimodal datasets are key to modern AI. They bring different modalities such as images, text, audio, and video, helping researchers build AI models that can understand the environment and reason like human. As AI systems get more advanced, the need for large, diverse, and responsibly collected datasets will only increase. The field is still growing quickly, and future breakthroughs may come from how wisely and creatively we use these resources.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Aug 18, 2025). Top 10 Multimodal Datasets. Roboflow Blog: https://blog.roboflow.com/top-multimodal-datasets/