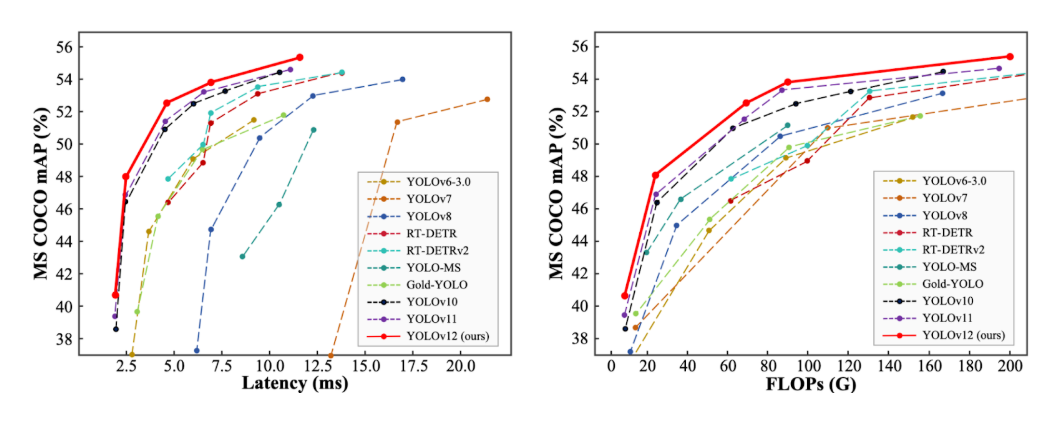

Released on February 18th, 2025, YOLOv12 is a state-of-the-art computer vision model architecture. YOLOv12 was made by researchers Yunjie Tian, Qixiang Ye, and David Doermann and introduced in the paper “YOLOv12: Attention-Centric Real-Time Object Detectors”. YOLOv12 has an accompanying open source implementation that you can use to fine-tune models.

The model achieves higher mAP than earlier real-time detectors such as YOLOv10, YOLOv11, and RT-DETR, at comparable or lower latency, when benchmarked on the Microsoft COCO dataset. Here are the performance graphs released with the model paper:

In this guide, we are going to walk through how to fine-tune a YOLOv12 model on a custom dataset. We will:

- Create a custom dataset with labeled images

- Export the dataset for use in model training

- Train the model using a Colab training notebook

- Run inference with the model

Here is an example of predictions from a model trained to identify shipping containers:

Let’s begin!

We also have an accompanying video guide:

Step #1: Install YOLOv12 and Download Dataset

To get started, open the Roboflow YOLOv12 model training notebook in Google Colab. Below, we will walk through the main steps.

First, we need to install a few dependencies:

- The YOLOv12 model, which we will use for training;

- supervision, which we will use to post-process model predictions; and

- Roboflow, which we will use to download our dataset.

YOLOv12 has been released as a GitHub repository without a pip package. Thus, we will need to build the project from source.

To install the model and its dependencies, run:

```
!git clone https://github.com/sunsmarterjie/yolov12
%cd yolov12
!pip install roboflow supervision flash-attn --upgrade -q
!pip install -r requirements.txt
!pip install -e .
!pip install --upgrade flash-attn
```

Next, we need a dataset. If you don't already have an object detection dataset, check out our Getting Started with Roboflow guide for a walk-through of how to prepare a detection dataset.
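For reference, detection datasets in the YOLO TXT format (the format the YOLOv8 export used in this guide produces) store one text file per image, with one line per object: a class index followed by the box center x, center y, width, and height, each normalized to the 0-1 range by the image size. The sketch below parses one such line and converts it to pixel corner coordinates; the function name `yolo_line_to_xyxy` is hypothetical and only illustrates the format.

```python
from typing import Tuple


def yolo_line_to_xyxy(
    line: str, img_w: int, img_h: int
) -> Tuple[int, Tuple[float, float, float, float]]:
    """Parse one YOLO TXT label line into (class_id, (x1, y1, x2, y2)) in pixels.

    Assumes the detection layout "class x_center y_center width height",
    with the four box values normalized to [0, 1].
    """
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 values, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:])
    # Scale normalized values back to pixels
    xc, w = xc * img_w, w * img_w
    yc, h = yc * img_h, h * img_h
    # Convert center/size to top-left and bottom-right corners
    return class_id, (xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)


cls, box = yolo_line_to_xyxy("2 0.5 0.5 0.25 0.5", img_w=640, img_h=480)
print(cls, box)  # 2 (240.0, 120.0, 400.0, 360.0)
```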

For this guide, we are going to use an open source shipping container dataset.

If you don't already have one, create a free Roboflow account, then copy your API key. Save it as a Colab secret named ROBOFLOW_API_KEY so the code below can read it and download the shipping container dataset.
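Inside Colab, `userdata.get` reads the key from the notebook's secrets. If you adapt this guide to run somewhere else, one option is to fall back to an environment variable. A minimal sketch, assuming the environment variable uses the same name, `ROBOFLOW_API_KEY`; the helper `get_roboflow_api_key` is hypothetical and not part of the original notebook:

```python
import os
from typing import Optional


def get_roboflow_api_key(name: str = "ROBOFLOW_API_KEY") -> Optional[str]:
    """Return the API key from Colab secrets when running in Colab, else from the environment."""
    try:
        # This import only succeeds inside Google Colab
        from google.colab import userdata
        return userdata.get(name)
    except ImportError:
        # Outside Colab: assumed fallback to an environment variable with the same name
        return os.environ.get(name)
```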

```python
from google.colab import userdata
from roboflow import Roboflow

ROBOFLOW_API_KEY = userdata.get('ROBOFLOW_API_KEY')
rf = Roboflow(api_key=ROBOFLOW_API_KEY)

project = rf.workspace("roboflow-universe-projects").project("yard-management-system")
version = project.version(11)
dataset = version.download("yolov8")
```
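Before changing `data.yaml`, it can help to see what the download wrote. The sketch below uses only the standard library to collect the top-level keys (for example `train`, `val`, `test`, `nc`, and `names`) and their values. It is a rough line-based reader for a quick look, not a YAML parser, and the helper name `top_level_entries` is hypothetical.

```python
from pathlib import Path
from typing import Dict


def top_level_entries(yaml_path: str) -> Dict[str, str]:
    """Collect 'key: value' pairs from unindented lines of a YAML file.

    Nested blocks and list items are skipped, and keys whose values
    continue on the following lines (such as a names list) come back empty.
    """
    entries: Dict[str, str] = {}
    for line in Path(yaml_path).read_text().splitlines():
        # Skip blank lines, comments, list items, and indented (nested) lines
        if not line.strip() or line.startswith(("#", "-", " ", "\t")):
            continue
        key, sep, value = line.partition(":")
        if sep:
            entries[key.strip()] = value.strip()
    return entries


# In the notebook: print(top_level_entries(f"{dataset.location}/data.yaml"))
```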

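We need to make a few changes to our downloaded dataset so it will work with YOLOv12. The commands below delete the last four lines of data.yaml, then append relative paths to the test, train, and validation image folders. Run them to prepare your dataset for training: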

```
!sed -i '$d' {dataset.location}/data.yaml
!sed -i '$d' {dataset.location}/data.yaml
!sed -i '$d' {dataset.location}/data.yaml
!sed -i '$d' {dataset.location}/data.yaml
!echo -e "test: ../test/images\ntrain: ../train/images\nval: ../valid/images" >> {dataset.location}/data.yaml
```

Step #2: Train a YOLOv12 model

We are now ready to train a YOLOv12 model.

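Below, replace yolov12s.yaml with the model you want to train. Passing a .yaml configuration, as in the example, builds the architecture from scratch; passing a .pt checkpoint starts training from pretrained weights. The available checkpoints are: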

- yolov12n.pt

- yolov12s.pt

- yolov12m.pt

- yolov12l.pt

- yolov12x.pt

We recommend training for at least 250 epochs.
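Every size follows the same naming pattern: `yolov12` plus one of n, s, m, l, or x, with `.yaml` for the architecture configuration and `.pt` for pretrained weights. A tiny helper (the name `yolov12_model_source` is hypothetical) that builds the name and catches typos before a long training run starts:

```python
SIZES = ("n", "s", "m", "l", "x")


def yolov12_model_source(size: str, pretrained: bool) -> str:
    """Return the file name to pass to YOLO(): a .pt checkpoint or a .yaml config."""
    if size not in SIZES:
        raise ValueError(f"size must be one of {SIZES}, got {size!r}")
    # .pt loads pretrained weights; .yaml builds the architecture with fresh weights
    return f"yolov12{size}.{'pt' if pretrained else 'yaml'}"
```

For example, `YOLO(yolov12_model_source("s", pretrained=False))` is equivalent to the `YOLO('yolov12s.yaml')` call used for training in this guide.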

Once you have set your training checkpoint, run the code cell below to start training.

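```python
from ultralytics import YOLO

# Build YOLOv12-S from its config; pass a .pt checkpoint instead to start from pretrained weights
model = YOLO('yolov12s.yaml')

# Train on the downloaded dataset; data.yaml lists the class names and split paths
results = model.train(
    data=f'{dataset.location}/data.yaml',
    epochs=250
)
```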

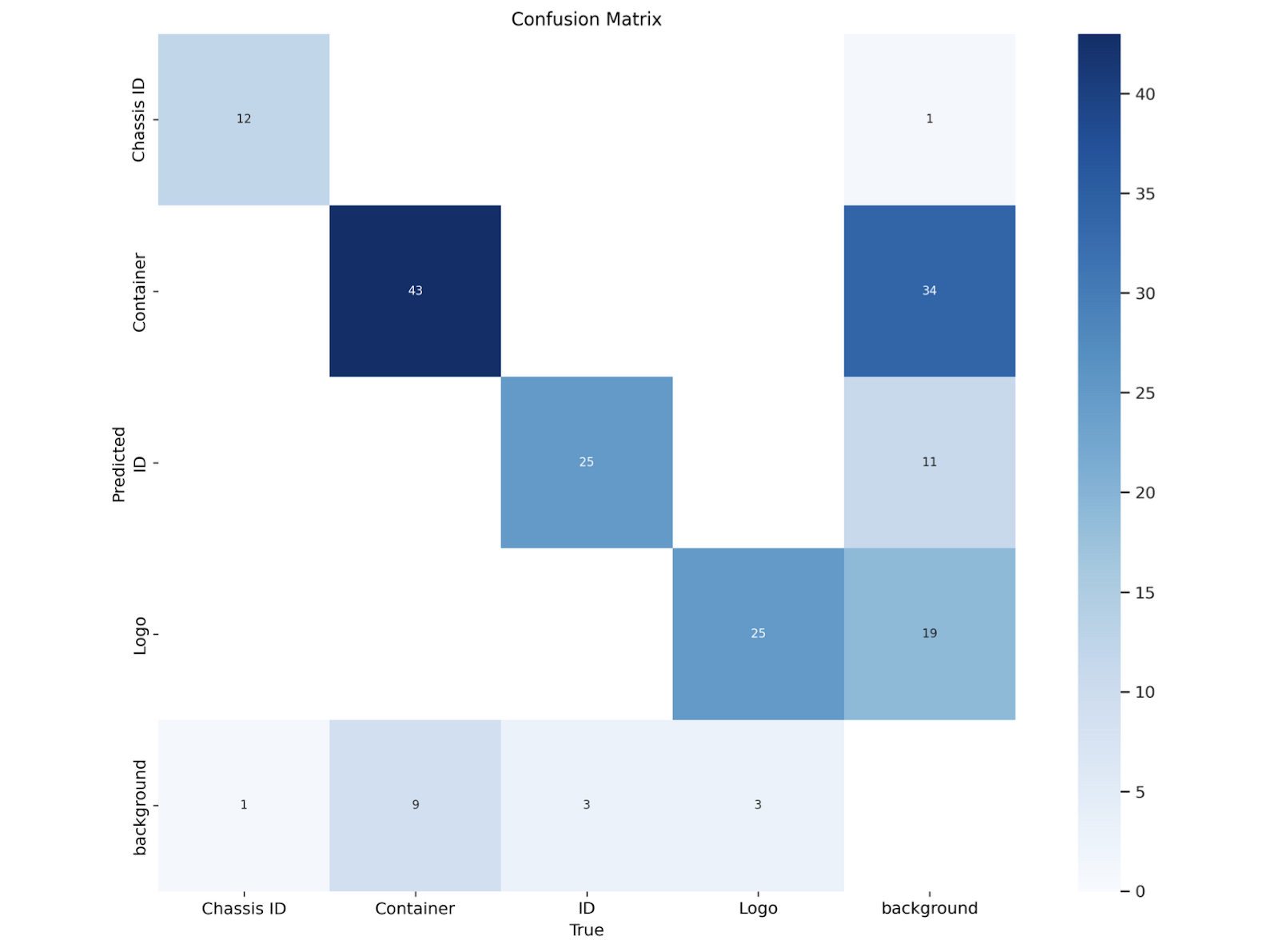
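The paths used in the rest of this guide assume training wrote to `runs/detect/train`. Ultralytics names later runs in the same project `train2`, `train3`, and so on, so after a second training run those paths would still point at the first one. A minimal sketch, using only the standard library, that finds the highest-numbered run folder (the helper name `latest_train_dir` is hypothetical):

```python
import re
from pathlib import Path
from typing import Optional


def latest_train_dir(detect_dir: str = "runs/detect") -> Optional[Path]:
    """Return the train run directory with the highest number (train, train2, train3, ...)."""
    best: Optional[Path] = None
    best_n = -1
    for path in Path(detect_dir).glob("train*"):
        match = re.fullmatch(r"train(\d*)", path.name)
        if match is None or not path.is_dir():
            continue
        # "train" is the first run; "trainN" is the N-th run
        n = int(match.group(1)) if match.group(1) else 1
        if n > best_n:
            best, best_n = path, n
    return best
```

In the notebook, `latest_train_dir('/content/yolov12/runs/detect') / 'weights' / 'best.pt'` would then point at the newest run's best weights. Comparing the numeric suffix, rather than sorting names as strings, keeps `train10` ahead of `train2`.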

You can view your model's confusion matrix with this code:

```python
from IPython.display import Image

Image(filename='/content/yolov12/runs/detect/train/confusion_matrix.png', width=600)
```

Here is the confusion matrix after training a model for 250 epochs on the shipping container dataset:

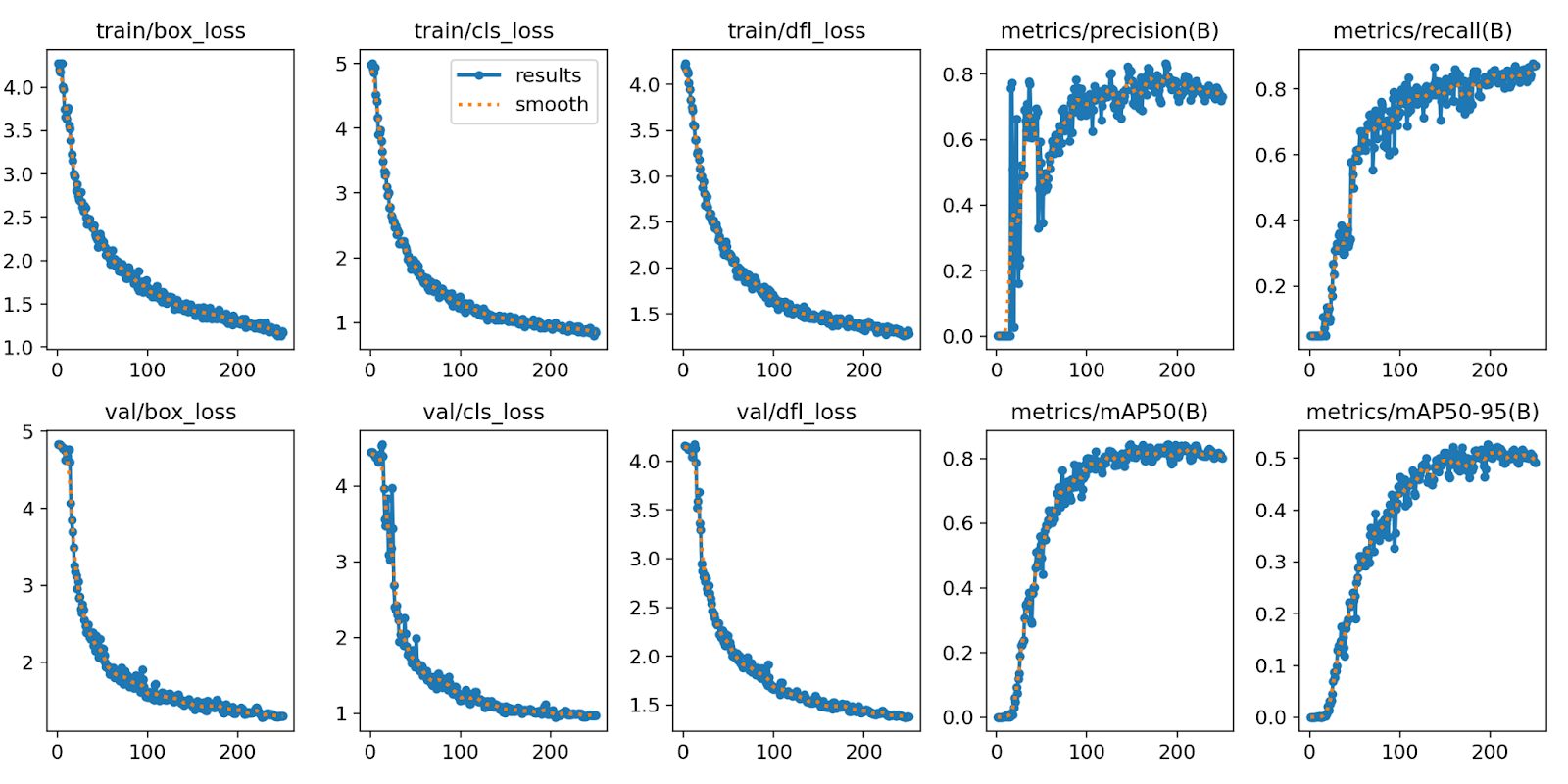
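Per-class precision and recall can be read straight off a confusion matrix of raw counts. The sketch below assumes the layout of the plot above: rows are predicted classes, columns are true classes, and the last row and column are reserved for background. Check your own matrix's axis labels before relying on it; the function name is hypothetical.

```python
from typing import List, Tuple


def per_class_precision_recall(matrix: List[List[int]]) -> List[Tuple[float, float]]:
    """Return (precision, recall) for each class from a square confusion matrix of counts.

    Assumes matrix[predicted][true], with the final row/column for background.
    """
    n_classes = len(matrix) - 1  # exclude the background row/column
    scores = []
    for c in range(n_classes):
        tp = matrix[c][c]
        predicted_as_c = sum(matrix[c])              # row sum: everything predicted as c
        actually_c = sum(row[c] for row in matrix)   # column sum: every true instance of c
        precision = tp / predicted_as_c if predicted_as_c else 0.0
        recall = tp / actually_c if actually_c else 0.0
        scores.append((precision, recall))
    return scores


example = [
    [8, 1, 1],  # predicted class 0
    [0, 9, 2],  # predicted class 1
    [2, 0, 0],  # predicted background (missed objects)
]
print(per_class_precision_recall(example))
```

Here class 0 gets precision 8/10 and recall 8/10, while class 1 gets precision 9/11 and recall 9/10: the extra background false positives in row 1 lower its precision but not its recall.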

You can view your training graphs with this code:

```python
Image(filename='/content/yolov12/runs/detect/train/results.png', width=600)
```
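The training graphs are drawn from a `results.csv` file saved in the same run directory. To find the epoch with the best validation mAP numerically, a sketch like the following can help. It assumes the column names `epoch` and `metrics/mAP50-95(B)` written by recent Ultralytics releases (check your CSV header if they differ) and strips whitespace from column names in case they are padded. The helper name `best_epoch` is hypothetical.

```python
import csv
from typing import Dict, Tuple


def best_epoch(results_csv: str, metric: str = "metrics/mAP50-95(B)") -> Tuple[int, float]:
    """Return (epoch, value) for the row with the highest value of `metric`."""
    best: Tuple[int, float] = (-1, float("-inf"))
    with open(results_csv, newline="") as f:
        for row in csv.DictReader(f):
            # Normalize padded column names and values
            clean: Dict[str, str] = {k.strip(): v.strip() for k, v in row.items() if k is not None}
            value = float(clean[metric])
            if value > best[1]:
                best = (int(float(clean["epoch"])), value)
    return best


# In the notebook: best_epoch('/content/yolov12/runs/detect/train/results.csv')
```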

Step #3: Run inference with trained model

We can run our trained model on a random image from the `valid` set of our dataset using the following code:

```python
import random

import cv2
import supervision as sv
from ultralytics import YOLO

# Load the best weights saved during training
model = YOLO('/content/yolov12/runs/detect/train/weights/best.pt')

# Load the validation split (images + ground truth labels) as a supervision DetectionDataset
ds = sv.DetectionDataset.from_yolo(
    images_directory_path=f"{dataset.location}/valid/images",
    annotations_directory_path=f"{dataset.location}/valid/labels",
    data_yaml_path=f"{dataset.location}/data.yaml"
)

# Pick a random validation image and read it from disk
# (recent supervision releases expose image_paths; older ones used ds.images)
image_path = random.choice(ds.image_paths)
image = cv2.imread(image_path)

# Run the model, convert the output to supervision Detections, and apply NMS
results = model(image)[0]
detections = sv.Detections.from_ultralytics(results).with_nms()

# Draw boxes and class labels on a copy of the image
box_annotator = sv.BoxAnnotator()
label_annotator = sv.LabelAnnotator()
annotated_image = box_annotator.annotate(scene=image.copy(), detections=detections)
annotated_image = label_annotator.annotate(scene=annotated_image, detections=detections)

sv.plot_image(annotated_image)
```

Above, we load the valid split of our dataset into a supervision DetectionDataset object. This object contains the ground truth annotations and images in our dataset. We then choose a random image from the valid split, run our fine-tuned model on the image, then display the results.
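The `.with_nms()` call in the inference code removes duplicate, heavily overlapping boxes with non-maximum suppression (NMS). As a rough illustration of the idea only (not supervision's implementation; its defaults, such as the IoU threshold and per-class handling, may differ), here is a class-agnostic greedy NMS in plain Python. The function names are hypothetical.

```python
from typing import List, Sequence


def iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(
    boxes: List[Sequence[float]], scores: List[float], iou_threshold: float = 0.5
) -> List[int]:
    """Return indices of the boxes kept by greedy, class-agnostic NMS."""
    # Visit boxes from highest to lowest confidence
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
print(greedy_nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

The second box overlaps the first with an IoU of 0.81, so it is suppressed; the third box does not overlap either and is kept.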

Here are the results:

Our model successfully identifies the container, the container ID, the container logo, and the chassis ID: four classes from our dataset, all of which appear in the image above.

Conclusion

YOLOv12 is a computer vision model developed by Yunjie Tian, Qixiang Ye, and David Doermann. You can train object detection models with the YOLOv12 architecture.

In this guide, we walked through how to train a YOLOv12 model. We prepared a dataset in Roboflow, then exported the dataset in the YOLOv8 PyTorch TXT format (compatible with YOLOv12) for use in training a model. We trained our model in a Google Colab environment from the yolov12s.yaml configuration, reviewed its confusion matrix and training graphs, and ran it on images from our validation set.

The full code from this guide is available as a notebook. To explore more training tutorials, check out Roboflow Notebooks, our open source repository of computer vision notebooks.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 19, 2025). How to Train a YOLOv12 Object Detection Model on a Custom Dataset. Roboflow Blog: https://blog.roboflow.com/train-yolov12-model/