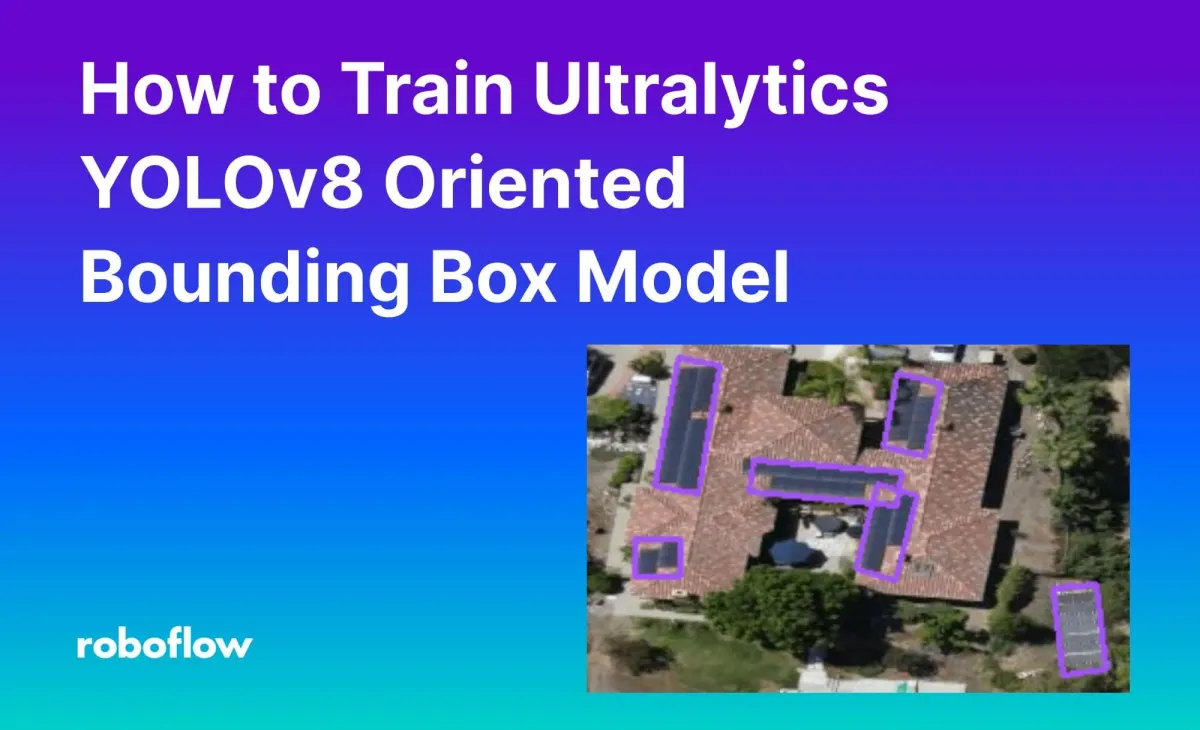

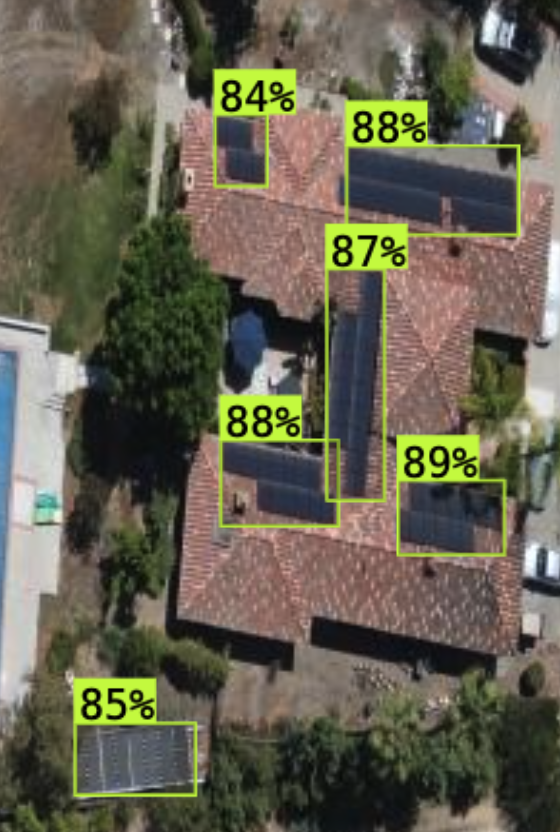

Object detection models return bounding boxes. These boxes indicate where an object of interest is in an image. In many models, such as YOLOv8, bounding box coordinates are horizontally-aligned. This means that there will be spaces around angled objects. The following image shows an object detection prediction on a solar panel:

You can retrieve bounding boxes whose edges match an angled object by training an oriented bounding boxes object detection model.
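To get a feel for how much empty space a horizontally aligned box can include, here is a minimal sketch using only the Python standard library. The panel size, position, and rotation angle are made-up values for illustration, and `rotated_corners` and `axis_aligned_area` are hypothetical helpers, not part of any library used in this guide.

```python
import math

def rotated_corners(cx, cy, w, h, angle_deg):
    """Return the four corners of a w x h rectangle centered at (cx, cy),
    rotated by angle_deg degrees around its center."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * cos_a - y * sin_a, cy + x * sin_a + y * cos_a) for x, y in half]

def axis_aligned_area(corners):
    """Area of the smallest horizontally aligned box that contains all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))

# Hypothetical solar panel: 200 x 100 pixels, rotated by 30 degrees
corners = rotated_corners(320, 240, 200, 100, 30)
object_area = 200 * 100
box_area = axis_aligned_area(corners)

# Fraction of the horizontally aligned box that the panel actually covers
fill_ratio = object_area / box_area
print(f"Panel fills {fill_ratio:.0%} of its horizontally aligned box")
```

In this made-up case, the panel covers a little under half of the horizontally aligned box. An oriented box with the same corners as the panel would have no such slack.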

In this guide, we are going to show how you can train a YOLOv8 Oriented Bounding Boxes (YOLOv8-OBB) model on a custom dataset.

Without further ado, let’s get started!

Step #1: Collect Data

Before we can train a model, we need a dataset with which to work. For this guide, we are going to train a model to detect solar panels. Such a model could be used for aerial surveying by an ordnance survey organization to better understand adoption of solar panels in an area.

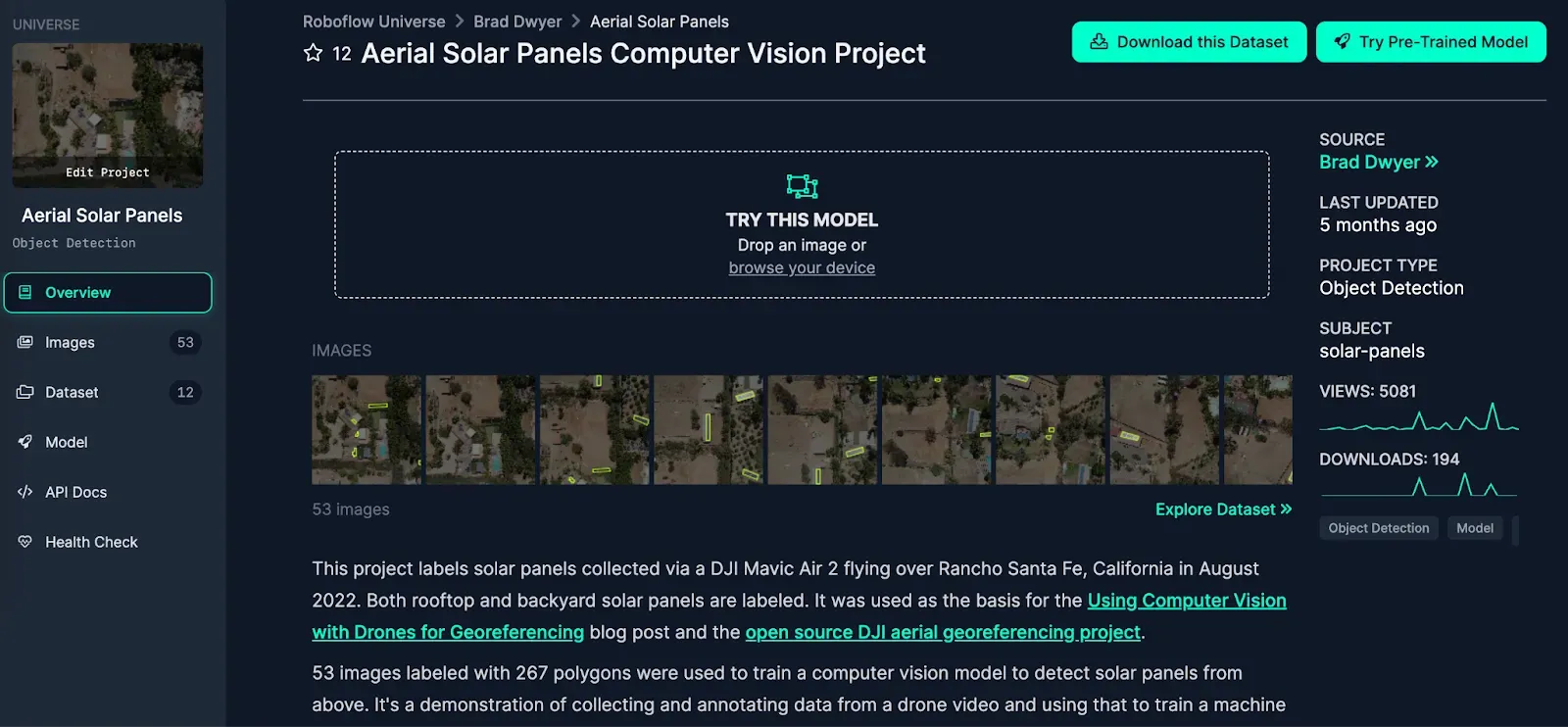

To gather data, we are going to use a dataset from Roboflow Universe, a community where over 250,000 public computer vision datasets have been shared on the web. We will use the Aerial Solar Panels dataset. This dataset contains 53 aerial images of solar panels.

If you have another use case in mind, you can use Roboflow Universe to find images for use in training your model. If you already have images to use in training your model, you can skip to the next step!

Step #2: Create a Roboflow Project

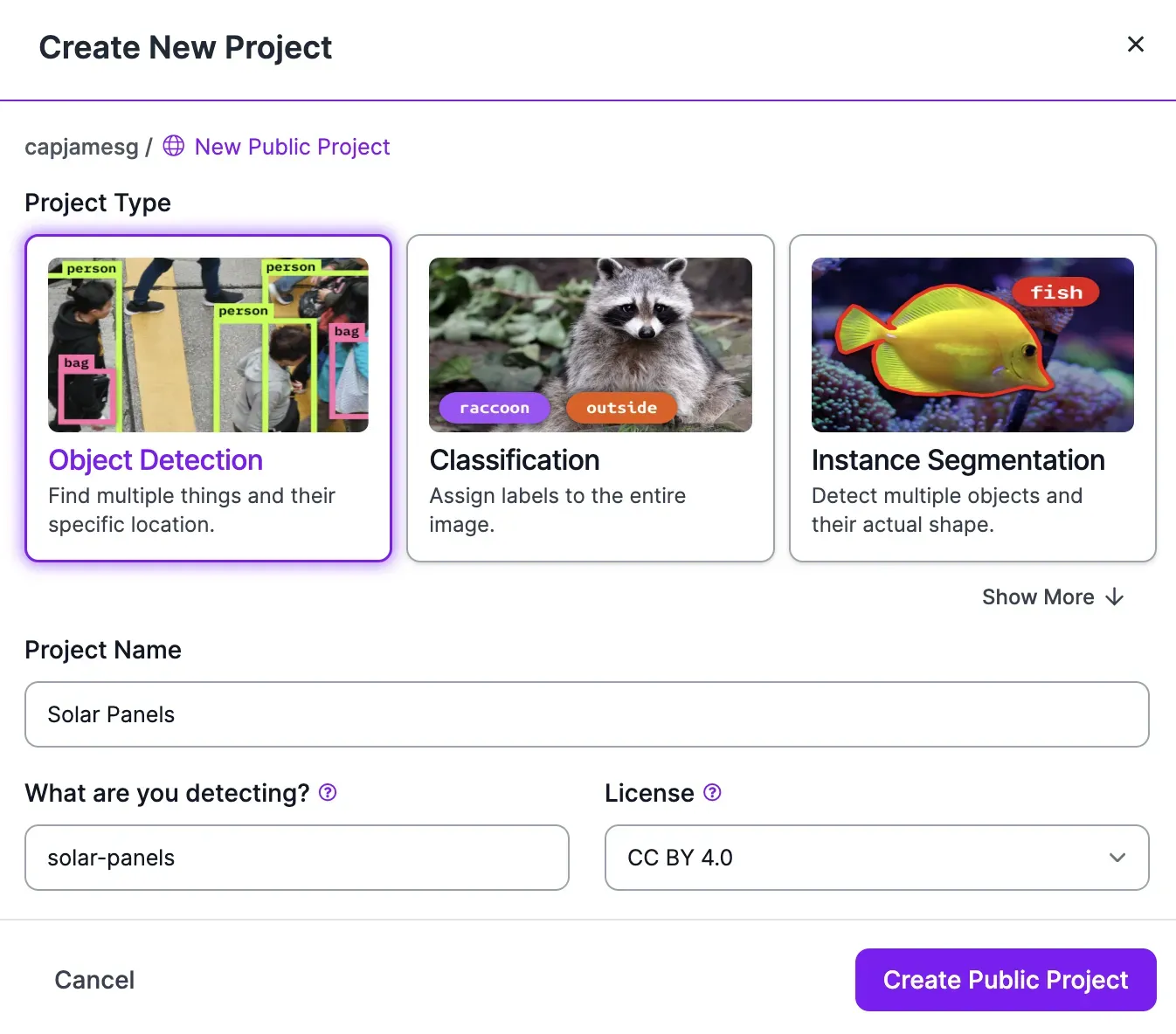

We need to label our images with oriented bounding boxes so that our model knows exactly where objects of interest are in an image. We are going to annotate our data in Roboflow Annotate.

To start labeling, first create a free Roboflow account. Then, click the “Create a Project” button on your Roboflow dashboard. Create an Object Detection project.

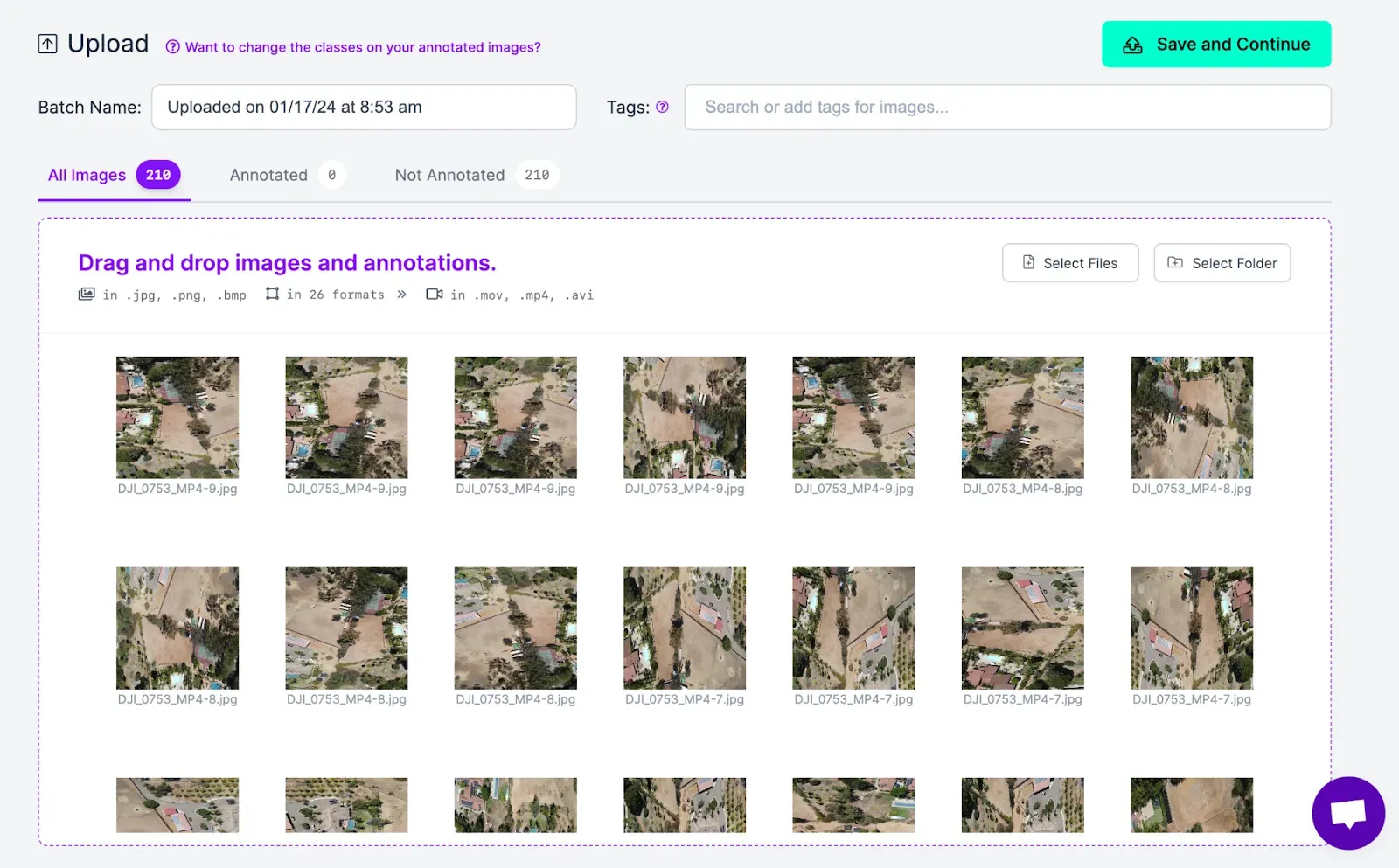

Once you have created a project, you will be asked to upload data in the web interface. You can do so by dragging images you want to label into the web application. If you already have YOLOv8 OBB labels, you can upload them in the same web interface alongside your images.

If you are working with the solar panel dataset, upload the images in the “train” folder.

Click “Save and Continue” to confirm the upload of your images to the Roboflow platform.

Step #3: Annotate Data with OBB Labels

With your images uploaded, you are ready to annotate your images.

To start labeling your images, click “Annotate” in the left sidebar. Then, choose an image that you want to label. When you select an image, you will be taken into the Roboflow Annotate web interface:

For a traditional object detection model, we would use a bounding box annotation tool, which enables us to draw a horizontally-aligned box around objects of interest. These annotations will be used during the training process to help a model learn how to identify objects.

For OBB, we need to use a separate annotation tool: the polygon tool. This tool allows us to draw arbitrary points around an object. You must draw four points around objects of interest, one for each corner of the object we want to identify.

To label with the polygon tool, select the polygon icon in the left sidebar, or press P on your keyboard. Click where you want to add the first point of your polygon on the image. Then, trace a box around the object of interest.
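For context, the Ultralytics OBB label format stores each object as one line of text: a class index followed by the four corner points, with coordinates normalized to the 0-1 range. The sketch below shows how such a line maps back to pixel coordinates. The label line and image size are made up for illustration, `parse_obb_label` is a hypothetical helper, and the labels you export from Roboflow may differ in detail.

```python
def parse_obb_label(line, image_width, image_height):
    """Parse 'class x1 y1 x2 y2 x3 y3 x4 y4' (normalized values)
    into a class index and four pixel-space corner points."""
    parts = line.split()
    if len(parts) != 9:
        raise ValueError("Expected a class index followed by 8 coordinates")
    class_id = int(parts[0])
    values = [float(v) for v in parts[1:]]
    # Pair up the values as (x, y) and scale them to pixel coordinates
    corners = [
        (values[i] * image_width, values[i + 1] * image_height)
        for i in range(0, 8, 2)
    ]
    return class_id, corners

# Made-up label line for a single object in a 640 x 640 image
example_line = "0 0.25 0.25 0.75 0.5 0.625 0.75 0.125 0.5"
class_id, corners = parse_obb_label(example_line, 640, 640)
print(class_id, corners)
```

Each of the four points you click with the polygon tool corresponds to one (x, y) pair in a line like this.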

Repeat this process for all images in your dataset.

Step #4: Create a Dataset Version

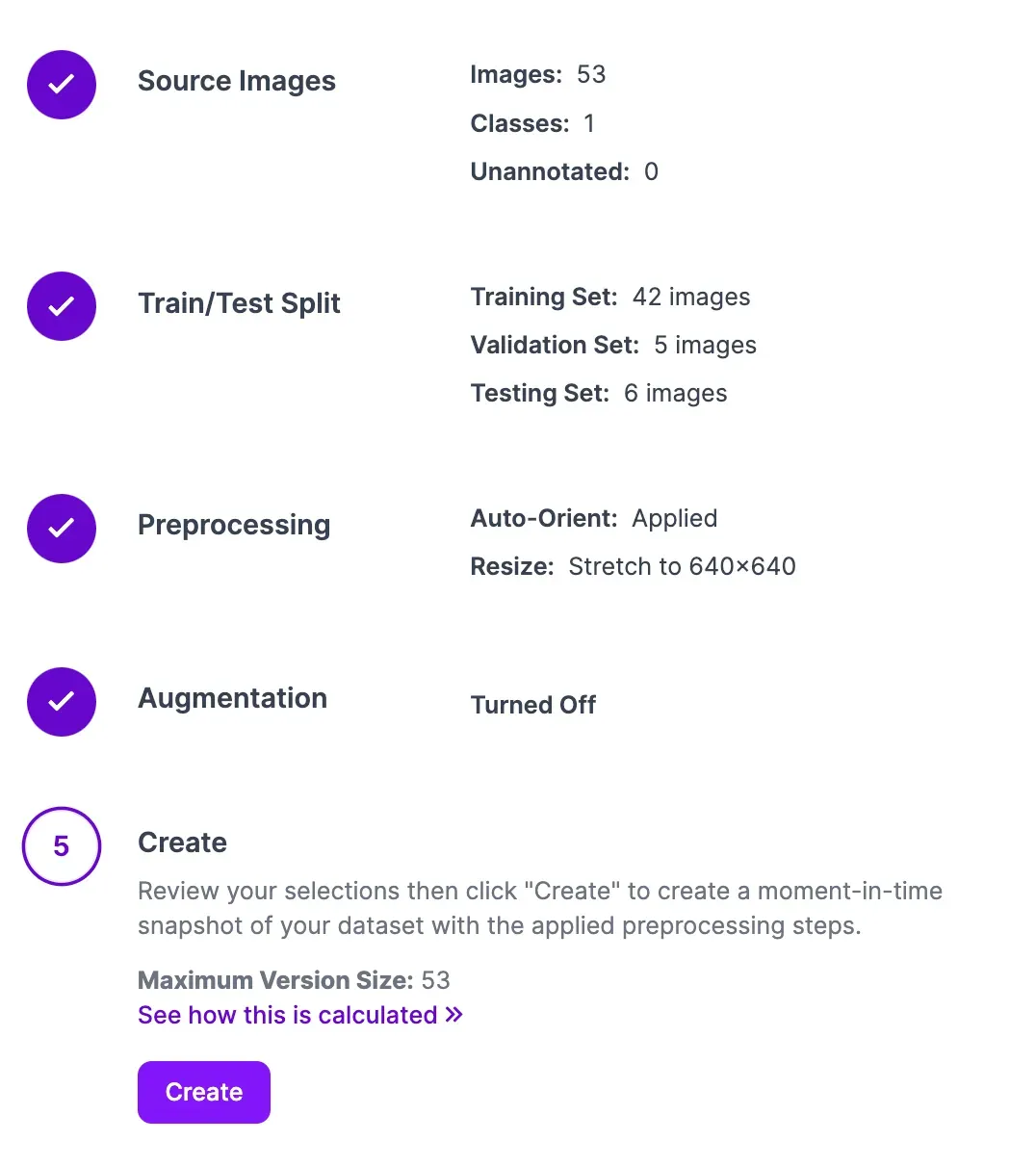

Once you have labeled all of your data, you are ready to create a dataset version. A dataset version is a snapshot of your labeled data that is frozen in time. You can use the dataset version feature to track how your dataset changes over time.

You can add augmented images and apply preprocessing steps to images when you create a dataset version.

To create a dataset version, click Generate in the left sidebar of your Roboflow project.

You will be asked to configure your dataset version. In the train/test split section, aim to have a 70/20/10 split of data between your train, validation, and test sets. This will ensure you have enough images on which to train your model and evaluate its performance.
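Roboflow performs the split for you, but the arithmetic is easy to check by hand. The sketch below is an illustration only: it assumes the 70/20/10 proportions map to train, validation, and test in that order, uses placeholder file names, and relies on a hypothetical `split_dataset` helper.

```python
import random

def split_dataset(items, train=0.7, valid=0.2, seed=0):
    """Shuffle items and split them into train, validation, and test lists.
    Whatever is left after the train and validation shares goes to test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_valid = round(len(shuffled) * valid)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_valid],
        shuffled[n_train + n_valid:],
    )

# Placeholder names standing in for the 53 images in the solar panel dataset
image_names = [f"image_{i:02d}.jpg" for i in range(53)]
train_set, valid_set, test_set = split_dataset(image_names)
print(len(train_set), len(valid_set), len(test_set))  # 37 11 5
```

With only 53 images, the test set ends up with just a handful of examples, which is worth keeping in mind when you interpret evaluation results.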

At the Preprocessing and Augmentation stages, you can apply preprocessing steps like image resizing, greyscale, dynamic crop, as well as augmentations like brightness changes.

We recommend leaving these values as their defaults for your first model. This allows you to learn how your dataset performs when used to train a model without any changes. Indeed, if your dataset is not well annotated or contains images not representative of your use case, augmentations will not assist you in improving model performance.

To learn more about preprocessing and augmentation, refer to our image preprocessing and augmentation guide.

Once you have configured your dataset version, click Continue. Your dataset version will be generated.

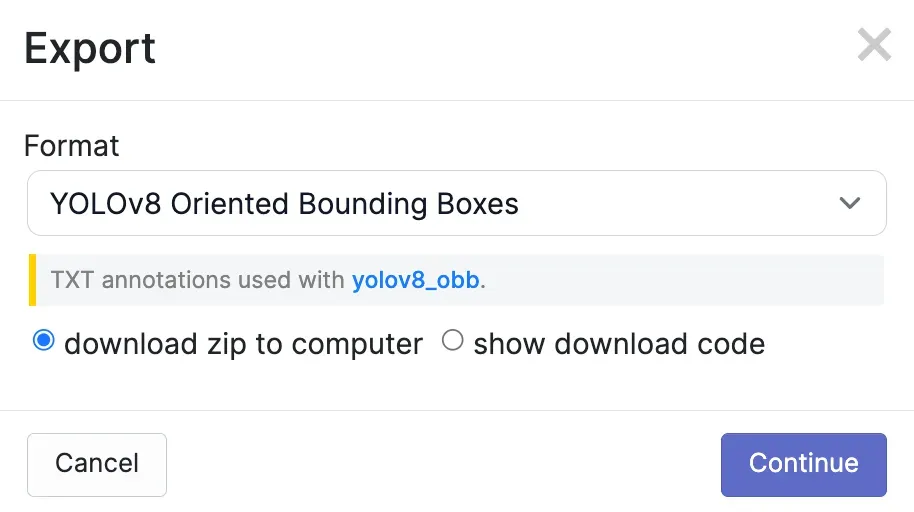

Step #5: Export Data

Roboflow Train does not support training YOLOv8 OBB models. With that said, you can export image data from Roboflow for use in training an OBB model.

We are going to train our model in a Google Colab notebook. Colab is an interactive programming environment offered by Google that provides free access to a T4 GPU, which you can use to train your computer vision model.

We have made a YOLOv8 OBB notebook to follow along with this guide. Open the YOLOv8 OBB notebook.

To get started, we need to export our data from Roboflow into our notebook. We can do so using the Roboflow Python package.

On your dataset page, click “Export Data”. Select “Get download code”. A code snippet will appear that you can use to export your labeled dataset. Run this code to download your data into your programming environment.

The code will look like this:

    from roboflow import Roboflow

    rf = Roboflow(api_key="API_KEY")
    project = rf.workspace("capjamesg").project("metal-defect-detection-d3wl6")
    dataset = project.version(2).download("yolov8-obb")

Your API key will be filled in when you download the snippet.

We need to make a change to the data.yaml file in our dataset before we can train our model. Run the following code to set the path value in the YAML file to the location of the downloaded dataset, which is required to train our model:

    import yaml

    # Read the dataset configuration
    with open(f'{dataset.location}/data.yaml', 'r') as file:
        data = yaml.safe_load(file)

    # Point the dataset root at the downloaded dataset folder
    data['path'] = dataset.location

    # Write the updated configuration back to disk
    with open(f'{dataset.location}/data.yaml', 'w') as file:
        yaml.dump(data, file, sort_keys=False)

Step #6: Train a YOLOv8 OBB Model

Now that we have a dataset ready, we can train a YOLOv8 OBB model. To get started, you need to install the ultralytics pip package. You can do so using the following command:

    pip install "ultralytics<=8.3.40"

Add the following code to your notebook to start training a model:

    from ultralytics import YOLO

    # Load a pre-trained OBB checkpoint
    model = YOLO("yolov8n-obb.pt")

    # Train on the dataset configuration we updated above
    results = model.train(data=f"{dataset.location}/data.yaml", epochs=100, imgsz=640)

In this code, we import the Ultralytics library, load a pre-trained OBB model, then train that model using the custom dataset that we labeled in Roboflow. Our training job will run for 100 epochs.

The amount of time training takes will depend on the hardware on which you are running and how many images are in your dataset.

Step #7: Test the Model

Once your model has trained, you can test it on an image in your dataset.

To visualize results from our model, we will use the supervision Python package. supervision provides an extensive suite of utilities for working with vision models, including annotation tools for use in visualizing bounding boxes and polygons.

To install supervision, run:

    pip install supervision

Let’s test our model on a random image from our test set. We can do so using the following code:

    import os
    import random

    import cv2
    import supervision as sv

    # Load the best weights from training. The run folder (train, train2, ...)
    # depends on how many training runs you have started.
    model = YOLO('runs/obb/train2/weights/best.pt')

    # Pick a random image from the test set and run inference on it
    random_file = random.choice(os.listdir(f"{dataset.location}/test/images"))
    file_name = os.path.join(f"{dataset.location}/test/images", random_file)
    results = model(file_name)

    # Convert the predictions to supervision detections and draw the oriented boxes
    detections = sv.Detections.from_ultralytics(results[0])
    oriented_box_annotator = sv.OrientedBoxAnnotator()
    annotated_frame = oriented_box_annotator.annotate(
        scene=cv2.imread(file_name),
        detections=detections
    )

    sv.plot_image(image=annotated_frame, size=(16, 16))

Our model successfully identified the location of the solar panel. Instead of returning horizontally aligned bounding boxes, our model returned oriented bounding boxes (polygons). There is little space between the object of interest, the solar panel, and the box around it.

Below are results from a regular object detection model without OBB (left) and a model with OBB (right). These results have been cropped to highlight the region of interest as it can be hard to see the difference in bounding box positions without zooming in.

In the first image above, the bounding box is not as tightly aligned as it is in the OBB model results.

Conclusion

YOLOv8 Oriented Bounding Boxes (OBB) models identify objects of interest with a closer fit than a traditional YOLOv8 model. Oriented bounding boxes are angled to fit box-shaped objects, whereas object detection models without oriented boxes return predictions that are horizontally aligned.

In this guide, we walked through how to train a YOLOv8 OBB model. We collected data from Roboflow Universe, labeled images with polygons in Roboflow, generated a dataset version, and trained a model using our dataset.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 6, 2024). How to Train a YOLOv8 Oriented Bounding Box (OBB) Model. Roboflow Blog: https://blog.roboflow.com/train-yolov8-obb-model/