When we train computer vision models, we often take ideal photos of our subjects. We line up our subject just right and curate datasets of best case lighting.

But our deep learning models in production aren't so lucky.

Deliberately introducing imperfections into our datasets is essential to making our machine learning models more resilient to the harsh realities they'll encounter in real world situations.

Degrading image quality is exactly the type of task that can be completed in post processing – without the headache of collecting more data and needing to label it.

The Impact of Blur

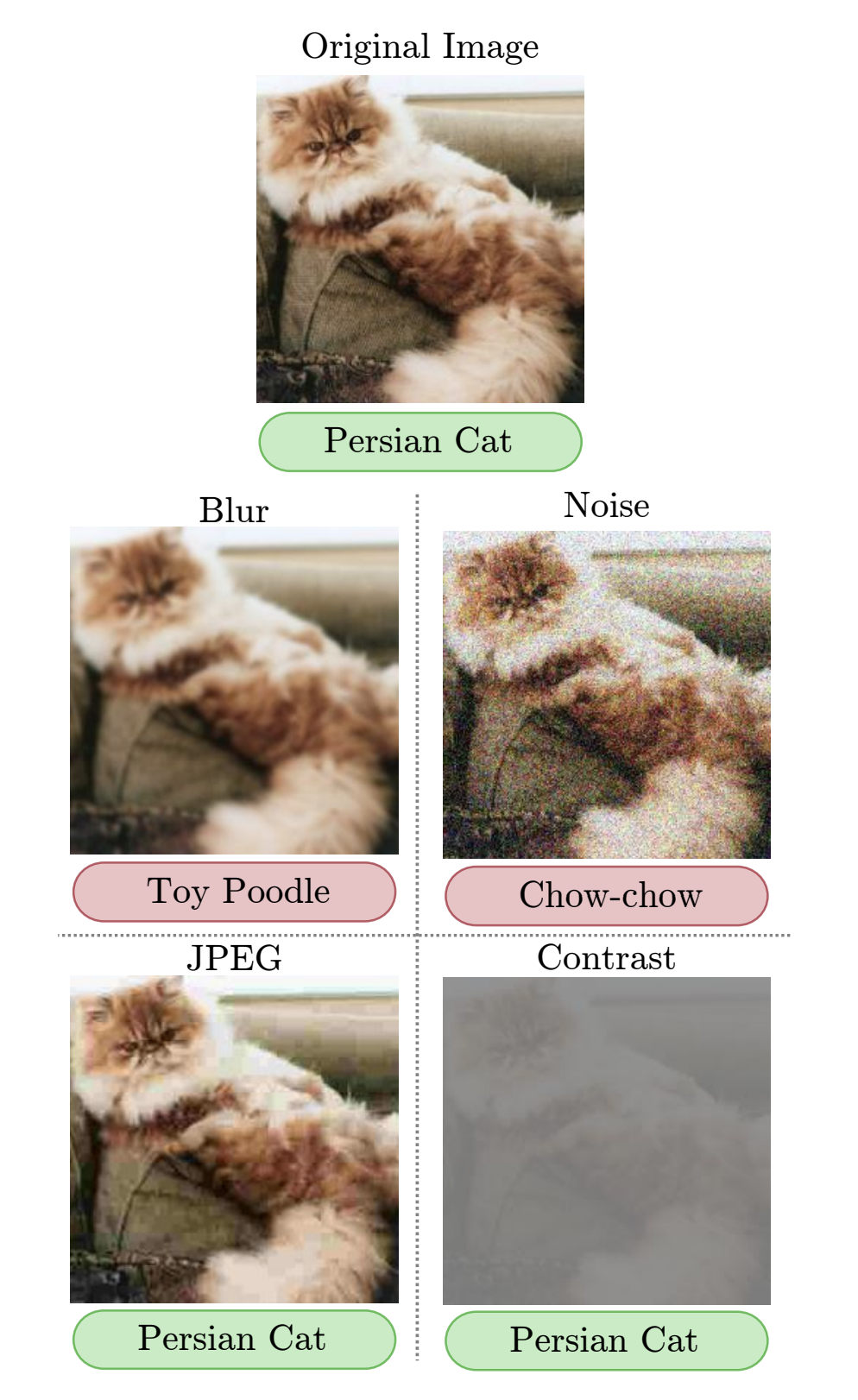

Many types of imperfection can make their way into an image: blur, poor contrast, noise, JPEG compression, and more. Of these, blurring is among the most detrimental.

Researchers from Arizona State University considered the impact blurring may have on image classification, especially as compared to other techniques. In their research, blur and noise had the most adverse consequences on simple classification tasks from a variety of convolutional neural network architectures.

Researchers suspect blur particularly obscures convolution's ability to locate edges in early levels of feature abstraction, causing inaccurate feature abstraction early in a network's training.

Blur Use Cases

Blur can be applied as a preprocessing technique or image augmentation technique. If all images in production may have blur involved, all images in training, validation, and testing should be blurred in preprocessing. More commonly, however, only some images may have blur.

Consider:

- A camera is stationary, but objects it is detecting are often moving; e.g. a camera is watching traffic

- A camera is moving, but objects it is detecting are stationary; e.g. a user has a mobile app to scan a book cover

- A camera and its objects its detecting are moving; e.g. a drone is flying over a windy field of crops to detect

In each of these examples, blur is a useful augmentation step. That is, some of our images are varied in random amounts to simulate different amounts of blur.

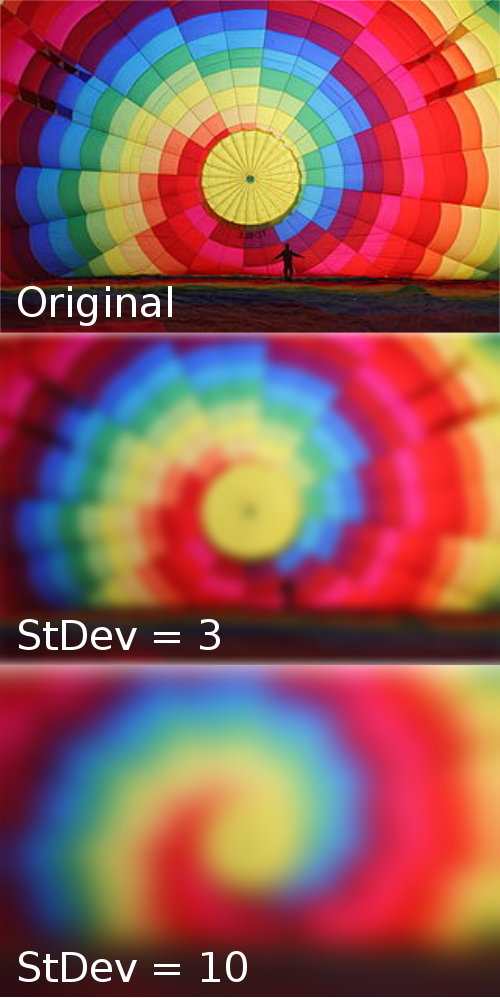

Implementing Blur

At its core, blurring an image involves taking neighboring pixels and averaging them – in effect, reducing detail and creating what we perceive as blur. Thus, at its core, when we implement different amounts of blur, we're determining how many neighboring pixels to include. We measure this spread from a single pixel as the standard deviation in both the horizontal (X) and vertical (Y) direction. The larger the standard deviation, the more blur an image receives.

Libraries like open CV have implementations of Gaussian filters that create Gaussian blur for use in open source python scripts. Leveraging these methods, you can add a random amount of blue to each of your images in your training pipeline. It's recommended that you vary the degree of blur input images receive to more closely mirror real world situations.

Alternatively, Roboflow enables you to easily set a maximum amount of Gaussian blur you'd like an individual image to receive (say, n), and each image in the training set receives anywhere from (0,n) amount of blur, sampled in a uniform random manner.

Roboflow also keeps a log of how each image was varied so you can easily see what level of blur may be most problematic.

Happy building!

Cite this Post

Use the following entry to cite this post in your research:

Joseph Nelson. (Mar 13, 2020). The Importance of Blur as an Image Augmentation Technique. Roboflow Blog: https://blog.roboflow.com/using-blur-in-computer-vision-preprocessing/