A water meter measures the volume of water used by a residential or commercial building. It helps utility companies and property owners monitor water consumption for billing purposes, leak detection, and resource management.

Water meters are often read manually, which is time-consuming and labour-intensive, especially when the meters are in remote locations or when a large number of meters must be monitored. This is where computer vision can help.

Furthermore, entering water meter readings by hand is prone to error. If a digit is mistyped, which is easy to do in the dark and cramped spaces where many water meters are installed, it may take a while to rectify the mistake.

In this blog post we will learn how to build a computer vision system to read data from water meters and transmit the data remotely.

Automate Remote Water Meter Reading using AI: How the System Works

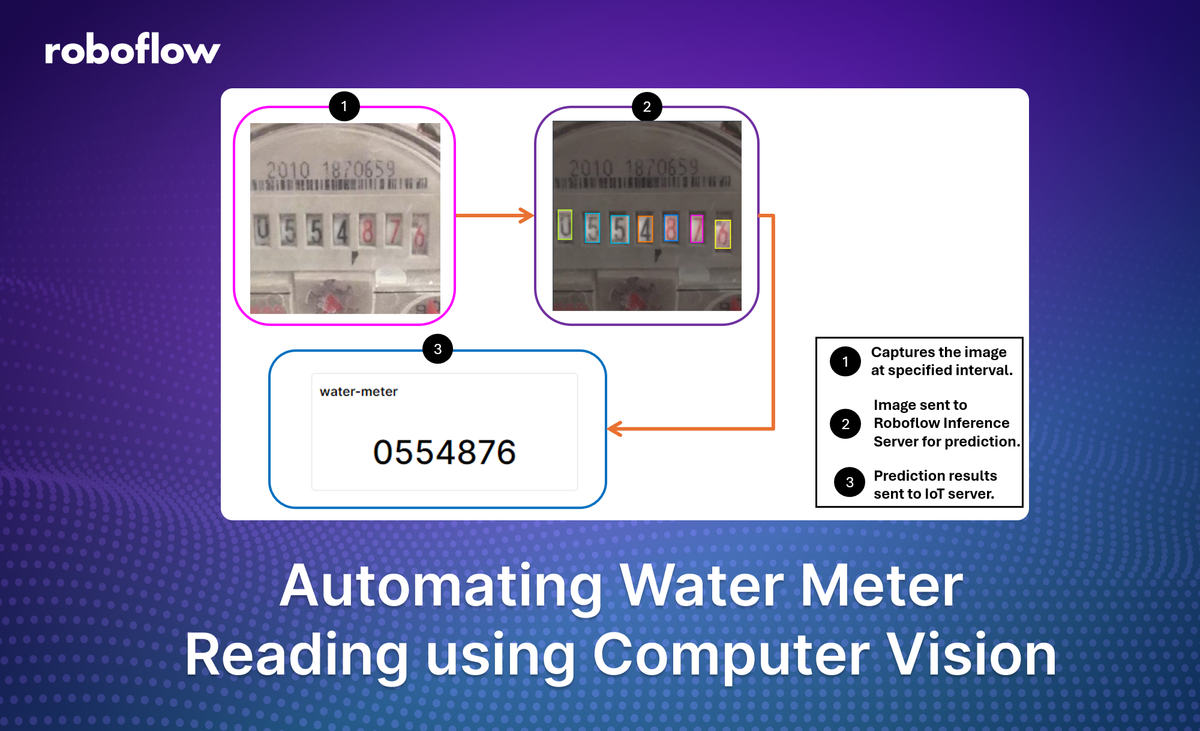

The system operates by using a computer vision model to detect and identify numbers from water meters. It consists of the following components:

- A camera node that captures images of the water meter.

- A computer vision model built with Roboflow that analyzes the captured images, then detects and extracts numbers from the water meter display.

- An MQTT connection that sends the extracted data to the Qubitro IoT platform, where the data is displayed.

The transmitted data can then be used for various purposes, such as monitoring water usage or generating bills.
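The flow described above can be sketched as three stages wired together. This is only an illustrative outline, not the code used later in this post: `read_meter`, `capture`, `detect`, and `publish` are hypothetical names, and the stages are passed in as plain functions so the flow can be tried without a camera, a model, or a broker.

```python
from typing import Callable, Optional


def read_meter(
    capture: Callable[[], Optional[str]],
    detect: Callable[[str], str],
    publish: Callable[[str], None],
) -> Optional[str]:
    """Run one capture -> detect -> publish cycle and return the reading."""
    image_path = capture()
    if image_path is None:
        return None  # nothing was captured, so there is nothing to detect
    reading = detect(image_path)
    if reading:
        publish(reading)  # only send non-empty readings
    return reading


# Stand-in stages (assumptions for illustration only)
sent = []
reading = read_meter(
    capture=lambda: "water_meter.jpg",
    detect=lambda path: "0554876",
    publish=sent.append,
)
print(reading, sent)
```

In the real application, each stand-in is replaced by the webcam capture, the Roboflow inference call, and the MQTT publish shown in Step 2.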

How To Build The Water Meter Reading Application

To build this application, we will:

- Build an object detection model.

- Write an inference script to detect numbers in a water meter image and send the data via MQTT.

- Set up a Qubitro IoT server to receive and visualize the data.

Step 1: Build an object detection model

For this project, we will use the water meter dataset from the FESB workspace on Roboflow Universe. We will then train and deploy the model using Roboflow.

The water meter computer vision project dataset on Roboflow Universe consists of more than 4,000 images of water meters.

The dataset is labeled for digits from 0 to 9, indicated by the respective class labels. The following is an example of the class labels.

To build the computer vision model, I downloaded the dataset and uploaded it to my own workspace.

Once the dataset version is generated, I trained the model using Roboflow auto training. The following image shows the metrics of the trained model. The trained model is available here.

Step 2: Write an inference script to detect numbers in a water meter image and send the data via MQTT

To run inference on an image, we can use the following script:

# import the inference-sdk
from inference_sdk import InferenceHTTPClient

# initialize the client
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="ROBOFLOW_API_KEY"
)

# infer on a local image
result = CLIENT.infer("water_meter.jpg", model_id="water-meter-monitoring/1")
print(result)

For the water_meter.jpg image below:

The code generates the following output:

{'inference_id': 'a88ac8ce-e750-493a-b861-6f74734ec43c', 'time': 0.0358694110000215, 'image': {'width': 416, 'height': 416}, 'predictions': [{'x': 258.0, 'y': 237.0, 'width': 30.0, 'height': 62.0, 'confidence': 0.9480071663856506, 'class': '8', 'class_id': 8, 'detection_id': '8a757580-2f8c-4732-bef4-9fe38b0ec665'}, {'x': 316.5, 'y': 236.0, 'width': 31.0, 'height': 64.0, 'confidence': 0.936906099319458, 'class': '7', 'class_id': 7, 'detection_id': '05b6b394-73ea-4900-baa3-068ed9b44155'}, {'x': 202.0, 'y': 237.0, 'width': 30.0, 'height': 58.0, 'confidence': 0.9148543477058411, 'class': '4', 'class_id': 4, 'detection_id': 'd7240eec-d1c2-4480-a1b4-03355260620c'}, {'x': 145.0, 'y': 236.5, 'width': 32.0, 'height': 59.0, 'confidence': 0.9080735445022583, 'class': '5', 'class_id': 5, 'detection_id': 'c74f9a86-2458-4537-94ea-103658e34d17'}, {'x': 87.0, 'y': 234.0, 'width': 30.0, 'height': 62.0, 'confidence': 0.8975856900215149, 'class': '5', 'class_id': 5, 'detection_id': '72c74e39-4029-428b-af2b-9c6772a0376c'}, {'x': 27.5, 'y': 229.0, 'width': 33.0, 'height': 54.0, 'confidence': 0.8934838771820068, 'class': '0', 'class_id': 0, 'detection_id': '33a9d3c9-14d8-4a09-b24a-47e8c85c4912'}, {'x': 371.5, 'y': 245.5, 'width': 29.0, 'height': 59.0, 'confidence': 0.883609414100647, 'class': '6', 'class_id': 6, 'detection_id': 'ae89df94-dad2-4738-95da-c2c05d074c4b'}]}

In the output, we can see that the detected digits, i.e. 8745506, are not in the order in which they appear on the water meter, i.e. 0554876. We can solve this problem by post-processing the predictions using their coordinates.

After detection, each prediction has a bounding box described by (x, y, width, height), where x and y give the center of the box. We can sort the predictions by their x coordinate, which represents the horizontal position of each digit in the image. Sorting by x gives the digits in left-to-right order, matching their sequence on the meter. This can be done with the following code.

# Sort the predictions by the x-coordinate
sorted_predictions = sorted(predictions, key=lambda p: p['x'])

Here is the complete inference code that sorts the predictions by their x values, allowing us to read the numbers from left to right:

from inference_sdk import InferenceHTTPClient

# Initialize the inference client
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="ROBOFLOW_API_KEY"  # Add your API key here
)

# Perform inference on the captured image
def perform_inference(image_path):
    if not image_path:
        print("No image to process.")
        return

    # Perform inference
    result = CLIENT.infer(image_path, model_id="water-meter-monitoring/1")

    # Process the inference result
    predictions = result['predictions']

    # Sort the predictions by the x-coordinate
    sorted_predictions = sorted(predictions, key=lambda p: p['x'])

    # Extract the class labels and join them to form the digit sequence
    digit_sequence = ''.join([pred['class'] for pred in sorted_predictions])

    # Print the digit sequence
    print("Detected digit sequence:", digit_sequence)

# Main function to perform inference on a test image
def main():
    # specify test image source
    image_path = "water_meter.jpg"

    # Perform inference on the test image
    perform_inference(image_path)

# Run the main function
if __name__ == "__main__":
    main()
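To check the ordering logic without calling the API, the sketch below applies the same sort to the x, class, and confidence values from the sample output shown earlier (confidences rounded). The `digits_left_to_right` helper and its `min_confidence` threshold are assumptions added for illustration. They are not part of the original script, and the default of 0.5 is an arbitrary starting point rather than a recommended value.

```python
def digits_left_to_right(predictions, min_confidence=0.5):
    """Join digit classes in left-to-right order, skipping weak detections."""
    # Drop detections below the (hypothetical) confidence threshold
    kept = [p for p in predictions if p["confidence"] >= min_confidence]
    # Order the remaining boxes by the x coordinate of their center
    kept.sort(key=lambda p: p["x"])
    return "".join(p["class"] for p in kept)


# Values copied from the sample output, in the order the model returned them
sample = [
    {"x": 258.0, "class": "8", "confidence": 0.948},
    {"x": 316.5, "class": "7", "confidence": 0.937},
    {"x": 202.0, "class": "4", "confidence": 0.915},
    {"x": 145.0, "class": "5", "confidence": 0.908},
    {"x": 87.0, "class": "5", "confidence": 0.898},
    {"x": 27.5, "class": "0", "confidence": 0.893},
    {"x": 371.5, "class": "6", "confidence": 0.884},
]

print(digits_left_to_right(sample))  # 0554876
```

A threshold that is too strict silently drops digits, so it may be worth also checking that the result has the number of digits your meter displays.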

To create an automated system, we need to capture an image automatically at specified intervals (every day at 9:00 AM in our example) and run predictions on the captured image. The following code captures an image from the webcam every day at 9:00 AM, runs inference on it, and publishes the result via MQTT. Below is the final code for our application.

import cv2
import schedule
import time
from datetime import datetime
from inference_sdk import InferenceHTTPClient
import paho.mqtt.client as mqtt
import json
import ssl

# Qubitro MQTT client setup
broker_host = "broker.qubitro.com"
broker_port = 8883
device_id = ""  # Add your device ID here
device_token = ""  # Add your device token here

# Callback functions for MQTT
def on_connect(client, userdata, flags, rc):
    if rc == 0:
        print("Connected to Qubitro!")
        client.on_subscribe = on_subscribe
        client.on_message = on_message
    else:
        print("Failed to connect, visit: https://docs.qubitro.com/platform/mqtt/examples return code:", rc)

def on_subscribe(mqttc, obj, mid, granted_qos):
    print("Subscribed, " + "qos: " + str(granted_qos))

def on_message(client, userdata, message):
    print("Received message =", str(message.payload.decode("utf-8")))

def on_publish(client, obj, publish):
    print("Published: " + str(publish))

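# Initialize the MQTT client
# Note: the callbacks above use the paho-mqtt 1.x signatures. With paho-mqtt 2.x,
# pass mqtt.CallbackAPIVersion.VERSION1 as the first argument to mqtt.Client().
mqtt_client = mqtt.Client(client_id=device_id)
context = ssl.SSLContext(ssl.PROTOCOL_TLSv1_2)
mqtt_client.tls_set_context(context)
mqtt_client.username_pw_set(username=device_id, password=device_token)
# Register the callbacks before connecting
mqtt_client.on_connect = on_connect
mqtt_client.on_publish = on_publish
mqtt_client.connect(broker_host, broker_port, 60)
mqtt_client.loop_start()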

# Inference client setup
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="ROBOFLOW_API_KEY"  # Add your API key here
)

# Capture an image from the webcam
def capture_image_from_webcam():
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        print("Error: Could not open webcam.")
        return None
    ret, frame = cap.read()
    if ret:
        image_path = f"webcam_image_{datetime.now().strftime('%Y%m%d_%H%M%S')}.jpg"
        cv2.imwrite(image_path, frame)
        print(f"Image saved to {image_path}")
    else:
        print("Error: Could not read frame.")
        image_path = None
    cap.release()
    return image_path

# Perform inference on the captured image
def perform_inference(image_path):
    if not image_path:
        print("No image to process.")
        return None
    result = CLIENT.infer(image_path, model_id="water-meter-monitoring/1")
    predictions = result['predictions']
    # Sort by x so the digits read left to right
    sorted_predictions = sorted(predictions, key=lambda p: p['x'])
    digit_sequence = ''.join([pred['class'] for pred in sorted_predictions])
    print("Detected digit sequence:", digit_sequence)
    return digit_sequence

# Task to capture image, perform inference, and send data to Qubitro
def daily_task():
    print("Running daily task...")
    image_path = capture_image_from_webcam()
    digit_sequence = perform_inference(image_path)
    if digit_sequence:
        payload = {
            "reading-digits": digit_sequence,
        }
        mqtt_client.publish(device_id, payload=json.dumps(payload))

# Schedule the daily task at 9:00 AM
schedule.every().day.at("09:00").do(daily_task)

# Keep the script running to check for scheduled tasks
while True:
    schedule.run_pending()
    time.sleep(60)

The code captures images at specified intervals using the schedule library and performs predictions on these images. The capture_image_from_webcam function captures the image, and perform_inference processes it for digit detection. The script runs continuously, checking for scheduled tasks to execute.
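For intuition, running a task "every day at 09:00" amounts to finding the next 09:00 after the current time. The sketch below shows that arithmetic using only the standard library. It is not how the schedule library is implemented, and `seconds_until_next_run` is a hypothetical helper name used only for illustration.

```python
from datetime import datetime, timedelta


def seconds_until_next_run(now: datetime, hour: int = 9, minute: int = 0) -> float:
    """Return the number of seconds from `now` until the next hour:minute."""
    target = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if target <= now:
        # Today's slot has already passed, so use tomorrow's
        target += timedelta(days=1)
    return (target - now).total_seconds()


print(seconds_until_next_run(datetime(2024, 7, 30, 8, 0)))   # 3600.0
print(seconds_until_next_run(datetime(2024, 7, 30, 9, 30)))  # 84600.0
```

Because the main loop sleeps for 60 seconds between checks, the task may start up to about a minute after the scheduled time, which is unlikely to matter for a daily meter reading.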

In the daily_task() function, we also send the prediction results via MQTT to the Qubitro IoT and data management platform. For this, the device ID and token need to be specified in the following lines.

device_id = "" # Add your device ID here

device_token = "" # Add your device token here

We will learn how to obtain these values in the next step.
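One option for handling these two values is to keep them out of the script and read them from environment variables. The sketch below assumes that approach. The variable names `QUBITRO_DEVICE_ID` and `QUBITRO_DEVICE_TOKEN` and the `load_qubitro_credentials` helper are hypothetical choices for this example, not names defined by Qubitro.

```python
import os


def load_qubitro_credentials(env=os.environ):
    """Read the device ID and token from a mapping such as os.environ."""
    device_id = env.get("QUBITRO_DEVICE_ID", "")
    device_token = env.get("QUBITRO_DEVICE_TOKEN", "")
    if not device_id or not device_token:
        # Fail early instead of attempting to connect with empty credentials
        raise RuntimeError("Set QUBITRO_DEVICE_ID and QUBITRO_DEVICE_TOKEN first.")
    return device_id, device_token


# Example with an explicit mapping instead of the real environment
device_id, device_token = load_qubitro_credentials(
    {"QUBITRO_DEVICE_ID": "my-device-id", "QUBITRO_DEVICE_TOKEN": "my-token"}
)
print(device_id)
```

Calling `load_qubitro_credentials()` with no argument reads from the real environment, so the token never needs to be committed alongside the code.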

Step 3: Set up a Qubitro IoT server to receive and visualize data

We will now set up Qubitro to receive data and visualize it. Qubitro is an Internet of Things (IoT) platform that enables users to connect, manage, and analyze IoT devices and data. It provides tools for device management, data visualization, and real-time monitoring, making it easier to deploy and scale IoT solutions. The platform supports various communication protocols, including MQTT, for secure and efficient data transfer.

First, sign up and log in to your Qubitro account. Next, click on “New Project” to create a new project. Specify the name and description of your project.

Once the project is created, add a “New Source” to the project. In our example, this is an MQTT-based endpoint.

Next, specify the details for your device, i.e. name and the type of device.

Once the device is created, you can find the device ID and token in the “Settings” tab of your device (as shown in the following figure). Use this information in the code from Step 2.

Next, create a dashboard for your device where the data will be visible. Go to the dashboard tab of your device and click the link “Go to dashboard”.

Next, specify the details (name and tags) for your dashboard:

Next, we need to add a widget to the dashboard. This widget will show the data sent by the device running the code from Step 2. Click on “New Widget”.

Select the widget type as “Stat” and click “Add point”.

In the "Add point" dialog box, select your project, device, and value variable. If the value dropdown is empty, run the sample code provided by Qubitro, using "reading-digits" as the key in the payload. This will populate the value in the “connect data point” dialog. You can find the sample "code snippet" under the "Overview" tab of your device.
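For reference, the payload that populates this value is a small JSON object with a single "reading-digits" key, matching what daily_task() publishes in Step 2. The sketch below builds that payload. The `build_payload` helper and its digits-only check are assumptions added for illustration and are not part of the original script.

```python
import json


def build_payload(digit_sequence: str) -> str:
    """Return the JSON payload for a meter reading made up of digits only."""
    if not digit_sequence.isdigit():
        # Covers the empty string as well as any non-digit characters
        raise ValueError(f"Not a valid meter reading: {digit_sequence!r}")
    return json.dumps({"reading-digits": digit_sequence})


print(build_payload("0554876"))  # {"reading-digits": "0554876"}
```

The reading is kept as a string so that leading zeros on the meter display are preserved.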

Finally, when you run the code from Step 2, you will see the latest value from the inference script in the Qubitro dashboard, as shown in the following figure.

How to Automate Water Meter Reading Using Computer Vision

In this project, we automated water meter reading using computer vision. We trained a computer vision model with Roboflow for digit detection. The detected readings were then transmitted over MQTT to the Qubitro IoT platform for remote monitoring and analysis. This approach shows how Roboflow's computer vision capabilities can be integrated with IoT solutions, and how computer vision can be applied to a practical, real-world problem. Get started with Roboflow today.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Jul 30, 2024). Automating Water Meter Reading using Computer Vision. Roboflow Blog: https://blog.roboflow.com/water-meter-reading/