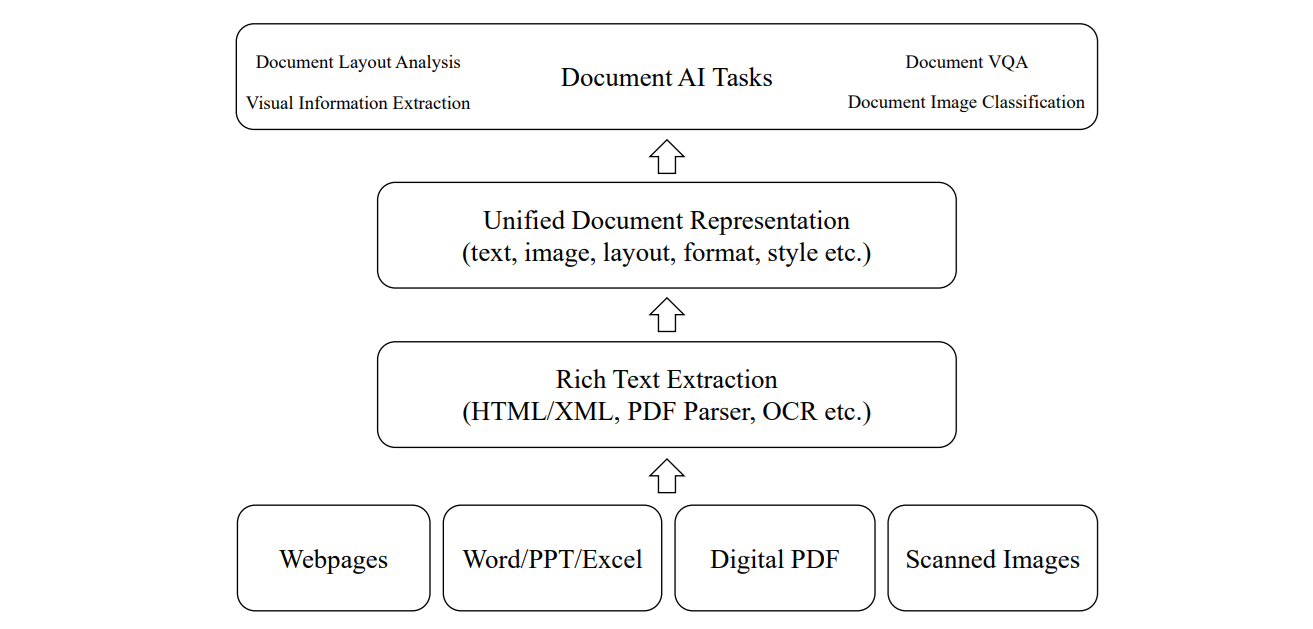

Document AI, also called Document Intelligence, refers to the use of artificial intelligence technologies, such as machine learning, natural language processing (NLP), and computer vision, to automate the extraction, analysis, and understanding of information from documents.

For example, a company might use Document AI to process invoices by automatically reading the vendor’s name, amount, and due date, then feeding that data into an accounting system. It is widely used in industries like finance, healthcare, legal, and logistics to save time and minimize errors.

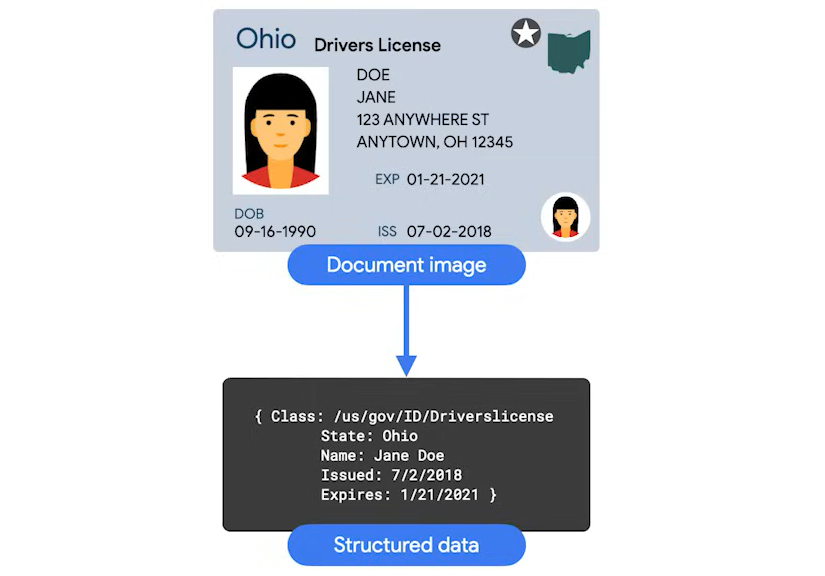

The primary purpose of Document AI is to automate the extraction and organization of information from unstructured or semi-structured documents. It transforms documents such as scanned images, PDFs, or forms into structured, machine-readable data. This enables organizations to reduce manual data entry, improve accuracy, and quickly integrate the information into business processes for analytics, compliance, and decision-making.

A Document AI system can perform the following operations:

- Extract Data: Identify and extract specific information like names, dates, numbers, or clauses from documents (e.g., invoices, contracts, forms).

- Classify Documents: Categorize documents based on their type or content (e.g., sorting emails, legal agreements, or receipts).

- Understand Context: Interpret the meaning or intent behind the text, often using NLP to handle complex language or handwritten notes.

- Automate Processes: Integrate with business systems to input extracted data into databases, trigger actions, or flag anomalies.
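
To make the last operation more concrete, here is a minimal sketch of flagging anomalies in extracted data before it reaches a business system. The field names, the sample values, and the `find_anomalies` helper are assumptions made for illustration; they are not part of any specific product.

```python
from datetime import date
from decimal import Decimal


def find_anomalies(invoice):
    """Return a list of human-readable problems found in an extracted invoice."""
    issues = []
    # A due date that precedes the issue date usually signals a misread field.
    if invoice["due_date"] < invoice["issue_date"]:
        issues.append("due date is earlier than issue date")
    # The line total should equal quantity times unit price.
    expected = invoice["quantity"] * invoice["unit_price"]
    if expected != invoice["amount"]:
        issues.append(
            f"amount {invoice['amount']} does not equal quantity x unit price ({expected})"
        )
    return issues


# Invented sample record (hypothetical values, not taken from a real document).
invoice = {
    "issue_date": date(2025, 3, 1),
    "due_date": date(2025, 2, 1),
    "quantity": 3,
    "unit_price": Decimal("19.99"),
    "amount": Decimal("59.97"),
}
print(find_anomalies(invoice))
```

Records that return a non-empty list could be routed to a person for review instead of being written straight to the database.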

Today, multimodal models powered by natural language processing are commonly used for document processing. Multimodal models accept both text and image inputs and return responses based on the inputs. For example, you can ask multimodal models to extract data, classify a document, run character recognition, and more.

Role of Computer Vision in Document AI

Computer vision plays a crucial role in Document AI by enabling machines to "see" and understand visual elements in documents.

Computer vision supports tasks such as optical character recognition (OCR), layout analysis, and entity extraction, which ultimately transform unstructured data into structured, usable information. Here are several key ways computer vision is integrated into Document AI:

Optical Character Recognition (OCR)

Computer vision helps identify areas in an image that contain text. Once text areas are detected, OCR converts the visual representation of text into machine-readable characters.

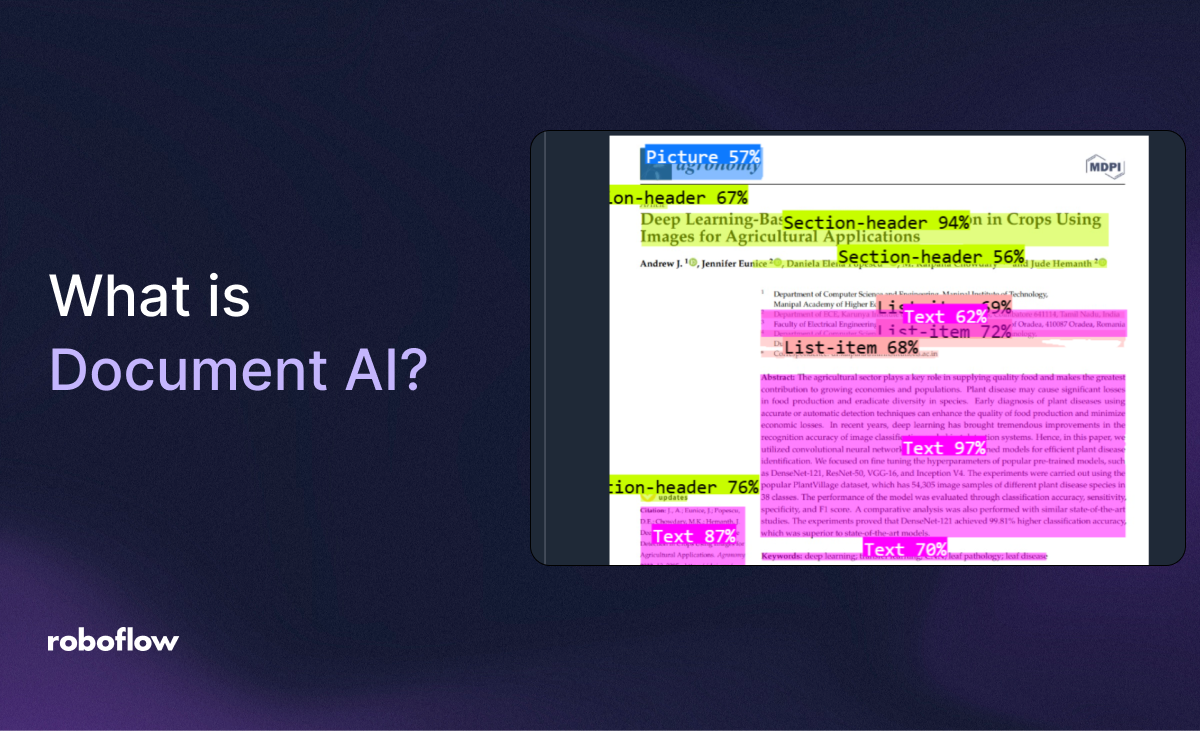

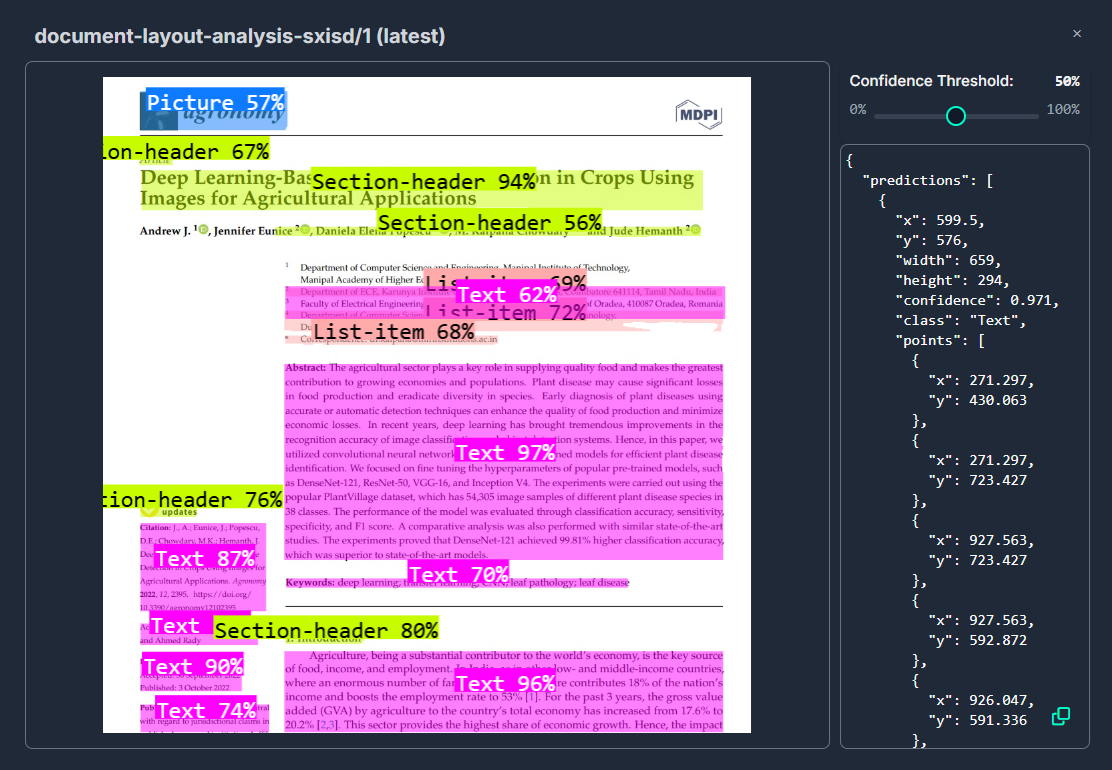

Layout Analysis

Computer vision helps to detect and segment various components of a document, such as headers, footers, paragraphs, tables, and images. It helps in analyzing the spatial arrangement of elements to reconstruct the document’s layout, which is crucial for preserving context and formatting.
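
As a rough illustration of how spatial arrangement can be used, the sketch below sorts detected layout regions into reading order. The `Region` class, its fields, and the `row_tolerance` value are assumptions made for this example, not the output format of any particular model.

```python
from dataclasses import dataclass


@dataclass
class Region:
    label: str  # e.g. "header", "paragraph", "table"
    x: float    # left edge in pixels
    y: float    # top edge in pixels


def reading_order(regions, row_tolerance=10.0):
    """Sort regions top-to-bottom, then left-to-right within a row.

    A region joins the current row when its top edge is within
    `row_tolerance` pixels of the first region in that row.
    """
    rows = []
    for region in sorted(regions, key=lambda r: r.y):
        if rows and abs(region.y - rows[-1][0].y) <= row_tolerance:
            rows[-1].append(region)
        else:
            rows.append([region])
    return [r for row in rows for r in sorted(row, key=lambda r: r.x)]


# Hypothetical detections, deliberately out of order.
regions = [
    Region("footer", 40, 900),
    Region("right column", 320, 204),
    Region("header", 40, 20),
    Region("left column", 40, 200),
]
ordered = [r.label for r in reading_order(regions)]
print(ordered)
```

Real layouts (multi-column pages, rotated scans, nested tables) need more than this simple row grouping, but the idea of using coordinates to recover structure is the same.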

Document Classification and Organization

Computer vision helps recognize visual patterns and structures such as logos, signatures, or specific formatting. It can also help classify documents automatically (e.g., invoices, contracts, or forms) and flag documents with unusual layouts or potential errors that may require human review.

Enhanced Data Extraction

Computer vision can help improve the clarity of scanned images or photos, which is essential for accurate text extraction. Beyond text, computer vision identifies non-textual elements like graphs, diagrams, or stamps, which can then be processed separately or linked with textual data.

Computer vision transforms raw document images into structured data by using pattern recognition and deep learning techniques. This integration not only boosts the accuracy of text extraction through OCR but also enables a richer understanding of document layouts and content and leads to more robust and scalable Document AI solutions.

Document AI Use Cases and Demos

Roboflow Workflows provides a low-code, visual interface for building Document AI projects, along with several blocks that can be combined into a Document AI pipeline.

In the sections below, we will build four applications that process documents using AI, covering structured data extraction, summarization, classification, and visual question answering (VQA).

Document AI: Structured Data Extraction

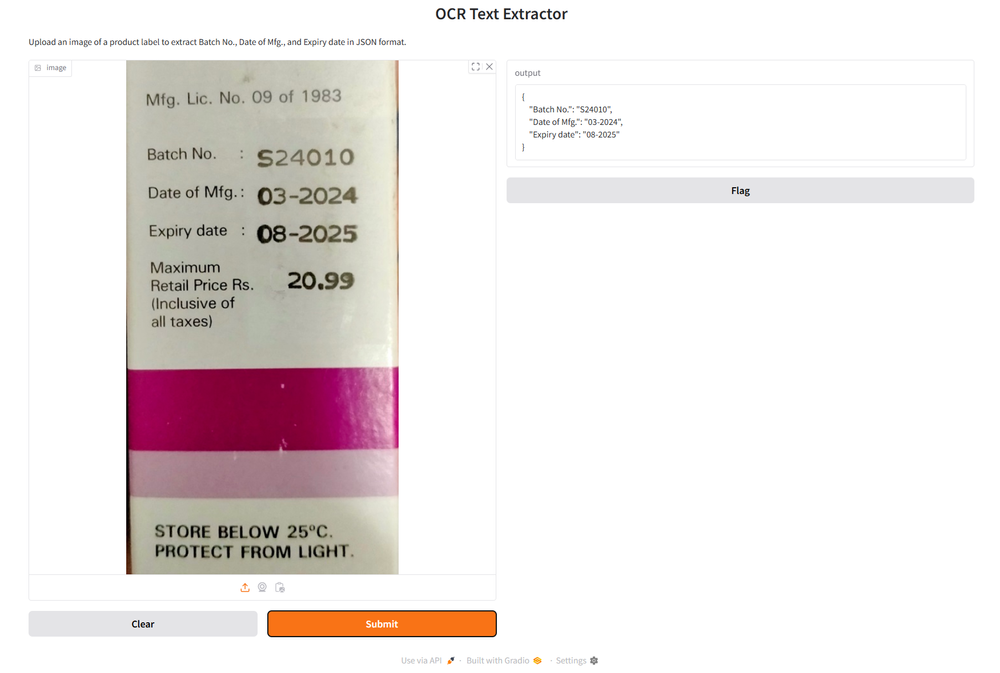

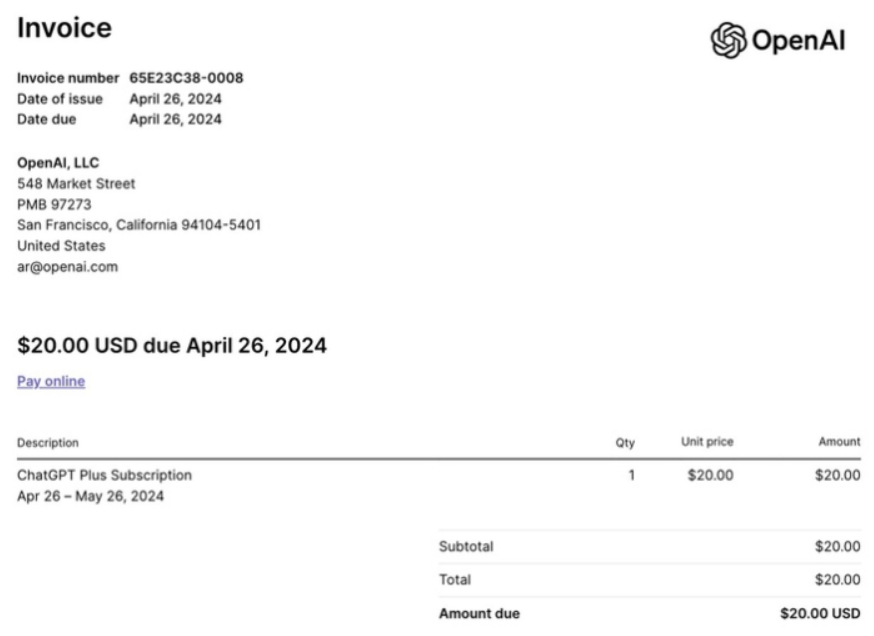

Multimodal models such as OpenAI's GPT models can identify key-value pairs or specific entities (like names, dates, or amounts) and output them in a structured format (e.g., JSON or CSV). This structured data can then be easily integrated into databases, used for analytics, or fed into other business processes.

For this example, we will use the invoice image given below.

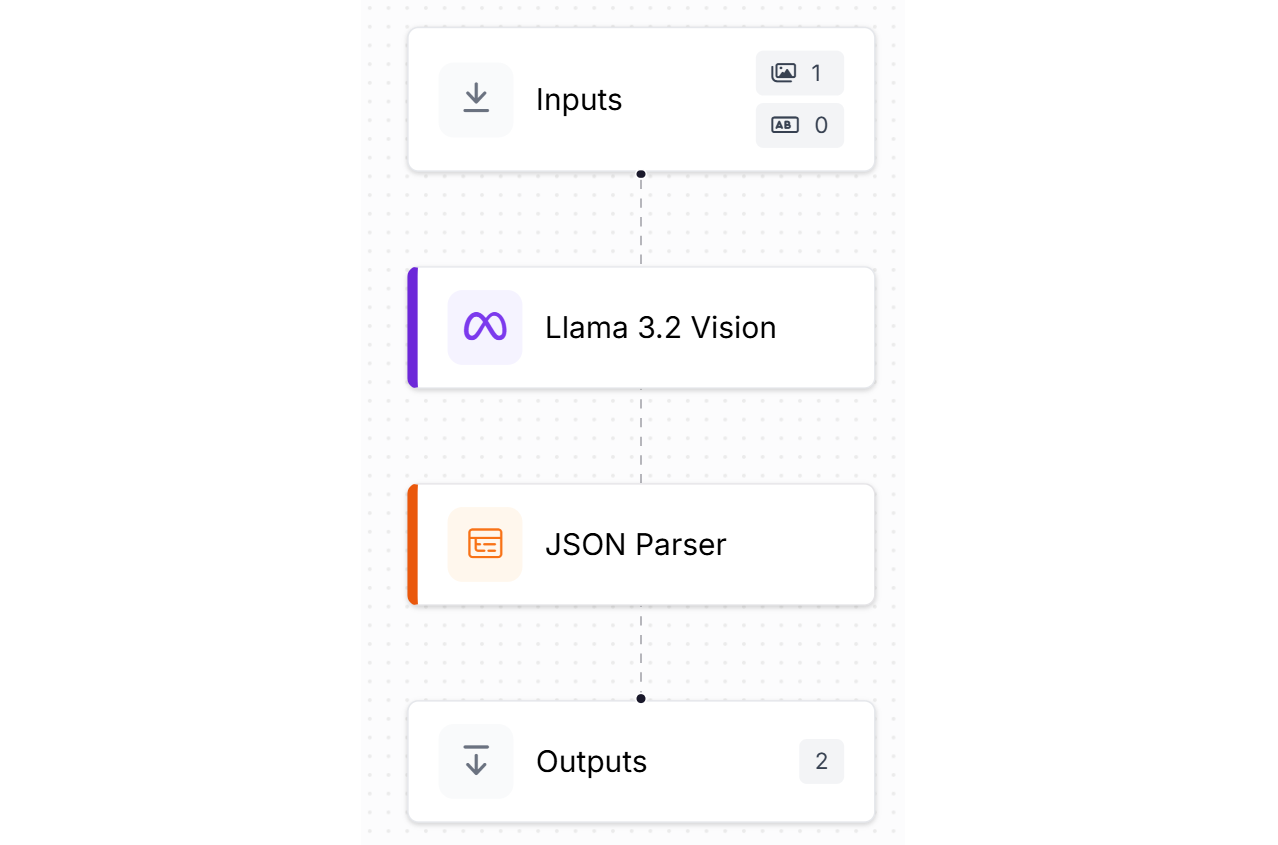

We will create the following Workflow to extract the structured data. In the Workflow, we will use the Llama 3.2 Vision block to read the text in the invoice and the JSON Parser block to generate structured data that can be used for different purposes.

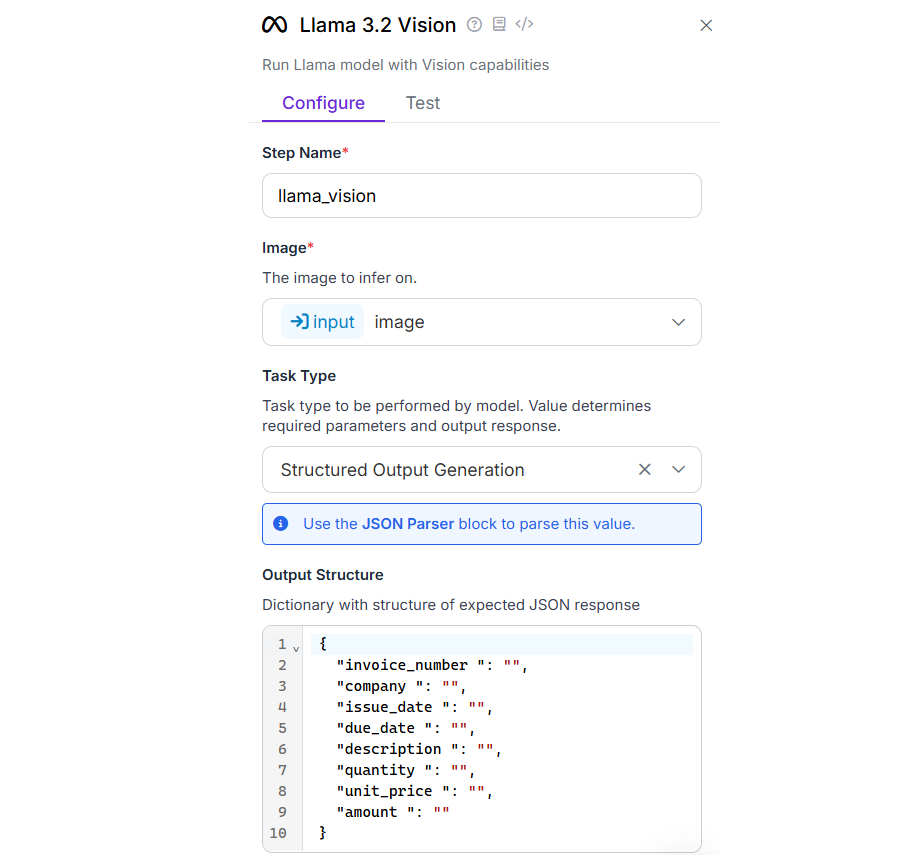

Configure the Llama 3.2 Vision block as follows: select “Structured Output Generation” as the Task Type and set the Output Structure property to the following:

    {
      "invoice_number": "",
      "company": "",
      "issue_date": "",
      "due_date": "",
      "description": "",
      "quantity": "",
      "unit_price": "",
      "amount": ""
    }

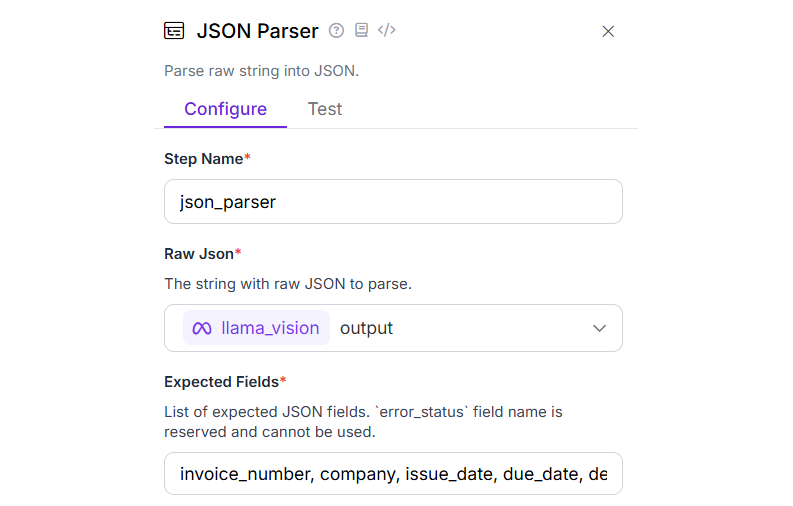

Within the JSON Parser block, list every field you want to extract from the output of the Llama 3.2 Vision block by specifying them in the "Expected Fields" property.

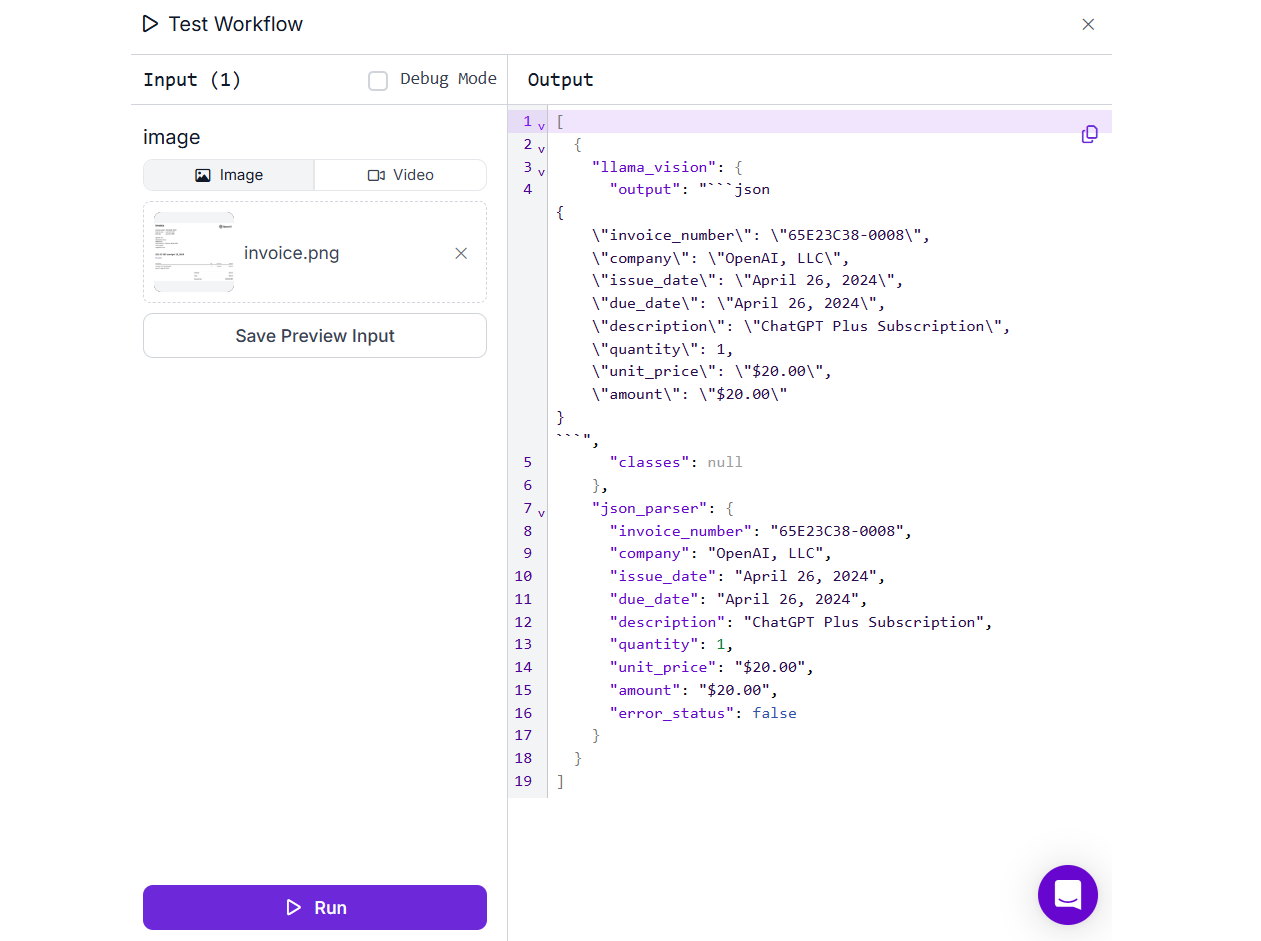
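
To show the idea behind this parsing step, here is a minimal Python sketch that takes a model's text answer and keeps only the expected fields. It illustrates the concept and is not Roboflow's implementation; the `parse_invoice_fields` helper and the sample answer are invented for this example.

```python
import json

EXPECTED_FIELDS = [
    "invoice_number", "company", "issue_date", "due_date",
    "description", "quantity", "unit_price", "amount",
]


def parse_invoice_fields(raw_text, expected_fields=EXPECTED_FIELDS):
    """Parse a model answer and keep only the expected fields.

    Returns (fields, error). Missing fields are set to None, and `error`
    is True when no valid JSON object can be found in the text.
    """
    empty = {name: None for name in expected_fields}
    # Models sometimes add prose around the JSON, so parse only the part
    # between the first "{" and the last "}".
    start, end = raw_text.find("{"), raw_text.rfind("}")
    if start == -1 or end < start:
        return empty, True
    try:
        data = json.loads(raw_text[start:end + 1])
    except json.JSONDecodeError:
        return empty, True
    return {name: data.get(name) for name in expected_fields}, False


# Invented model answer (hypothetical values, not from the invoice above).
raw = (
    'Here is the result: {"invoice_number": "INV-001", '
    '"company": "Example Co", "amount": "150.00", "notes": "ignored"}'
)
fields, error = parse_invoice_fields(raw)
print(fields["invoice_number"], fields["due_date"], error)
```

Returning an explicit error flag, rather than raising, lets a later step decide whether to retry the model or send the document for manual review.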

Now run the Workflow and upload the input image. The output should contain the invoice fields defined above as structured data.

Document Processing AI: Summarization

Document AI can automatically generate concise summaries of lengthy texts. By extracting the main points, it helps users quickly understand the essential content of a document without having to read it in full. This is particularly useful when dealing with large volumes of documents or when a quick review is needed. For this example, we will create a Roboflow Workflow that uses the OpenAI block with the gpt-4o model.

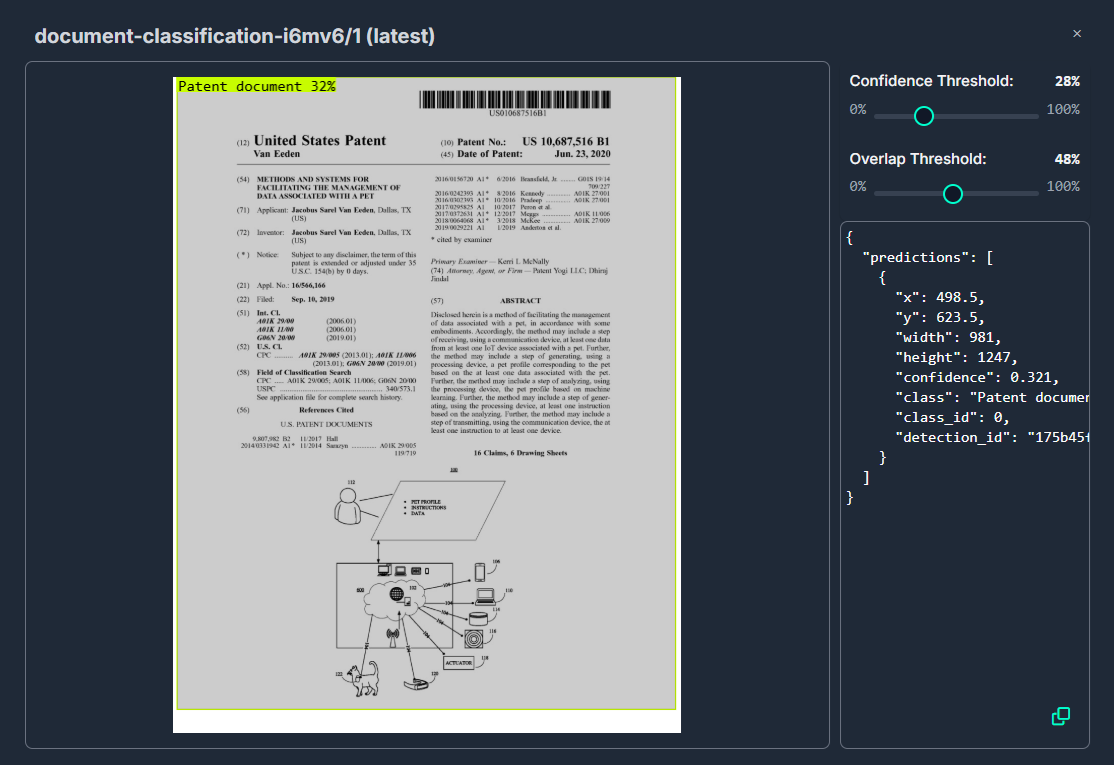

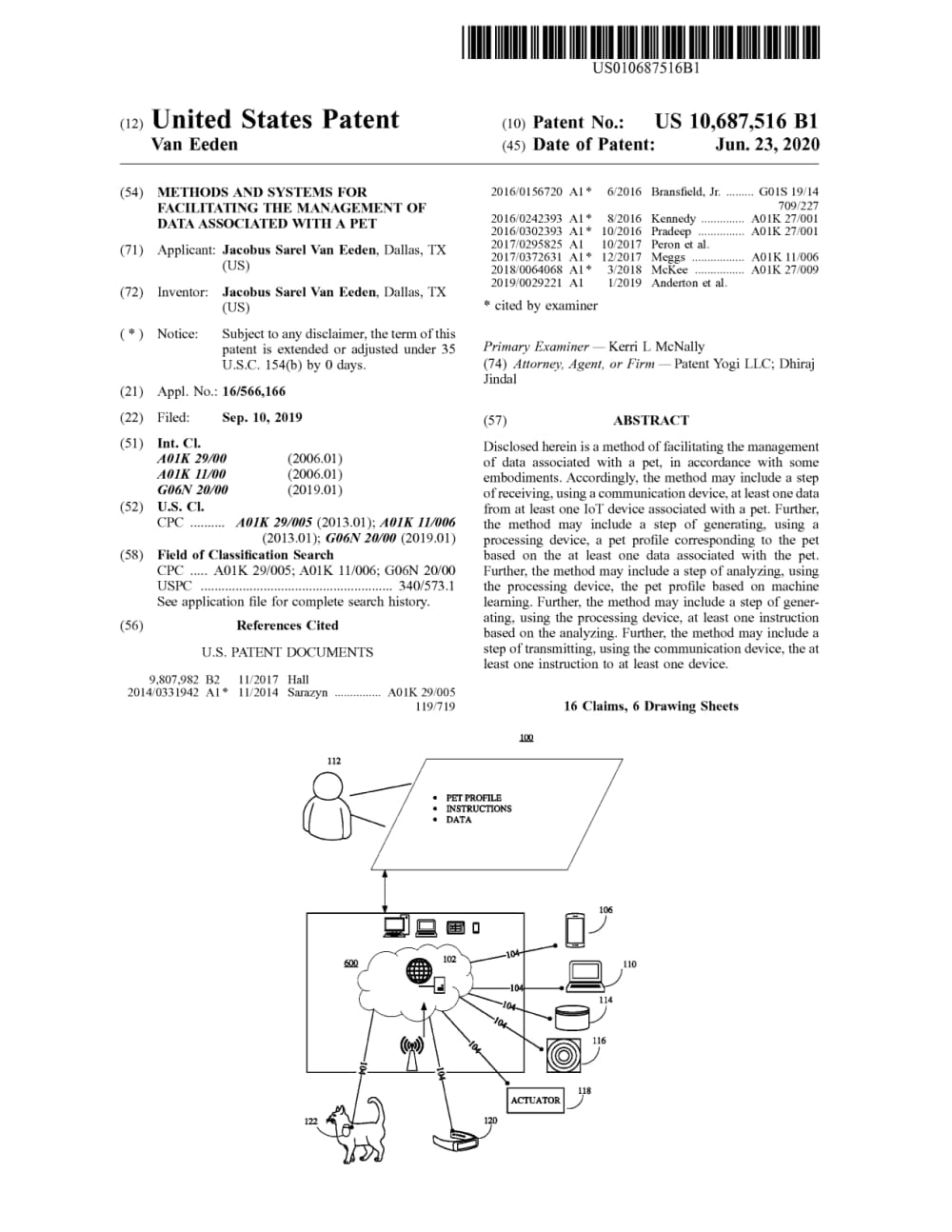

For this example, we will use the following patent document and generate its summary.

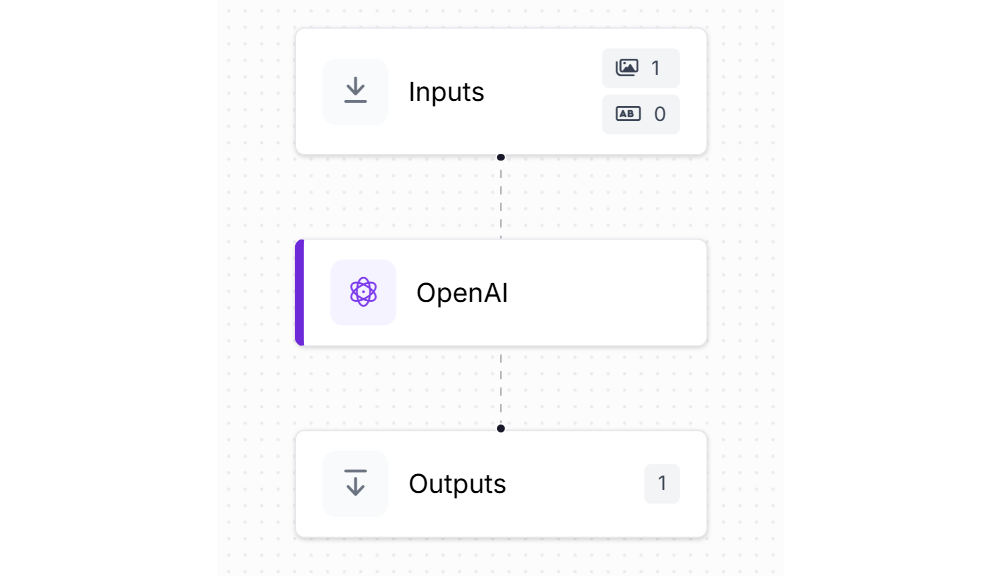

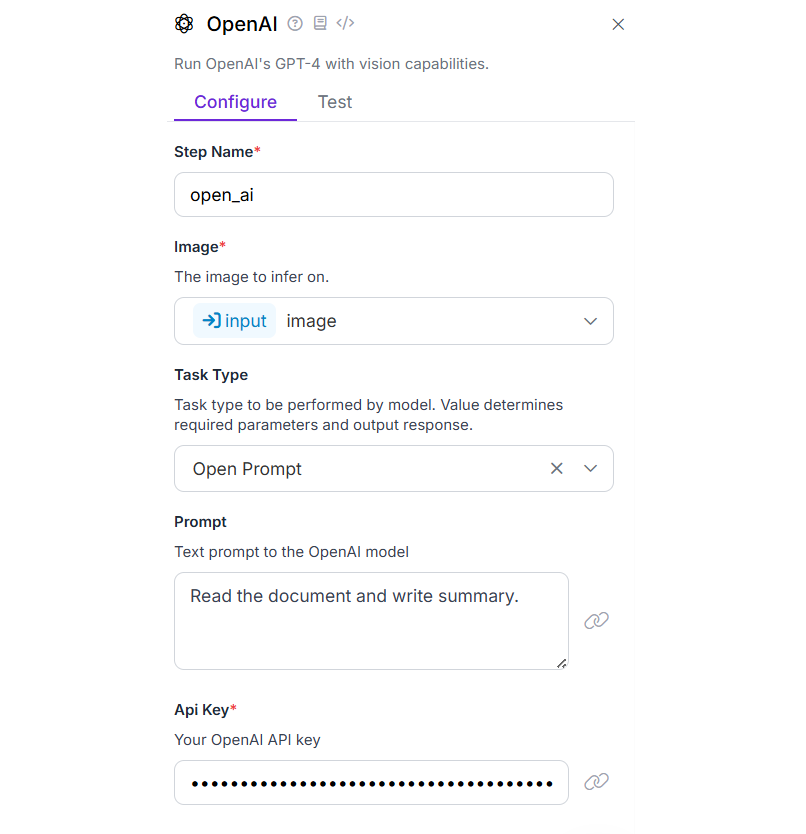

Create a new Roboflow Workflow and add an OpenAI block to it.

In the OpenAI block configuration, choose “Open Prompt” as the Task Type and specify the prompt as follows:

“Read the document and write summary.”

Now run the Workflow and upload the patent document image. You should see output similar to the following.

    [
      {
        "open_ai": {
          "output": "The document is a United States patent (US 10,687,516 B1) for a method and system designed to manage data associated with a pet. Filed by Jacobus Sarel Van Eeden, it involves using a communication device to receive data linked to a pet. The system includes generating a pet profile, processing instructions and data, and analyzing them. Machine learning is utilized for improved analysis. The system can generate and transmit instructions to devices or actuators based on this analysis, facilitating better pet management.",
          "classes": null
        }
      }
    ]
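
If you consume this result in code, the summary sits under the block's output name in the first list entry. The sketch below assumes the result has exactly the shape shown above; the `get_block_output` helper is hypothetical, and the sample text is a shortened copy of the summary.

```python
def get_block_output(workflow_result, output_name):
    """Return the text stored under `output_name` in the first result entry."""
    if not workflow_result:
        raise ValueError("workflow returned no results")
    block = workflow_result[0].get(output_name)
    if block is None:
        raise KeyError(f"no output named {output_name!r} in the result")
    return block["output"]


# Shortened copy of the result shown above.
result = [
    {
        "open_ai": {
            "output": "The document is a United States patent (US 10,687,516 B1) "
                      "for a method and system designed to manage data associated with a pet.",
            "classes": None,
        }
    }
]
summary = get_block_output(result, "open_ai")
print(summary)
```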

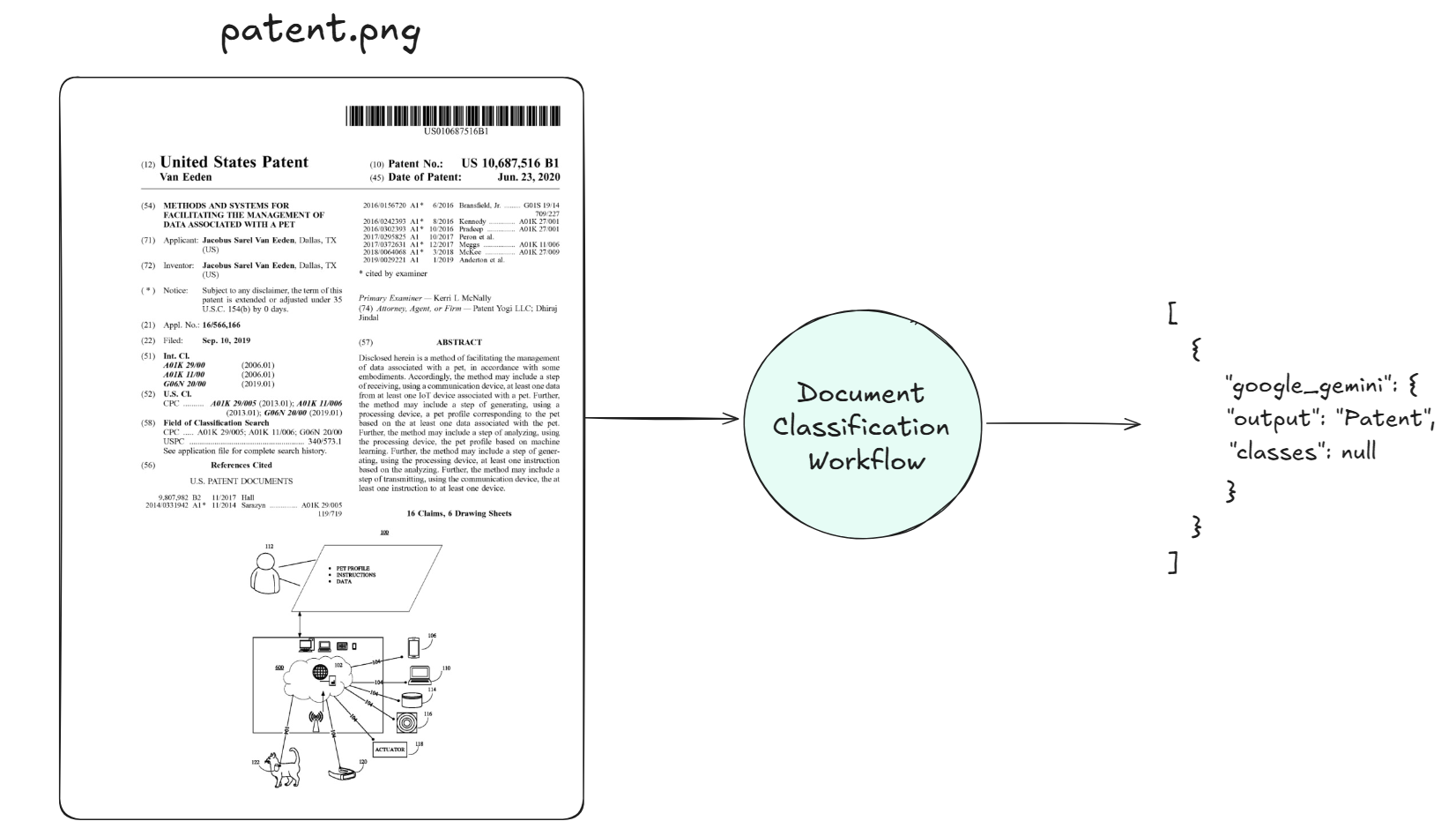

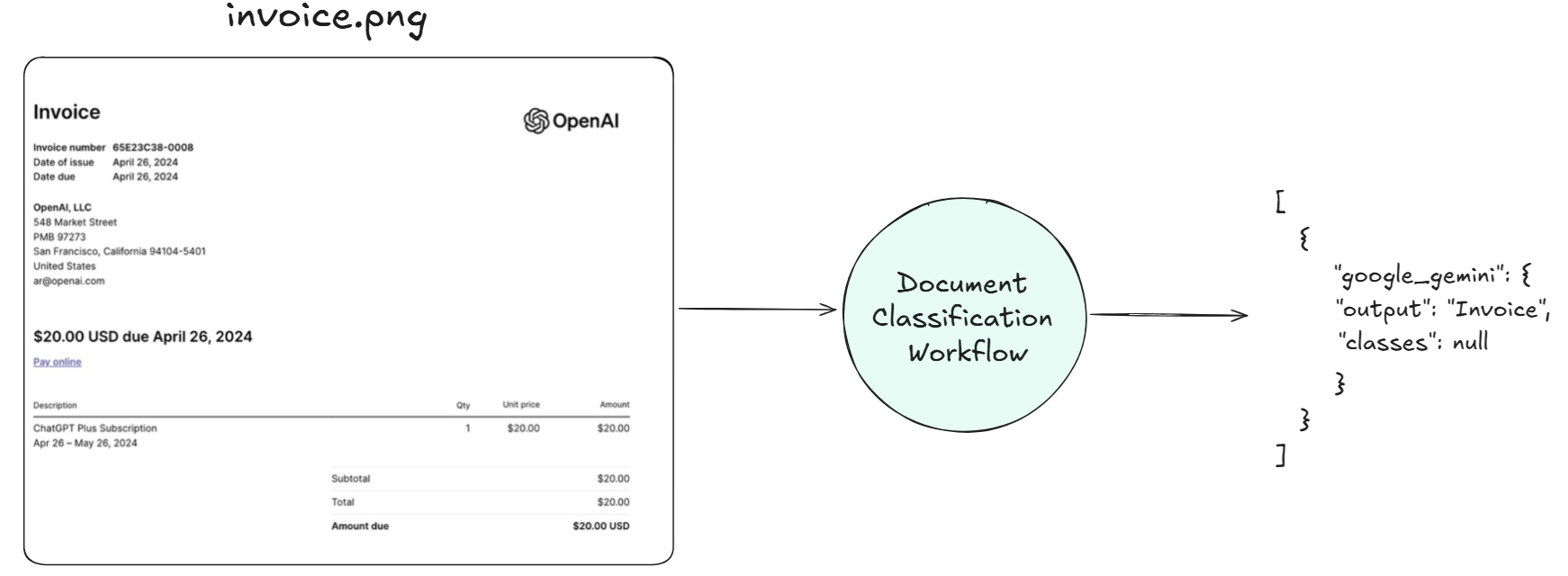

Document AI: Classification

Document classification is another important task performed by Document AI. It is the process of identifying the type of a document.

By analyzing content, layout, and context, Document AI can automatically classify documents into predefined categories (such as invoices, contracts, reports, etc.). This aids in organizing and indexing documents for more efficient search, retrieval and management.

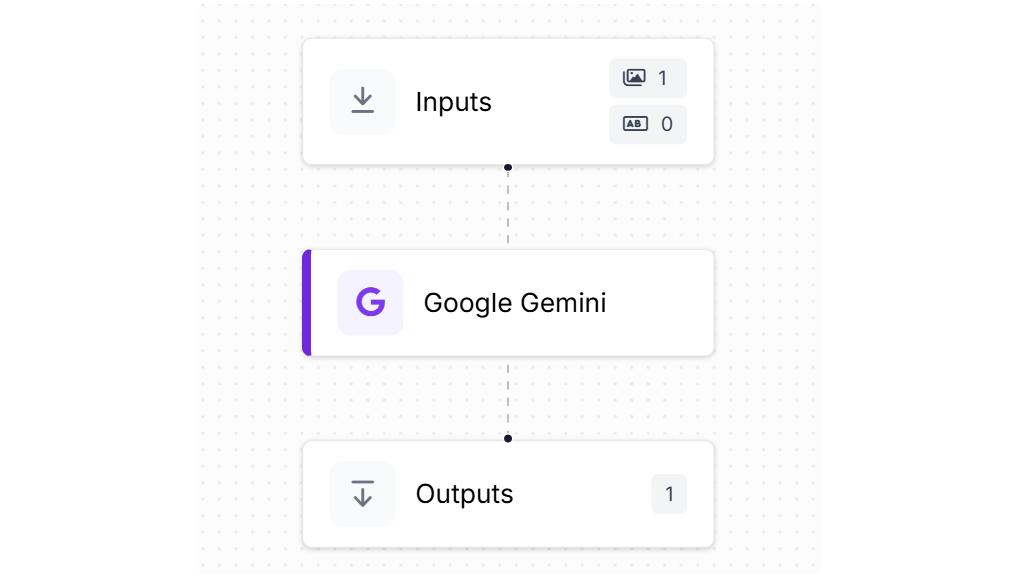

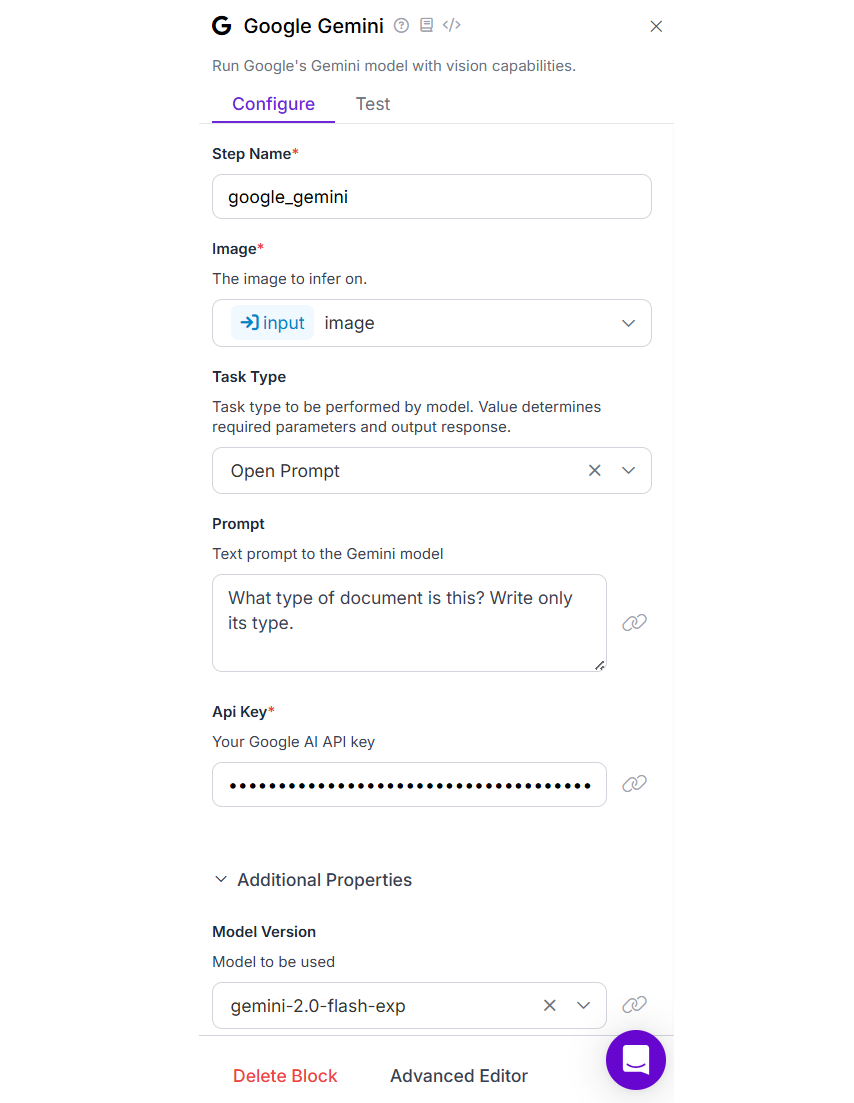

This is valuable for enterprise content management and regulatory compliance. For this example, we will create a Roboflow Workflow with the Google Gemini block and use the Gemini 2.0 Flash model.

Create a new Roboflow Workflow and add the Google Gemini block to it.

Configure the Google Gemini block by choosing “Open Prompt” as the Task Type and specifying the following text prompt in the “Prompt” property.

What type of document is this? Write only its type.

Choose “gemini-2.0-flash-exp” for the “Model Version” property under “Additional Properties”.

Now run the Workflow. You can try different document images to see how the Workflow classifies them. Testing it with the patent and invoice images above, you should see results similar to the following.

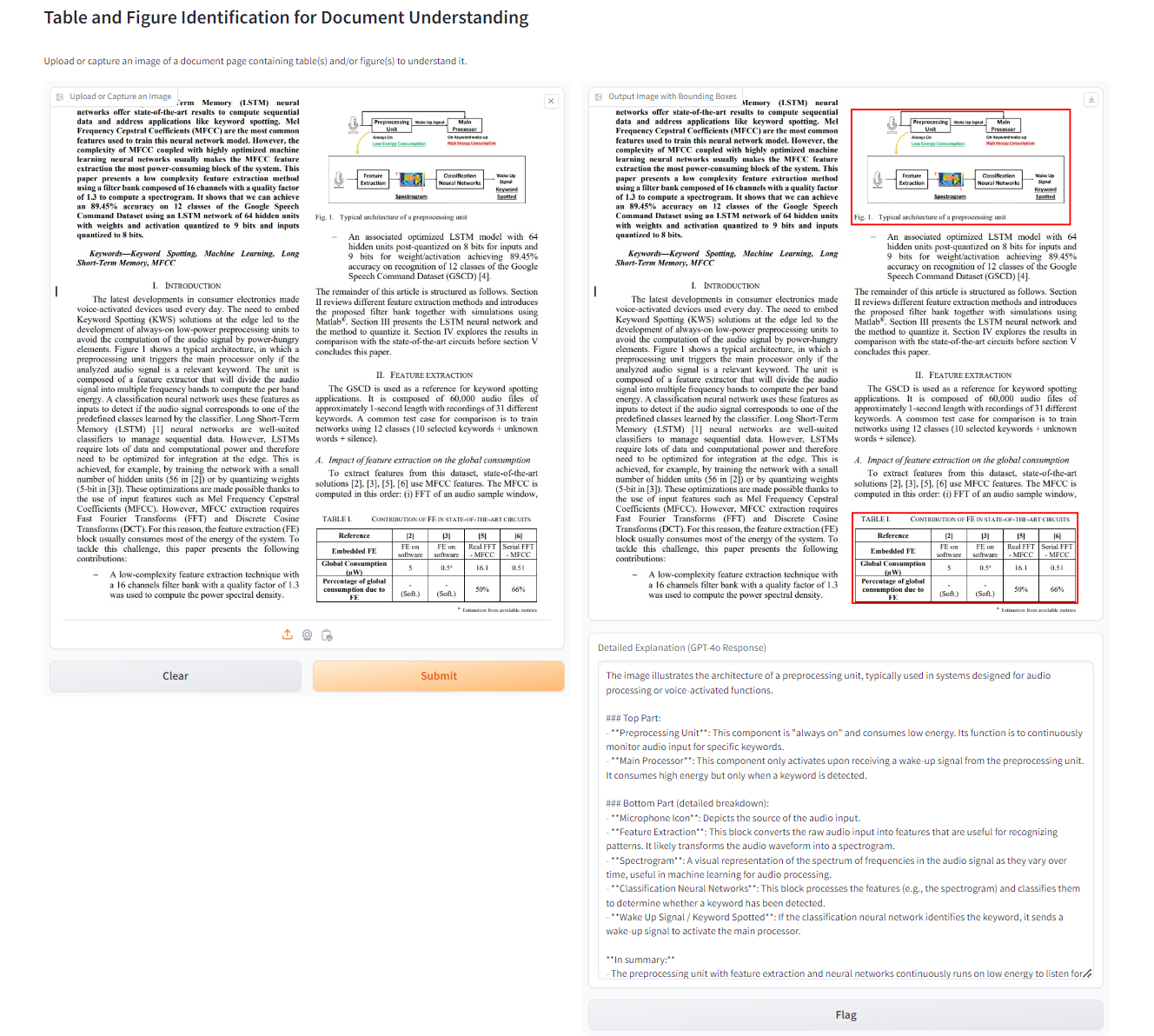

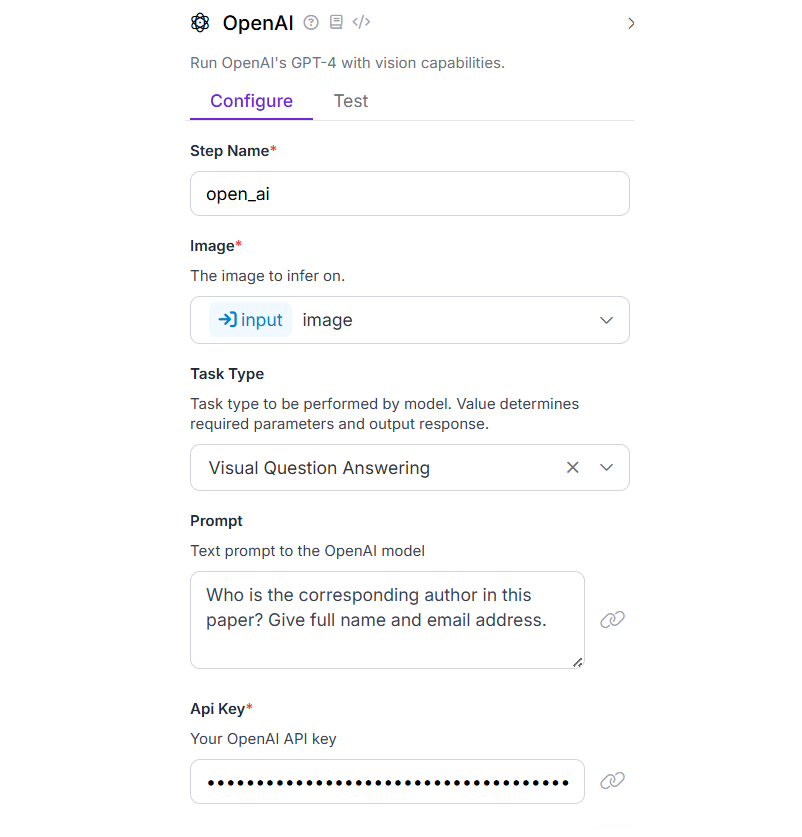
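
Because the model answers in free text, routing documents by type usually means mapping that answer onto a fixed set of categories. Here is a minimal sketch of one way to do so; the category list and the `normalize_document_type` helper are assumptions for illustration.

```python
CATEGORIES = ("invoice", "contract", "report", "patent")


def normalize_document_type(model_answer, categories=CATEGORIES):
    """Map a free-text answer to a known category, or 'unknown' if none match."""
    answer = model_answer.strip().lower()
    for category in categories:
        # Substring match tolerates answers such as "This is an Invoice."
        if category in answer:
            return category
    return "unknown"


print(normalize_document_type("Patent"))               # patent
print(normalize_document_type("This is an Invoice."))  # invoice
print(normalize_document_type("Boarding pass"))        # unknown
```

Documents that come back as `unknown` are good candidates for human review or for extending the category list.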

Document AI: Visual Question Answering

Visual Question Answering (VQA) in the context of Document AI is a task that lets machines answer questions about the content of a document by interpreting both its text and visual elements. Unlike traditional text-based question answering, which relies solely on textual data, VQA combines NLP and computer vision to understand and extract information from documents that may include text, images, tables, layouts, and other visual features. In Document AI, this capability is particularly useful for processing complex, unstructured, or semi-structured documents like invoices, forms, contracts, or infographics.

Let’s build an example Workflow for VQA. We will reuse the Workflow from the summarization example above, which uses the OpenAI block. Configure the OpenAI block by choosing “Visual Question Answering” as the Task Type and specify the following text prompt.

Who is the corresponding author in this paper? Give name and email address.

We will use the following research paper document image for this example.

In the document image, only the corresponding author's email address is given; the name is not stated alongside it. The text prompt, however, asks for the name as well as the email. The OpenAI model not only finds the email of the corresponding author but also correctly associates the author's name with it. The following is the output that you will get.

    [
      {
        "open_ai": {
          "output": "The corresponding author is M. Kalpana Chowdary, and the email address is dr.kalpana@mlrinstitutions.ac.in.",
          "classes": null
        }
      }
    ]
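
The answer comes back as a sentence, so a downstream system may still need to pull individual values out of it. As a small sketch, the email address can be extracted with a regular expression. The pattern below is a simplified assumption that covers common addresses, not a full validator.

```python
import re

# Simplified pattern: local part, "@", then two or more dot-separated labels.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")


def extract_emails(answer):
    """Return every email-like substring found in the answer text."""
    return EMAIL_PATTERN.findall(answer)


answer = (
    "The corresponding author is M. Kalpana Chowdary, and the email "
    "address is dr.kalpana@mlrinstitutions.ac.in."
)
emails = extract_emails(answer)
print(emails)
```

The pattern requires at least one character after each dot in the domain, which is why the sentence's closing period is not captured as part of the address.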

These use cases highlight the versatility of Document AI in handling diverse document-related tasks without being tied to a specific industry. By transforming raw, unstructured documents into actionable information, Document AI streamlines processes, enhances data accessibility, and reduces manual workload.
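
The examples above run in the Workflows visual editor, but a deployed Workflow can also be called from Python with Roboflow's `inference_sdk` package. The sketch below is a starting point rather than a definitive integration: the workspace name, Workflow ID, image path, the `ROBOFLOW_API_KEY` environment variable, and the `"image"` input name are all placeholders or assumptions that you would replace with the values from your own Workflow.

```python
import os


def build_workflow_request(workspace_name, workflow_id, image_path):
    """Collect the keyword arguments passed to `run_workflow`."""
    return {
        "workspace_name": workspace_name,
        "workflow_id": workflow_id,
        # Assumes the Workflow's image input is named "image".
        "images": {"image": image_path},
    }


def run_document_workflow(workspace_name, workflow_id, image_path):
    """Run a deployed Workflow on one image and return the raw result."""
    # Imported here so the helper above works without the package installed.
    from inference_sdk import InferenceHTTPClient

    client = InferenceHTTPClient(
        api_url="https://detect.roboflow.com",
        api_key=os.environ["ROBOFLOW_API_KEY"],  # assumed variable name
    )
    request = build_workflow_request(workspace_name, workflow_id, image_path)
    return client.run_workflow(**request)


# Placeholder identifiers; nothing is sent until run_document_workflow is called.
request = build_workflow_request("my-workspace", "document-summary", "patent.png")
print(request)
```

Calling `run_document_workflow("my-workspace", "document-summary", "patent.png")` with real identifiers and a valid API key should return a result in the list format shown in the examples above.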

Document Processing AI

Document AI transforms unstructured documents into valuable, structured data by leveraging OCR, NLP, and computer vision. This technology streamlines workflows across industries, reducing manual work and minimizing errors.

Roboflow simplifies building Document AI projects with its intuitive, low-code platform, which integrates AI blocks for a range of Document AI tasks. This streamlines the conversion of unstructured data into actionable insights.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Mar 28, 2025). What Is Document Processing AI? The Ultimate Guide. Roboflow Blog: https://blog.roboflow.com/what-is-document-ai/